Everybody Talks: Gossip, Serf, memberlist, Raft, and SWIM in HashiCorp Consul

Learn about Consul's internals and foundational concepts in more human-friendly terms with this illustration-filled talk.

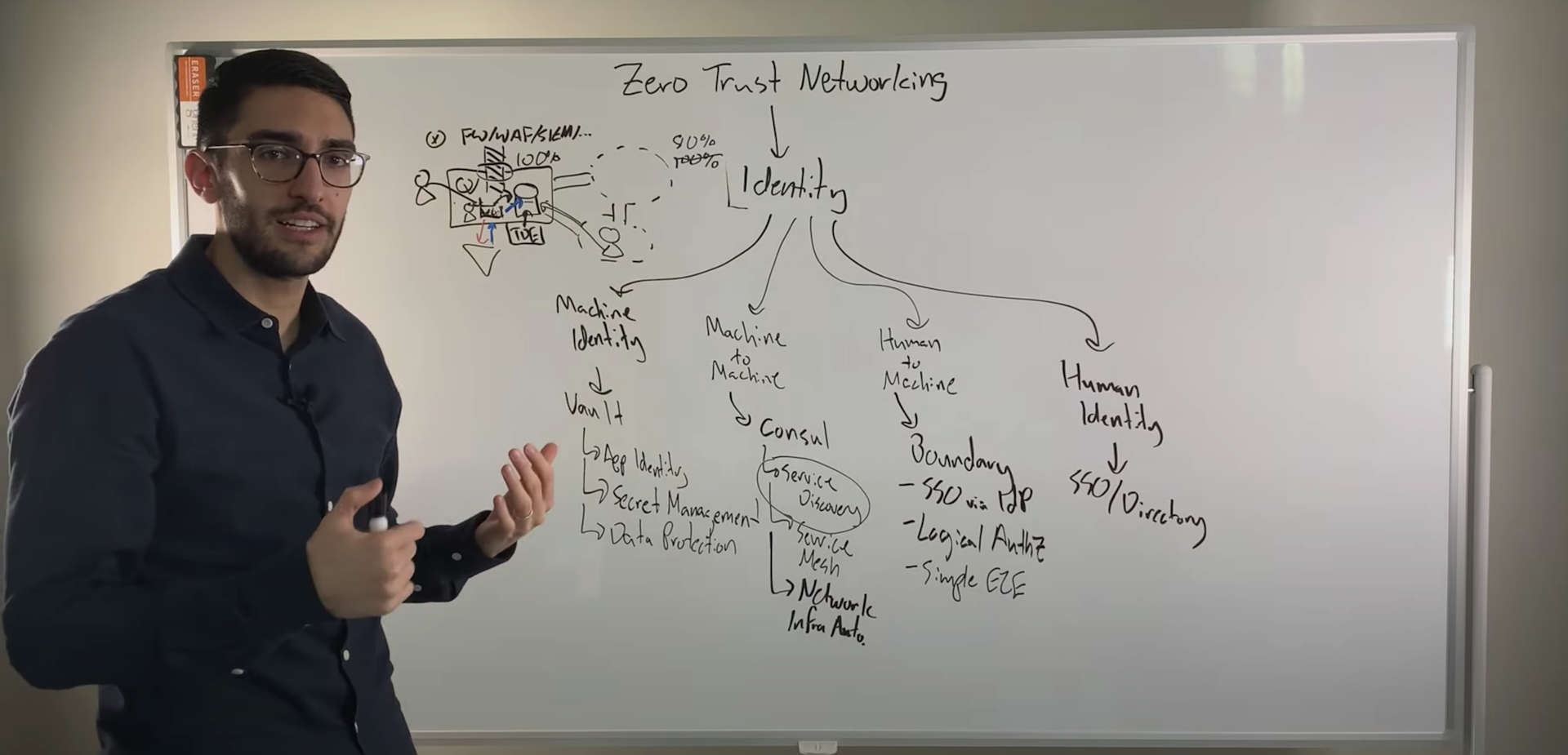

If you've looked a little deeper at the service discovery and service mesh solution HashiCorp Consul, you've probably heard about the Gossip protocol, Serf, memberlist, Raft, and SWIM. Where do all of these things fit into Consul and the modern challenges of distributed systems communication and reliability patterns?

Sarah Christoff breaks it down in this fun, easy-to-understand talk with the help of some great illustrations.

Speakers

Sarah Christoff, Apprentice Engineer, HashiCorp

Transcript

I'm Sarah Christoff. I am an apprentice engineer on Consul and my mom thinks I’m cool. Usually, in my talks, I make an agenda or a lesson plan. We start with building the base, the sides, and then the summary is always a roof, like a house. This talk is a little bit more complex. We're going to go over Consul's history, why it exists, the problems that we came across when building it, and what it has become today.

We're going to start with the problem: why did we build Consul? Then we'll go over things like SWIM, memberlist, and Serf. You've probably never heard of them. Neither had I. Eventually, we'll end up knowing more about Consul.

What problem is Consul trying to fix?

I used to work as a systems engineer at a consulting firm. I was used to hard-coding IP addresses everywhere, even though we were in the cloud. I figured this was a problem. And even though I wasn't technically adept enough to solve it, I whined about it.

Well, guess what? Armon probably had that same problem at least once. And he did something about it. If you don't know who Armon and Mitchell are, then they were the people here yesterday. They're our co-founders and CTOs.

We had to figure out a model to tackle the hard-coded IP problem. We tried to find the most fault-tolerant, robust, scalable solution. When I say scalable, at the time that meant 5,000 nodes.

We needed something decentralized because it's scalable and it's very easy to reason about—and it's very fault-tolerant. Fun fact: In the 1990s, when things like ARPANET were floating around—might be a little too late for ARPANET—but the Internet was made to be decentralized because the Internet was made for the military to communicate.

Defining decentralization

Centralized is when all of these nodes go to one point for their information. Decentralized is when we have key nodes—multiples of them—and we can go to different points for this information.

I've worked at a WordPress hosting site before. I like to think of them as MySQL databases. You could have one MySQL database, and everyone hitting that MySQL database, or you could have many of them and us all hitting those many different points.

Admittedly, I played Destiny 2 very poorly. This is what came to mind when I heard peer-to-peer. Peer-to-peer is used a lot in online gaming. It's more cost-effective for companies to use the peer-to-peer model because they avoid the cost of dedicated servers by offloading it onto the users.

In my Destiny model, when I join an online match, someone in that match becomes the host. The rest of us are clients. Every shot, kill, and movement is registered through that host. It's checked, and then that's where it's stored.

If that host has a poor Internet connection—if they drop out—that's going to affect your gameplay. Hopefully, it doesn't. But you're all huddled around this one person praying that they have a good Internet connection. That also leaves us with vulnerabilities.

If I'm the host, then I can say I killed four bazillion people, and I'm the best SB player. That can upset some people. In the case of Counter-Strike, which uses dedicated servers, every shot, kill, and movement goes through those servers to check the validity of what you're doing.

From here on out, it's best to understand peer-to-peer as implicitly decentralized. Now that we know what decentralized peer-to-peer communication is, what do we do with this? We still have this problem of hard-coding IP addresses everywhere.

Introducing Gossip Protocol

After evaluating a lot of protocols, we decided that Gossip was scalable, efficient, and—you’ll see this is a recurring theme—simple. It was time to start researching how we would implement such a solution. That's where Gossip protocols came into play.

To explain how Gossip works, I found this story that my significant other told me the other day. He said that in the military, if someone in the mess hall drops one of the glasses, that person has to say, "Broken glass, be careful." Everyone around that person who hears that phrase has to repeat it.

That goes on until everyone in the mess hall knows that there's broken glass, be careful. And he says if you're in an open field and you drop a glass, then everyone in that open field would know, "Hey, someone dropped that glass."

Gossip uses the same method of spreading information from one node to another—picking up traction as more people hear this information. And if you don't believe that this is a good model, you should go to high schools because rumors do spread.
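The mess hall story can be sketched as a small simulation. This is only an illustration, not how memberlist is implemented: the function name `gossip_rounds`, the `fanout` of 3, and the fixed random seed are all assumptions made for the example. Each round, every node that has heard the rumor repeats it to a few random peers, and we count the rounds until everyone knows.

```python
import random


def gossip_rounds(num_nodes, fanout=3, seed=0):
    """Count the rounds needed for one rumor to reach every node.

    Hypothetical model: every informed node tells `fanout` randomly
    chosen nodes per round (it may pick itself or an informed node).
    """
    rng = random.Random(seed)   # seeded so the run is repeatable
    informed = {0}              # node 0 is the one who dropped the glass
    rounds = 0
    while len(informed) < num_nodes:
        newly_informed = set()
        for _ in informed:
            newly_informed.update(rng.sample(range(num_nodes), fanout))
        informed |= newly_informed
        rounds += 1
    return rounds


if __name__ == "__main__":
    for size in (10, 100, 1000):
        print(size, "nodes ->", gossip_rounds(size), "rounds")
```

The number of rounds grows slowly compared with the number of nodes, which is the property that makes the epidemic style attractive for large clusters.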

There are two different primary types of Gossip protocol, and we'll go over a couple key distinctions and different types you can choose. One is structured versus unstructured. In structured protocols, you're looking at rings, binary trees.

The trade-off here is they're more complex. When they try to fail over or recover from a failure, it can be more complex to reason about, or recovery might not happen at all. Unstructured protocols, things like the epidemic broadcasts we talked about, are simpler. They're more failure-resistant, but they're also slower.

Understanding Gossip and visibility

Why don't we have the Gossip protocol only talk to who's close to it? Know those people, and there you go? Eventually, if you take that model and you scale it, those people would only talk to the people close to it—we'd have overlapping members.

The reason we didn't choose this is because limited visibility increases the complexity. And even though it could increase efficiency, it opens doors to failures not being recoverable as fast. There are lots of trade-offs, as you can see. However, full visibility—it's simple. We know everyone knows everyone. With that, we have lower complexity.

Now we have all these ingredients. We know we want Gossip because it's peer-to-peer, we want something unstructured because we want to have something randomized and something based on epidemics and full visibility. Because our goal here is to keep it simple.

SWIM and its features

That brings us to SWIM, our flavor of Gossip. We're going to talk about some of SWIM's features and break down each of the words that make up SWIM. Every website about SWIM does this.

Scalable

If you guys work in the cloud—or I assume you do because you're at HashiConf—you should know what scalable means. But originally the SWIM paper talked about I think 50 or 60 nodes. It was written in 2001 so when we were looking at it, like I told you, we were hoping to scale up to 5,000—that was our cap. That's a huge cluster. The scalability extends to how all of the features are built.

Weakly consistent

This is a fun one for me because—as I wanted to explain about consistency models—I found there are 50-plus consistency models. There's a lot of inconsistency about consistency models—which is fun to say.

SWIM is weakly consistent. That means it can take some time to get the information you want once you send it up into that node. Now eventually it'll resolve. But sometimes if I send my information up to node A and I say, "Hey, KV equals one," and node A explodes, then guess what? That's gone.

There is no guarantee once you send up that request to node A that it will be stored. And in things like databases, that's a pretty big deal. This will eventually converge in about two seconds, but it's not immediate.

The SWIM paper touches on why they did this. The TL;DR is, the stronger the consistency we add, the more complex we get. As I said—lots of trade-offs.

Infection-style process group

This is our snazzy way of saying that this is the Gossip or epidemic style to broadcast information.

Membership

It sets out to answer the question, who are my members? Some of the cool properties of SWIM are around its scalability and failure detection. In SWIM, the load per member stays about the same no matter how large the cluster gets.

No matter how big I get, I'm only going to be sending out my pings and acks at the same intervals. We'll cover more about that later. Failure detection latency is also independent of the size of the cluster. We'll cover more about failure detection in a second. But our rate of announcing that we have a failure has always stayed the same. We use Gossip to send out membership updates (alive, suspect, and dead) throughout the cluster.

One of the key requirements was to understand node failure to remove faulty nodes from the group. You'll see here that I put not heartbeat driven. That's a big deal because if I am a centralized node and I send out, "Hey, are you alive?" to all the nodes in my cluster at a regular rate, this can increase complexity and also increase resource utilization. We needed a different approach from other protocols.

Failure detection

This might teach you a lot more about how the protocol works. In this scenario, we're sending out a direct probe to node B. We're saying, "Hey, are you alive? How are you doing?" We expect to send this probe out and get an ack back that says, "Hey, I'm alive, and I'm doing well."

Typically, we have lots of friends in the cluster to make sure that this one node is alive, but if node B doesn't respond, what do we do? We can ask another node to probe node B on our behalf. This is called an indirect probe. We'll message node C and say, "Hey, my friend, I can't hear from B, can you talk to him for me? Maybe node B is mad at me?"

If node B responds to C, then C will say, "Hey, I heard from node B," and maybe node B will ask us if we're okay. But let's say no one can hear from node B. What do we do?

We start telling everyone, "Hey, I can't hear from node B. This is a big deal." Eventually, a timeout will be reached that says, "Node B's failed. We're not going to talk to node B anymore. They're not cool."
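The probe sequence described above (direct probe, then indirect probes through friends, then suspicion, then a timeout) can be sketched roughly like this. The names `probe_round`, `settle`, and the `can_reach` callback are hypothetical stand-ins for the network, not memberlist's API.

```python
def probe_round(target, helpers, can_reach):
    """Run one SWIM-style probe of `target` and return its new state.

    `can_reach(src, dst)` is an assumed stand-in for "a ping from src
    to dst was acked in time". "self" is the node doing the probing.
    """
    # Step 1: direct probe.
    if can_reach("self", target):
        return "alive"
    # Step 2: ask other nodes to probe the target on our behalf.
    for helper in helpers:
        if can_reach("self", helper) and can_reach(helper, target):
            return "alive"
    # Step 3: nobody heard back, so we start suspecting the target.
    return "suspect"


def settle(state, seconds_suspected, suspicion_timeout):
    """Declare a suspect dead once it has been suspected long enough."""
    if state == "suspect" and seconds_suspected >= suspicion_timeout:
        return "dead"
    return state


if __name__ == "__main__":
    links = {("self", "C"), ("C", "B")}   # we cannot reach B, but C can
    print(probe_round("B", ["C"], lambda s, d: (s, d) in links))
```

In the usage example, the direct probe fails but node C can still reach node B, so B stays alive instead of being suspected.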

One of the biggest properties of SWIM that we'll iterate on is the incarnation number. The incarnation number is stored locally on the node—and it's to help us refute false suspicions. If I'm node B and I know I'm alive and someone tells me I'm dead, I'm going to say I am not. If that does occur, then I will increase my incarnation number to say, "Hey, I'm alive. Someone said I was dead."
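A minimal sketch of that refutation, assuming a hypothetical `Member` class and message tuples invented for this example: when a node hears a suspicion about itself, it bumps its incarnation number past the one in the rumor and answers with an alive message carrying the new number.

```python
class Member:
    """Toy node that refutes suspicions about itself (illustrative only)."""

    def __init__(self, name):
        self.name = name
        self.incarnation = 0   # stored locally, only this node bumps it

    def on_suspect(self, about, incarnation):
        """Handle a suspect message; return a refutation or None."""
        if about != self.name:
            return None                      # not about me, nothing to refute
        if incarnation < self.incarnation:
            return None                      # stale rumor, already refuted
        self.incarnation = incarnation + 1   # outrank the suspicion
        return ("alive", self.name, self.incarnation)


if __name__ == "__main__":
    node_b = Member("B")
    print(node_b.on_suspect("B", 0))   # ('alive', 'B', 1)
```

Because the alive message carries a higher incarnation number than the suspicion, other nodes can tell it is newer information and drop the suspicion.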

Now we know more about SWIM, and SWIM is like our recipe. We take sugar, we take some spice, we take some robust failure detection, and we make memberlist. Up there at the very top is a quote from Armon, saying, "memberlist was supposed to be our purest implementation of SWIM."

Memberlist gives us the three states again from SWIM: alive, suspect, and dead. We have indirect and direct probes for liveness. Member updates, like "Hey, I think this guy is dead" or "Hey, I have joined the cluster," piggyback off those probes.

Memberlist additions

We added a bunch of additions to SWIM in memberlist. One of them was using TCP. The original SWIM paper only used UDP, but for memberlist, we added TCP to send direct probes in case there might be an issue with traffic or network configuration.

Say you don't have your UDP port open, then guess what? This isn't going to work. We use TCP in case that happens. We also added an anti-entropy mechanism, which helps us sync state between nodes.

Randomly, I'll choose a node in the cluster and say, "Hey, let's meet up, let's talk about all the things that have been happening lately." Those state syncs happen to help us converge on the information.
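One way to picture that "let's meet up and compare notes" sync is as a merge of two membership views. The sketch below is an assumption-laden simplification: the `merge` function, the `RANK` table, and the rule "higher incarnation wins, ties go to the worse state" follow the ordering described in the SWIM paper in spirit, and memberlist's real logic has more cases.

```python
# Assumed tie-break order when two views share an incarnation number.
RANK = {"alive": 0, "suspect": 1, "dead": 2}


def merge(local, remote):
    """Merge a remote membership view into a local one.

    Each view maps node name -> (state, incarnation). The entry with the
    higher incarnation wins; on a tie, the more pessimistic state wins.
    """
    merged = dict(local)
    for name, (state, incarnation) in remote.items():
        if name not in merged:
            merged[name] = (state, incarnation)   # a member we never heard of
            continue
        local_state, local_incarnation = merged[name]
        newer = incarnation > local_incarnation
        worse = incarnation == local_incarnation and RANK[state] > RANK[local_state]
        if newer or worse:
            merged[name] = (state, incarnation)
    return merged


if __name__ == "__main__":
    mine = {"A": ("alive", 1), "B": ("suspect", 3)}
    theirs = {"B": ("alive", 4), "C": ("alive", 0)}
    print(merge(mine, theirs))
```

After both sides apply the merge, they hold the same view, which is what helps the cluster converge even when some gossip messages were lost.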

We also added a separate messaging layer for member updates. Instead of only sending member updates via direct and indirect probes, we separated that layer out. We realized it's super important to share that anytime anyone joins or leaves or dies.

Lifeguard and situational awareness

On top of this, eventually, our research department and friends did research on how to improve memberlist, and we came up with Lifeguard. We're going to touch on three key points of Lifeguard:

Self-awareness

An awareness score changes due to the outcome of indirect and direct probes. If I asked for a liveness check and I don't receive a response in time, maybe I was too slow to process it? If that's the case, then I will get a timeout from that indirect or direct probe, and I will increase my awareness score. If I suddenly receive a large number of liveness pings, maybe the cluster thinks I'm offline, and I probably shouldn't suspect other members if I'm appearing offline.

What self-awareness does is if we're not feeling confident in ourselves—if we're thinking we're having networking issues or resource constraints from another application—don't worry; we'll take that into consideration. Our awareness score will increase, and when the awareness score increases—sending out suspicions will decrease.
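As a rough sketch of the idea, assuming a hypothetical `Awareness` class, a score capped just below 8, and a rule that multiplies timeouts by the score plus one (all illustrative choices for this example): the less healthy a node believes itself to be, the longer it waits before it blames someone else.

```python
class Awareness:
    """Toy self-awareness score: 0 means healthy, higher means degraded."""

    def __init__(self, max_score=8):
        self.max_score = max_score   # assumed cap, chosen for the example
        self.score = 0

    def apply_delta(self, delta):
        """Raise the score on missed acks, lower it on successful probes."""
        self.score = max(0, min(self.max_score - 1, self.score + delta))

    def scale_timeout(self, base_seconds):
        """Stretch a probe timeout in proportion to how unsure we are."""
        return base_seconds * (self.score + 1)


if __name__ == "__main__":
    awareness = Awareness()
    awareness.apply_delta(1)             # we timed out waiting for an ack
    print(awareness.scale_timeout(0.5))  # we now wait longer before suspecting
```

A node with a score of zero uses the base timeout unchanged, so a healthy cluster behaves exactly as before.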

Dogpile

For each node, there's a suspicion timeout set before we decide, okay, you're dead, you're out of the cluster. For new suspicions, ones we haven't heard before, ever, the timeout will be the longest. Since this is the first time that we've heard the suspicion, we'll assume it may not be true.

But once we keep hearing the suspicion, once people keep telling us, "Hey, node B is dead," then we're like, "Maybe node B is actually dead." And that suspicion timeout will decrease until eventually node B is declared dead. Dogpile assists with message processing to remove failed nodes. Even if there's a time of high resource utilization, we can still quickly remove any failed node.
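The shrinking timeout can be sketched as a function of how many independent confirmations we have heard. The logarithmic decay below follows the shape described in the Lifeguard work as far as I understand it; the function name, parameter names, and example numbers are assumptions, not memberlist's code.

```python
import math


def suspicion_timeout(confirmations, expected, min_seconds, max_seconds):
    """Return how long to keep suspecting a node before declaring it dead.

    Starts at `max_seconds` for a brand-new suspicion and decays toward
    `min_seconds` as `confirmations` approaches `expected`.
    """
    if expected < 1:
        return min_seconds
    fraction = math.log(confirmations + 1) / math.log(expected + 1)
    return max(min_seconds, max_seconds - fraction * (max_seconds - min_seconds))


if __name__ == "__main__":
    for heard in range(4):
        print(heard, "confirmations ->", suspicion_timeout(heard, 3, 2, 30), "s")
```

The first confirmation shortens the wait the most, so a node that many peers agree on is removed quickly, while a lone suspicion gets the benefit of the doubt.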

Buddy System

This prioritizes sending out suspicions. Like we covered earlier, member updates usually piggyback off direct and indirect pings. And because of that, it might take some time to send out a suspicion.

Now, anytime we have a suspicion about a node, that gets prioritized to the top of the queue. We're sending it out, and we're going to let that guy know, "Hey, we think you're dead." And that way he or she can refute it and let us know that they're alive. If you want to know more about Lifeguard, please go watch Jon Currey's talk. It is very cool.

Keyring

Last thing we'll cover. Imagine you have a keyring, and you put a bunch of keys on it—and you slam it into a door until it gets in and it gets unlocked magically. That's how keyring works. That's my definition. That's your definition.

Keyring is used to encrypt communications between nodes. I like to think of it as a plaintext file that gets read, and you encrypt all your information that way. In memberlist, you can have many keys on the keyring, but only one key is used to encrypt a message, while any of the stored keys can be tried to decrypt a message.

Introducing Serf and new functionality

Eventually, we had memberlist. But what are we going to do with this? No one wants this. We needed to add some features to make it user-friendly. You'll see why I put that in quotes—and we added Serf, not SWIM—too many s’s.

In Serf, we add new functions, Vivaldi—which we'll cover—Lamport clocks and CLIs. This shows the difference between the two that were made—lots more too. Many new functions.

We added a bunch of cool things to Serf. One of them is graceful leave. Say I don't like node B but node B is alive. Guess what, I can tell node B to get out, and it will do it gracefully. Snapshotting—you can save a state of a node.

Network coordinates—this ties into Vivaldi, which is also very fun and we'll cover it soon.

Key manager—you can install and uninstall keys and much, much more—like custom event propagation.

You can add events that piggyback onto messages sent within the cluster. It can be like, "hey, trigger a deploy, run a script," whatever. The only constraint is there are certain message sizes that you must adhere to within memberlist and Serf.

It has to be pretty tiny, but you can do custom event propagation throughout the cluster to trigger deploys or run a script or whatever. You can do it off of member status or—say, I only want to run this on member A. So lots of customization.

Vivaldi

Vivaldi calculates the round-trip time between two nodes in the cluster. We use all this information, all these round-trip times, to build a matrix of where we think the nodes are.

Vivaldi in Serf uses network coordinates to map out where it thinks all the nodes are. There's a lot of cool stuff on Vivaldi, and if you want to know more about it, Armon talked about it. This is how I learned about it—you should check it out.
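To give a feel for how round-trip times turn into coordinates, here is a bare-bones sketch of a Vivaldi-style update in two dimensions. It is a simplification under stated assumptions: the function names, the fixed step size `delta`, and the 2D space are choices made for this example, and Serf's implementation has more to it.

```python
import math


def distance(a, b):
    """Estimated round-trip time between two coordinates."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def vivaldi_step(own, other, measured_rtt, delta=0.25):
    """Nudge `own` so its distance to `other` better matches `measured_rtt`."""
    estimated = distance(own, other)
    error = measured_rtt - estimated          # positive means we are too close
    if estimated == 0:
        direction = (1.0, 0.0)                # arbitrary push when co-located
    else:
        direction = ((own[0] - other[0]) / estimated,
                     (own[1] - other[1]) / estimated)
    return (own[0] + delta * error * direction[0],
            own[1] + delta * error * direction[1])


if __name__ == "__main__":
    me, peer = (0.0, 0.0), (0.0, 0.0)
    for _ in range(50):
        me = vivaldi_step(me, peer, measured_rtt=10.0)
    print(round(distance(me, peer), 3))
```

Each measurement acts like a spring pulling or pushing the two points, and after enough measurements the distance between coordinates approximates the observed round-trip time.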

Lamport Clocks & CLI

This is how I feel every time I read a paper by Leslie Lamport. Leslie Lamport came up with Lamport clocks, which aren't that complicated. In Serf, they replace incarnation numbers to keep all of our messages ordered. Serf uses Lamport clocks for every message and every event to keep the order of things, whereas in memberlist, like we discussed, incarnation numbers are only used for suspicions. A Lamport clock is a logical clock that's event-based, and it's local to your node. And we added a CLI.
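A Lamport clock fits in a few lines. This sketch uses the textbook rules (tick on every local event, jump ahead of any timestamp you see on an incoming message); the class and method names are made up for the example, and Serf's own implementation may differ in detail.

```python
class LamportClock:
    """Textbook logical clock: a counter that is local to one node."""

    def __init__(self):
        self.time = 0

    def tick(self):
        """Advance the clock for a local event or an outgoing message."""
        self.time += 1
        return self.time

    def witness(self, seen):
        """Move ahead of a timestamp carried by an incoming message."""
        self.time = max(self.time, seen) + 1
        return self.time


if __name__ == "__main__":
    node_a, node_b = LamportClock(), LamportClock()
    stamp = node_a.tick()          # node A sends an event stamped 1
    print(node_b.witness(stamp))   # node B is now at 2, after the send
```

No wall-clock time is involved, so the ordering holds even when machine clocks disagree.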

The problem with Serf is people struggled to reason about the eventual consistency, and people wanted a central UI and API. So, how do we accomplish that?

Raft

We added Raft into the mixture. Its strong consistency and central servers allowed us to give people the things they wanted, like a central UI and API. Let's deep dive, and by deep dive I mean like six slides, into Raft.

The first question is—what is Raft, and what does Raft seek to solve? Raft solves this consensus problem. Say you have five children, and you are like, "All right kids, what's up? What's for dinner?" Well, I don't know. I can't make a decision. I can't see five people trying to make a decision, and all adhere to it. If you ask all your children what they want for dinner, then you're not going to get a decision. They will never reach a consensus.

If you use Raft on your children—I don't know how you'd install that—they would come to a consensus. In the beginning, Raft has to decide a leader. We have to have someone who's our central, our big dog on the school playground here. And Raft will do that.

Each node in our Raft cluster is given a randomized timeout. The first node to reach its randomized timeout will then say, "Hey, can you guys vote for me?" And when that happens, it'll generally get elected because it was the first one to reach the timeout. The leader will then send out regular heartbeats to the cluster and say, "Hey, let's talk about this. Are you still alive?" And each time the other nodes get that heartbeat, their election timeouts will reset.

At any given time, a server can be a follower, a candidate, or a leader. And to keep all of our different elections on track, we divide this into terms. Each term starts with one election, and each term has at most one leader. The leader's log is always seen as the source of truth, and Raft is built around keeping the log up to date.

To break it down, say your timeout happens and you haven't received a heartbeat from the leader. You're going to request others to vote for you. Either you're going to become the leader (good for you), someone else is going to become the leader, or the vote's going to split. If it splits, you're going to get a new term, and this is all going to happen over again until a new leader is elected.
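The election steps can be sketched with a toy model. The `Node` class and `start_election` function are hypothetical names, the network is replaced by direct method calls, and the log check is left out here, so this is a simplified illustration of the voting rules rather than a Raft implementation. The 150 to 300 ms timeout range is the one suggested in the Raft paper.

```python
import random


def election_timeout_ms(rng):
    """Randomized timeout; the first node to hit it becomes a candidate."""
    return rng.uniform(150, 300)


class Node:
    def __init__(self, name):
        self.name = name
        self.term = 0
        self.voted_for = None

    def handle_request_vote(self, candidate, term):
        """Grant at most one vote per term, and never for an old term."""
        if term < self.term:
            return False
        if term > self.term:
            self.term = term          # newer term: forget the old vote
            self.voted_for = None
        if self.voted_for in (None, candidate):
            self.voted_for = candidate
            return True
        return False


def start_election(candidate, peers):
    """Bump the term, vote for yourself, and ask everyone else."""
    candidate.term += 1
    candidate.voted_for = candidate.name
    votes = 1 + sum(
        peer.handle_request_vote(candidate.name, candidate.term) for peer in peers
    )
    cluster_size = len(peers) + 1
    return votes > cluster_size // 2   # strict majority wins


if __name__ == "__main__":
    rng = random.Random(0)
    nodes = [Node(n) for n in "abcde"]
    first = min(nodes, key=lambda _: election_timeout_ms(rng))
    others = [n for n in nodes if n is not first]
    print(first.name, "elected:", start_election(first, others))
```

Because each node grants only one vote per term, two candidates in the same term can split the vote, and the randomized timeouts make a repeat of that split unlikely in the next term.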

Raft’s leader election process

About that log. Say someone is trying to request that they get voted for, but their log is out of date or inconsistent with ours. If that's the case, then we're not going to fall for that. We don't want that game. We want a leader that comes to the same consensus and ideals as us. Raft is designed around keeping that log up to date, and we will not elect anyone with inconsistent logs.
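The "we won't vote for an out-of-date log" rule is short enough to write down. In the Raft paper, a voter compares the term of the last log entry first and the log length second; the function and parameter names below are invented for this example.

```python
def log_is_up_to_date(candidate_last_term, candidate_last_index,
                      my_last_term, my_last_index):
    """Return True if the candidate's log is at least as current as mine."""
    if candidate_last_term != my_last_term:
        # The log whose last entry has the later term is more up to date.
        return candidate_last_term > my_last_term
    # Same last term: the longer log is more up to date.
    return candidate_last_index >= my_last_index


if __name__ == "__main__":
    # Candidate's last entry is from term 2, ours is from term 3: no vote.
    print(log_is_up_to_date(2, 10, 3, 4))
```

A voter that applies this check only grants its vote when the function returns True, which keeps a candidate that is missing committed entries from winning.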

Here you can see up to six entries are committed. However, you can see some servers don't have all those entries, right? Like those two. Is that a big deal? Yes and no. As long as a majority of the cluster has stuff committed, then it'll persist through crashes. That means it is committed. I should have used committed before, but we're in too deep. Any committed entry is safe to withstand failures because it is on the majority of the machines.
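The "on a majority of the machines" rule can be computed directly. In this sketch, `commit_index` is a hypothetical helper that takes the highest log index each server is known to hold (the leader's included) and returns the highest index present on a majority. The example numbers are made up to mirror the slide description, and Raft's extra condition that the entry must come from the leader's current term is left out for brevity.

```python
def commit_index(match_indexes):
    """Highest log index that is stored on a majority of servers."""
    ordered = sorted(match_indexes, reverse=True)
    majority = len(ordered) // 2 + 1
    # The majority-th largest value is held by at least `majority` servers.
    return ordered[majority - 1]


if __name__ == "__main__":
    # Five servers; two of them are lagging behind entry 6.
    print(commit_index([8, 6, 6, 4, 3]))   # 6
```

With five servers, three of them hold entry 6, so entries up to 6 survive the loss of any two machines.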

All right, problem solved. Serf is made—we've added Raft, right? We don't need anything else. No way. Well, here's how I see it. Raft is a big deal. If you add it to something, you're changing the consistency model of Serf—people might be upset about that.

Something I've found when researching this talk and understanding more about HashiCorp is we love to keep things separated so that everyone's happy. You still have added complexity when you're trying to trace a bug, but I think for the user side, it's awesome.

Consul basics explained

In Consul, we're all about the service—we're all about that service life. In Serf, we're more focused on our members and our nodes.

Let's talk about Consul. Strongly consistent via Raft. We use multiple Gossip pools to span datacenters, which is cool. We have a key-value store, and all that service life as well as the centralized API and UI people wanted.

There are still people who work at HashiCorp (not calling anyone out) who don't know the difference between an agent, a client, and a server. And I didn't know it till two weeks ago, so I don't blame you.

Vocabulary

An agent is a process that runs on every machine in a Consul cluster, and it can be either a server or a client. I like to think about it as a generic term. A server is a standalone instance that is involved in the Raft quorum and maintains state. It can communicate across datacenters. It's your beefy machines.

A client monitors your application—runs alongside it, and it's not a part of Raft; it doesn't hold state. If you've ever used Kubernetes—I like to think about it as a kubelet. I feel like I'm going to get a tomato thrown at me for saying that.

Service configuration

We have our key-value store, and this is implemented by a simple in-memory database that's based on Radix trees. You can go check it out there. It's stored on Consul servers, but it can be accessed by both clients and servers. And it's strongly consistent because of Raft.

Network coordinates

Remember we talked about Vivaldi. When we added Vivaldi into Serf, we did not do much. It was very ‘choose your own adventure—have fun.’ That's not the true story.

We added Vivaldi into Serf because it belonged in that level. Adding it just into Consul would make things messy—but that's my opinion.

Prepared queries

You use prepared queries in Consul. They're rules or guidelines for people to follow. You can use prepared queries to say, "Hey, if my service fails, explodes, whatever, then use network coordinates to find the nearest two available instances and fail over to those." This makes it easy for your customers or clients. I always think of it as a website, and if you're rerouting traffic, you always want to do it to the area closest to that traffic.
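The "nearest two instances" idea can be illustrated with plain coordinates. This is not Consul's prepared query API: `nearest_healthy`, the instance dictionaries, and their field names are all hypothetical, and the coordinates are assumed to be the kind of network coordinates Vivaldi produces.

```python
import math


def nearest_healthy(origin, instances, limit=2):
    """Pick the `limit` closest healthy instances by coordinate distance."""
    healthy = [inst for inst in instances if inst["healthy"]]
    healthy.sort(key=lambda inst: math.dist(origin, inst["coord"]))
    return [inst["name"] for inst in healthy[:limit]]


if __name__ == "__main__":
    instances = [
        {"name": "web-east", "coord": (1.0, 1.0), "healthy": False},
        {"name": "web-west", "coord": (9.0, 0.0), "healthy": True},
        {"name": "web-central", "coord": (3.0, 4.0), "healthy": True},
        {"name": "web-eu", "coord": (30.0, 40.0), "healthy": True},
    ]
    print(nearest_healthy((0.0, 0.0), instances))
```

The closest instance is skipped because it is unhealthy, and traffic fails over to the next two nearest ones.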

Service discovery and service monitoring

Service discovery and service monitoring information is still stored in the same database as the KVs, but it has a different table. We can use this to give Consul services to monitor. Health checks are very focused on service. You can use many different things to build out your health checks. The picture is a build your own—it's a script I think.

No more hard-coded IPs

In the beginning, I talked about the IP address problem Armon and I had (it was a bonding moment), and now we're going to wrap it up. Up top, you'll see a hard-coded IP address. That was my life before Hashi. At the bottom, with Consul, you can see that we can call out to Consul, and Consul will dynamically resolve our service for us.
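The slide itself isn't reproduced in this transcript, so here is a rough sketch of what "calling out to Consul" can look like using the agent's HTTP health endpoint. It assumes a Consul agent listening on the default local address `127.0.0.1:8500` and a service registered under the hypothetical name `web`; the helper names are invented for the example.

```python
import json
import urllib.request

# Assumption: a local Consul agent on its default HTTP port.
CONSUL_HTTP_ADDR = "http://127.0.0.1:8500"


def healthy_endpoints(entries):
    """Turn a /v1/health/service response into (address, port) pairs."""
    endpoints = []
    for entry in entries:
        # A service may not set its own address; fall back to the node's.
        address = entry["Service"].get("Address") or entry["Node"]["Address"]
        endpoints.append((address, entry["Service"]["Port"]))
    return endpoints


def resolve(service):
    """Ask Consul for instances of `service` that pass their health checks."""
    url = f"{CONSUL_HTTP_ADDR}/v1/health/service/{service}?passing"
    with urllib.request.urlopen(url, timeout=2) as response:
        return healthy_endpoints(json.load(response))


if __name__ == "__main__":
    # Before: address = "10.0.0.12" hard-coded in the application.
    # After: look the address up at run time (needs a running agent).
    print(resolve("web"))
```

The application only needs to know the service name, so instances can move or be replaced without anyone editing an IP address.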

If you haven't heard about Consul today or yesterday, then that's cool. If you have any questions about service mesh or any of the scary buzzwords—please find Paul Banks and go bug him. He loves it.

Consul is all about your service—and it's built upon Serf, which is all about your nodes. Memberlist—that's your library that implements SWIM. A good quote I saw is Consul can't operate if central servers can't form a quorum, but Serf will continue to function almost under all circumstances.

If you want to follow me on Twitter, please DM me any questions—even if it says Nic Jackson, it is totally me. Please send me messages and go to learn.consul. Thank you very much.