Bridging Securely Across the Cloud Native Divide With Gloo, Consul, Nomad & Vault

This talk will illustrate an integration between Gloo, Consul, Nomad, and Vault to operate an API gateway.

Hybrid is king in today’s application stack, as development and deployment of Kubernetes-native applications must co-exist with applications in VMs or functions. The challenges in deploying and operating an environment with such diversity lie in ensuring security, consistency, and connectivity across these different environments. Gloo is an API Gateway and control plane built on Envoy Proxy.

In this talk, Idit Levine from Solo.io will demonstrate the integration of Gloo to Nomad, Consul, and Vault to operate an API Gateway and discover and route to services living within or outside of Kubernetes.

Speakers

Idit Levine, Founder & CEO, Solo.io


Transcript

My name is Idit Levine, and I'm the founder and the CEO of Solo.io, a company in Boston, Massachusetts. We're working very closely with the Hashi guys, on the team that helped them integrate Envoy into Consul Connect. We've been working on Envoy for a lot of years. We are contributing to Envoy, and we are working very closely with the Hashi team.

Let's talk a little bit about the problem that we're trying to solve. The way we are looking at this—and every customer that we have is trying to do this—is as a transformation journey. And they all, with no exception, started with one thing, which is they want to move from monolithic to microservices.

And if you think about it, this is what HashiCorp is all about. Every tool that HashiCorp has created is targeting exactly that problem, which is, "We were monolithic, and now with microservices, everything is distributed. How do we fix the next problem?"

From the monolith to 'everything on the network'

The first thing that a customer will realize is that the problem of migrating from monolithic to microservices is not only rewriting your applications. That's only the beginning, because when you create a distributed application, that means that everything is happening on the network.

Before that, you have a monolithic application and it has an API on top of it. And you had only one place that you needed to manage this API on this monolithic application. Now, this monolithic application is cut into way more pieces and you need to manage all those APIs.

And when managing all these APIs, you need to make sure that it's secure. You need to make sure that you can see what's going on. You need to make sure that it's routing, and that you can replicate it and create a replication of instances and the scaling that you need.

So customers understand that they will have way more problems than just migrating the code.

Ensuring inter-microservice communication

That's the first problem that we'll have. But then you will discover that it's more robust than that, because it's not only on the API level. Now, all the communication between 2 microservices is on APIs, which means that now you need to manage that.

How do you make sure that 2 microservices will be able to communicate with each other? How do we make sure that they're going to do it in a secure way? How do we make sure that when you're getting a request to this microservice, you understand what's going on?

Or why did it fail? Is that latency? Is that on the pipe? Or maybe it's on the microservice itself. The 3 that I just described, routing, security, and observability, are being solved today with service mesh. And Hashi has Consul Connect. I'm a big fan. It's great.

But that's not going to be the end problem, because what you will discover is that most likely you will need to manage more than one service mesh. Probably you will need to have multiple service meshes.

Connecting multiple environments

For instance, let's just take a simple example. Today HashiCorp announced together with Microsoft that Microsoft Azure is going to give Consul Connect as a service. This is great.

But if you want to do the same thing with Amazon, you can only do something like App Mesh. And if you want to do the same thing in Google, most likely Istio. So "How do you connect those environments?" is the question.

As a company, that's the problem that we are attacking, besides the problem of API gateway. That's one of the things that we will talk about today.

The last one is that I'm a big fan of service mesh, but in my opinion, the advantage of service mesh is not only those 3 problems that it solves. That's only where it starts.

Routing, security, and observability are the first problems and probably the most attractive ones that people want to fix. But there's way more to it, because we are abstracting the network, and if we are abstracting the network, there is way more we can do.

For instance, debugging the application. We can do some very interesting security stuff. We can do canary deployment. There is a lot more we can do because we are abstracting the network.

This is where we believe that the service mesh will go beyond.

That's, in a nutshell, the things we see in the market.

The pluses of microservices

As I said, microservices, big fan. Everybody needs to use them.

We solve tons of problems by having microservices:

Improved productivity and speed

A convention that forces good software design

Encapsulation of knowledge

Flexible span of technologies, and scalability

It's just amazing how fast we can put stuff in production. The design is the right one. It's a separation of concerns, which is the right way to do this. Encapsulation, flexibility, all good stuff.

And the minuses

But like everything we're doing in this market, we're solving 1 problem and introducing a lot of new ones:

More dependencies and complexity

Network latency and other performance issues

More complex and costly deployments

End-to-end testing is more difficult

Complexity

Right now, it's really hard to understand the state of the application. It's spread all around; you don't know where it is.

Performance

You have a network involved. Is there latency? What's going on there? Where is the problem?

Cost

Now you need to buy more tools like ours. Ha. You need to pay.

Testing

How do you test something? If before you did a lot of unit tests and a few integration tests, now most of the stuff that you're doing is integration tests.

I'm reiterating a little bit just to make sure that everybody's talking the same language.

Managing hundreds of microservices

On the left side of this slide is what a monolithic application looks like, and on the right is what we did with microservices. The application itself is now built from a lot of microservices.

This is great, but this is a very simplified diagram. Here is how it really looks. This is what people are actually doing out there; companies like Netflix and Twitter have hundreds of microservices. That would be you, right? This is what you will do very soon.

And the question is, "How do you manage 500-plus microservices in production?" Problems happen. How do you find the problem with so many microservices? It's like this tweet from the Honest Status Page Twitter account: "We replaced our monolith with microservices so that every outage could be more like a murder mystery." People don't know what to do.

So, again, as you solve 1 problem, suddenly you're discovering new tooling. Before, you might do metrics for monolithic apps with APM, and now maybe Prometheus, right? Because the scale is way bigger.

Logging: same thing. Before, here's the monolithic; is it on your node? Just take the log. Now, the logs are spread all around. You don't even know where to take it from, and the correlation doesn't make sense because there are so many replications of the same instance. You can't figure out where your request landed.

So we need something like OpenTelemetry instead of Splunk. The idea is tracing. It needs to be transactional logging. When the request comes in, I need to follow it, so that it will make sense afterward.
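The idea of following a single request can be sketched in a few lines of Go. This is a minimal illustration, not how Gloo or any tracing library does it: the middleware reuses an incoming request ID or assigns a new one, so every log line for that request carries the same value. The header name matches the one Envoy uses; `withRequestID`, `demoHandler`, and `callOnce` are hypothetical names for this sketch.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// requestIDHeader is the header used to correlate log lines for one request.
const requestIDHeader = "X-Request-Id"

// newRequestID returns a random 16-character hex identifier.
func newRequestID() string {
	b := make([]byte, 8)
	if _, err := rand.Read(b); err != nil {
		panic(err)
	}
	return hex.EncodeToString(b)
}

// withRequestID reuses an incoming request ID or assigns a new one,
// then echoes it on the response so callers can correlate logs.
func withRequestID(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		id := r.Header.Get(requestIDHeader)
		if id == "" {
			id = newRequestID()
			r.Header.Set(requestIDHeader, id)
		}
		w.Header().Set(requestIDHeader, id)
		next.ServeHTTP(w, r)
	})
}

// demoHandler is a stand-in service that logs with the request ID attached.
func demoHandler() http.Handler {
	return withRequestID(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Printf("request_id=%s path=%s\n", r.Header.Get(requestIDHeader), r.URL.Path)
	}))
}

// callOnce sends one in-memory request through h and returns the echoed ID.
func callOnce(h http.Handler, incomingID string) string {
	req := httptest.NewRequest(http.MethodGet, "/orders", nil)
	if incomingID != "" {
		req.Header.Set(requestIDHeader, incomingID)
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Header().Get(requestIDHeader)
}

func main() {
	h := demoHandler()
	fmt.Println(callOnce(h, "abc123")) // an existing ID is preserved
	fmt.Println(len(callOnce(h, "")))  // a fresh ID is generated when none arrives
}
```

A service that forwards the same header on its outgoing calls lets the logs from every hop be joined on one value.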

Debugging. Native debugger for the monolith, right? I can just attach the debugger. Are any of you debugging microservices today? No. We're troubleshooting. We never debug anymore. It's troubleshooting.

That's one of the things that we as a company solved. We created a project called Squash that orchestrates the debugger for you so it can attach the debugger to each of the microservices. It's open source. You can go try it.

Architecture. It's the difference between SOA and microservices.

Deployment. There are options like Puppet, Chef, and Ansible for monolith and Helm for microservices.

Testing. Now way more of your testing is going to be integration testing. It was 80% unit testing and 20% integration testing before, and now that is reversed.

And managing hundreds of APIs

Now the question is, "How do we manage all those APIs?" You want one place to be able to set up all the security policies and so on. You don't want to spread it out in all your microservices. It's just too hard.

And how do you make sure that each team is enforcing it? Doesn't make a lot of sense.

Same thing with security. You need to make sure that everything is secure. Zero-trust networking is the buzzword right now. We don't know where things are coming from, but if it's in the network, we need to inspect everything.

And then observability. That can be managed on the edge. API gateway probably can do a very good job with it. But with microservices, what's called east-west traffic should also be secure. You also need to set the routing and make sure that you can see what's going on in the cluster.

Now imagine that you're doing all this over the cloud. One of our customers wanted to do a canary deployment in 3 datacenters, and if the first one succeeds, the second one succeeds, but the third one fails, they want to roll back all of them.

Now everything is way more complex when you're talking about multi-datacenter, let alone if you're taking this application and spreading it all around. So that becomes very complex.
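The all-or-nothing canary from that customer example can be sketched as follows. This is a simplified illustration under stated assumptions: `deployFunc` and `rollbackFunc` are hypothetical hooks standing in for whatever tooling performs the per-datacenter canary, and the datacenter names are made up.

```go
package main

import (
	"errors"
	"fmt"
)

// deployFunc and rollbackFunc are hypothetical hooks for the real tooling.
type deployFunc func(datacenter string) error
type rollbackFunc func(datacenter string)

// rolloutAll deploys to each datacenter in order. On the first failure it
// rolls back every datacenter touched so far, most recent first.
func rolloutAll(dcs []string, deploy deployFunc, rollback rollbackFunc) error {
	var attempted []string
	for _, dc := range dcs {
		attempted = append(attempted, dc)
		if err := deploy(dc); err != nil {
			for i := len(attempted) - 1; i >= 0; i-- {
				rollback(attempted[i])
			}
			return fmt.Errorf("canary failed in %s: %w", dc, err)
		}
	}
	return nil
}

// simulate runs a rollout in which failAt (if non-empty) is the failing
// datacenter, and returns the datacenters that were rolled back.
func simulate(dcs []string, failAt string) (rolledBack []string, err error) {
	deploy := func(dc string) error {
		if dc == failAt {
			return errors.New("health check failed")
		}
		return nil
	}
	rollback := func(dc string) { rolledBack = append(rolledBack, dc) }
	err = rolloutAll(dcs, deploy, rollback)
	return rolledBack, err
}

func main() {
	rolledBack, err := simulate([]string{"dc1", "dc2", "dc3"}, "dc3")
	fmt.Println(rolledBack, err)
}
```

The hard part in practice is that each hook crosses a network boundary and can itself fail, which this sketch does not model.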

Gloo, or service mesh?

And this is why we created Gloo. We believe that there should be a new API gateway out there.

First of all, companies want to go service mesh, but service mesh is a very complex solution, and a lot of the time it isn't even necessary. People start with Envoy because Envoy is the building block of every service mesh today, minus Linkerd.

And most likely, it will be easier for them to start on the edge. But they want to see how Envoy works so that they will be able to extend that. That's why we built Gloo.

Gloo is a control plane for Envoy Proxy. Its job is to glue those environments; this is why it's named Gloo. If you have instances of OpenStack, EC2, or whatever you need, if you have some services, or if you even have serverless, you want to glue those together. You want to make sure that you will be able to manage that.

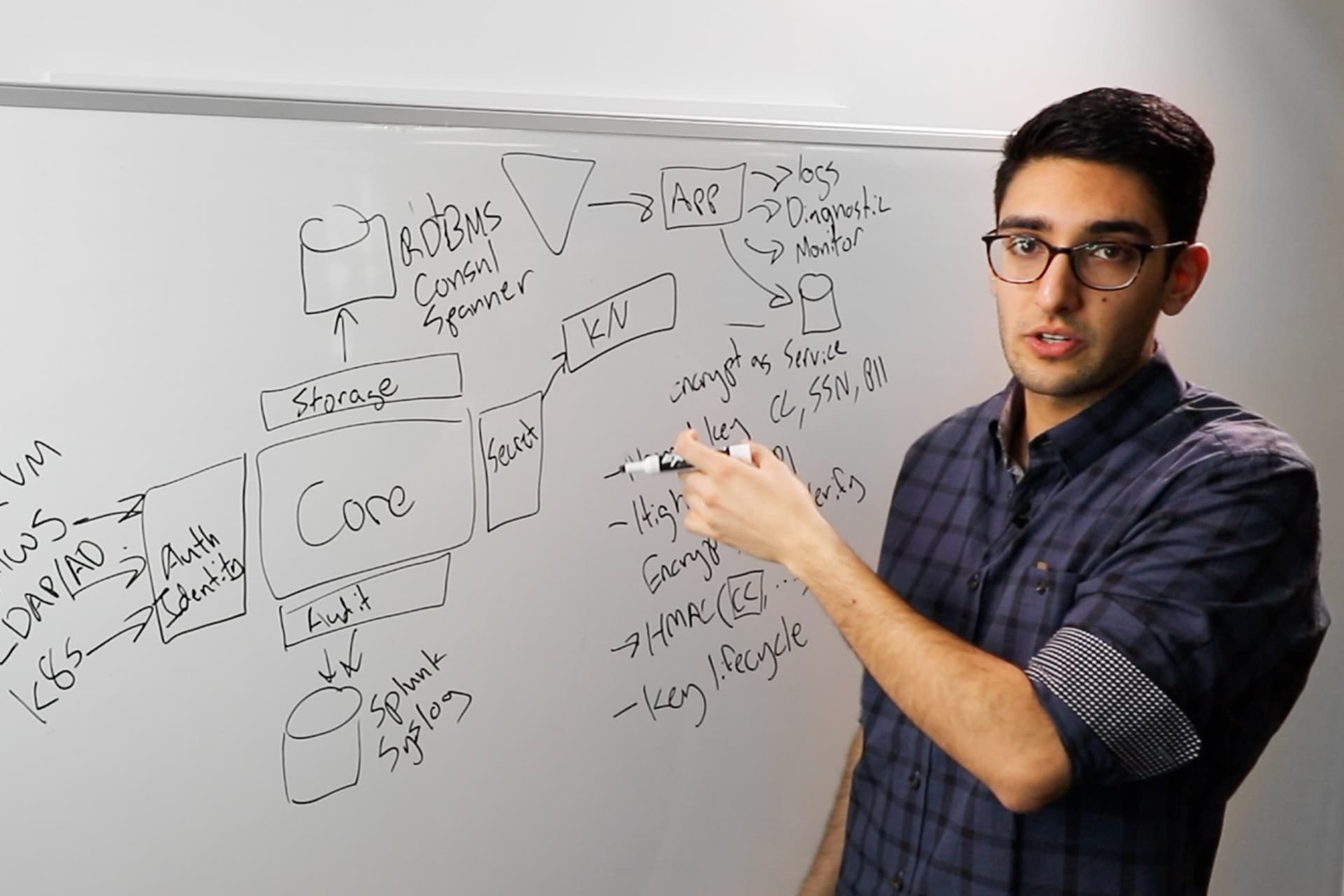

The architecture of Gloo

Let's look at Gloo and understand what's special about this architecture. We'll see a little bit how it works beautifully with everything that exists out there. And then we'll talk a little bit about how it works seamlessly with the architecture of Hashi.

The architecture of Gloo is very pluggable on purpose. I worked at a startup called DynamicOps that was acquired by VMware, and what we realized there was that there is no such thing as simple customers. You already have investments in previous tooling, and we should embrace that. We shouldn't just throw it away. That's never working. We understood that everything should be pluggable.

Gloo basically watches. It's pretty dumb. It's watching 3 things:

Environment

Secrets

Configuration

Gloo watches your environment, and that means any environment that we want, and this is also pluggable. If you want to create your own environment, you can plug it in. It can discover Consul, your Kubernetes instances, your VMware instances, EC2, whatever.

The second thing that it is watching is secrets. One source is Kubernetes secrets, so it can send them to Envoy. The other is Vault. Those are the 2 secret stores that we are watching. Every time that something changes, we know about it and we know how to update the snapshot for Envoy.

The last thing Gloo is watching is the configuration of the user. We natively run on Kubernetes, which means that we are using what are called CRDs as the storage for it, which means that you don't need to back up active Cassandra clusters like you had to with a legacy API gateway.

But the beauty of Gloo and how we build it is that you don't have to do it in CRDs. You can use Consul as storage, for instance. And if you are using Consul as storage, that means that we don't need Kubernetes whatsoever. You can if you want, but you don't have to.

We have a customer that is using it via both. They're using Kubernetes and they're using Consul together, and Gloo manages them all together.

Gloo is open source. Go look at the Gloo Solo repository.

The discovery is totally pluggable.

The storage is pluggable, which means that you can use whatever environment you want.

But what is more important, after we discover something changed, we are sending this information to a plugin system. And each plugin takes this language and translates it to an Envoy snapshot. Then Gloo aggregates everything back and sends it to Envoy.

It's really strong and giving us the ability to extend our platform to a lot of use cases. And the reason we're getting a lot of customers is because they are getting a tailor-made solution. It's the technology that they want, and they are pretty overwhelmed with how quick we are giving them the results.
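The plugin flow described above (discover, hand each item to the plugins, aggregate into one snapshot) can be sketched in Go. This is an illustration of the pattern only, not Gloo's actual types: `Upstream`, `Snapshot`, `Plugin`, and `staticPlugin` are hypothetical, and a real Envoy snapshot is far richer than a map of names to addresses.

```go
package main

import (
	"fmt"
	"sort"
)

// Upstream is a discovered destination; Kind might be "consul" or "kube".
type Upstream struct {
	Name string
	Kind string
	Addr string
}

// Snapshot is a simplified stand-in for the proxy configuration.
type Snapshot struct {
	Clusters map[string]string
}

// Plugin translates the upstreams it understands into snapshot entries.
type Plugin interface {
	Handles(kind string) bool
	Translate(u Upstream, out *Snapshot)
}

// staticPlugin handles a single kind and prefixes cluster names with it.
type staticPlugin struct{ kind string }

func (p staticPlugin) Handles(kind string) bool { return kind == p.kind }

func (p staticPlugin) Translate(u Upstream, out *Snapshot) {
	out.Clusters[p.kind+"_"+u.Name] = u.Addr
}

// buildSnapshot runs every upstream through the plugins that claim it and
// aggregates the results into one snapshot.
func buildSnapshot(upstreams []Upstream, plugins []Plugin) Snapshot {
	snap := Snapshot{Clusters: map[string]string{}}
	for _, u := range upstreams {
		for _, p := range plugins {
			if p.Handles(u.Kind) {
				p.Translate(u, &snap)
			}
		}
	}
	return snap
}

// demoSnapshot builds a snapshot from two made-up upstreams.
func demoSnapshot() Snapshot {
	upstreams := []Upstream{
		{Name: "petclinic", Kind: "consul", Addr: "10.0.0.5:8080"},
		{Name: "vets", Kind: "kube", Addr: "10.1.0.9:8080"},
	}
	plugins := []Plugin{staticPlugin{"consul"}, staticPlugin{"kube"}}
	return buildSnapshot(upstreams, plugins)
}

func main() {
	snap := demoSnapshot()
	names := make([]string, 0, len(snap.Clusters))
	for n := range snap.Clusters {
		names = append(names, n)
	}
	sort.Strings(names)
	for _, n := range names {
		fmt.Println(n, "->", snap.Clusters[n])
	}
}
```

Supporting a new environment in this shape means adding one more `Plugin` implementation; the aggregation loop stays the same.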

Using Gloo, with Consul as storage

Let's talk about Hashi. Because this architecture is really flexible, you can use Consul as storage. Everything in Gloo is a declarative API. You're telling us what you want, and we're making sure that it will eventually and consistently happen.

And as I said, you don't need to back up and so on. So it can use Consul as storage, not only as a discovery resource. I think this is beautiful.
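A declarative API usually comes down to a reconcile step: compare what the user asked for with what is currently configured, and compute the changes needed to converge. The sketch below shows that step in isolation, assuming routes are stored as a simple path-to-upstream map (for example, as keys in a key-value store). The function name and data shape are assumptions for illustration, not Gloo's storage format.

```go
package main

import (
	"fmt"
	"sort"
)

// reconcile compares desired routes (path -> upstream) with the routes
// actually configured and returns the paths to apply and to remove.
func reconcile(desired, actual map[string]string) (apply, remove []string) {
	for path, upstream := range desired {
		current, ok := actual[path]
		if !ok || current != upstream {
			apply = append(apply, path)
		}
	}
	for path := range actual {
		if _, ok := desired[path]; !ok {
			remove = append(remove, path)
		}
	}
	// Sort so the result does not depend on map iteration order.
	sort.Strings(apply)
	sort.Strings(remove)
	return apply, remove
}

func main() {
	desired := map[string]string{"/": "petclinic", "/vet": "vets-go"}
	actual := map[string]string{"/": "petclinic", "/old": "legacy"}
	apply, remove := reconcile(desired, actual)
	fmt.Println("apply:", apply, "remove:", remove)
}
```

Running this step on every change notification, rather than on a timer, is what makes updates feel immediate.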

And you can run on Nomad. Why not, right? It can be your ingress to Nomad. As I said, Gloo is always watching, and you'll see how quick it's happening.

And we are also watching your secrets. That means that every time there is a rotation, we know about it and we send it back to Envoy. When the request comes through Envoy, we already know how to attach the credentials or invoke an action with AWS Lambda, for instance. You don't need to worry about that, and it's really seamless to the user.

Envoy's role

Envoy is an important piece of infrastructure because it offers:

Performance

Extensibility

Community

API

Migration

The first reason we chose Envoy is the performance. Most of our customers evaluating Envoy versus another API gateway were just amazed by the results.

The second one is extensibility. Gloo is extensible because of Envoy. We knew that this huge ecosystem of Envoy will add so much stuff, and that was really important to us because we wanted to offer our customers this pluggable system.

On the request path, you can plug in what's called a "filter," and you can extend the request. As a company, that's basically our differentiator. We just announced a transformation filter, a caching filter, and a Lambda filter. That's a huge advantage.

Community. This is what everybody is using today for service mesh, and we, as contributors, can go very fast as a community.

It's not like an NGINX; it's not a static file anymore. You can have an API to drive the configuration of Envoy.

For us, a lot of the use cases that we are handling are migration from monolithic to microservices, and that's the reason that Envoy was chosen from the get-go. For us, that was a no-brainer: Envoy should be the proxy that we choose.

Gloo security

Security is the most important thing. I will pay everything to protect my kids. I'm sure you will pay everything to protect your application and your environment. This is something that people are outsourcing because they want to make sure that it's being dealt with very well.

For Gloo, we have authorization in layers. So, basic auth, Dex, OpenID Connect, JWT (JSON Web Token), API keys, and custom auth, with everything working and tested and very solid to give support for your application.

We're also doing stuff with policy, like OPA, which is Open Policy Agent. One of our customers manages a lot of dentists, and they wanted to make sure that, while the backend service is returning everything, including the license of the dentist, they don't expose that to everybody, only to some people.

What they are doing is, when the request goes to OPA, it returns an OK for the request, but it also tells us which fields this user is allowed to see. When the request gets down to the backend itself, the backend returns all the fields, and we filter them before bringing the response to the user. You don't need to put this logic in your application. It's too complex.
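The filtering step in that example can be sketched as follows. It assumes the policy decision has already produced a list of allowed field names; the OPA call itself is not shown, and the field names and sample record are made up for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// filterFields keeps only the allowed top-level fields of a JSON object.
func filterFields(body []byte, allowed []string) ([]byte, error) {
	var full map[string]json.RawMessage
	if err := json.Unmarshal(body, &full); err != nil {
		return nil, err
	}
	out := make(map[string]json.RawMessage, len(allowed))
	for _, field := range allowed {
		if value, ok := full[field]; ok {
			out[field] = value
		}
	}
	return json.Marshal(out)
}

// filterToString is a convenience wrapper for printing and testing.
func filterToString(body string, allowed ...string) string {
	out, err := filterFields([]byte(body), allowed)
	if err != nil {
		return "error: " + err.Error()
	}
	return string(out)
}

func main() {
	backend := `{"name":"Dr. Lee","city":"Boston","license":"MA-12345"}`
	// The backend returns everything; this caller may only see name and city.
	fmt.Println(filterToString(backend, "name", "city"))
}
```

Because the allow-list comes from the policy engine, changing who can see the license is a policy change and not an application release.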

RBAC, of course. Keycloak integration, and CORS, and delegation.

Delegation is very important. This is a new feature that we just announced. What's exciting about that is the ability to delegate the domain to someone else.

Let's say that I own Solo.io, but I want to give my marketing team the ability to manage their domain. I can delegate Solo.io/marketing to my marketing team, and they can do whatever they want, and then they can delegate it to somewhere else and so on. It's giving you the ability to delegate inside the organization.
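One way to picture delegation is as a longest-prefix lookup: the most specific delegated prefix that matches a path decides who owns it. The sketch below illustrates that idea only; it is not Gloo's delegation API, and the team names and prefixes are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// hasPathPrefix reports whether path is prefix itself or lies beneath it,
// so "/marketing" matches "/marketing/blog" but not "/marketingx".
func hasPathPrefix(path, prefix string) bool {
	if !strings.HasPrefix(path, prefix) {
		return false
	}
	return len(path) == len(prefix) || path[len(prefix)] == '/'
}

// ownerFor returns the team owning path: the owner of the longest matching
// delegated prefix, or rootOwner when no delegation matches.
func ownerFor(path string, delegations map[string]string, rootOwner string) string {
	best, owner := "", rootOwner
	for prefix, team := range delegations {
		if hasPathPrefix(path, prefix) && len(prefix) > len(best) {
			best, owner = prefix, team
		}
	}
	return owner
}

func main() {
	delegations := map[string]string{
		"/marketing":        "marketing",
		"/marketing/events": "events", // delegated further by marketing
	}
	for _, p := range []string{"/pricing", "/marketing/blog", "/marketing/events/2019"} {
		fmt.Println(p, "->", ownerFor(p, delegations, "platform"))
	}
}
```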

WAF (web application firewall). Very excited about it. I don't know of anybody else with an Envoy-based gateway that supports WAF. We do. So, more security. Again, it's available in Gloo.

Rate limiting for DDoS protection. Circuit breaking, very important.
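Rate limiting is often implemented as a token bucket. The sketch below shows the technique in general terms and is not a description of how Gloo or Envoy implement it: each request spends one token, tokens refill at a fixed rate, and requests are rejected while the bucket is empty. Time is passed in explicitly so the behavior is deterministic; all names are hypothetical.

```go
package main

import (
	"fmt"
	"math"
	"time"
)

// tokenBucket allows bursts up to capacity and refills at rate tokens/second.
type tokenBucket struct {
	capacity float64
	rate     float64
	tokens   float64
	last     time.Time
}

// newTokenBucket starts with a full bucket at time now.
func newTokenBucket(capacity, rate float64, now time.Time) *tokenBucket {
	return &tokenBucket{capacity: capacity, rate: rate, tokens: capacity, last: now}
}

// allow refills the bucket for the elapsed time, then spends one token
// if one is available.
func (b *tokenBucket) allow(now time.Time) bool {
	elapsed := now.Sub(b.last).Seconds()
	b.tokens = math.Min(b.capacity, b.tokens+elapsed*b.rate)
	b.last = now
	if b.tokens >= 1 {
		b.tokens--
		return true
	}
	return false
}

// countAllowed replays requests at the given millisecond offsets and
// returns how many were allowed.
func countAllowed(capacity, rate float64, offsetsMs []int) int {
	start := time.Unix(0, 0)
	bucket := newTokenBucket(capacity, rate, start)
	allowed := 0
	for _, ms := range offsetsMs {
		if bucket.allow(start.Add(time.Duration(ms) * time.Millisecond)) {
			allowed++
		}
	}
	return allowed
}

func main() {
	// Burst of 3 at t=0 against capacity 2, then one request a second later.
	fmt.Println(countAllowed(2, 1, []int{0, 0, 0, 1000}))
}
```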

And the last one is encryption. Of course, mutual TLS. Let's Encrypt, mTLS upstream and downstream. Make sure that it's all working nicely together.

We integrated with everything that exists there. I want to show you them. I think it's really more interesting. But everything that you wanted to glue, we basically can do this.

Gloo and Knative

If you are interested in Knative: When Google open-sourced it in the beginning, they made it depend only on Istio. They got huge pushback from the community. Knative needed a good ingress, that's all. It doesn't need the service mesh. It's like I ask someone to clean my room and the way they do it is just bumping everything. Yeah, it's clean, but you don't need to do that. It's way more complex.

Google reached out to us, and we were the first official implementation with Knative. And every feature that Istio is supplying to Knative, we are supporting as well. Go try it. It's really simple and easy.

Using Gloo with the Hashi stack

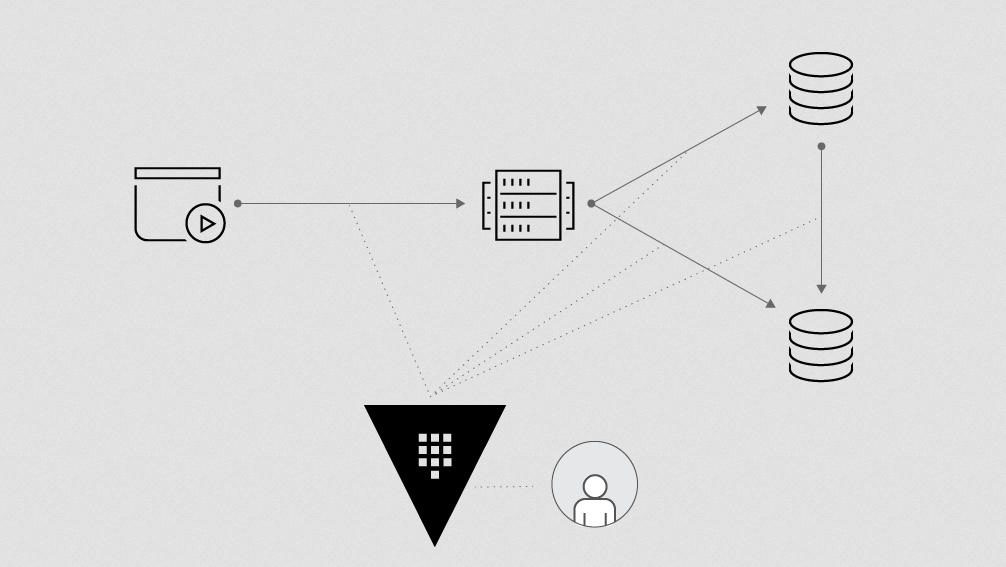

Let's do real quick the demo with the HashiCorp stack and Kubernetes. This should look familiar.

What we have here is Nomad. We don't have any job right now. And what we have here is a clean Consul installation. And here is Vault.

What we're going to do right now is really simple, which is to install Gloo on Nomad. The storage will be in Consul, and Gloo is going to watch Vault. So it's like all this stack working together and giving you this ability to manage that.

First, we use Levant, which is the Helm for Nomad installation. Immediately we got a job. It's running in 4 instances. It's very important to us to separate the control plane from the data plane. That's the right way to do the API gateway or anything else.

You can see here that you have the proxy, which is Envoy, in our case. There is Gloo, which is our intermediate language. It's way more low-level than what we hope people will use. And then there is the gateway itself, which is bringing all the API gateway management and much more. And we're discovering everything that exists. The discovery is going to Consul to discover everything we have there. And, as you see, it's installed.

It's really simple. I took Nomad, installed Gloo, and installed a demo application in that.

The next thing that we need to do is add a route. We want to see that we can get to this application. For that, I'm going to cat the route and copy that command for Gloo to create the route. As you see, it was created immediately, and the last thing that I will do is port-forward the gateway and cURL it, and you will see that it's working. Pretty simple, right?

So I just installed Gloo to Consul. If I go right now to Consul, to the key value, we see that everything is stored here, the route and everything. Hashi is giving us the ability to browse and see everything that exists there.

Gloo and Kubernetes

Now let's do something else.

This is a very simple application, a Spring application. You can see here the code and a founder, the orders, and so on. It's really simple, a monolithic application. But I have a new engineer in my team, and I want to add another column that shows the location of the user.

What do I need to do? First of all, I need to learn the code. It's a monolithic application, and usually that's way more complex than what I'm showing here. The second thing, you need to add the functionality. Then you need to test the functionality. Then you need to regression test the functionality to make sure that you didn't break anything. And then you need to redeploy the monolithic application.

So let's look real quick at how Gloo can help you. This is the Gloo UI. What's special about the Gloo UI is the fact that the first thing that you're getting is understanding what's going on in your system. As I said, discovery is really important to us. You're getting health information about Envoy. You know that it's healthy. If it's not healthy, you will know about it because we immediately are going to show you.

The second thing is that it shows you that you have 2 virtual services configured. It also shows you problems. For instance, here I have 29 Kubernetes upstream containers successfully configured, but I have 1 static upstream that is a problem. I can click and I see immediately why it was rejected. I can see what's wrong with my system.

Let's go back and look at what we discovered so far. This is my environment. It's installed on Kubernetes. You can see that it's green, meaning healthy. You can click on this and you can see that it's accepted. So it's all good. You continue going and you can see that there's 1 that is not accepted.

What we can do right now is see the virtual service itself. What you will see is that the Gloo UI is served by Gloo. And the other thing that you can see is that I created a default application, and it's healthy, which is good. When I click on this, I can see there's 1 route, and this route is going to the monolithic application.

Now we'll go back to the Gloo UI, to the upstreams, and look for this application, PetsClinic. You can see we have 4 instances of this application. The first one is the monolithic application, lifted and shifted. The second one is the database that the application is going to explore. The third one is another instance of the database.

The last one is a very simple Go microservice that I wrote to replace all those steps I described earlier, about learning the code, adding the functionality, and testing. It's really about 100 lines. All it does is go to the database, using Go, because that's the language that I'm comfortable with, take the information from there, and render it as HTML.

Now I'm going to add the Go microservice to the default application. I will say, "Please go to the default application." I add this Go microservice, routed at /vet.

You can see it's healthy, which means it's already there. If we go back now to the PetsClinic, this is a Java application. You can see the Go microservice already. You see how quick it is, and the reason it's so quick is because Gloo is a really good control plane. And Envoy is really solid.

Now we're going to come back to the contact, and just for fun, let's set it up so that every time someone clicks on this page, I want to serve a Lambda. Let's see how Gloo can help you with this.

As you can see, we can put all the authorization and rate limiting and everything on the virtual service.

First of all, I need to add an upstream because I want an AWS upstream. Creating an upstream is pretty simple. I'm coming here, I'm giving it a name, I'm choosing an AWS one. I'm going to choose the secret that I want to use. And I'm going to click.

Now see what happened. Immediately it created one, but not only this. It found all the Lambdas that are there at once. The discovery already managed to create the upstream, and you saw how quick it is. It discovered all the Lambdas, including versions and so on, and is serving them.

Let's do the other one from here. You'll see that we can do both. We create a route. It will come to the AWS default. We are going to choose contact from tree.

Now, Lambda is always returning JSON, but I want to show you here HTML. So we created a crazy transformation filter on top of Envoy that will give you the ability to transform from whatever you want to whatever you want. I'm going to enable it. Boom, it's ready.
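The effect of such a transformation, JSON in and HTML out, can be illustrated with a few lines of Go. This is only a sketch of the idea using the standard library's `html/template`; Gloo's transformation filter runs inside Envoy and is configured differently. The payload fields `title` and `message` are assumptions for the example.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"html/template"
)

// page is the HTML shape the JSON payload is rendered into.
// html/template escapes values, so payload text cannot inject markup.
var page = template.Must(template.New("contact").Parse(
	`<h1>{{.Title}}</h1><p>{{.Message}}</p>`))

// jsonToHTML decodes a JSON body and renders it with the template above.
func jsonToHTML(body string) (string, error) {
	var payload struct {
		Title   string `json:"title"`
		Message string `json:"message"`
	}
	if err := json.Unmarshal([]byte(body), &payload); err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := page.Execute(&buf, payload); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	out, err := jsonToHTML(`{"title":"Contact","message":"Hello from Lambda"}`)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out)
}
```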

So this is the monolithic application, and this is the Go microservice. Every time that I click this button, it's spinning up AWS Lambda. That's just an example; you can do with it everything you want. Then glue those environments together and you have an application that you have added new functionality to and extended.

That in a nutshell is what Gloo is. I really recommend you go and check it out. As I said, it's open source, so you can go and look at it.

SuperGloo and the service mesh interface

A year ago, I understood that there's going to be more than one service mesh, and people will need the support. And the reason is, every cloud provider will choose a different one. Potentially, you should use them because they're probably integrated very nicely with their environment. Now the question is, "How do you glue those together?" And I came up with the vision of SuperGloo.

After 6 months, Microsoft reached out to us and said, "This is great. We love it; we want it." And we knocked together what we call SMI, service mesh interface.

Service mesh interface is the ability to unify the language of every service mesh. Specifically, we are also grouping them to flatten the network. If you're familiar with what Mesos was doing before, the vision was that you have a lot of small servers and Mesos treats them as one big cluster.

We're doing the same thing with service mesh, flattening the networking, because everybody is using Envoy. We can glue them together as one and say, "All of this is your production environment. Use this root certificate and talk to this Prometheus," versus this one, which is a new development environment, so the policy needs to be different and so on.

That's SuperGloo. We are working right now with VMware on interoperability, on the grouping that we are doing, so that you get the global namespace and you don't care about the implementation. That's coming very soon. Stay tuned.

The partner team on SMI with Microsoft included Linkerd and HashiCorp. And we also brought Hashi in on the one that we're doing with VMware.

I have way more to say, but I don't have time. Thank you.