HashiCorp Terraform 0.11

We are pleased to announce the release of HashiCorp Terraform 0.11. Terraform enables you to safely and predictably create, change, and improve infrastructure via declarative code.

Highlights of Terraform 0.11 include:

- Improved Terraform Registry integration with module versioning and private registry support

- Per-module provider configuration

- Streamlined CLI workflow with the `terraform apply` command

For full details on the Terraform 0.11 release, please refer to the Terraform Core changelog and the 0.11 upgrade guide.

Alongside Terraform 0.11 development, many Terraform providers have received improvements since the 0.10 release, including:

- Amazon Web Services Provider

- Microsoft Azure Provider

- Google Cloud Provider

- VMware vSphere Provider

- HashiCorp Vault Provider

Also during the 0.11 development period, two new providers were added via the Provider Development Program: Terraform can now be used to manage resources for LogicMonitor (a performance monitoring platform) and cloudscale.ch (a cloud compute provider).

»Improved Terraform Registry Integration

The Terraform Registry was launched at HashiConf 2017 with initial support added in Terraform 0.10.6 to coincide with its launch. Terraform 0.11 completes this integration with full support for module version constraints and private registries.

»Module Version Constraints

Prior to Terraform 0.11, Terraform would always install the latest module version available at a particular source, which made it difficult to roll out a new module version gradually across potentially many configurations.

In conjunction with the Terraform Registry, Terraform now has first-class support for version constraints on modules, building on the equivalent support for provider plugins added in Terraform 0.10:

```hcl
module "example" {
  source  = "hashicorp/consul/azurerm"
  version = "0.0.4"
}
```

With the above configuration, Terraform will always install version 0.0.4 of the Consul module for Microsoft Azure, regardless of any new versions being released. The author of this configuration can then choose to upgrade at a convenient time, without being forced by the system.

While this is particularly important for the public Terraform Registry, where new releases of public modules may arrive at any time, this is also a much-requested feature for those maintaining internal, private modules that are used across numerous environment configurations.

Unlike provider plugins, it is possible to mix different versions of the same module within a given configuration, allowing for use-cases such as blue/green deployment where one instance of a module is upgraded while leaving the other untouched. Each module block resolves its constraint independently of all others, giving operators full control over upgrades.
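As a sketch of the blue/green case, assuming the same registry module as above, two module blocks can pin different releases side by side. The module names `consul_blue` and `consul_green` and the version `0.0.5` are hypothetical, and the module's required input variables are omitted:

```hcl
# "Blue" stays on the release that is currently deployed.
module "consul_blue" {
  source  = "hashicorp/consul/azurerm"
  version = "0.0.4"

  # ... module input variables omitted ...
}

# "Green" trials a newer release (hypothetical version number).
# Each block resolves its own constraint, so upgrading this one
# leaves consul_blue untouched.
module "consul_green" {
  source  = "hashicorp/consul/azurerm"
  version = "0.0.5"

  # ... module input variables omitted ...
}
```

Once the green deployment is verified, the blue block can be moved to the same version, or removed, at a time of the operator's choosing.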

Changes to the version constraints for both modules and provider plugins are handled by terraform init. After changing version constraints in the configuration, run terraform init -upgrade to install the latest version of each module and each provider plugin that is allowed within the given constraints:

```console
$ terraform init -upgrade
Upgrading modules...
- module.consul_servers
  Updating source "./modules/consul-cluster"
- module.consul_clients
  Updating source "./modules/consul-cluster"
- module.consul_servers.security_group_rules
  Updating source "../consul-security-group-rules"
- module.consul_servers.iam_policies
  Updating source "../consul-iam-policies"
- module.consul_clients.security_group_rules
  Updating source "../consul-security-group-rules"
- module.consul_clients.iam_policies
  Updating source "../consul-iam-policies"

Initializing provider plugins...
- Checking for available provider plugins on https://releases.hashicorp.com...
- Downloading plugin for provider "aws" (1.2.0)...
- Downloading plugin for provider "template" (1.0.0)...

Terraform has been successfully initialized!
...
```
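Constraints do not have to be exact pins. Below is a minimal sketch of range constraints that `terraform init -upgrade` would resolve to the newest matching releases. The module name and version numbers are illustrative assumptions, and module version constraints apply only to modules installed from a registry, not to local paths such as `./modules/consul-cluster` in the output above:

```hcl
# Any AWS provider release >= 1.2 and < 2.0.
provider "aws" {
  version = "~> 1.2"
}

# Patch releases only: >= 0.1.0 and < 0.2.0.
module "consul" {
  source  = "hashicorp/consul/aws"
  version = "~> 0.1.0"
}
```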

»Private Registry Support

The public-facing Terraform Module Registry can only host public modules, and is intended for generally-reusable open source modules that can be used as building blocks across many organizations. Terraform 0.11 introduces support for private module registries, which are offered as part of Terraform Enterprise to streamline distribution and usage of reusable modules within an organization. Private registries use an extended version of the registry source syntax that includes the hostname of the registry, or of the Terraform Enterprise instance providing it:

```hcl
module "example" {
  source  = "app.terraform.io/engineering/nomad/aws"
  version = "1.2.0"
}
```

Private registries share all the same capabilities as the public registry, but require authentication credentials. When used as part of a private install of Terraform Enterprise Premium, the private registry is also deployed within an organization's local network to allow usage without Internet access.
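Terraform 0.11 reads these credentials from the CLI configuration file (`.terraformrc` on Unix-like systems, `terraform.rc` on Windows) rather than from the Terraform configuration itself, so tokens stay out of version control. A minimal sketch; the token value is a placeholder:

```hcl
# CLI configuration file, not part of any Terraform configuration.
# One credentials block per registry hostname.
credentials "app.terraform.io" {
  token = "REPLACE_WITH_API_TOKEN"
}
```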

The Terraform open source project does not include a private registry server implementation, but we have documented the module registry API and welcome the community to create other implementations of this protocol to serve unique needs.

»Per-module Provider Configuration

A big area of current investment for Terraform Core is improving the Terraform configuration format. We have collected feedback on common pain points and are attempting to address these holistically, while also being cautious and respectful of existing usage. Local Values was our first change in this area, released in Terraform 0.10.3, and Terraform 0.11 continues this effort by addressing limitations and usability concerns with modules and their interaction with provider configurations.

In many Terraform configurations, providers are declared only once in the root module and then inherited automatically by resources in any child modules. There are, however, more complex situations where each module in a configuration requires different combinations of provider configurations, such as when a configuration manages resources across multiple regions.

Terraform 0.11 introduces a new mechanism that allows provider configurations to be explicitly passed down into child modules, using the providers argument:

```hcl
provider "google" {
  region = "us-central1"
  alias  = "usc1"
}

provider "google" {
  region = "europe-west2"
  alias  = "euw2"
}

module "network-usc1" {
  source = "tasdikrahman/network/google"

  providers = {
    "google" = "google.usc1"
  }
}

module "network-euw2" {
  source = "tasdikrahman/network/google"

  providers = {
    "google" = "google.euw2"
  }
}
```

The providers map allows each module to have its own "view" of the provider configurations. In the above example, any resource in module.network-usc1 that uses the google provider will use the configuration whose alias is usc1 in this parent module, while module.network-euw2 manages an equivalent set of resources using the configuration whose alias is euw2.

This mechanism also allows more complex scenarios, such as passing multiple aliased provider configurations for the same provider down to a child module. For more information, see the module usage documentation.
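Below is a minimal sketch of that scenario, reusing the `usc1` and `euw2` configurations above. The child module path, its `name` variable, and the choice of `google_compute_address` (a regional resource that takes its region from the provider) are assumptions for illustration. The child declares empty "proxy" provider blocks for each alias it expects, and the caller maps its own configurations onto them:

```hcl
# --- modules/regional-addresses/main.tf (hypothetical child module) ---

# Proxy provider blocks: no settings of their own, they only declare
# which aliased configurations the caller must pass in.
provider "google" {
  alias = "primary"
}

provider "google" {
  alias = "secondary"
}

variable "name" {}

# Each address is created in the region of the configuration it uses.
resource "google_compute_address" "primary" {
  provider = "google.primary"
  name     = "${var.name}-primary"
}

resource "google_compute_address" "secondary" {
  provider = "google.secondary"
  name     = "${var.name}-secondary"
}

# --- root module ---

module "addresses" {
  source = "./modules/regional-addresses"
  name   = "frontend"

  providers = {
    "google.primary"   = "google.usc1"
    "google.secondary" = "google.euw2"
  }
}
```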

Since modules are a central feature of Terraform, further module changes will follow in later releases with ongoing improvements elsewhere in the language, including support for complex-typed variables.

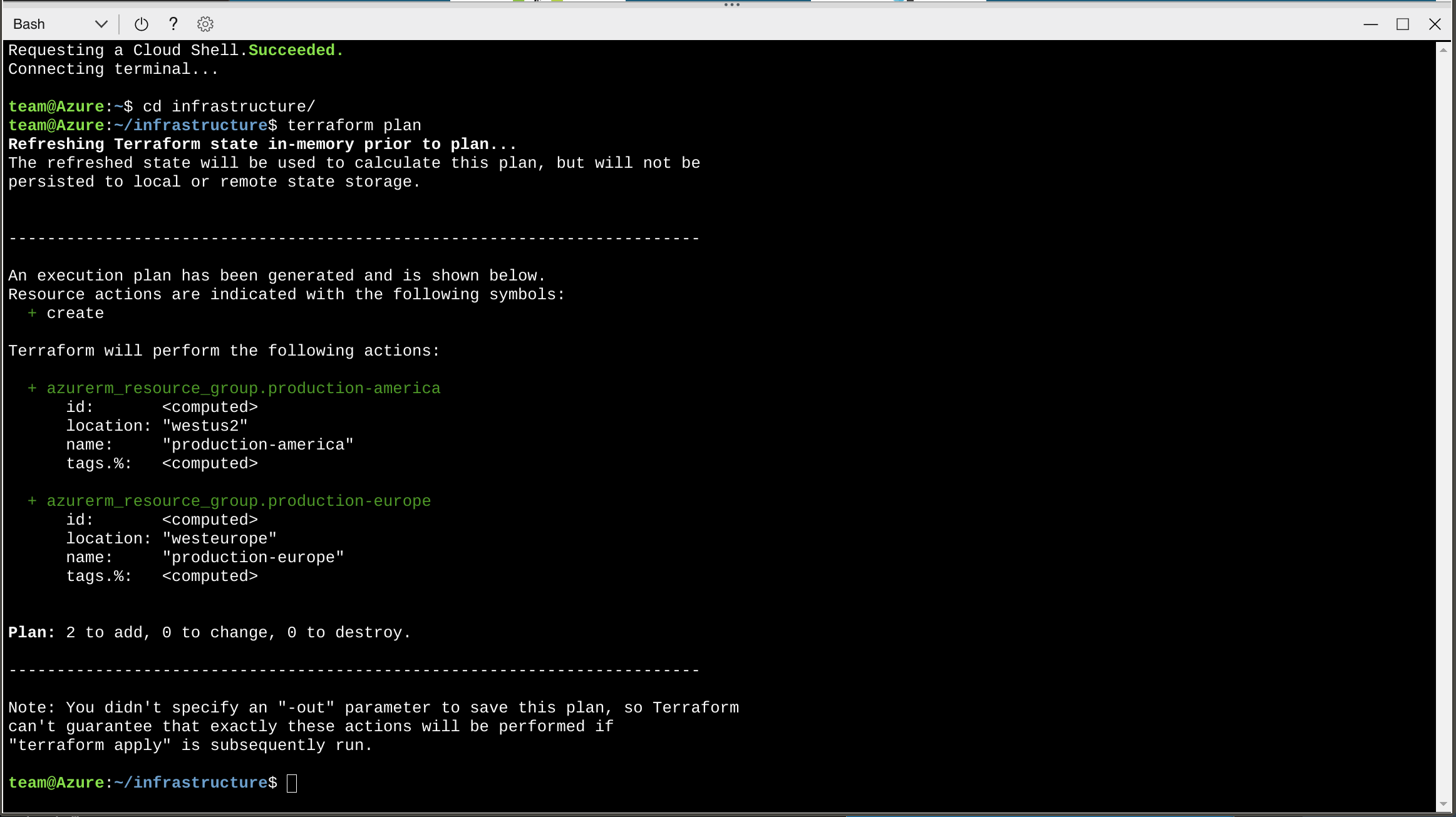

»Streamlined CLI Workflow

The terraform plan command is an important feature of Terraform that allows a user to see which actions Terraform will perform prior to making any changes, increasing confidence that a change will have the desired effect once applied. However, using plans safely has traditionally required writing the plan to disk as a file and then providing that file separately to the terraform apply command.

From Terraform 0.11, the functionality of both of these commands has been combined into the terraform apply command, with an interactive approval step to review the generated plan:

```console
$ terraform apply

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  + aws_instance.example
      id:                           <computed>
      ami:                          "ami-2757f631"
      associate_public_ip_address:  <computed>
      availability_zone:            <computed>
      ebs_block_device.#:           <computed>
      ephemeral_block_device.#:     <computed>
      instance_state:               <computed>
      instance_type:                "t2.micro"
      ipv6_address_count:           <computed>
      ipv6_addresses.#:             <computed>
      key_name:                     <computed>
      network_interface.#:          <computed>
      network_interface_id:         <computed>
      placement_group:              <computed>
      primary_network_interface_id: <computed>
      private_dns:                  <computed>
      private_ip:                   <computed>
      public_dns:                   <computed>
      public_ip:                    <computed>
      root_block_device.#:          <computed>
      security_groups.#:            <computed>
      source_dest_check:            "true"
      subnet_id:                    <computed>
      tenancy:                      <computed>
      volume_tags.%:                <computed>
      vpc_security_group_ids.#:     <computed>


Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value:
```

By generating the plan and applying it in the same command, Terraform can guarantee that the execution plan won't change, without needing to write it to disk. This reduces the risk of potentially-sensitive data being left behind, or accidentally checked into version control.

If the current configuration is using a remote backend that supports state locking, Terraform will also retain the state lock throughout the entire operation, preventing concurrent creation of other plans.
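As a sketch, assuming the S3 backend is in use: S3 supports state locking through a DynamoDB table named by the `dynamodb_table` argument. The bucket, key, region, and table names here are hypothetical, and the table must already exist with a string primary key named `LockID`:

```hcl
terraform {
  backend "s3" {
    bucket = "example-terraform-state"   # hypothetical bucket
    key    = "network/terraform.tfstate"
    region = "us-east-1"

    # Enables state locking; the lock is held for the whole
    # plan-and-apply run performed by "terraform apply".
    dynamodb_table = "example-terraform-locks" # hypothetical table
  }
}
```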

This new usage is recommended as the primary workflow for interactive use. The previous workflow of using terraform plan to generate a plan file is still supported and recommended when running Terraform in automation.

This new approach was suggested and implemented by community contributor David Glasser, originally as an opt-in mode. After seeing it in action, the Terraform team realized that this approach is actually a much better default behavior. Thanks, David!

»AWS Provider Improvements

Terraform's AWS provider has received numerous improvements and bugfixes since Terraform 0.10.0 was released. It's impossible to describe them all here, but the following sections cover some of the highlights. For full details, please refer to the AWS Provider changelog.

»Network Load Balancer

AWS now offers Network Load Balancers (NLBs) as an extension of the next-generation load balancer architecture previously introduced with Application Load Balancers (ALBs). While an ALB operates at the HTTP layer, an NLB handles arbitrary TCP connections and leaves application-level details up to the backend service.

Since both types of load balancer are built on the same underlying APIs, AWS Provider 1.1.0 renames the aws_alb resource to the more general aws_lb, with the two load balancer types now distinguished by the new load_balancer_type argument:

```hcl
resource "aws_lb" "example" {
  name               = "example-load-balancer"
  load_balancer_type = "network"
  internal           = false

  # security_groups is omitted: NLBs did not accept security groups at launch.
  subnets = ["${aws_subnet.public.*.id}"]
}
```

Network Load Balancer improves over the TCP-balancing functionality of the original Elastic Load Balancer by supporting more flexible routing to different ports on different backends, making it easier to use with arbitrary port number assignments often created in container-based deployments.

Network Load Balancer support was contributed by Paul Stack.
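The flexible port routing can be sketched with a TCP target group in which each registered target listens on its own port, fronted by a TCP listener on the `aws_lb` above. Assumptions: an AWS Provider version that includes the `aws_lb_*` resource names, a hypothetical `vpc_id` variable, and two hypothetical backend instance IDs; health checks are left at their defaults:

```hcl
variable "vpc_id" {}       # hypothetical: an existing VPC
variable "instance_ids" {  # hypothetical: two backend instances
  type = "list"
}

resource "aws_lb_target_group" "example" {
  name     = "example-tcp"
  port     = 8080
  protocol = "TCP"
  vpc_id   = "${var.vpc_id}"
}

# Each target registers with its own port, as is common for
# containers with dynamically assigned host ports.
resource "aws_lb_target_group_attachment" "a" {
  target_group_arn = "${aws_lb_target_group.example.arn}"
  target_id        = "${var.instance_ids[0]}"
  port             = 32768
}

resource "aws_lb_target_group_attachment" "b" {
  target_group_arn = "${aws_lb_target_group.example.arn}"
  target_id        = "${var.instance_ids[1]}"
  port             = 32769
}

resource "aws_lb_listener" "example" {
  load_balancer_arn = "${aws_lb.example.arn}"
  port              = 80
  protocol          = "TCP"

  default_action {
    target_group_arn = "${aws_lb_target_group.example.arn}"
    type             = "forward"
  }
}
```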

»AWS Batch

AWS Batch is a service for scheduling batch jobs in containers managed by the EC2 Container Service. It scales a compute environment automatically in response to the batch jobs that applications submit. AWS Batch support was added over several releases starting with AWS Provider 1.0.0, and Terraform's AWS Provider can now be used to manage job queues, compute environments, and job definitions.

```hcl
resource "aws_batch_compute_environment" "example" {
  compute_environment_name = "sample"

  compute_resources {
    instance_role = "${aws_iam_instance_profile.ecs_instance_role.arn}"

    instance_type = [
      "c4.large",
    ]

    max_vcpus = 16
    min_vcpus = 0

    security_group_ids = [
      "${aws_security_group.sample.id}",
    ]

    subnets = [
      "${aws_subnet.sample.id}",
    ]

    type = "EC2"
  }

  service_role = "${aws_iam_role.aws_batch_service_role.arn}"
  type         = "MANAGED"
}

resource "aws_batch_job_queue" "example" {
  name                 = "example-queue"
  state                = "ENABLED"
  priority             = 1
  compute_environments = ["${aws_batch_compute_environment.example.arn}"]
}
```

These AWS Batch resources were contributed by Andrew Shinohara and Takao Shibata and are available as of AWS Provider 1.1.0.
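Jobs submitted to the queue above also need a job definition. Below is a minimal sketch using `aws_batch_job_definition` for a container job; the name, image, resource sizes, and command are placeholders:

```hcl
resource "aws_batch_job_definition" "example" {
  name = "example-job"
  type = "container"

  # Container settings are passed to AWS Batch as JSON.
  container_properties = <<EOF
{
  "image": "busybox",
  "vcpus": 1,
  "memory": 128,
  "command": ["echo", "hello"]
}
EOF
}
```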

»Amazon Cognito

Amazon Cognito is a service for managing user sign-up, sign-in, and permissions for mobile and web apps. Support for additional Amazon Cognito resources was added iteratively in AWS Provider 1.2.0 and 1.3.0. Terraform's AWS provider can be used to manage Identity Pools, the relationships between Identity Pools and IAM roles, and User Pools.

```hcl
resource "aws_cognito_user_pool" "example" {
  name = "example-pool"

  admin_create_user_config {
    invite_message_template {
      email_message = "Your username is {username} and temporary password is {####}. "
      email_subject = "FooBar {####}"
      sms_message   = "Your username is {username} and temporary password is {####}."
    }
  }
}

resource "aws_cognito_identity_pool_roles_attachment" "main" {
  identity_pool_id = "${aws_cognito_identity_pool.main.id}"

  role_mapping {
    identity_provider         = "graph.facebook.com"
    ambiguous_role_resolution = "AuthenticatedRole"
    type                      = "Rules"

    mapping_rule {
      claim      = "isAdmin"
      match_type = "Equals"
      role_arn   = "${aws_iam_role.authenticated.arn}"
      value      = "paid"
    }
  }

  roles {
    "authenticated" = "${aws_iam_role.authenticated.arn}"
  }
}
```

These Amazon Cognito resources were contributed by Gauthier Wallet and are available as of AWS Provider 1.3.0.
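The roles attachment above refers to an identity pool named `main` (and an IAM role named `authenticated`) that the snippet does not define. Below is a minimal sketch of the identity pool, assuming Facebook as the login provider; the app ID is a hypothetical placeholder:

```hcl
resource "aws_cognito_identity_pool" "main" {
  identity_pool_name               = "example_identity_pool"
  allow_unauthenticated_identities = false

  # Maps each login provider to its application ID (placeholder).
  supported_login_providers {
    "graph.facebook.com" = "123456789012345"
  }
}
```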

»Microsoft Azure Provider Improvements

As part of HashiCorp's collaboration with Microsoft, Terraform's Azure provider has seen many improvements over the last four months across a broad set of Azure products.

Full details on the changes in recent versions can be found in the Azure provider changelog.

»Azure App Service

Azure App Service (formerly known as Azure Web Apps) provides a fully-managed hosting environment for applications.

Azure provider 0.3.3 introduces initial support for Azure App Service, allowing management of App Service Plans and of App Services themselves. Additional App Service resources will be introduced in future releases.

```hcl
resource "azurerm_app_service_plan" "example" {
  name                = "example"
  location            = "${azurerm_resource_group.example.location}"
  resource_group_name = "${azurerm_resource_group.example.name}"

  sku {
    tier = "Standard"
    size = "S1"
  }
}

resource "azurerm_app_service" "example" {
  name                = "example"
  location            = "${azurerm_resource_group.example.location}"
  resource_group_name = "${azurerm_resource_group.example.name}"
  app_service_plan_id = "${azurerm_app_service_plan.example.id}"

  site_config {
    dotnet_framework_version = "v4.0"
  }

  app_settings {
    "ENVIRONMENT" = "production"
  }
}
```

»Azure Key Vault

Key Vault is a managed solution for securely storing keys and other secrets used by cloud apps and services. As of Azure provider 0.3.0, Terraform supports managing key vaults, certificates, keys, and secrets, allowing secrets to be managed as code.

```hcl
resource "azurerm_key_vault" "example" {
  name                = "example"
  location            = "${azurerm_resource_group.example.location}"
  resource_group_name = "${azurerm_resource_group.example.name}"

  sku {
    name = "standard"
  }

  tenant_id = "2e72bec8-f870-49af-b16a-cc1b0743ccdf"

  access_policy {
    tenant_id = "2e72bec8-f870-49af-b16a-cc1b0743ccdf"
    object_id = "c95fcfeb-05fe-49b1-9f42-68da17319a22"

    key_permissions    = ["get"]
    secret_permissions = ["get"]
  }
}

resource "azurerm_key_vault_key" "example" {
  name      = "example"
  vault_uri = "${azurerm_key_vault.example.vault_uri}"
  key_type  = "RSA"
  key_size  = 2048
  key_opts  = ["sign", "verify"]
}
```
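Secrets follow the same pattern as keys. Below is a minimal sketch storing a value in the vault above, assuming the identity running Terraform has been granted `set` permission on secrets (the access policy shown grants only `get`) and that the value arrives through a hypothetical variable. As with any secret passed through Terraform, the value is recorded in the state:

```hcl
variable "db_password" {} # hypothetical, supplied at plan/apply time

resource "azurerm_key_vault_secret" "db_password" {
  name      = "db-password"
  value     = "${var.db_password}"
  vault_uri = "${azurerm_key_vault.example.vault_uri}"
}
```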

»Azure Cloud Shell Integration

Azure Cloud Shell provides an administrative shell integrated into Azure Portal, with many pre-installed administrative tools that can be used to interact with Azure products and services.

In September, Microsoft announced that Terraform is now pre-installed in Cloud Shell. This provides a convenient way for Azure users to get started with Terraform, and gives more experienced users a convenient place to run Terraform without installing credentials on their local machines. Terraform in Cloud Shell is automatically configured to access Azure resources through your personal user account, avoiding the need to manually set environment variables, populate credentials files, or configure the azurerm provider.

»Google Cloud Provider Improvements

The Google Cloud Graphite Team has been hard at work expanding the features of Terraform's Google Cloud provider over the last four months. Recent releases have, amongst numerous other changes, added support for several Google Cloud features that are important for larger organizations with many Google Cloud projects.

For full details on all recent changes, please refer to the Google Cloud provider changelog.

»Shared VPC

Shared VPC support allows for a single Virtual Private Cloud to be shared across multiple projects within a single Google Cloud organization.

Larger organizations split their infrastructure and services across multiple projects in order to separate budgets, access controls, and so forth. Shared VPC permits this multi-project organization structure while allowing applications in different projects to communicate over a common private network.

Shared VPC configuration requires both a Shared VPC host project, which contains the networking resources to be shared, and one or more service projects that are granted access to the shared resources.

```hcl
# Host project shares its VPC resources with the service projects
resource "google_compute_shared_vpc_host_project" "example" {
  project = "vpc-host"
}

# Each service project gains access to the Shared VPC resources
resource "google_compute_shared_vpc_service_project" "example1" {
  host_project    = "${google_compute_shared_vpc_host_project.example.project}"
  service_project = "example1"
}

resource "google_compute_shared_vpc_service_project" "example2" {
  host_project    = "${google_compute_shared_vpc_host_project.example.project}"
  service_project = "example2"
}
```
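To illustrate what sharing enables, here is a sketch in which the host project owns a network and subnetwork, and an instance in the `example1` service project attaches to that subnetwork via `subnetwork_project`. The network names, region, zone, CIDR range, machine type, and image are assumptions:

```hcl
# Network and subnetwork live in the host project.
resource "google_compute_network" "shared" {
  project                 = "vpc-host"
  name                    = "shared-network"
  auto_create_subnetworks = false
}

resource "google_compute_subnetwork" "shared" {
  project       = "vpc-host"
  name          = "shared-subnet"
  region        = "us-central1"
  network       = "${google_compute_network.shared.self_link}"
  ip_cidr_range = "10.10.0.0/20"
}

# The instance belongs to the service project but uses the host's subnetwork.
resource "google_compute_instance" "app" {
  project      = "example1"
  name         = "app"
  zone         = "us-central1-a"
  machine_type = "n1-standard-1"

  boot_disk {
    initialize_params {
      image = "debian-cloud/debian-9"
    }
  }

  network_interface {
    subnetwork         = "${google_compute_subnetwork.shared.name}"
    subnetwork_project = "vpc-host"
  }

  # Attach only after the service project has been granted access.
  depends_on = ["google_compute_shared_vpc_service_project.example1"]
}
```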

»Project Folders

A further challenge for larger organizations is understanding the relationships between a multitude of different projects.

Folders serve as an additional layer of organization between organizations and projects, allowing projects within a particular organization to be arranged into a hierarchical structure that reflects relationships within the organization itself. Google Cloud provider 1.0.0 introduced the google_folder resource, allowing an organization's folder structure to be codified with Terraform. Managing an organization's projects and project folders within Terraform gives all of the usual benefits of infrastructure as code, allowing changes to be tracked over time in version control, and allowing complex updates to be automated as organizational structures change.

```hcl
locals {
  organization = "organizations/1234567"
}

resource "google_folder" "publishing" {
  display_name = "Publishing Dept."
  parent       = "${local.organization}"
}

# project_id is required and must be globally unique; these IDs are placeholders.
resource "google_project" "reader_exp" {
  name       = "Reader Experience"
  project_id = "reader-exp"
  folder_id  = "${google_folder.publishing.name}"
}

resource "google_project" "author_exp" {
  name       = "Author Experience"
  project_id = "author-exp"
  folder_id  = "${google_folder.publishing.name}"
}

resource "google_folder" "advertising" {
  display_name = "Advertising Dept."
  parent       = "${local.organization}"
}

resource "google_project" "ad_mgmt" {
  name       = "Ad Management"
  project_id = "ad-mgmt"
  folder_id  = "${google_folder.advertising.name}"
}

resource "google_project" "ad_delivery" {
  name       = "Ad Delivery"
  project_id = "ad-delivery"
  folder_id  = "${google_folder.advertising.name}"
}
```
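Because a folder's `name` attribute has the form `folders/{id}`, it can itself serve as the `parent` of another folder, so deeper hierarchies follow the same pattern. Below is a minimal sketch with a hypothetical sub-folder and project:

```hcl
# Sub-folder nested beneath the Publishing folder.
resource "google_folder" "publishing_web" {
  display_name = "Web"
  parent       = "${google_folder.publishing.name}"
}

resource "google_project" "web_frontend" {
  name       = "Web Frontend"
  project_id = "web-frontend-example" # hypothetical, must be globally unique
  folder_id  = "${google_folder.publishing_web.name}"
}
```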

»VMware vSphere Provider Improvements

Terraform's VMware vSphere provider was originally contributed by community member Takaaki Furukawa, and was improved by many more community contributors to build out a baseline of functionality.

Shortly after the Terraform v0.10.0 release the Terraform team at HashiCorp began directly investing in this provider, expanding its capabilities to include additional vSphere features and additional capabilities for existing resources. A theme of these new developments is to broaden Terraform's capabilities from management of individual virtual machines to allow codification of datacenter-level resources and inventory.

The following sections cover some of the highlights of the work over the last few months. Most of these improvements are already available in the 0.4.2 release of the provider, though version 1.0.0 will round out these features in the near future. For full details, please refer to the vSphere Provider changelog.

»vCenter Distributed Networking

As of vSphere Provider 0.4.2, Terraform has support for distributed virtual switches and port groups. Distributed virtual switches allow centralized management and monitoring of all virtual machines associated with a switch across several separate hosts, including high-availability failover, traffic shaping, and network monitoring.

The snippet below shows how a DVS and port group can be set up with Terraform, drawing data from the vsphere_datacenter and vsphere_host data sources:

```hcl
resource "vsphere_distributed_virtual_switch" "dvs" {
  name          = "terraform-test-dvs"
  datacenter_id = "${data.vsphere_datacenter.dc.id}"

  uplinks         = ["uplink1", "uplink2", "uplink3", "uplink4"]
  active_uplinks  = ["uplink1", "uplink2"]
  standby_uplinks = ["uplink3", "uplink4"]

  host {
    host_system_id = "${data.vsphere_host.host.0.id}"
    devices        = ["vmnic0", "vmnic1"]
  }

  host {
    host_system_id = "${data.vsphere_host.host.1.id}"
    devices        = ["vmnic0", "vmnic1"]
  }

  host {
    host_system_id = "${data.vsphere_host.host.2.id}"
    devices        = ["vmnic0", "vmnic1"]
  }
}

resource "vsphere_distributed_port_group" "pg" {
  name                            = "terraform-test-pg"
  distributed_virtual_switch_uuid = "${vsphere_distributed_virtual_switch.dvs.id}"
  vlan_id                         = 1000
}
```
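The DVS example reads `data.vsphere_datacenter.dc` and `data.vsphere_host.host.0` through `.2` without showing their definitions. A sketch of how they might be declared, assuming a datacenter named `dc1` and three hypothetical ESXi host names:

```hcl
variable "esxi_hosts" {
  # hypothetical host names
  default = [
    "esxi1.example.com",
    "esxi2.example.com",
    "esxi3.example.com",
  ]
}

data "vsphere_datacenter" "dc" {
  name = "dc1"
}

# One data source instance per host, addressable as host.0, host.1, ...
data "vsphere_host" "host" {
  count         = "${length(var.esxi_hosts)}"
  name          = "${var.esxi_hosts[count.index]}"
  datacenter_id = "${data.vsphere_datacenter.dc.id}"
}
```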

Along with the host-level resources for virtual switches and port groups, Terraform can now model a variety of different virtual networking use-cases.
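For comparison, here is a sketch of the host-level equivalents: a standard virtual switch and port group on a single host. The switch and port group names are assumptions, and the NICs `vmnic2`/`vmnic3` are chosen so they do not collide with the uplinks already assigned to the DVS above:

```hcl
# Standard (non-distributed) switch on the first host only.
resource "vsphere_host_virtual_switch" "switch" {
  name             = "vSwitchTerraformTest"
  host_system_id   = "${data.vsphere_host.host.0.id}"
  network_adapters = ["vmnic2", "vmnic3"]
  active_nics      = ["vmnic2"]
  standby_nics     = ["vmnic3"]
}

resource "vsphere_host_port_group" "pg" {
  name                = "PGTerraformTest"
  host_system_id      = "${data.vsphere_host.host.0.id}"
  virtual_switch_name = "${vsphere_host_virtual_switch.switch.name}"
}
```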

»Storage Resources

vSphere Provider 0.2.2 introduced support for managing both VMFS datastores and NAS datastores, which can be used to provide virtual machine image storage locations for hosts. VMFS datastores use physical disks locally available on a host, with an on-disk format optimized for efficient image storage, while NAS datastores allow the use of remote storage accessible via NFS.

```hcl
resource "vsphere_nas_datastore" "datastore" {
  name            = "terraform-test"
  host_system_ids = ["${data.vsphere_host.host.*.id}"]

  type         = "NFS"
  remote_hosts = ["storage.example.com"]
  remote_path  = "/export/vm-images"
}
```
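A VMFS datastore, by contrast, is built from local disks on a single host. Below is a sketch that uses the `vsphere_vmfs_disks` data source to discover candidate disks; the `filter` regular expression (matched against disk canonical names) is hypothetical and depends entirely on the host's hardware:

```hcl
data "vsphere_vmfs_disks" "available" {
  host_system_id = "${data.vsphere_host.host.0.id}"
  rescan         = true
  filter         = "mpx.vmhba1:C0:T[12]:L0" # hypothetical disk pattern
}

resource "vsphere_vmfs_datastore" "datastore" {
  name           = "terraform-test-vmfs"
  host_system_id = "${data.vsphere_host.host.0.id}"
  disks          = ["${data.vsphere_vmfs_disks.available.disks}"]
}
```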

»Resource Tagging

vSphere provider 0.4.0 introduced resources to manage tags and tag categories, and extended existing resources to allow assignment of tags.
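A sketch of a tag category, a tag, and its assignment through a resource's `tags` argument. The category and tag names are assumptions, and the datastore (mirroring the NFS example above with a different path) serves only as an illustrative tag target:

```hcl
resource "vsphere_tag_category" "environment" {
  name             = "environment"
  cardinality      = "SINGLE"      # at most one tag from this category per object
  associable_types = ["Datastore"]
}

resource "vsphere_tag" "production" {
  name        = "production"
  category_id = "${vsphere_tag_category.environment.id}"
}

resource "vsphere_nas_datastore" "tagged" {
  name            = "terraform-test-tagged"
  host_system_ids = ["${data.vsphere_host.host.*.id}"]
  type            = "NFS"
  remote_hosts    = ["storage.example.com"]
  remote_path     = "/export/vm-images-prod"

  tags = ["${vsphere_tag.production.id}"]
}
```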

»Virtual Machine Resource

The virtual machine resource has been revamped to support new options such as CPU and memory hot-plug, and support for vMotion. A number of issues around virtual device management have also been addressed. These improvements will be included in the forthcoming 1.0.0 release, to be announced in the coming weeks.

»HashiCorp Vault Provider Improvements

An increasing number of users are using Terraform for configuration of their HashiCorp Vault clusters, and to give other Terraform configurations access to credentials maintained in Vault. The initial release of Terraform's HashiCorp Vault provider focused on low-level Vault API functionality, allowing population and retrieval of generic secrets.

Vault Provider 1.0.0 expands this functionality to include higher-level resources and data sources to more easily use some of Vault's more complex backends. For full details, please refer to the Vault Provider changelog.

»AWS Auth Backend

Vault's AWS auth backend allows a Vault token to be automatically and securely issued for an EC2 instance or for arbitrary IAM credentials. This allows AWS to be used as a trusted third party, avoiding the need to distribute secrets to new instances manually. There are several details to configure when enabling Vault's AWS auth backend, many of which require the IDs of previously created AWS API objects. Terraform's ability to mix AWS Provider and Vault Provider resources in a single configuration can streamline this setup process by both creating the necessary AWS objects and passing them to Vault using Terraform interpolation. For example:

```hcl
resource "vault_auth_backend" "aws" {
  type = "aws"
}

resource "aws_iam_access_key" "vault" {
  user = "${var.vault_user}"
}

resource "vault_aws_auth_backend_client" "example" {
  backend = "${vault_auth_backend.aws.path}"

  # NOTE: secret_key value will be written to the Terraform state in cleartext;
  # state must be protected by the user accordingly.
  access_key = "${aws_iam_access_key.vault.id}"
  secret_key = "${aws_iam_access_key.vault.secret}"
}
```

The Vault provider has resources to manage the auth backend's client credentials (as shown above), the certificate used to verify an EC2 instance identity document, authentication roles for the backend, and STS roles.
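A minimal sketch of an authentication role for the backend above, assuming IAM-based authentication and a hypothetical `aws_iam_role.app` defined elsewhere. The argument names follow Vault Provider 1.0 (where `bound_iam_principal_arn` takes a single ARN); later provider versions may differ:

```hcl
resource "vault_aws_auth_backend_role" "app" {
  backend   = "${vault_auth_backend.aws.path}"
  role      = "app"
  auth_type = "iam"

  # Only callers authenticating as this IAM role may log in.
  bound_iam_principal_arn = "${aws_iam_role.app.arn}"

  # Vault policies attached to tokens issued through this role.
  policies = ["app"]
}
```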

»AWS Secret Backend

Vault's AWS secret backend is, in some sense, the opposite of the AWS auth backend: it allows AWS tokens to be securely issued and revoked for people and applications that hold a valid Vault token. This can be used, for example, to issue time-limited AWS credentials with constrained access policies to applications that are colocated on a single EC2 instance, rather than both applications sharing a single set of credentials for an associated instance profile. The Vault provider has resources to configure the secret backend itself and to create roles that clients may assume. As usual, Terraform can be used to manage both the Vault configuration and the underlying AWS objects it refers to. For example, an application might need access to an S3 bucket that is also managed by its Terraform configuration:

```hcl
resource "aws_s3_bucket" "example" {
  bucket = "vault-secret-backend-example"
}

resource "vault_aws_secret_backend_role" "app" {
  backend = "${vault_aws_secret_backend.aws.path}"
  name    = "app"
  policy  = "${data.aws_iam_policy_document.app.json}"
}

data "aws_iam_policy_document" "app" {
  statement {
    actions = ["s3:*"]

    # The bucket itself, plus the objects within it.
    resources = [
      "${aws_s3_bucket.example.arn}",
      "${aws_s3_bucket.example.arn}/*",
    ]
  }
}
```

Here we use Terraform's ability to dynamically construct AWS IAM policy documents so that we can easily grant the role access to only the specific S3 bucket the application needs, automatically populating this after creating the bucket.
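The role above refers to a `vault_aws_secret_backend` resource named `aws` that the snippet does not define. Below is a minimal sketch of that resource, assuming the credentials Vault uses to mint AWS tokens are passed in through hypothetical variables; as with the auth backend client, the secret key ends up in the Terraform state in cleartext:

```hcl
variable "vault_aws_access_key" {} # hypothetical
variable "vault_aws_secret_key" {} # hypothetical

resource "vault_aws_secret_backend" "aws" {
  # Credentials Vault itself uses to create and revoke AWS tokens.
  # NOTE: secret_key is stored in the Terraform state in cleartext.
  access_key = "${var.vault_aws_access_key}"
  secret_key = "${var.vault_aws_secret_key}"
  region     = "us-east-1"
}
```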

»AppRole Auth Backend

Vault's AppRole auth backend issues Vault tokens with particular capabilities to clients that present a role ID together with a secret ID, and each secret ID can be limited in how many times it may be used. A deployment orchestration system can, for example, create a one-time-use secret ID for a role and pass it to an application, allowing the application to obtain a Vault token from Vault's API on startup.

The Vault provider's support for managing roles and secret IDs can be used to issue a one-time-use secret ID for an instance before launching it:

```hcl
resource "vault_auth_backend" "approle" {
  type = "approle"
}

resource "vault_approle_auth_backend_role" "app_server" {
  backend            = "${vault_auth_backend.approle.path}"
  role_name          = "app_server"
  policies           = ["app_server"]
  secret_id_num_uses = 1
  secret_id_ttl      = 3600
}

data "vault_approle_auth_backend_role_id" "app_server" {
  backend   = "${vault_auth_backend.approle.path}"
  role_name = "${vault_approle_auth_backend_role.app_server.role_name}"
}

resource "vault_approle_auth_backend_role_secret_id" "app_server" {
  backend   = "${vault_auth_backend.approle.path}"
  role_name = "${vault_approle_auth_backend_role.app_server.role_name}"
}

resource "aws_instance" "app_server" {
  instance_type = "t2.micro"
  ami           = "ami-123abcd4"

  # Assumes that a program in the given AMI will read this and use it to
  # log in to Vault. The generated secret becomes useless once it has
  # been used once.
  user_data = <<EOT
role_id = ${data.vault_approle_auth_backend_role_id.app_server.role_id}
secret_id = ${vault_approle_auth_backend_role_secret_id.app_server.secret_id}
EOT
}
```
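The role above attaches a Vault policy named `app_server`, which the snippet assumes already exists. It could be managed in the same configuration with the `vault_policy` resource; the `secret/app_server/*` path below is a hypothetical example:

```hcl
resource "vault_policy" "app_server" {
  name = "app_server"

  # Vault policy document: read-only access to this app's secrets.
  policy = <<EOT
path "secret/app_server/*" {
  capabilities = ["read"]
}
EOT
}
```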

»Conclusion

This release of Terraform was packed full of great new features, enhancements, and provider updates. Thank you to all of the Terraform community members who helped make Terraform the leading tool for managing infrastructure as code.

Go download Terraform and give it a try!
