Service Discovery at Datadog

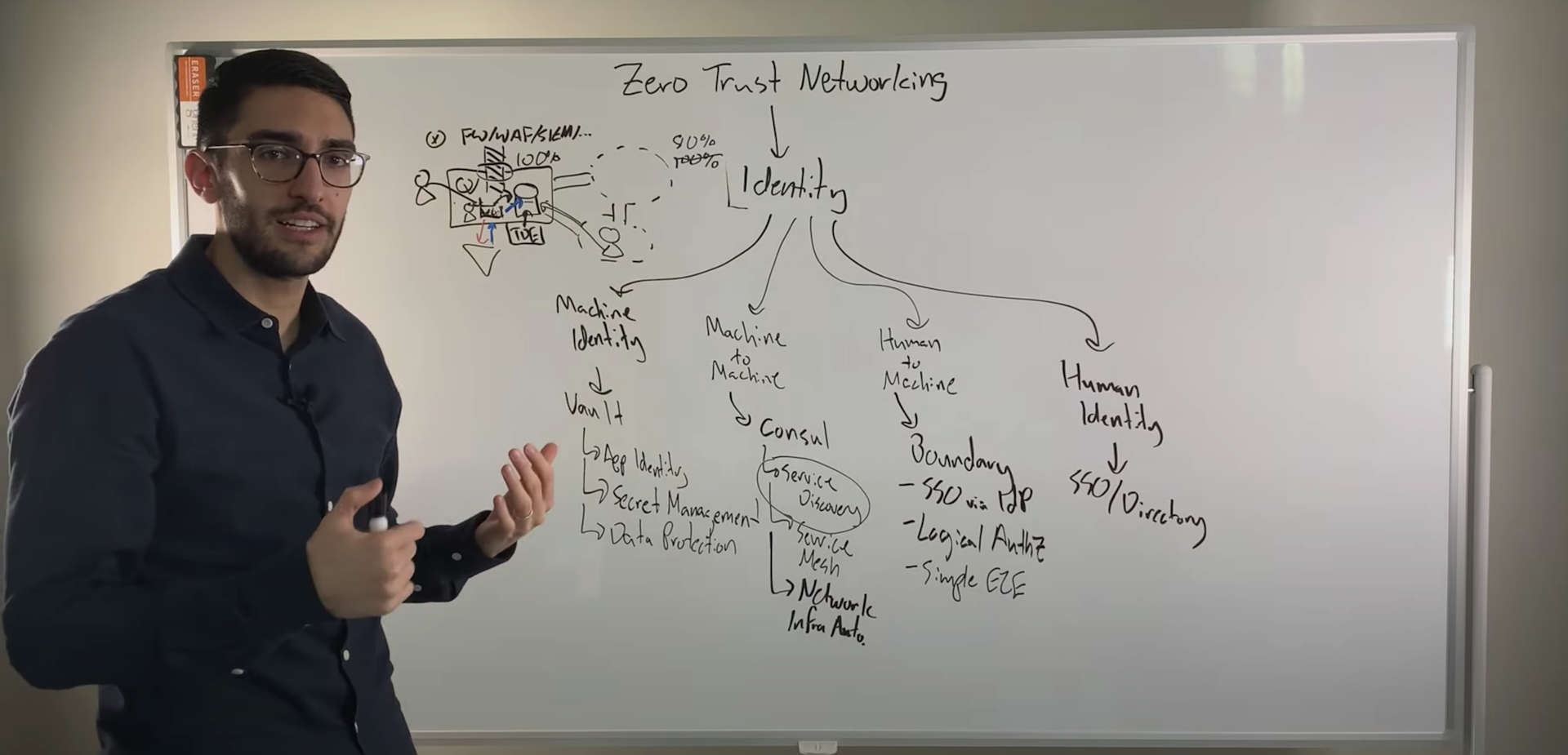

Datadog is a heavy user of HashiCorp tools, including Vault and Terraform. In this segment from the HashiConf EU keynote, hear how Datadog uses Consul in its approach to service discovery.

Datadog processes trillions of datapoints per day to provide its SaaS-based IT monitoring services. They're currently in the middle of a migration from VMs to Kubernetes, and HashiCorp's toolset has served them well in this regard by supporting both types of workloads.

In this segment of the HashiConf EU 2019 Opening Keynote, Datadog engineer Laurent Bernaille will show how his company is using Consul service discovery to make encryption and authorization across their heterogeneous VM and Kubernetes environments possible.

Speakers

Laurent Bernaille, Staff Engineer, Datadog

Transcript

Hello, everyone. I'm Laurent Bernaille. I'm a Staff Engineer at Datadog, and I'm very happy to be here today to share how we do service discovery in our infrastructure and the challenges we face.

I'm going to do a very quick introduction on Datadog, if you don't know who we are. We are a SaaS-based monitoring company. We do metrics, APM and logs, and other products such as synthetics, and many other products in the works. We have thousands of customers, hundreds of integrations, and just to give you an idea of scale—because this is where part of the challenges we have are coming from—we process trillions of data points a day, and to do that, we have tens of thousands of VMs. To make it even more complex, we are migrating from virtual machines to Kubernetes, which makes it even more challenging, as we're going to see just after.

Before diving into service discovery and the challenges we face, just a quick intro on how we've been using HashiCorp software for quite a long time now. First of all, we use Terraform and Packer heavily. We use Packer to build all our base images, and we use Terraform to configure all our cloud resources across different providers such as AWS and GCP. Today we have thousands of Terraform files, which together represent tens of thousands of lines of Terraform code, so we really rely heavily on these tools.

We also use Vault pretty heavily for secrets management, and I'm just going to focus on Kubernetes to just give you an idea of size and scale.

In our Kubernetes cluster today we use Vault to get secrets and to provide PKI services, so all our control plane services retrieve certificates using Vault. As you can see, we do 100 certificate signatures per hour or something. We also heavily read secrets from Vault inside Kubernetes because every time a pod starts, it's authenticating using its Kubernetes service account, getting a token from Vault, and then getting secrets.
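The pod startup flow described above (service account, then Vault token, then secrets) can be sketched against Vault's HTTP API. This is a minimal sketch, not Datadog's implementation. It assumes the Kubernetes auth method is mounted at its default path `kubernetes` and that secrets live in a KV v2 engine; the role name, mount name, and secret path passed in are hypothetical.

```python
import json
import urllib.request

# Default location where Kubernetes mounts the pod's service account token.
SA_TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def build_login_request(vault_addr, role, jwt):
    """Build the login call for Vault's Kubernetes auth method (default mount path assumed)."""
    body = json.dumps({"role": role, "jwt": jwt}).encode()
    return urllib.request.Request(
        f"{vault_addr}/v1/auth/kubernetes/login",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_secret_request(vault_addr, token, path, mount="secret"):
    """Build a KV v2 read; 'mount' and 'path' are hypothetical examples."""
    return urllib.request.Request(
        f"{vault_addr}/v1/{mount}/data/{path}",
        headers={"X-Vault-Token": token},
        method="GET",
    )


def fetch_secret(vault_addr, role, path):
    """Run the flow: read the service account JWT, log in, then read a secret."""
    with open(SA_TOKEN_PATH) as f:
        jwt = f.read().strip()
    with urllib.request.urlopen(build_login_request(vault_addr, role, jwt)) as resp:
        token = json.load(resp)["auth"]["client_token"]
    with urllib.request.urlopen(build_secret_request(vault_addr, token, path)) as resp:
        # KV v2 nests the secret payload under data.data.
        return json.load(resp)["data"]["data"]
```

Because every pod start triggers one login and at least one read, the request rate on Vault scales with pod churn, which is why read volume inside Kubernetes is high.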

Datadog's service discovery use case

Let's dive now into service discovery. As I was saying before, we have those virtual machines and Kubernetes clusters, and this makes service discovery very challenging. This is a quick representation of what we have. Before Kubernetes, we had a large set of virtual machines, like tens of thousands, and service discovery was local to this set of machines. Now we are migrating to Kubernetes so we're introducing Kubernetes clusters.

Because we have such a huge scale, we cannot have a single Kubernetes cluster, we have multiple ones. Also, we're providing our services in different regions. We have these different infrastructures of virtual machines, Kubernetes clusters, across different cloud providers and different regions. This raises quite a bunch of challenges.

The first one, for instance, is imagine your workloads are running in cluster 2, so the B example in the diagram, and you want to reach a service that's on a VM. In that case, you can't rely on Kubernetes services, which is what we use inside the cluster. So we need a solution to find A, for instance.

Another example would be that you're on a classic VM and you want to reach a service inside Kubernetes. You want to say, "Where is C?" and you can see that normal service discovery inside the VM is not going to help you find the service that's running inside Kubernetes.

We also have the issue between clusters, of course, because when you're inside a Kubernetes cluster, you have all these nice features for service discovery built into Kubernetes, but it doesn't allow you to do cross-cluster. Even worse, sometimes you have services that are deployed across different clusters, and this is the B example on this diagram where when you want to reach B from A, you're actually reaching services that are spanning two different Kubernetes clusters. In addition to that, sometimes we have requirements when we need encryption and authorization, which makes it even more challenging.

In addition to these features, we have a very big scalability challenge. Just to give you an idea of what we need to be able to achieve, we need to be able to address:

- Thousands of services
- Tens of thousands of nodes
- Hundreds of thousands of pods

So this is large, and it is very tricky to make it work.

The first thing is we needed the control plane to be efficient, because as you can imagine, if you're distributing this complex topology information across all these nodes, you want it to be fast. For instance, if an application is going down, you need the endpoints to be updated very fast all over your infrastructure. By fast, I mean within a few seconds. Also, you need the traffic generated by a single event of this type to be small enough not to overload the control plane. Finally, you can't get all the topology information on all nodes, because otherwise it's going to consume too much memory. Imagine you have an Envoy running on each node, and each Envoy has a full view of the whole topology: you can imagine how much memory it's going to use.
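The memory concern can be illustrated with a small sketch: instead of handing every node the full endpoint table, a control plane sends each node only the endpoints of the services its local workloads depend on. The data structures, service names, and addresses below are hypothetical, and this does not describe how Consul or Envoy implement scoping.

```python
from typing import Dict, List, Set

# Full topology: service name -> list of "ip:port" endpoints (hypothetical data).
Topology = Dict[str, List[str]]


def scoped_view(topology: Topology, dependencies: Set[str]) -> Topology:
    """Return only the part of the topology a node needs."""
    return {svc: eps for svc, eps in topology.items() if svc in dependencies}


def endpoint_count(view: Topology) -> int:
    """Count endpoints in a view, a rough proxy for memory held by the local proxy."""
    return sum(len(eps) for eps in view.values())


topology = {
    "intake": ["10.0.0.1:8080", "10.0.0.2:8080"],
    "metrics-query": ["10.0.1.1:9090"],
    "logs-index": ["10.0.2.1:9200", "10.0.2.2:9200", "10.0.2.3:9200"],
}

# A node whose workloads only call "intake" holds 2 endpoints instead of 6.
node_view = scoped_view(topology, {"intake"})
```

The same filter also limits update traffic: a change to `logs-index` endpoints does not need to be pushed to a node that never calls that service.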

In addition to control plane efficiency, we also need the data plane to be efficient. By this I mean we need the overhead of doing service-to-service communication to be low enough so it doesn't impact your application. As you can imagine in our case, the volume of traffic we get is pretty heavy.

Datadog's approach to the problem

Here is our approach: how we've done it in the past, how we're doing it today, and what we plan to do in the future. Before migrating to Kubernetes, we were deploying a Consul agent on every node. So services were discovering each other using Consul, and that was working pretty well, even if it was sometimes challenging given the scale we're running at. In Kubernetes clusters, we use Kubernetes services, which is a logical and simple way to do it. However, we also need to solve the issues I was mentioning before. For instance, we need to address services that are running on instances, and for this we use Consul DNS.

We run a Consul agent inside Kubernetes, and we use Consul DNS to reach services running on VMs. But we also need to solve cluster-to-cluster and VM-to-cluster communication. The way we do it today is we rely on ingresses and DNS. Basically, we publish DNS records for services in cloud provider DNS, and we access these services through an ingress.
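As an illustration of reaching a VM-hosted service through Consul DNS from a pod: Consul's DNS interface answers service lookups of the form `<service>.service.consul`, optionally with a datacenter as in `<service>.service.<datacenter>.consul`. The sketch below assumes the pod's resolver forwards the `.consul` domain to a Consul agent; the service name `intake` and datacenter `dc1` are hypothetical.

```python
import socket
from typing import List, Optional


def consul_dns_name(service: str, datacenter: Optional[str] = None, domain: str = "consul") -> str:
    """Build the Consul DNS name for a service lookup."""
    parts = [service, "service"]
    if datacenter:
        parts.append(datacenter)
    parts.append(domain)
    return ".".join(parts)


def resolve(service: str, port: int, datacenter: Optional[str] = None) -> List[str]:
    """Resolve a service to IP addresses; needs a resolver that forwards .consul to Consul."""
    name = consul_dns_name(service, datacenter)
    infos = socket.getaddrinfo(name, port, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})
```

A caller inside a pod would use something like `resolve("intake", 8080)` and connect to one of the returned addresses.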

So, it kind of works, but it doesn't solve all the issues I was mentioning before. There's no authentication, no encryption, and using DNS this way to do service discovery is not really efficient because propagation time can be a bit long.

Datadog's future plans for multi-platform service communication

In the future, what we plan to do is use a service mesh to solve all this cross-cluster and VM-to-cluster communication, and we're really looking forward to all the features Mitchell announced before because they should be able to help us solve the issues I was mentioning.

In conclusion, as you grow and as your topology gets more complicated, service discovery gets pretty tricky, so we really believe that service mesh is a good answer to these questions, and we're really looking forward to new and efficient solutions to this problem.

Watch the full keynote for HashiConf EU 2019