Service Mesh With Consul Connect and Nomad 0.10

HashiCorp Nomad engineer Nick Ethier introduces Nomad 0.10's networking features, which help keep communication secure when integrating with a service mesh.

While Nomad has integrated with Consul for service-to-service communication and other community tools like Traefik and Fabio have helped get traffic from outside of the cluster to the services inside the cluster, keeping service-to-sidecar communication secure in a service mesh has been difficult.

Nomad 0.10, which was unveiled in the HashiConf EU 2019 keynote, solves this problem by introducing shared namespaces. This gives you an isolated network for every task group within Nomad so that an attacker couldn't just masquerade as another service by using a proxy in the shared environment.

Nomad 0.10 also introduces native Consul Connect integration, so that you don't have to manually deploy sidecar proxies.

Speakers

Nick Ethier, Software engineer, Nomad, HashiCorp


Transcript

I can tell we’re here for a Nomad talk because you all have been packed very efficiently. My name is Nick Ethier. I’m a software engineer at HashiCorp working on Nomad. My pronouns are he/him. And when I’m not working on Nomad, I am an embarrassing dad and a hobby farmer, which I think is where the “goat dad” moniker came in.

A look back at Nomad’s history

Let’s look back a little bit. On screen you see the last 3 years of releases in Nomad. About this time 3 years ago, we introduced Nomad 0.4. It came with Nomad Plan. There’s a lot of features here that Nomad users love and use every day: Nomad deployments, improved node draining, integration with Vault and Consul, a really slick web UI, and in the latest release, 0.9, pre-emption and affinities and spread and a lot of great features.

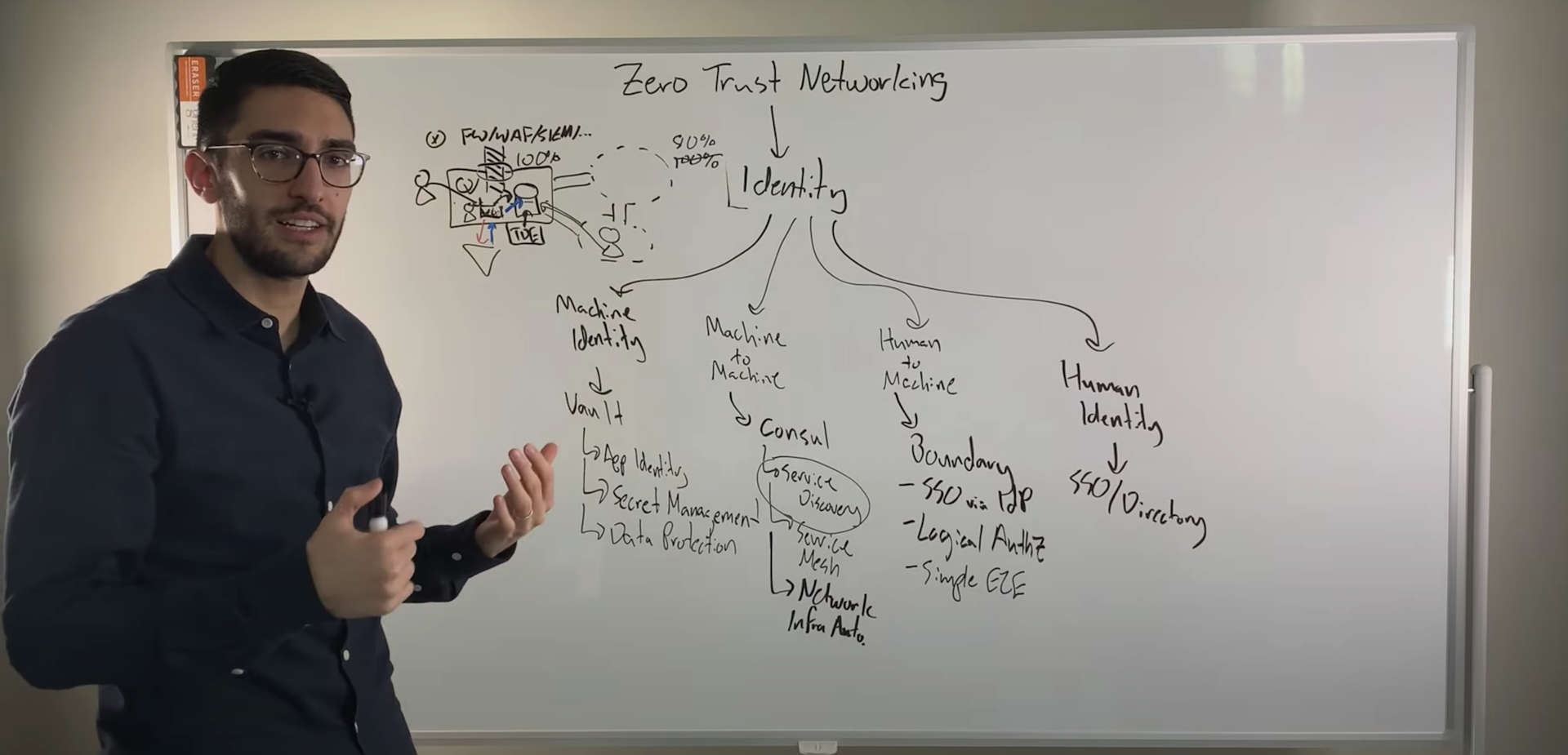

But throughout all this, there haven’t been any improvements to networking, no changes to how networking is orchestrated. And that’s on purpose. Nomad is meant to be very simple to use. We wanted to keep networking very simple. And Consul solved most of those problems in large part. Let’s look at those networking problems which you have when you’re using a cluster orchestrator:

Service-to-service communication

External-to-service communication

Service-to-sidecar communication

First is service-to-service communication. This is: Service A needs to talk to an instance of Service B. There could be 1, 10, 100 of those instances. We need to find them. The Consul integration has solved this problem really well and continues to do so.

Second is external to service. The new buzzword here is “ingress.” But this has always been a problem in cluster orchestrators: How do you get traffic from outside of the cluster to the services inside the cluster? There are many great community tools that have existed for a while now that users have been using to do this, including Traefik and Fabio, just to name a few.

A new problem arose in the last few years: service to sidecar. We’ve seen this with many great open-source projects like Ambassador, Consul Connect, etc. But a problem in Nomad is that you can’t use these very securely. And that’s because this sidecar pattern relies on secure communication isolated from the rest of the node for tasks running together. And in Nomad today all of those tasks just run on the host.

Shared network namespaces

You can see, in this example, there’s an application that wants to talk to a Redis cluster through a proxy. And maybe that proxy adds security authentication, verifies that the service is really who they say they are. And you can’t do this when everything’s shared because anything could essentially masquerade as another service by using one of those proxies. I’m happy to announce in Nomad 0.10 we’re going to introduce shared network namespaces, and that’s coming soon.

Let’s look at how this works. In your job file we’ve taken that network stanza that used to be in the resources stanza inside of a task and we’ve made it available in the group stanza. So just like before, you can define ports, but we’ve added a new field, this mode field. In this example we have bridge mode.

There are a few different modes that Nomad will come with. Bridge gives you that shared networking namespace. It creates a virtual interface and bridges it with the host, and it creates some rules in the firewall so we can have NAT out to the world inside the cluster. And then we also are supporting port mapping so you can forward ports from the host inside of your namespace.

The port stanza’s new "to" field

This port stanza is the same as it used to be except we’ve added another field to it, this to field. So this port web stanza is saying, “Allocate a port with the label web. I want to assign it to Port 80 on a host somewhere.” That’s what that static variable or label is. “And then I want you to map it into my namespace to Port 8080.” And that’s what that to field does.
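As a rough sketch of what a job file like the one described here might look like, the fragment below puts the network stanza at the group level, selects bridge mode, and maps host port 80 to port 8080 inside the namespace. The job, group, task, and image names are placeholders and do not come from the talk.

```hcl
job "example" {
  datacenters = ["dc1"]

  group "web" {
    # The network stanza now lives at the group level, so every task
    # in this group shares one network namespace.
    network {
      mode = "bridge"

      # Allocate a port labeled "web": port 80 on the host,
      # forwarded to port 8080 inside the namespace.
      port "web" {
        static = 80
        to     = 8080
      }
    }

    task "server" {
      driver = "docker"

      config {
        # Hypothetical image, assumed to listen on 8080.
        image = "example/web-server:latest"
      }
    }
  }
}
```

Leaving out `static` would ask Nomad to pick a dynamic host port while still forwarding it to 8080 inside the namespace.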

Here’s a diagram to see how this works. There is an allocation that is running with an IP address that’s different than the host IP address, completely isolated, and it’s binding to Port 8080, and we’re going to forward that to the host IP at Port 80.

The other thing we’ve done is we’ve taken the service stanza and made it available as well in the group stanza. This is because your task group becomes the base unit of networking now. Your service can be composed of other tasks running inside this task group. This brings a lot of other benefits. You can have script checks now that run against different tasks and help report the health of that service. This service stanza will also register the correct port with Consul, so you’ll get the port on the host that’s listening. So other services inside your cluster will correctly communicate through those forwarded ports.
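A minimal sketch of a group-level service, assuming a port labeled `web` as in the port-mapping example; the service and group names are illustrative only, and the tasks are omitted for brevity.

```hcl
group "web" {
  network {
    mode = "bridge"

    port "web" {
      static = 80
      to     = 8080
    }
  }

  # The service is declared on the group rather than on a single task.
  # Referencing the port label registers the host-side port with Consul,
  # so other services reach this one through the forwarded port.
  service {
    name = "web"
    port = "web"
  }
}
```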

I’m happy to also say we support shared networking namespace across drivers. So you can have a Docker driver or a Docker task and an exec task in the same task group, and they will still share the same networking namespace, which is really exciting.

I’m also happy to say that we want to support full backwards compatibility with 0.9. If you bring the jobs that you had in 0.9 and launch them in 0.10, they will operate exactly the same. All of these new features are opt-in.

A demo on a Nomad cluster

I want to do a demo of that real quick. I’m going to drop this in my clipboard and we have a Nomad cluster here. There are no jobs, so let’s go ahead and run a new job.

This is a very simple application that’s going to launch Redis and two tasks, all in the same task group. We have a producer task and a consumer task. And the producer at this time is just going to use Redis as a queue to send a message that says, “Hello from producer.” We’re going to use this bridge network. This is going to be an isolated network namespace. You’ll notice the producer and consumer run as raw exec tasks, and they’re using a path on my local machine. The Redis task is using a Docker image.
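The demo job file itself is not reproduced in the transcript. The sketch below is a guess at its general shape: one group in bridge mode, a Docker task for Redis, and two raw exec tasks. The job name, image tag, and binary paths are all assumptions.

```hcl
job "pubsub" {
  datacenters = ["dc1"]

  group "app" {
    # One isolated network namespace shared by all three tasks.
    network {
      mode = "bridge"
    }

    task "redis" {
      driver = "docker"

      config {
        image = "redis:5" # assumed image tag
      }
    }

    task "producer" {
      driver = "raw_exec"

      config {
        # Hypothetical path; the talk only says the binary lives on
        # the speaker's local machine.
        command = "/path/to/producer"
      }
    }

    task "consumer" {
      driver = "raw_exec"

      config {
        command = "/path/to/consumer" # hypothetical path
      }
    }
  }
}
```

Because the tasks share a namespace, the producer and consumer can reach Redis on the loopback interface at port 6379 even though they run under different drivers.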

Let’s go ahead and plan this. Everything looks good. We’re going to schedule everything correctly. Now this is all working and spinning up. We can look at Redis and check out the logs and see that it is accepting connections at 6379. If I drop back into the terminal on my machine and try to find something listening on that port, there’s nothing there because it’s not in the host namespace.

We can also hop back over and check out the logs for that consumer, and we can see that we’re getting these messages, and this is all happening PubSub over the same network namespace over the loopback interface.

The prior security issues with Nomad in a service mesh

Let’s jump back to service-to-service communication. Consul has always solved the discovery of these services in your cluster. But there have always been several options to do the plumbing and to route things to the right place. Some of those options included setting up DNS and using Consul’s DNS interface. You could use a template stanza and template out those services into a configuration file and reload your application. There was native integration, where your application just spoke to Consul directly. And then there are those proxies that have been integrated with Consul, like Traefik and Fabio, as I said before.

But all of these failed to solve the problem of service segmentation. What I mean is that, if one of those services is compromised, you essentially have full range of the whole network. The attackers can hit any node they want. They can even ask the service registry in some cases where those services are. In essence you’ve let a fox into the henhouse, or in my case, my 2-year-old son.

Now the way I keep my chickens safe on my farm is I keep them in a coop and in a run. So I keep them segmented from anything where predators could attack them. And funny enough, this is sort of how enterprise network security works, in a very simplified way. You have a load balancer that sits at the edge of your network that load balances traffic through a firewall, usually with static ports and addresses to your applications. Your applications can talk to each other, usually only a few of them in this monolith world. And then they may need to talk to a database that’s in a protected segment of your network. So they again need to go through another firewall, usually with static port and IP again, to reach the database.

Native Consul Connect integration

But as we get into the Wild West of cloud-native and microservice architecture, this becomes really hard to manage. As a former operator myself, I look at that and say, “That looks like a nightmare to try and manage security for.” I mean, that’s the face (pointing to unhappy dog on screen) I make when I try and manage microservice infrastructure. Who here has made that face before?

Our solution to this is Consul Connect, which we’ve heard a lot about these last 2 days. Consul Connect solves this problem a couple of different ways. First is its service access graph. These are those intentions that you may have heard of. And this allows you to say, on a service level, “Service A is allowed to talk to Service B but cannot talk to the database.” This gives us our authorization. But we still need authentication. We still need to know Service A is really Service A.

This is why Consul Connect also has a certificate authority. A mutual TLS connection happens between those 2 services, and we can guarantee from both ends that Service A is talking to me and is really Service A, and I’m really talking to Service B because I can verify their certificate on that end.

Consul Connect also supports a native integration. There is an API library that you can integrate directly into your application. But typically the way that most people solve this is through this sidecar pattern. This is why having network namespace support in Nomad is so crucial to having a very secure service mesh solution. Again, in this example, we have a proxy task and an application task, and then that proxy task is going to get its configuration through Consul.

And to do that, all you have to do is drop this Connect stanza into your service stanza. And this sidecar service stanza lets you have the default settings to launch a task in Nomad that will make your task Connect-enabled.
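A sketch of that opt-in, assuming a Redis service listening on 6379 inside the group's namespace; the service name and port are illustrative.

```hcl
service {
  name = "redis"
  port = "6379"

  # An empty sidecar_service block accepts the defaults: Nomad injects
  # and manages a sidecar proxy task for this service.
  connect {
    sidecar_service {}
  }
}
```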

This is really great because Nomad will launch Envoy for you, and manage it, bootstrap it, make sure the configuration is retrieved from Consul correctly. All for you. You don’t have to think about it. And this task is injected into your task group when it’s submitted.

You need to also declare who you want to talk to, what services this task is going to talk to. This is because Envoy needs to create a unique port for each of those upstream tasks. In this example we’re defining an upstream. We want to talk to Redis. The proxy is going to bind to localhost 6379. It looks like a local Redis instance, just like in that previous example. But what’s really going to happen is you’re going to have a complete end-to-end secure encryption between the application and that Redis instance.
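A sketch of an upstream declaration for the calling side, assuming the destination is registered in Consul as `redis`; the `app` service name and its port are hypothetical.

```hcl
service {
  name = "app"
  port = "8080" # hypothetical port the application listens on

  connect {
    sidecar_service {
      proxy {
        # The sidecar listens on localhost:6379 inside the namespace
        # and carries traffic to the "redis" service over mutual TLS.
        upstreams {
          destination_name = "redis"
          local_bind_port  = 6379
        }
      }
    }
  }
}
```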

You get a few new environment variables added into your application runtime. When you launch a task, you’ll notice we have these upstream environment variables for each upstream you define. This lets you programmatically configure applications to talk to upstreams. We plan to make that local bind port setting optional and have Nomad just find a random port that’s available inside your network namespace for you. It all happens automatically for you.
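A sketch of consuming one of those variables, assuming an upstream named `redis`. The `REDIS_ADDR` variable, task name, and image are hypothetical; the `NOMAD_UPSTREAM_ADDR_<name>` form follows the naming used in Nomad's Connect documentation.

```hcl
task "app" {
  driver = "docker"

  config {
    image = "example/app:latest" # hypothetical image
  }

  env {
    # Interpolated to the local address of the sidecar listener for
    # the "redis" upstream, for example 127.0.0.1:6379.
    REDIS_ADDR = "${NOMAD_UPSTREAM_ADDR_redis}"
  }
}
```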

A Consul Connect demo

I want to do one more demo for you, this time with Consul Connect. This is a diagram of what I want to set up. We’re going to have 2 allocations, a dashboard and an API that that dashboard talks to. And they’re going to communicate through Consul Connect, and we’re going to connect to that dashboard through a forwarded port.

I’ve got a Nomad cluster here. I’m going to drop this job file there. And let’s look through it. It’s a little bit more complicated than the last one. We have one task group here for the API. It’s operating in bridge mode again. We’re defining a service. We’re just calling this count API because this is a counting dashboard, and we’re statically defining the port here. And that’s because we don’t want the service port to be a port on the host, because we’re using Consul Connect. Having the port here lets this sidecar know what port to bind to when it needs to talk to your application over localhost.

We have the service defined and then we jump into our task. This is just a Docker task with a standard image. Then we have another group defined in this job, the dashboard, and it’s also in bridge mode, so it’s going to be isolated again. We’re going to allocate a port on the host, label it HTTP. I’ve got a static port here just to make it easier for us to use this, but there’s no reason it couldn’t be dynamic. And we’re going to port map it into 9002.

We’re going to set up this service stanza so we can have Consul Connect support. It’s running at Port 9002 inside the namespace. Let’s set the Connect stanza up to have an upstream for the count API, and we’re going to bind locally on Port 8080. The task of this dashboard looks like this. We define an environment variable that this application expects to be able to reach this counting service, just that loopback for 8080. Let’s go ahead and plan this.
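Pieced together, the job walked through here might look roughly like the sketch below. It is modeled on the counting-dashboard example from Nomad's Connect guide; the image names, the API port 9001, and the static host port value are assumptions rather than details stated in the talk.

```hcl
job "countdash" {
  datacenters = ["dc1"]

  group "api" {
    network {
      mode = "bridge"
    }

    service {
      name = "count-api"
      # Port inside the namespace, not a host port; the sidecar uses
      # it to reach the application over localhost.
      port = "9001"

      connect {
        sidecar_service {}
      }
    }

    task "web" {
      driver = "docker"

      config {
        image = "hashicorpnomad/counter-api:v1" # assumed image
      }
    }
  }

  group "dashboard" {
    network {
      mode = "bridge"

      # Host port forwarded to 9002 inside the namespace. The static
      # value is an assumption; it could also be dynamic.
      port "http" {
        static = 9002
        to     = 9002
      }
    }

    service {
      name = "count-dashboard"
      port = "9002"

      connect {
        sidecar_service {
          proxy {
            upstreams {
              destination_name = "count-api"
              local_bind_port  = 8080
            }
          }
        }
      }
    }

    task "dashboard" {
      driver = "docker"

      env {
        # The dashboard reaches the API via the sidecar on loopback:8080.
        COUNTING_SERVICE_URL = "http://${NOMAD_UPSTREAM_ADDR_count_api}"
      }

      config {
        image = "hashicorpnomad/counter-dashboard:v1" # assumed image
      }
    }
  }
}
```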

What’s really interesting about this plan is, not only do we have our 2 task groups, which will be created as allocations and the tasks associated with them, but you’ll see there’s an extra task added to each of these task groups. When Nomad injects the sidecar into your application, you know about that at plan time, so you can see every change that’s going to happen to your application. And that’s something that’s really exciting for me.

Let’s look at the API first. This API is starting up. You see we have this Nomad Envoy task available now, and we’ve got a little icon here to denote that it’s a proxy task that we’ve injected. You also have the standard task events that have happened for the task, if you’re not familiar with Nomad. So the client received it. It built the directory, and started it up. We can look at the logs here. We have it set in debug mode right now. Envoy will log to standard error. Everything looks great there. Let’s jump back up and look at this dashboard.

Again, we have this Nomad Envoy task that’s part of this task group too. And you can see we have the addresses that are associated with it. There is a port that we allocate for the Nomad Envoy task so that those Nomad proxies are port-forwarded to the host. And then behind the scenes we register it with Consul with the correct port. So those proxy instances know how to talk to each other.

This dashboard is running on Port 28080. Let’s find the client that it’s on. This is the client server. And we can grab the IP from its metadata. This is just a very simple dashboard. You may have seen this before if you’ve seen some of the other Consul Connect demos. This dashboard, in real time, updates the count from that backend count API. Let’s see if I can kill it.

I’m going to connect to this cloud instance and we’re going to make this fail. We’ll try and kill some tasks and see if we can get this dashboard to crash.

While that’s going, we might be able to crash it from the Nomad dashboard. Let’s try that. This is the dashboard. It needs this Nomad Envoy proxy task. Maybe if we restart it, we can get something to happen here. I think it restarted too fast. Dang it.

I seem to have lost that. Let’s kill the task and see if we can do it that way. Let’s take the allocation itself, and let’s restart it. Bye-bye, allocate. There we go. We disconnected. Oh, it reconnected! Dang it!

Let’s try the API. Maybe we can kill the API. This is the API. Restart the API. No, come on. Well, we reset the counter, that’s something. What’s funny is, I’m just trying to show you how powerful this is to have Nomad managing this Envoy proxy for you. Anything happens to it, Nomad will take care of it and restart it as needed.

What if this bridge networking mode isn’t enough for you? What if you run on bare metal and you need to do something special with a Layer 2 network, or maybe you have this overlay networking vendor that has sold you that this is the future and you need to integrate with that. What do you do? Because this won’t work.

Coming to Nomad: CNI

In a future release of Nomad, we plan to integrate CNI directly with Nomad. CNI stands for Container Network Interface, and it provides a common interface that network vendors can use to develop plugins that, given a network namespace, can create the interfaces, the routing rules, the iptables rules, whatever’s necessary to connect to the network. This is a very simple example here. This just says we have a bridge network we want to join. We’ve given it a name. We say we want to create a default gateway so we can route out, and we want it to masquerade as the host IP. Then the iptables rules are all set up. There are some IP Address Management plugins as well. We’re going to use a host local one that allocates an IP address that’s unique to that host out of the given range.

The way this works in Nomad is Nomad creates your network namespace for the allocation. Then it calls the CNI plugin, with the configuration as well as where the handle for your namespace is. That plugin can take all that information and create what is needed for your application to connect to the network.

There are many CNI plugins that the CNI community maintains, and there are many listed here. You can do ipvlan, macvlan. These are all great. If you need an address from DHCP, you can use the DHCP IPAM plugin. And then there are also some meta plugins. You can chain plugins together so the output goes into the input of the next plugin. That’s where these meta plugins come in. The port map one is really interesting because that is what does mapping of ports into a network namespace.

The bridge implementation of Nomad is just a CNI configuration under the hood. We make use of the bridge plugin, the host local IPAM plugin, and the firewall and port map meta plugins to set things up. What’s really cool about this is that, because Nomad will build the correct arguments for this port map plugin, someone who needs to use CNI can come along and just add this port map plugin as part of their plugin list, and you get that port mapping with that “To” variable from a port stanza for free. It will just work with your CNI application. This opens up a ton of really exciting possibilities for Nomad to integrate with many of the new and upcoming virtual networking solutions.

Consul Connect Mesh Gateways

Finally, I want to talk about Consul Connect gateways. Gateways are very easy to deploy on Nomad. Eric has a really great demo downstairs at the booths that uses gateways between a Kubernetes and a Nomad cluster to fail things over to a Nomad cluster through a gateway or do traffic splitting and things like that. They’re very easy to deploy right on Nomad.

We’ve talked about work that we’ve done here the last few months, but let’s look at the future for a moment. If you’ve used Nomad before, you’ve used a deployment. Nomad can roll out changes to your application. It can also do a canary deployment that lets you create 4 instances of your new application, verify things work, and roll forward.

As we continue to integrate Nomad with these Consul Connect features, we could do things like traffic splitting with that deployment. So you have a canary deployment, and as that deployment is created, Nomad creates a traffic-splitting rule to split traffic over. As it builds more confidence, more and more traffic could be split over to the new side, and you still could make use of Nomad to pause deployments and roll back deployments. All of that could be integrated with Consul Connect to give you a very seamless networking story with your deployments.

I hope you all have enjoyed this presentation and these demos. Everything I’ve shown today will be available in open-source Nomad in 0.10, and I’m happy to announce that we’ve prepared a technology preview build for you that includes the network namespacing and the Consul Connect features. It’s available at nomadproject.io under the Guides section. Thank you.