Getting Terraform into Production with Terraform Cloud

A guide with tips and resources for getting Terraform into production using Terraform Cloud.

Introduction and Requirements

It’s critical to learn the basics of HashiCorp Configuration Language (HCL) and Terraform commands before getting started in production with Terraform. Once you’ve learned those, you can begin setting up various parts of your infrastructure in production.

Preparing infrastructure for production workloads requires much more than simply writing HCL, though. Factors like collaboration, security, extensibility, and scalability come into play. Ideally, you can set up a system once and tweak it as needed, without having to rip it up and start over as your needs change over time. HashiCorp built Terraform Cloud to accommodate using Terraform in production. It facilitates a collaborative Terraform workflow that can integrate into your existing DevOps pipeline. Start off for free, and scale up your plan only as your team grows.

This guide walks you through the first basic steps of setting up a Terraform project in production using Terraform Cloud. After reading this guide, you should know how to provision production infrastructure, and have a basic system in place that can accommodate the needs of other roles across your organization.

You will need:

* Basic knowledge of HCL, including Terraform modules and Terraform state. You have written some HCL before, and understand the basics of Terraform configuration files. If you are not familiar with HCL yet, learn more using these Terraform tutorials.
* A Terraform Cloud account.

Consider the teams who will eventually use your projects: operators, architects, developers, and security/compliance.

When you’re first getting started, you’re likely working exclusively with DevOps practitioners. This is ideal. It’s important to recognize that while Terraform allows you to provision infrastructure as code, there is no substitute for starting off with domain expertise in cloud operations.

For the sake of this document, we’re defining future Terraform stakeholders as follows:

Operators are the most frequent users of Terraform. They provision, manage, and destroy the infrastructure used by an organization.

Architects are the operators who are responsible for determining how global infrastructure is divided and delegated to the teams within the business unit. This team also determines how Terraform configurations are broken apart and mapped, and sets organization-wide variables and policies.

Architects may be responsible for initially setting up Terraform at an organization, or may be brought in later to bring order to existing configurations.

Developers consume the infrastructure provisioned by Terraform. In some cases, they may use private modules to provision infrastructure themselves. This is an advanced use case, but it is helpful to understand upfront.

Security and compliance teams ensure that all infrastructure changes adhere to respective guidelines for their organization. These teams generally write Sentinel policies, rather than Terraform configurations. You’ll need to consider these roles when setting up Terraform Cloud workspaces as well as their permissions, which we’ll get into later.

Stage 1: Build

Start with a single project first. It’s especially helpful if it is a greenfield project.

Once they get started with HCL, many users immediately start planning a complete import of all of their infrastructure. This can be overwhelming, especially when you consider dependencies, integrations with other tools in your workflow, the needs of other teams, and the need to avoid downtime.

Start with a single app’s infrastructure. This could be a greenfield project, or perhaps an older project that is not mission critical to your company. You should be able to experiment and break things without it impacting your company very much.

Helpful resources:

- Tutorials for Terraform Cloud

- Importing infrastructure to Terraform documentation

- Import infrastructure to Terraform tutorial

- Tutorial to migrate state to Terraform Cloud

Leverage existing modules in the Terraform Registry.

Rather than always writing each Terraform configuration from scratch, avoid redundant work by leveraging existing modules, if possible. Modules are HCL containers for multiple resources that are used together. By using modules, you can easily reproduce infrastructure for testing, staging, and production environments. You can find them in the Terraform Registry. It’s ideal to start with modules from Official or Verified Providers, which were created or have already been vetted by HashiCorp.

Start by provisioning a single resource for a single project. Most users choose to build out this resource before provisioning another.
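As a minimal sketch of what calling a Registry module looks like, the configuration below uses the community-maintained terraform-aws-modules/vpc/aws module. The module choice, version constraint, region, names, and CIDR ranges are all assumptions made for illustration and do not come from this guide; check the module’s Registry page for its current inputs before relying on them.

```hcl
# Illustrative only: region, names, and CIDR ranges are placeholder assumptions.
provider "aws" {
  region = "us-east-1"
}

module "vpc" {
  # Public Registry modules are addressed as <NAMESPACE>/<NAME>/<PROVIDER>.
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0" # pin a version range so upgrades are deliberate

  name = "example-app"
  cidr = "10.0.0.0/16"

  azs             = ["us-east-1a", "us-east-1b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]
}

# Module outputs are referenced as module.<LOCAL_NAME>.<OUTPUT_NAME>.
output "vpc_id" {
  value = module.vpc.vpc_id
}
```

Running terraform init downloads the module, and terraform plan then shows the resources it would create without provisioning anything.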

Helpful resources:

* Terraform Registry
* Using Modules from the Registry

Start your project by mapping configuration files and workspaces.

This guide is not intended to help you or your team create your reference architecture or DevOps pipeline; that is something you will have to do on your own. What it will do is help you figure out how that architecture maps to features within Terraform Cloud, and how Terraform Cloud can integrate with the DevOps tools you already use.

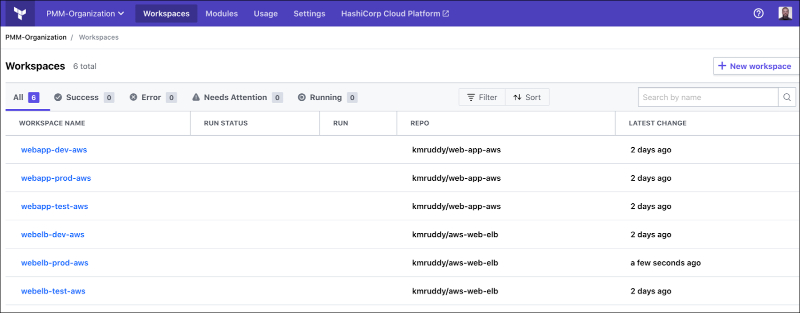

If you are a Terraform open source user, you are used to managing collections of infrastructure using persistent working directories on your local machine. Since Terraform Cloud executes runs remotely, it moves this concept into the cloud using workspaces.

Workspaces are a fundamental part of running Terraform in production.


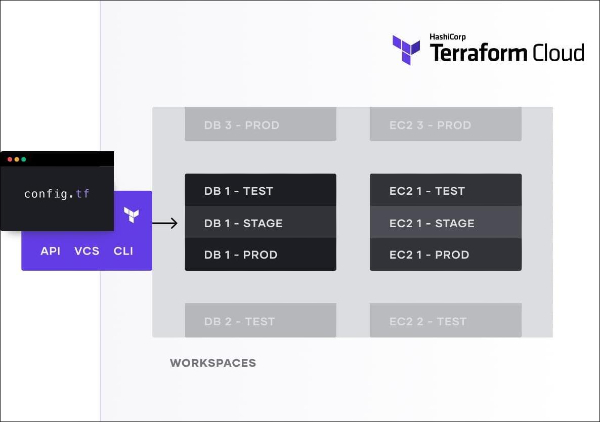

A Terraform Cloud workspace provides an environment to run a single Terraform configuration, and is a key part of using Terraform in production. Some rules of thumb for provisioning workspaces:

1. A workspace maps to a single Terraform configuration in a 1:1 fashion. When breaking down Terraform configuration and mapping it to separate workspaces, you can divide configuration into modules based on blast radius, rate of change, scope of responsibility, and ease of management. It’s also helpful to determine which input parameters will be necessary for module configuration.
2. Each workspace stores the variables, state files, credentials, and secrets for its configuration.
3. Workspaces determine who has access to each part of your infrastructure. As you give more specialized access to teams across your organization, workspaces become the fundamental way each team manages their set of infrastructure. Consider which team or teams will interact with a workspace when setting it up.
4. For an average application environment, having one to four workspaces is a good guideline, and having two is probably sufficient in many situations.
5. Workspaces can also impact how policy as code is implemented if you choose to use it. There is more information on this later in this guide.
6. Each workspace can have its own workflow, which will determine if your team will be connecting to Terraform Cloud via the CLI, a version control system, or the API.
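One way to tie a configuration to a single workspace is the cloud block, available in Terraform 1.1 and later. The sketch below shows the 1:1 mapping described above; the organization and workspace names are hypothetical placeholders.

```hcl
terraform {
  required_version = ">= 1.1.0" # the cloud block was introduced in Terraform 1.1

  cloud {
    # Hypothetical organization name; replace with your own.
    organization = "example-org"

    workspaces {
      # One configuration maps to one workspace.
      name = "example-app-network-prod"
    }
  }
}
```

After terraform login and terraform init, runs started from this directory are associated with that workspace, and its state is stored in Terraform Cloud.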

Choose API, VCS, or CLI-driven workflows for each workspace.


When first learning Terraform, it’s easy to start building one or several monolithic configurations that do not make distinctions between the resources you are provisioning. While this is convenient initially, it becomes a problem as (a) runs take longer and longer to execute, and (b) more and more teams are able to make changes to the same configuration. This approach does not scale. As your organization grows, DevOps architects will likely take over the task of breaking configurations apart (if this is not your role already).

Helpful resources:

* Terraform Adoption: a Typical Journey
* Reference Architectures
* Structuring Terraform Configurations for Production
* “How do I Decompose Monolithic Terraform Configurations?” video

Test with the CLI, provision using version control.

The Terraform CLI is ideal for testing a configuration. Start by pulling down a module from the Registry into your configuration file, and running plans against it using the CLI. Don’t apply resources in this phase. This will allow you to test your configuration, without actually committing to or paying for any of these resources.

Once you are ready to provision a resource, it’s time to choose a workflow. As mentioned previously, there are three Terraform Cloud workflows: CLI-driven, VCS-driven, and API-driven. The API-driven workflow is a bit advanced if you are new to Terraform Cloud. The CLI-driven workflow is fine if you are working alone or testing something; it essentially provides a remote backend to the CLI workflow you use with open source. If you are working with a team and are just getting started, we recommend the VCS-driven workflow.

Why do we recommend using version control for infrastructure as code?

1. Version control provides a single source of truth for a Terraform configuration.
2. It facilitates asynchronous code review among teams.
3. It makes it easier to experiment with, fork, or roll back changes to infrastructure.
4. Local testing is possible for anyone, just by cloning the repo and adding the appropriate variables.

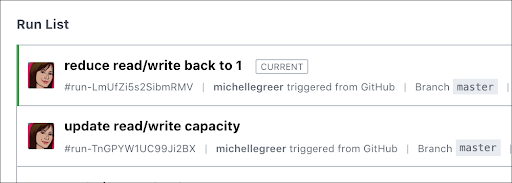

Terraform Cloud has tight integrations with VCS providers like GitHub, GitLab, Bitbucket, and Azure DevOps Server. These integrations allow you to automate runs when a change is merged into the branch a workspace tracks, which by default is the default branch of the configuration’s repository (often main or master).
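Workspaces and their VCS connections are usually set up in the Terraform Cloud UI. For teams that prefer to keep this in code as well, a rough sketch using the hashicorp/tfe provider might look like the following. The organization, workspace, and repository names are hypothetical, and the sketch assumes a VCS connection already exists in the organization and that the provider can authenticate (for example through a TFE_TOKEN environment variable).

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# OAuth token ID of an existing VCS connection in the organization (assumed to exist).
variable "oauth_token_id" {
  type = string
}

resource "tfe_workspace" "app_prod" {
  name         = "example-app-prod" # hypothetical workspace name
  organization = "example-org"      # hypothetical organization name

  vcs_repo {
    identifier     = "example-org/example-app-infra" # <owner>/<repository>, hypothetical
    branch         = "main"                          # omit to track the repository's default branch
    oauth_token_id = var.oauth_token_id
  }
}
```

With a workspace connected this way, merging a change into the tracked branch queues a run in that workspace.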

Changes merged into a master branch of a connected VCS repo trigger a Terraform Cloud run


We strongly recommend reading The Practitioner’s Guide to using HashiCorp Terraform Cloud with GitHub. It will help you plan how to map workspaces to VCS repositories.

Helpful resources:

* The VCS-driven workflow
* Practitioner’s Guide to using HashiCorp Terraform Cloud with GitHub

Understand remote state.

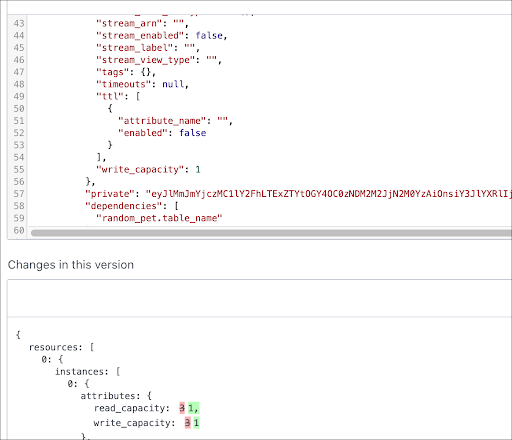

A Terraform state file maps your configuration file to resources deployed in the real world. It also keeps track of metadata, and can improve performance for large infrastructures. Please note that Terraform Cloud stores your state file remotely, so there is no reason to store it in an S3 bucket or elsewhere. Terraform Cloud also features state locking. This prevents concurrent runs against the same state, and is a key feature once you start adding members to your team. Every configuration in Terraform Cloud will have a separate state file stored in its respective workspace. You can also access outputs from other state files within the same organization.
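To illustrate reading outputs from another workspace’s state, here is a minimal sketch using the terraform_remote_state data source with the remote backend. The organization name, workspace name, and the vpc_id output are hypothetical; the other workspace would need to declare that output and permit this workspace to read its state.

```hcl
# Reads the root-level outputs of another workspace in the same organization.
data "terraform_remote_state" "network" {
  backend = "remote"

  config = {
    organization = "example-org" # hypothetical organization name
    workspaces = {
      name = "example-app-network-prod" # hypothetical workspace name
    }
  }
}

# Re-exposes a value produced by the other workspace.
# "vpc_id" is assumed to be declared as an output in that workspace.
output "network_vpc_id" {
  value = data.terraform_remote_state.network.outputs.vpc_id
}
```

Only outputs declared in the other configuration’s root module are visible this way, which keeps the interface between workspaces explicit.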

Once you’ve executed your first run in Terraform Cloud, it will automatically generate your first remote state file. You can also migrate an existing state file to Terraform Cloud using this guide.

Terraform Cloud highlights changes to Terraform state after executing a run


Helpful resources:

* Terraform Cloud Remote State
* Remote backends
* Migrating state to Terraform Cloud

Use terraform graph to inspect dependencies.

As you provision more and more modules, you will inevitably run into dependencies within your infrastructure. Dependencies in Terraform are managed using HCL itself. Most of the time, Terraform infers dependencies between resources based on the configuration given, so that resources are created and destroyed in the correct order. Occasionally, Terraform cannot infer dependencies between different parts of your infrastructure, and you will need to create an explicit dependency with the depends_on argument.

Terraform builds a dependency graph from your configuration. You can output this graph at any time by running terraform graph in the CLI; the output is in DOT format, which tools such as Graphviz can render as an image.
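The difference between an inferred and an explicit dependency can be sketched without any cloud provider by using the built-in terraform_data resource (available from Terraform 1.4). The resource names and values here are hypothetical stand-ins for real infrastructure.

```hcl
terraform {
  required_version = ">= 1.4.0" # terraform_data is built in from Terraform 1.4
}

# Stand-in for a database; the input value is a placeholder.
resource "terraform_data" "database" {
  input = "db.example.internal"
}

# Implicit dependency: referencing the database's output tells Terraform
# to create the database before the app.
resource "terraform_data" "app" {
  input = "connects to ${terraform_data.database.output}"
}

# Explicit dependency: nothing here references the app, so depends_on
# is needed to force the ordering.
resource "terraform_data" "smoke_test" {
  depends_on = [terraform_data.app]
}
```

Running terraform graph against this configuration should show the smoke test depending on the app, and the app depending on the database.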

Helpful resources:

* Terraform graph documentation
* Create resource dependencies tutorial

Use Terraform CLI functionality to encourage HCL best practices.

HCL might be new to you, and it can be difficult to know if you’re on the right track with your configuration. If you’d like a little help ensuring you are using best practices, try these two Terraform CLI commands:

1. terraform fmt rewrites Terraform configuration files to a canonical format and style. This command applies a subset of the Terraform language style conventions, along with other minor adjustments for readability.

2. terraform validate runs checks that verify whether a configuration is syntactically valid and internally consistent, regardless of any provided variables or existing state. It is thus primarily useful for general verification of reusable modules, including correctness of attribute names and value types.

Helpful resources:

* Terraform format documentation

* Terraform validate documentation

Use terraform state to manage your state file in case of emergency.

Sometimes a condition in your state file impedes an apply and leaves you stuck. Not to worry. The terraform state command lets you inspect and manually modify your state. It can come in handy when importing resources with external dependencies, or dealing with early-stage providers.

The terraform state command has several subcommands, such as list, show, mv, and rm. To see them all, read the Terraform documentation.

Stage 2: Standardize

Handle security and compliance manually at first, but remember that these can be eventually automated.

When your team is small and closely integrated, security and compliance are fairly easy to manage manually using a simple code review process. As your team grows and more teams get involved, this process may end up slowing your ability to provision and change infrastructure.

Sentinel is HashiCorp’s policy as code framework. It can be used with Terraform Cloud’s Team and Governance tier, as well as the Business tier. Sentinel allows your teams to implement policies for specific workspaces or across an entire organization in Terraform Cloud. These policies can range from security rules to basic best practices such as tagging and provider version pinning. When used in conjunction with cost estimation, Sentinel can even help you rein in the costs of your infrastructure. You or members of your Security and Compliance teams can write Sentinel policies from scratch, or leverage existing ones from the Foundational Policies Library.
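For context, the two best practices named above, tagging and provider version pinning, look roughly like this in a configuration. This is ordinary HCL rather than a Sentinel policy; it sketches the kind of configuration such a policy might require. The provider, version constraints, region, and tag values are illustrative assumptions.

```hcl
terraform {
  required_version = ">= 1.3.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # pinned to the 5.x series
    }
  }
}

provider "aws" {
  region = "us-east-1" # placeholder region

  # Tags applied by default to resources that support tagging.
  default_tags {
    tags = {
      Owner       = "platform-team" # placeholder values
      Environment = "production"
    }
  }
}
```

During manual code review, these are the sorts of details a reviewer checks by hand; a policy can later enforce them automatically.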

Your Security Team may also be familiar with HashiCorp Vault, which can be used in conjunction with Terraform Cloud.

Helpful resources:

* Writing and Testing Sentinel Policies for Terraform
* Managing Policy as Code with Sentinel
* Terraform Foundational Policies Library
* Sentinel Playground
* Dynamic Secrets with Terraform and Vault
* Controlling Costs with Terraform

Consider the Principle of Least Privilege with organizations and workspaces.

Access controls for infrastructure can get complex over time. You may want to give access to some team members to provision, but not to change modules or access sensitive credentials. You may want to restrict certain teams to testing and staging workspaces. Many teams consider organizational roles when setting up permissions, as well as familiarity with Terraform.

All of this is possible with Terraform Cloud. There are several major features that can help you implement PoLP:

1. Organizations, which are shared spaces to collaborate with Terraform Cloud
2. Teams, which are groups of users with a set of workspace permissions
3. Permissions, which can run across organizations or specific workspaces
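Teams and permissions are normally managed in the Terraform Cloud UI, but they can also be expressed in code. The following is a hedged sketch using the hashicorp/tfe provider; the organization, team, and workspace names are hypothetical, the workspace is assumed to exist already, and team management may depend on your Terraform Cloud plan.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# Looks up an existing workspace (assumed to exist).
data "tfe_workspace" "staging" {
  name         = "example-app-staging"
  organization = "example-org"
}

resource "tfe_team" "developers" {
  name         = "developers"
  organization = "example-org"
}

# "plan" lets the team queue plans in this workspace but not apply them.
resource "tfe_team_access" "developers_staging" {
  access       = "plan"
  team_id      = tfe_team.developers.id
  workspace_id = data.tfe_workspace.staging.id
}
```

Granting the narrowest fixed permission level that still lets a team do its job (read, plan, write, or admin) is one straightforward way to apply least privilege per workspace.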

Since you are just getting started, this part might be simple for now. It’s helpful to know how these features work though, so you don’t have to make major changes as you move more infrastructure and teams to Terraform Cloud.

Helpful resources:

* Organizations documentation
* Teams documentation
* Permissions documentation

Consider setting up self-service infrastructure.

Public modules allow you to leverage existing configurations for various parts of your infrastructure, and are available in the Terraform Registry. Terraform Cloud comes with a private module registry, which has a similar function, but is only available to people within your Terraform organization. Private modules provide a benchmark for what production modules should look like at your company, and reduce the amount of code review required to provision new infrastructure. This also allows developers to provision their own infrastructure with little supervision, since they will only be working with pre-approved modules.
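Consuming a module from the private registry looks much like consuming a public one, except that the source includes the Terraform Cloud hostname and your organization. In this sketch the organization, module name, version, and input variables are all hypothetical.

```hcl
module "service" {
  # Private registry modules are addressed as <HOSTNAME>/<ORGANIZATION>/<NAME>/<PROVIDER>.
  source  = "app.terraform.io/example-org/web-service/aws"
  version = "~> 1.2"

  # Hypothetical inputs defined by the hypothetical module.
  service_name = "checkout"
  environment  = "staging"
}
```

Because developers only fill in a handful of inputs, reviewers can focus on those values rather than re-reviewing the module’s internals each time.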

While you might not be familiar enough with Terraform to create reusable modules, it’s helpful to know this functionality is available when you are ready to scale.

Helpful resources:

* Terraform private module registry documentation

Stage 3: Innovate

Integrate Terraform Cloud into your existing pipeline.

Terraform Cloud features an API, making it entirely possible to automate Terraform as part of your CI/CD pipeline. While this can be a convenient workflow, it’s best to start with a more manual workflow until you understand the basics of Terraform Cloud.

There are a few reference materials to help you get started with this, when you are ready.

Helpful resources:

* Terraform Cloud API documentation
* Securing infrastructure in application pipelines

Extend Terraform with plugins.

Your team may want to use an existing or custom service that has no provider available in the Terraform Registry, or anywhere on GitHub. Since Terraform is open source, your team can choose to write your own providers, which Terraform loads as plugins.

Helpful resources:

* Plugin documentation
* Learn to write a Terraform plugin