Lower your AWS cloud costs with Terraform and Lambda

Are your developers spending too much money on orphan cloud instances? A simple ChatOps utility built on AWS Lambda can help! Terraform can prevent further shadow IT practices and replace them with Infrastructure as Code.

Maybe you've experienced this before: You or your boss are shocked at how expensive your cloud services bill has become. Can you prove that all those cloud instances are being utilized with the greatest possible efficiency? Do you have a way to tag, organize, and track all of your instances? If you've lost track of some instances that are no longer necessary, you're basically leaving the water running.

Many companies are dealing with this type of mess because developers and operations wanted a more agile environment to work in, but they didn't have standards or guardrails in place beforehand. And they don't have a plan to clean things up, either.

This guide will help you fix both of these problems with AWS-specific demos that should still give you a general game plan even if you use a different cloud. It's based on the real-life strategies we use here at HashiCorp.

What you'll learn

- The top 3 methods for cutting cloud costs

- A real example of 3 tactics used to remove unneeded instances

- Tools for organizing and tracking your cloud resources with granularity

- What "serverless" and AWS Lambda are, and how to deploy a serverless app

- What Terraform is

- How to deploy our open source ChatOps bot for cleaning up AWS instances

- How Terraform and Sentinel can help you prevent overspending

Who this article is for

This article is relevant for managers and any technical roles tasked with keeping AWS instance costs under control. This may include developers, operations engineers, system administrators, cloud engineers, SREs, or solutions architects.

Estimated time to complete

- 30 minutes to complete the ChatOps bot demo

- 35 minutes to complete the Sentinel and Terraform workflow demo

A rogue AWS account story

In large enterprises, this is a common story.

Developers are working on tight deadlines but the operations team needs days or weeks to set up their environments for building and testing new features. So the developers get permission to move their development and testing workloads to AWS, where they can set up and tear down environments almost instantly.

Unfortunately, the AWS account quickly becomes a free-for-all, with developers buying anything and everything they think they need with no oversight to ensure efficient usage of resources. The result is a huge monthly bill—caused by lots of unused instances, resources, and storage that no one bothered to manage or clean up properly.

Congratulations! You now have a shadow IT outlet.

When management finally says these costs need to come down, it's a big challenge just to get visibility into what's going on. Who created all these resources? Looking in the AWS console, it's not clear who created or owns various instances because they can be created without name tags.

If you find yourself in a similar mess, here are the questions you need to get answers to:

- What are the sizes of our EC2 instances?

- Which resources are safe to delete or shut down?

- Where are our instances running? Are they hiding in an unexpected AWS region?

- Should we really leave all instances running 24/7? Are we consuming much more than we need?

- How do we clean up this mess?

Reducing costs: Real-world strategies

The three main ways to cut cloud costs are:

1. Shut off anything that's not in use.

2. Take a look at your health checks and CloudWatch logs (for AWS) to determine if you're buying the right sized instances.

3. After 1 and 2 are under control, get some historical data and make informed purchases of reserved instances for your most common instance sizes. You're buying instances in advance, so if you use a lot less than what you pay for, you're not cutting costs; you might actually be making your costs higher.
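To make the reserved-instance caveat concrete, here is a minimal sketch of the break-even arithmetic. The hourly rates are made-up placeholders, not real AWS prices, and the model assumes a reservation that is billed for every hour of the month whether or not the instance runs.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month


def monthly_cost_on_demand(hours_used: float, on_demand_rate: float) -> float:
    """On-demand instances are billed only for the hours they run."""
    return hours_used * on_demand_rate


def monthly_cost_reserved(reserved_rate: float) -> float:
    """A reservation is billed for every hour of the month, used or not."""
    return HOURS_PER_MONTH * reserved_rate


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Fraction of the month an instance must run before reserving is cheaper."""
    return reserved_rate / on_demand_rate


# Hypothetical rates, for illustration only.
ON_DEMAND = 0.10  # dollars per hour
RESERVED = 0.06   # effective dollars per hour

threshold = break_even_utilization(ON_DEMAND, RESERVED)
print(f"Reserving pays off above {threshold:.0%} utilization")
```

Under these assumed rates, an instance that runs well below the threshold costs more reserved than on demand, which is why historical usage data should come first.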

The remainder of this guide will focus on the first cost-reduction strategy, which usually yields the largest reduction in cloud costs. For the other two strategies, consult these resources:

- Identifying Opportunities to Right Size

- Cost Optimization: EC2 Right Sizing

- The Ultimate Guide to AWS Reserved Instances

Know where your money is going

In order to "shut off anything that's not in use," you need to start identifying instances and resources that are safe to shut off. For AWS, your go-to tool will be its Cost Explorer. Here are the docs for that.

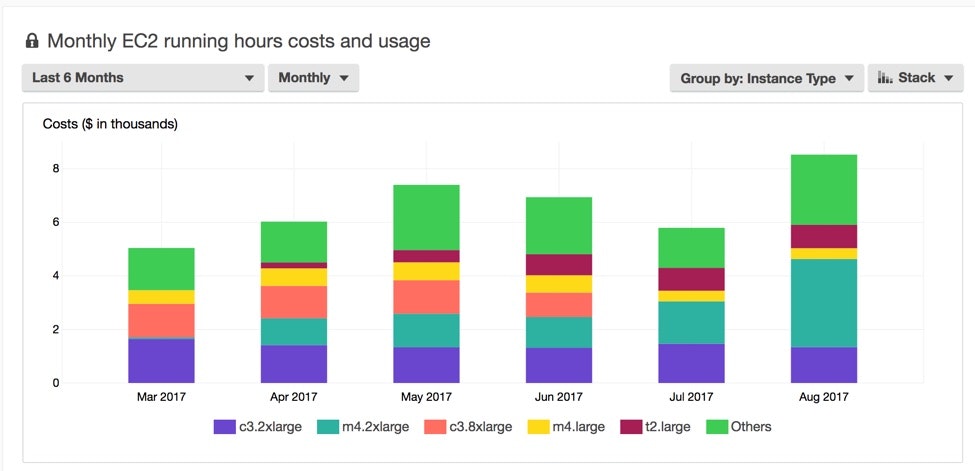

Take a look at this graph from the Cost Explorer:

This stacked bar graph shows the expenses from each instance type over time. You can also group by usage type, custom tags, and more.

The importance of tagging

AWS has a very flexible tagging system. But with that flexibility comes the need for your team to devise and enforce its own standard tagging system, so that there aren't any questions about what resources are for and who created them. When used properly, tags can tell you exactly what each resource is used for and who created it. This brings accountability to the team and their management of cloud costs.

After you've gotten familiar with the Cost Explorer, the next step should be to have your primary AWS operators create tagging standards and document them for team visibility. At HashiCorp, our most useful instance tags (especially for the purposes of the demo below) are TTL (time-to-live) and owner. Time-to-live is the time limit you put on the life of your instance, which is crucial for identifying what's not in use.
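As a rough illustration of what checking a tagging standard involves, the sketch below reports which mandatory tags are missing from an instance record. It assumes tags arrive as a list of `{"Key": ..., "Value": ...}` pairs, the shape used by the EC2 DescribeInstances API; the sample records and the function name `missing_tags` are hypothetical.

```python
REQUIRED_TAGS = ("TTL", "owner")  # the two tags named above


def missing_tags(instance: dict, required=REQUIRED_TAGS) -> list:
    """Return the required tag keys that are absent or empty on an instance.

    `instance` is assumed to carry a "Tags" list of
    {"Key": ..., "Value": ...} dicts; a missing list counts as no tags.
    """
    tags = {t["Key"]: t.get("Value", "") for t in instance.get("Tags", [])}
    return [key for key in required if not tags.get(key, "").strip()]


# Hypothetical sample records, for illustration only.
tagged = {
    "InstanceId": "i-0001",
    "Tags": [{"Key": "TTL", "Value": "48"}, {"Key": "owner", "Value": "sean"}],
}
untagged = {"InstanceId": "i-0002", "Tags": [{"Key": "Name", "Value": "test"}]}

print(missing_tags(tagged))
print(missing_tags(untagged))
```

Treating an empty value the same as a missing tag is a design choice made here; a team could just as reasonably accept empty values or validate the TTL format.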

Later in this guide, we'll show you a tool that can enforce your tagging standards using more than just a stern email.

How much can you save? A real-world example

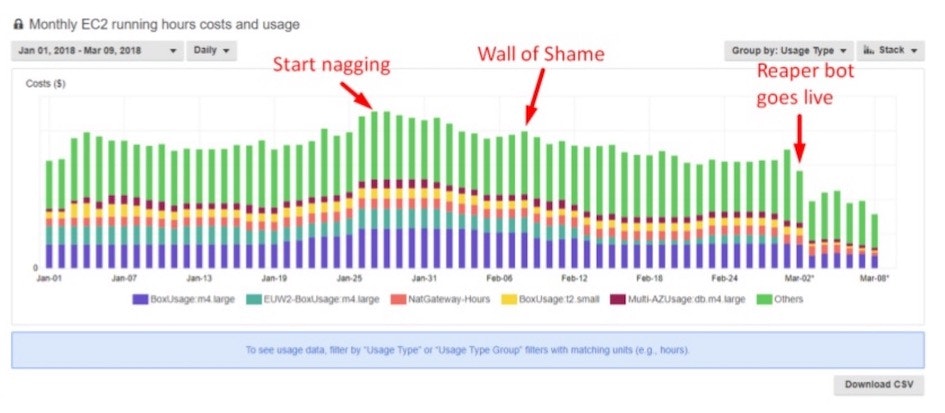

HashiCorp had its own challenges with keeping AWS costs down. We used the same strategies described above plus a few more described below. The most effective tool was a ChatOps utility we built called "N.E.P.T.R." (codename: "The reaper"). The reaper bot cleans up unused and expired AWS instances on a regular schedule. You can see the effect of each strategy in the AWS resource utilization graph below:

This graph shows where each successive action occurred, and how much it drove costs down. The three actions included:

1. Start nagging: We sent a notification to all AWS operators to clean out unused instances.

2. Wall of Shame: We encouraged a little peer pressure with the "Wall of Shame" which put operators with the most untagged instances on our reaper bot's Slack report.

3. Reaper bot goes live: This is when we set the reaper bot loose. It turned off or deleted any instances that didn't have the proper TTL tagging. You'll want to give your team several warnings to ensure that the most important and permanent processes are whitelisted before this last step.

You can see which step had the biggest impact. While the reminders can be helpful, there are ultimately a lot of unnecessary instances that operators lose track of without a strict tagging system. It's not uncommon to see a 30-40% drop in your daily cost. We also found that EC2 compute is the most frequently wasted resource type.

So how do you deploy a reaper bot of your own? You'll be happy to know that we open sourced our reaper bot and HashiCorp solutions engineer Sean Carolan recorded a video demo showing how to deploy it. The reaper bot is a serverless application that runs on AWS Lambda, so it's easy to run. We've also made it easy to deploy with our open source provisioning tool: Terraform. Below is a brief introduction to each technology before we jump into the demo.

What is serverless? What is AWS Lambda?

Serverless computing is a cloud-computing execution model where, in addition to providing the underlying compute infrastructure as a service, the provider also manages the servers: it handles the dynamic allocation of resources that you would otherwise manage yourself on your own Linux or Windows servers. It leaves more of your software's operational management in the hands of the cloud provider (see: 29 Concerns that go away in a serverless world).

The user simply provides application code that is compatible with the serverless provider's platform, and the platform will run that code and shut down. You are only billed when you execute your program—unlike infrastructure-as-a-service (IaaS), like EC2, where you buy units of compute capacity that you're paying for whether you run an application or not. Excluding data storage, if you're not running any applications, serverless costs you nothing. Serverless platforms come in the form of Backends-as-a-Service (BaaS) or Functions-as-a-Service (FaaS).

AWS Lambda is Amazon's FaaS platform. Azure and GCP also have FaaS services. Each FaaS provider has its own quirks and rules that you have to follow, and they don't support all programming languages, but once you've prepared the application and the platform, you simply give it functions as input, it performs the logic in those functions, returns output, and then shuts down until you want to trigger it again (manually or programmatically).
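For a sense of what "giving it functions as input" looks like, here is a minimal sketch of a Python function in the shape AWS Lambda expects: a handler that receives an `event` and a `context` and returns a result. The handler name `lambda_handler` is a common default rather than a requirement, and the `name` input field is a hypothetical example.

```python
import json


def lambda_handler(event, context):
    """Entry point that AWS Lambda calls once per invocation.

    `event` carries the trigger's input; `context` carries runtime metadata.
    """
    name = event.get("name", "world")  # "name" is a hypothetical input field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation for testing; on Lambda the platform supplies both arguments.
if __name__ == "__main__":
    print(lambda_handler({"name": "reaper"}, None))
```

Because the handler is an ordinary function, it can be exercised locally with a plain dictionary before it is ever deployed.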

The two key benefits are: You save money if the application doesn't need to run all the time and you don't have to spend as much time managing the server operations.

What is Terraform?

Terraform is an open source tool for provisioning any kind of cloud or on-premises infrastructure. It's the realization of the infrastructure as code concept, which is now considered a best practice by much of the software industry. Terraform's core focus is standing up VMs and cloud instances, but it can do much more.

Terraform has its own language for describing infrastructure-provisioning actions in a way that everyone can understand. Take a look at the HCL (HashiCorp Configuration Language) code below:

    resource "aws_instance" "web" {
      instance_type = "m1.small"
      ami           = "${lookup(var.aws_amis, var.aws_region)}"

      # This will create 4 instances
      count = 4
    }

Even a non-technical stakeholder could understand some of what's going on here. instance_type = "m1.small" shows the type of EC2 instance this code will provision, and count = 4 reveals how many instances it will create.

Terraform's other big draw is its almost universal compatibility with hundreds of cloud services from all of the major cloud vendors. If you want to use Azure or GCP services alongside AWS services, Terraform can be the lingua franca or common language that allows you to communicate with and manage any cloud provider's services from one central tool. Terraform knows how to talk to all of these cloud vendors. You just need to use one language, and with a few small tweaks for each vendor, you can manage them all cleanly.

Since everything in AWS (and in most cloud infrastructure services) has an API endpoint for activating resources programmatically, you can use Terraform to describe and build entire networks and data centers with a few lines of code. It only takes a few team members (or maybe just one) to fully understand Terraform and write code for it. The rest of your users don't need to be experts. They can still understand the code enough to modify variables and use the tool for self service.

This guide will illustrate a few more reasons why we're using Terraform to save money on cloud compute usage, but first let's demo the reaper bot.

Demo: Deploying the ChatOps bot

Developers and operators can follow along with this demo by:

- downloading Terraform,

- signing up for the free tier of AWS Lambda, and

- forking this GitHub repository, which has the Python code for the reaper bot and the Terraform provisioning code.

Here's what the reaper bot can do:

- Whitelist resources to prevent the bot from touching them.

- Check for mandatory tags on AWS instances and notify people via Slack if untagged instances are found. (We can identify the SSH key name, if one was provided, and if the name identifies a person, we can track them down.)

- Post a Slack report with a count of each instance type currently running across all regions.

- Shut down untagged instances after X days.

- Delete untagged instances after X days.

- Delete machines whose TTL has expired.

And of course, it's open source, so you can modify the code to add more features like managing NAT gateways or RDS instances.

Remember that this bot can delete live AWS instances, so make sure the engineer deploying it understands both the bot's code and your systems running on AWS, and that you hold a review process involving multiple operators.
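The cleanup rules in the feature list above can be sketched as a single decision function. This is not the reaper bot's actual code: the thresholds, the whitelist contents, and the assumption that the TTL tag holds a lifetime in hours counted from launch are all hypothetical choices made for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical settings; the real bot's configuration may differ.
WHITELIST = {"i-0aaa"}
STOP_AFTER_DAYS = 2        # stop untagged instances older than this
TERMINATE_AFTER_DAYS = 7   # terminate untagged instances older than this


def decide(instance_id, tags, launch_time, now):
    """Return "keep", "notify", "stop", or "terminate" for one instance.

    Assumes the TTL tag holds a lifetime in hours counted from launch.
    """
    if instance_id in WHITELIST:
        return "keep"
    age = now - launch_time
    if "TTL" in tags and "owner" in tags:
        try:
            ttl = timedelta(hours=float(tags["TTL"]))
        except (ValueError, OverflowError):
            return "notify"  # unparseable TTL: ask the owner to fix it
        return "terminate" if age > ttl else "keep"
    # Mandatory tags are missing: escalate as the instance gets older.
    if age > timedelta(days=TERMINATE_AFTER_DAYS):
        return "terminate"
    if age > timedelta(days=STOP_AFTER_DAYS):
        return "stop"
    return "notify"


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
launched = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(decide("i-0bbb", {"TTL": "24", "owner": "dev"}, launched, now))
print(decide("i-0ccc", {}, launched, now))
```

Keeping the decision separate from the AWS and Slack calls makes the destructive logic easy to review and test, which matters for a tool that can terminate live instances.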

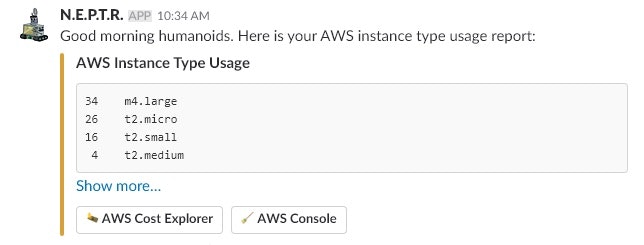

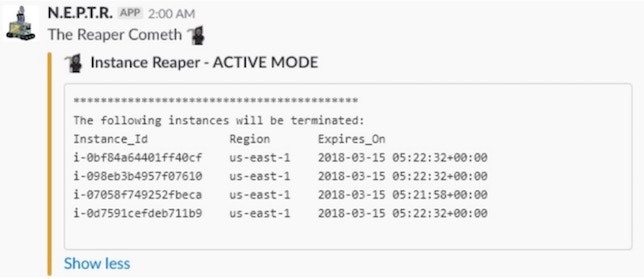

Here's what the reports looked like in Slack:

This first screenshot is a basic AWS instance report that runs every morning telling your team all the instance types your company is running and how many there are of each type. This might help you catch the sudden appearance of unnecessary instances.

This second report shows instances scheduled for termination, so that there's fair warning before the bot deletes live resources.

We found that these Slack reports also had a positive side-effect of training our engineers using AWS to think about instance usage more efficiently.
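At its core, the daily instance report is a count of running instances grouped by type. The sketch below shows one way to build the report lines; the record shape is a simplified assumption (real EC2 records nest the state differently), the fleet is made up, and posting the lines to Slack is left out.

```python
from collections import Counter


def instance_type_report(instances):
    """Build report lines counting running instances per type.

    `instances` is an iterable of dicts with "InstanceType" and "State"
    keys, a simplified (assumed) view of EC2 instance records.
    """
    counts = Counter(
        i["InstanceType"] for i in instances if i.get("State") == "running"
    )
    # Most common types first; ties broken alphabetically for stable output.
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [f"{itype}: {n}" for itype, n in ordered]


# Hypothetical fleet, for illustration only.
fleet = [
    {"InstanceType": "t2.micro", "State": "running"},
    {"InstanceType": "m4.large", "State": "running"},
    {"InstanceType": "t2.micro", "State": "running"},
    {"InstanceType": "c4.xlarge", "State": "stopped"},
]
print("\n".join(instance_type_report(fleet)))
```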

Video: Reaper bot demo

Here's Sean Carolan's screencast demo for setting up and running the reaper bot. You'll notice that the Terraform parts take less than a minute, and if you don't already have Terraform installed and set up, that can be done in a similar timeframe.

Demo starts at 20:19 and ends at 41:20

There's some technical documentation on deploying and running the reaper bot in the GitHub repo if you prefer written instructions to get set up.

Terraform Enterprise: Adding guardrails & preventing future messes

Let's assume that you've passed the cleanup stage with the help of your trusty reaper bot, or maybe you haven't started your cloud usage yet and you hope to minimize costs and prevent a mess from the outset. You need to have some rules and standards in place so you don't run into the same high cloud service bills that other companies have encountered.

From the demo, you saw that Terraform took a lot of manual steps out of deploying and updating the reaper bot application. All of the dependencies and cleanup are taken care of for you, and the team didn't need to understand Python in order to deploy or troubleshoot the bot.

But how can you make sure that operators stick to your tagging standards once you've created them? You saw that the automated cleanup by the reaper scripts was much more effective at cutting costs than reminders and the wall of shame. If you don't have strong policies that are more than just reminders and documentation, you might still end up with shadow IT practices. What if you could build the enforcement of those policies into the tools that developers and operators use to manage cloud instances? What if they couldn't forget or ignore the rules?

Sentinel

Terraform Enterprise, the commercial product that includes enterprise features on top of open source Terraform, has a framework called "Sentinel" which implements policy as code.

What that means is operators now have the ability to restrict what modifications each user can make to your infrastructure, similar to a fine-grained admin permissions system in a CMS. It also gives you the ability to enforce standards, like the suggested tagging system for AWS instances that was introduced earlier.

    # This policy enforces the mandatory AWS tags "TTL" and "owner" for all aws_instance resources.
    import "tfplan"

    main = rule {
      all tfplan.resources.aws_instance as _, instances {
        all instances as _, r {
          r.applied.tags contains "TTL" and r.applied.tags contains "owner"
        }
      }
    }

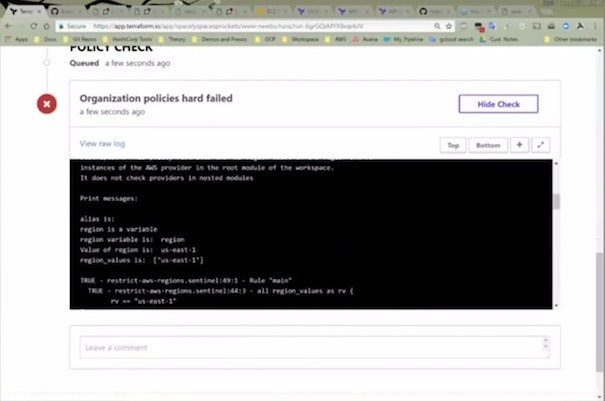

Sentinel can help you:

- Limit the number of instances developers can create

- Allow instances to run only in specific regions

- Restrict the types of instance sizes developers can create

- Enforce tagging standards, such as the "TTL" and "owner" tags example

- Mandate the use of hardened images that are approved by security and operations leaders

- Create a bunch of other rules

(Sentinel also works with other HashiCorp tools.)

Sentinel runs like an automated test suite right before the infrastructure is provisioned. If all of your policies are followed in the Terraform provisioning plan, then the provisioning takes place. If not, the provisioning is stopped, and you can see what policies your plan violated.
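The "automated test suite" idea can be illustrated in plain Python. This is only an analogy, not Sentinel syntax or Terraform's plan format: the plan is modeled as a hypothetical list of dictionaries, and the region allow-list and instance cap are invented values.

```python
ALLOWED_REGIONS = {"us-east-1", "us-west-2"}  # hypothetical allow-list
MAX_INSTANCES = 5                              # hypothetical cap


def has_required_tags(plan):
    """Every planned instance carries both mandatory tags."""
    return all({"TTL", "owner"} <= set(r.get("tags", {})) for r in plan)


def in_allowed_region(plan):
    """Every planned instance targets an approved region."""
    return all(r.get("region") in ALLOWED_REGIONS for r in plan)


def under_instance_cap(plan):
    """The plan does not create more instances than the cap allows."""
    return len(plan) <= MAX_INSTANCES


POLICIES = {
    "mandatory-tags": has_required_tags,
    "allowed-regions": in_allowed_region,
    "instance-cap": under_instance_cap,
}


def check_plan(plan):
    """Return the names of violated policies; an empty list means proceed."""
    return [name for name, rule in POLICIES.items() if not rule(plan)]


# A hypothetical plan that is missing the TTL tag.
plan = [{"region": "us-east-1", "tags": {"owner": "dev"}}]
violations = check_plan(plan)
print("provision" if not violations else f"blocked by: {violations}")
```

As with a test suite, the gate either passes cleanly or names exactly which rules failed, so the person who wrote the plan knows what to fix.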

Now, instead of developers using the AWS console, you'll have them use Terraform Enterprise, which has Sentinel rules in place. This is a great way to meet the safety needs of the company stakeholders and the velocity needs of the developers and the business.

Imagine the original rogue AWS scenario, but with Sentinel in place before you set developers loose on cloud infrastructure services. They wouldn't have been able to make a mess in the first place.

If you've already got a mess, then this tutorial should give you a helpful plan for cleaning it up and preventing future shadow IT practices.

Video: Terraform & Terraform Enterprise

Here's another clip of Sean Carolan explaining the background and value proposition of Terraform and Terraform Enterprise. The explanation starts at 9:05 and ends at 25:34.

He explains how Terraform helps security, reduces risks and human error, and also maintains an audit trail of all changes made to development, staging, and production environments.

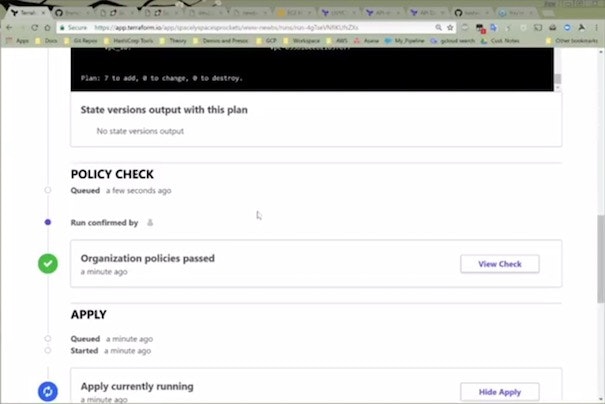

Video: Demo of Sentinel

Finally, let's end with another demo. This one doesn't involve the reaper bot, but it does show Sentinel in action, and it also illustrates a version-controlled Terraform workflow.

Demo starts at 25:44 and ends at 50:00

Here's a link to the code for this demo on GitHub.

Final takeaway

Cloud costs typically get out of hand when there is not enough organization, accountability, or oversight. Many times, a messy cloud portfolio is the result of many honest mistakes.

But the solution doesn't have to be complicated or harsh: Developers and operations professionals work best when there are awareness and guardrail features that work within their core version control and deployment tools without disrupting their workflow.

Terraform and Sentinel can do that. They are a safety net that lets engineers work quickly while steering them in the direction of your company's best and safest practices.