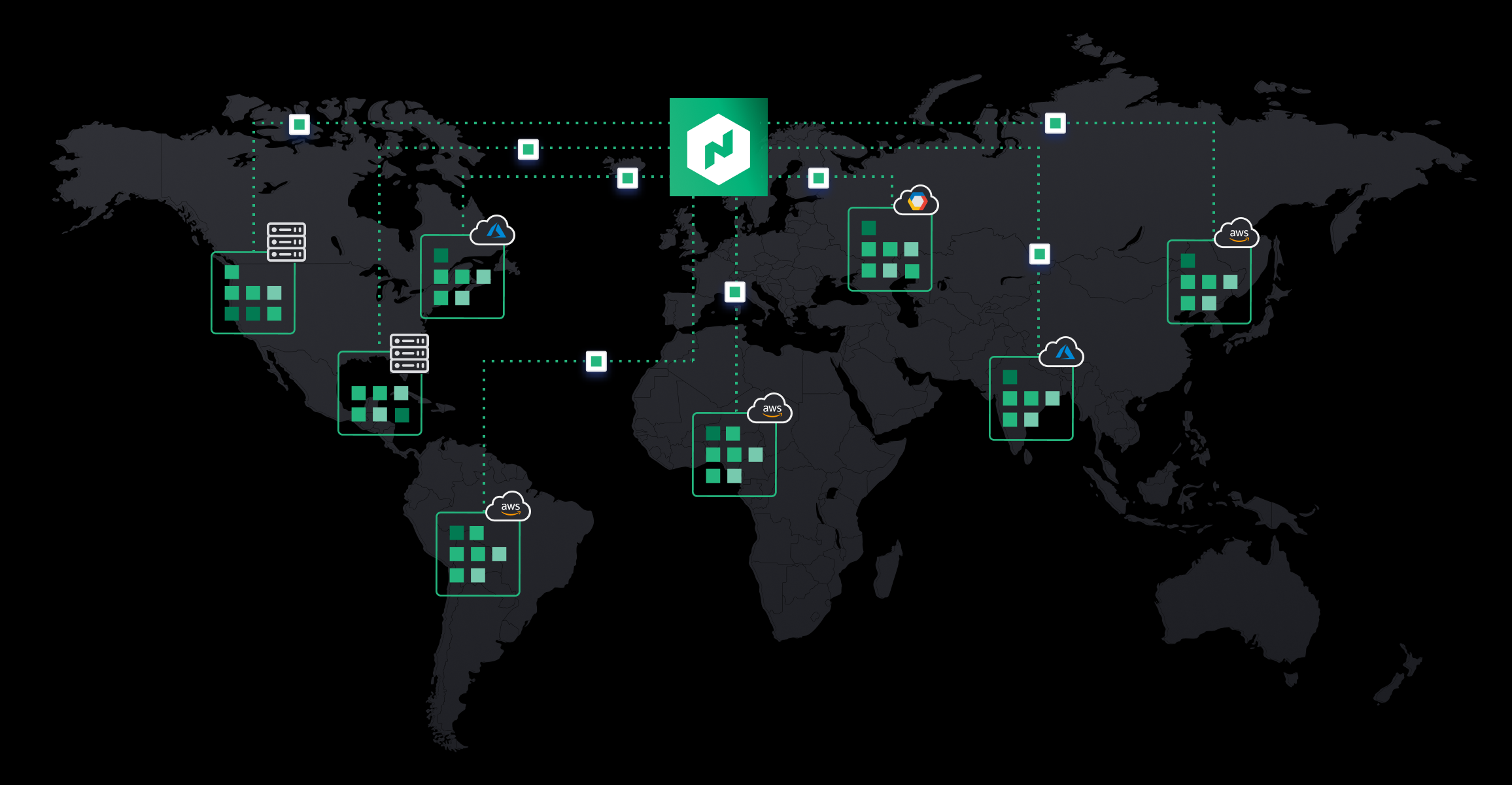

As enterprises around the globe accelerate their transition to a dynamic, multi-cloud infrastructure model, they face a growing challenge: managing distributed applications across multiple datacenters and clouds. Federation has been instrumental in turning organizations' multi-cloud vision into reality. It enables a single, unified monitoring and control plane for deploying and managing applications across multiple clouds and datacenters, a vision the industry has been chasing for many years.

With the release of HashiCorp Nomad 0.12, we are excited to introduce our breakthrough Multi-Cluster Deployment capability, which makes Nomad the first and only orchestrator on the market with complete, fully supported federation capabilities for production. The Multi-Cluster Deployment capability is available as part of an add-on module in Nomad Enterprise.

Nomad Enterprise 0.12 multi-cluster deployments enable organizations to deploy applications seamlessly to federated Nomad clusters with configurable rollout and rollback strategies. They deliver simple, elegant federated deployments without the architectural complexity and overhead of running clusters on clusters.

Key benefits of this feature:

- High Availability: Deploy an application to multiple datacenters or cloud regions in an active/active pattern so your most critical applications never go down.

- Built-in Flexibility: Not all applications are the same. Configurable rollout and rollback strategies allow organizations to accommodate all types of business SLAs and policies.

- No Setup Needed: Federate Nomad clusters with a single CLI command and start deploying multi-cluster applications with minimal tweaks to the job spec. No namespace, topology, or configuration changes are required.

- Fully Supported by HashiCorp: Nomad’s multi-cluster federation capabilities are fully supported by HashiCorp.


Support for cluster federation has been available since early versions of open source Nomad. Federated Nomad clusters enable users to submit jobs targeting any region, from any server, even if that server resides in a different region. But prior to Nomad 0.12, coordinating deployments between regions fell to the operator. Users had to write their own automation to generate a job for each region, monitor the health of each deployment, and decide whether to continue or roll back.

The new Nomad Enterprise Multi-Cluster Deployment functionality allows you to submit a single job to multiple clusters (Nomad refers to each cluster as a “region”). The rollout across regions is coordinated so that each region’s deployment progress depends on the health of the other region deployments.

»The ‘multiregion’ Stanza

You can create a multi-region deployment job by adding a multiregion stanza to the job as shown below.

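```hcl
# The multiregion stanza goes inside the job block.
multiregion {

  strategy {
    # Deploy to one region at a time.
    max_parallel = 1
    # If any region fails, fail every region.
    on_failure = "fail_all"
  }

  # Regions deploy in the order they are declared.
  region "west" {
    # Replaces the count of any task group that sets count = 0.
    count       = 2
    datacenters = ["west-1"]
    meta {
      my-key = "W"
    }
  }

  region "east" {
    count       = 1
    datacenters = ["east-1", "east-2"]
    meta {
      my-key = "E"
    }
  }
}
```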

»Parameters:

- The strategy block controls how many regions deploy at once (the max_parallel field) and what happens if one of the regions fails to deploy (the on_failure field).

- The region blocks are the ordered list of Nomad regions. The contents of each region block are interpolated into that region's copy of the job. If a task group specifies count = 0, its count is replaced with the count field of the region. The datacenters field determines which Nomad datacenters within the region to deploy to. The meta block provides region-specific metadata that is merged with the job's main meta stanza.
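To make the interpolation rules concrete, here is a minimal Python sketch that expands a job and its multiregion settings into one job copy per region. It models jobs as plain dictionaries rather than Nomad's real job structures, and two behaviors are assumptions not stated above: a region without a datacenters list falls back to the job's own list, and a region's meta value wins when the same key also appears in the job's meta. The function name render_region_jobs and the job-level "team" meta key are hypothetical.

```python
import copy


def render_region_jobs(job, multiregion):
    """Build one job copy per region, in the order the regions are declared.

    Hypothetical model of the interpolation rules described above; the dict
    layout is illustrative and is not Nomad's internal job representation.
    """
    rendered = []
    for region in multiregion["regions"]:
        region_job = copy.deepcopy(job)  # each region gets an independent copy
        region_job["region"] = region["name"]
        # Assumption: a region without datacenters falls back to the job's list.
        region_job["datacenters"] = list(
            region.get("datacenters", job.get("datacenters", []))
        )
        # Assumption: on a key conflict, the region's meta value wins.
        region_job["meta"] = {**job.get("meta", {}), **region.get("meta", {})}
        for group in region_job["groups"]:
            # Only task groups that declare count = 0 take the region's count.
            if group["count"] == 0 and "count" in region:
                group["count"] = region["count"]
        rendered.append(region_job)
    return rendered


# The multiregion stanza from the example above, expressed as plain data.
example_multiregion = {
    "regions": [
        {"name": "west", "count": 2, "datacenters": ["west-1"],
         "meta": {"my-key": "W"}},
        {"name": "east", "count": 1, "datacenters": ["east-1", "east-2"],
         "meta": {"my-key": "E"}},
    ]
}
# Hypothetical job: one "cache" group with count = 0 and a job-level meta key.
example_job = {
    "name": "example",
    "meta": {"team": "platform"},
    "groups": [{"name": "cache", "count": 0}],
}

region_jobs = render_region_jobs(example_job, example_multiregion)
for j in region_jobs:
    print(j["region"], j["datacenters"], j["groups"][0]["count"], j["meta"])
```

Deep-copying the job keeps the original untouched, which mirrors the point made below that each region receives its own independent copy rather than sharing state.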

»Rollout Strategy

Federated Nomad clusters are members of the same gossip cluster but not the same Raft cluster; they don't share their data stores or participate in a complicated "cluster of clusters" arrangement. Each region in a multi-region deployment gets an independent copy of the job, parameterized with the values of its region stanza. Nomad regions coordinate to roll out each region's deployment using rules determined by the strategy stanza.

In a multi-region deployment, regions begin in a "pending" state. Up to max_parallel regions run their deployments independently at the same time, while the rest wait. By default, Nomad deploys all regions simultaneously.

```shell-session
$ nomad job status -region east example
...
Latest Deployment
ID          = d74a086b
Status      = pending
Description = Deployment is pending, waiting for peer region

Multiregion Deployment
Region  ID        Status
east    d74a086b  pending
west    48fccef3  running

Deployed
Task Group  Auto Revert  Desired  Placed  Healthy  Unhealthy  Progress Deadline
cache       true         1        0       0        0          N/A

Allocations
No allocations placed
```
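The pending-versus-running behavior can be modeled as a small function that decides which pending regions may start. This Python sketch is a hypothetical model, not Nomad's scheduler: the state names mirror the CLI output above, a region that has finished and is blocked is assumed to no longer occupy a max_parallel slot, and max_parallel=None stands in for the default of deploying every region at once. The function name regions_to_start is illustrative.

```python
def regions_to_start(order, states, max_parallel=None):
    """Return the pending regions that may begin deploying right now.

    Hypothetical sketch: `order` is the region order from the multiregion
    stanza and `states` maps region name -> "pending", "running", "blocked",
    "successful", or "failed".
    """
    pending = [r for r in order if states[r] == "pending"]
    if max_parallel is None:
        # Default behavior: every pending region deploys at once.
        return pending
    running = sum(1 for r in order if states[r] == "running")
    free_slots = max(max_parallel - running, 0)
    # Fill free slots in declaration order.
    return pending[:free_slots]


order = ["west", "east"]
# The snapshot shown above: west is running, so east must wait.
print(regions_to_start(order, {"west": "running", "east": "pending"}, max_parallel=1))
# Once west finishes and blocks, its slot is free for east.
print(regions_to_start(order, {"west": "blocked", "east": "pending"}, max_parallel=1))
```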

Once a region completes its deployment, it checks its place within the set of regions and the state of the other region deployments, and decides whether it needs to hand off to the next region. It then blocks, waiting for the peer regions to complete. When the last region has completed its deployment, it unblocks all the other regions and marks them as successful.

```shell-session
$ nomad job status -region west example
...
Latest Deployment
ID          = 48fccef3
Status      = blocked
Description = Deployment is complete but waiting for peer region

Multiregion Deployment
Region  ID        Status
east    d74a086b  running
west    48fccef3  blocked

Deployed
Task Group  Auto Revert  Desired  Placed  Healthy  Unhealthy  Progress Deadline
cache       true         2        2       0        0          2020-06-17T13:35:49Z

Allocations
ID        Node ID   Task Group  Version  Desired  Status   Created  Modified
44b3988a  4786abea  cache       0        run      running  14s ago  13s ago
7c8a2b80  4786abea  cache       0        run      running  13s ago  12s ago
```
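The hand-off can be sketched the same way. In this hypothetical Python model (again, not Nomad's implementation), completing a region moves it to "blocked"; when the last region completes and every region is blocked, all of them become "successful" together. The function name complete_region is illustrative, and the example starts the next pending region by hand to keep the sketch focused on completion rather than scheduling.

```python
def complete_region(states, region):
    """Record that `region` finished its own deployment; return the new states.

    Hypothetical sketch of the hand-off described above: a finished region
    blocks while any peer is not yet done, and once the last region finishes,
    every blocked region is marked successful together.
    """
    if states[region] != "running":
        raise ValueError(f"region {region!r} is not running")
    new_states = dict(states)
    new_states[region] = "blocked"
    if all(state == "blocked" for state in new_states.values()):
        # The last region to finish unblocks all of its peers.
        new_states = {name: "successful" for name in new_states}
    return new_states


states = {"west": "running", "east": "pending"}
states = complete_region(states, "west")  # west blocks, waiting for its peer
states["east"] = "running"                # started by hand; matches the output above
print(states)
states = complete_region(states, "east")  # east is the last region to finish
print(states)
```

A failed region never reaches "blocked" in this model, so the final unblock cannot happen while any peer has failed.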

»Failure Is Always an Option

If a region's deployment fails, the behavior depends on the on_failure field of the strategy stanza. By default, a failed region also fails all the remaining regions. You can instead have it mark only itself as failed ("fail_local"), or have it fail all of its peer regions ("fail_all"), including rolling back those that have already completed if the job sets the auto_revert field in its update stanza.

The example below uses the default value of on_failure (an empty string). Because max_parallel = 1, the "north" region deploys first, followed by "south", and so on. But suppose the "east" region fails: both the "east" region and the "west" region would be marked failed. Because the job has an update stanza with auto_revert = true, both regions would then roll back to the previous job version.

The "north" and "south" regions would remain blocked until an operator intervenes, either by deploying a new version of the job or by running the new nomad deployment unblock command.

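```hcl
# Both blocks go inside the job block.
multiregion {

  strategy {
    # An empty string selects the default on_failure behavior.
    on_failure   = ""
    max_parallel = 1
  }

  # Regions deploy in the order they are declared.
  region "north" {}
  region "south" {}
  region "east" {}
  region "west" {}
}

update {
  # Failed regions roll back to the previous job version.
  auto_revert = true
}
```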
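The three on_failure modes can be compared with a small Python model of the four-region example above. It is a hypothetical sketch, not Nomad's code, and it makes three assumptions: "remaining regions" in the default mode means the failed region plus every region declared after it; regions that are not marked failed keep their current state (so "north" and "south" stay blocked); and, when auto_revert is set, every failed region is reported as reverted, following the description above. The function name apply_failure is illustrative.

```python
VALID_ON_FAILURE = ("", "fail_local", "fail_all")


def apply_failure(order, states, failed_region, on_failure="", auto_revert=False):
    """Return (new_states, reverted) after `failed_region` fails.

    Hypothetical model of the three on_failure modes:
      ""           -> the failed region and every region after it fail
      "fail_local" -> only the failed region fails
      "fail_all"   -> every region fails
    """
    if on_failure not in VALID_ON_FAILURE:
        raise ValueError(f"unknown on_failure value: {on_failure!r}")
    if on_failure == "fail_local":
        to_fail = [failed_region]
    elif on_failure == "fail_all":
        to_fail = list(order)
    else:
        to_fail = order[order.index(failed_region):]
    new_states = dict(states)
    for region in to_fail:
        new_states[region] = "failed"
    # With auto_revert = true, each failed region rolls back to the previous version.
    reverted = list(to_fail) if auto_revert else []
    return new_states, reverted


order = ["north", "south", "east", "west"]
# max_parallel = 1: north and south finished, east is deploying, west is waiting.
states = {"north": "blocked", "south": "blocked", "east": "running", "west": "pending"}
new_states, reverted = apply_failure(order, states, "east", on_failure="", auto_revert=True)
print(new_states)
print(reverted)
```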

»Getting Started

We're releasing Multi-Cluster Deployments as part of the Multi-Cluster and Efficiency Module in Nomad Enterprise 0.12. Learn more about Multi-Cluster Deployments on the HashiCorp Learn website. A free trial of Nomad Enterprise is also available.