Nomad 1.4 Adds Nomad Variables and Updates Service Discovery

The GA release of HashiCorp Nomad 1.4 enhances native service discovery support with health checks and introduces Nomad Variables, which let users store configuration values.

We are excited to announce HashiCorp Nomad 1.4 is now generally available. Nomad is a simple and flexible orchestrator used to deploy and manage containers and non-containerized applications. Nomad works across on-premises and cloud environments. It is widely adopted and used in production by organizations such as Cloudflare, Roblox, Q2, and Pandora.

Let’s take a look at what’s new in Nomad and the Nomad ecosystem:

- Nomad Variables

- Service discovery improvements

- ACL roles and token expiration

- UI improvements

- Community updates

»Nomad Variables

Many Nomad workloads require secrets or configuration parameters. Nomad has a template block to provide such configuration to tasks, but it previously relied on external services such as HashiCorp Consul and HashiCorp Vault to store the configuration values. That meant standing up and running separate services alongside Nomad.

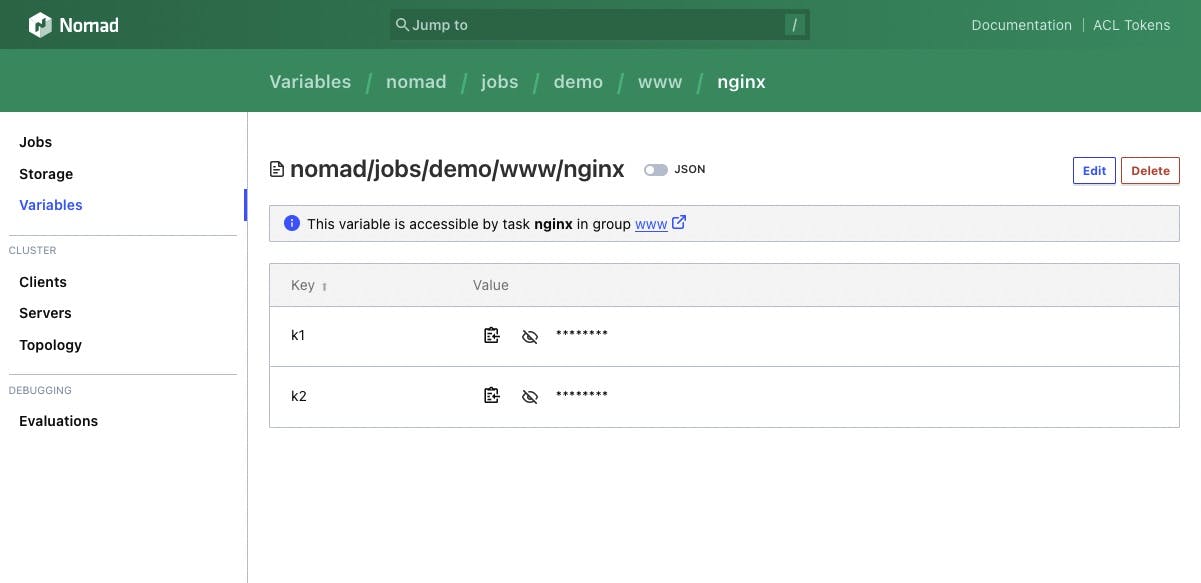

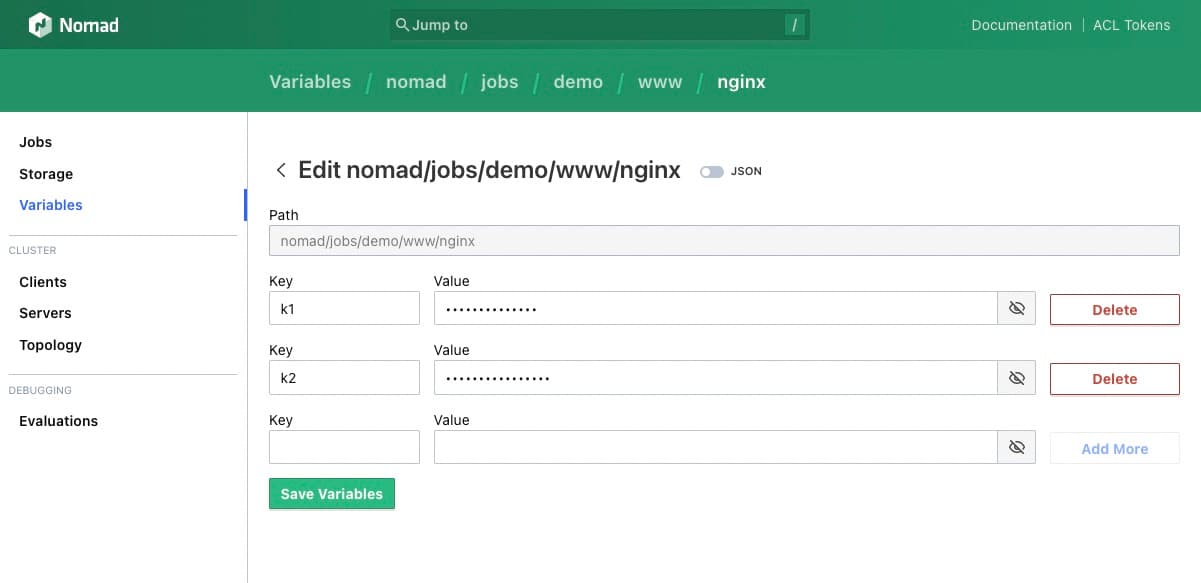

In Nomad 1.4, you can store encrypted configuration values at file-like paths directly in Nomad. These values, called Nomad Variables, can then be used across workloads in your cluster, which simplifies configuration management. Nomad Variables are encrypted at rest with AES-256, protected in transit by mTLS between Nomad clients and servers, and guarded by Nomad’s access control list (ACL) system.

You can create, read, and edit variables in the Nomad CLI or the UI.

Access to Nomad Variables is controlled by ACL policies. Nomad tasks now have workload identities signed by the Nomad server. Those workload identities automatically grant tasks access to variables at paths that match their job ID, task group, and task.

Nomad ACL policies can also be attached to jobs, groups, and tasks.
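To make the path matching concrete, here is a minimal Python sketch. It is not Nomad’s implementation: it assumes the automatically granted paths share the nomad/jobs prefix seen in the template example in this post, and the function names are hypothetical.

```python
from typing import List

# Assumed prefix, inferred from the example path "nomad/jobs/minio/storage/minio".
PREFIX = "nomad/jobs"


def implicit_variable_paths(job_id: str, group: str, task: str) -> List[str]:
    """List the paths a workload identity could match, least to most specific."""
    return [
        f"{PREFIX}/{job_id}",
        f"{PREFIX}/{job_id}/{group}",
        f"{PREFIX}/{job_id}/{group}/{task}",
    ]


def has_implicit_access(path: str, job_id: str, group: str, task: str) -> bool:
    """Return True when the variable path matches the task's own identity."""
    return path in implicit_variable_paths(job_id, group, task)


# Job "minio", task group "storage", task "minio".
for path in implicit_variable_paths("minio", "storage", "minio"):
    print(path)
```

In this sketch, a variable at any other path (app/db, for example) would not be covered by the workload identity and would need an ACL policy that grants access explicitly.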

Tasks access variables via their path using template blocks:

template {

destination = "${NOMAD_SECRETS_DIR}/env.vars"

env = true

change_mode = "restart"

data = <<EOF

{{- with nomadVar "nomad/jobs/minio/storage/minio" -}}

MINIO_ROOT_USER = {{.root_user}}

MINIO_ROOT_PASSWORD = {{.root_password}}

{{- end -}}

EOF

}

The Nomad CLI accepts variable definitions from command line flags, a file, or from stdin:

$ nomad var put app/db user=sa pass=Passw0rd!

Writing to path "app/db"

Created variable "app/db" with modify index 1007

$ nomad var get app/db

Namespace = default

Path = app/db

Create Time = 2022-08-24 18:02:59.157553 -0400 EDT

Check Index = 1007

Items

pass = Passw0rd!

user = sa

Nomad Variables also support check-and-set semantics, so you can be sure that a value hasn't been edited between the time you queried it and the time you tried to update it:

$ nomad var put -check-index=0 cas/demo a=1

Check-and-Set conflict

Your provided check-index (0) does not match the server-side index (1). The server-side item was last updated on 2022-09-14T15:29:20-07:00. If you are sure you want to perform this operation, add the -force or -check-index=1 flag before the positional arguments.
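The check-and-set behavior can be illustrated with a small in-memory Python sketch. This is not Nomad’s code: the VariableStore class and its methods are hypothetical, and it assumes that a check index of 0 means "write only if the path does not exist yet".

```python
from typing import Dict, Optional, Tuple


class CheckAndSetConflict(Exception):
    """Raised when the caller's check index is stale."""


class VariableStore:
    """In-memory stand-in for a variable store with a modify index per path."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[int, Dict[str, str]]] = {}
        self._next_index = 1

    def modify_index(self, path: str) -> int:
        """Return the current index for a path, or 0 if it does not exist."""
        return self._data[path][0] if path in self._data else 0

    def put(self, path: str, items: Dict[str, str],
            check_index: Optional[int] = None) -> int:
        """Write items; with check_index set, refuse if the index has moved."""
        current = self.modify_index(path)
        if check_index is not None and check_index != current:
            raise CheckAndSetConflict(
                f"check-index ({check_index}) does not match "
                f"the server-side index ({current})"
            )
        index = self._next_index
        self._next_index += 1
        self._data[path] = (index, dict(items))
        return index


store = VariableStore()
store.put("cas/demo", {"a": "1"}, check_index=0)      # path is new: index becomes 1
try:
    store.put("cas/demo", {"a": "2"}, check_index=0)  # stale index: rejected
except CheckAndSetConflict as err:
    print(err)
store.put("cas/demo", {"a": "2"}, check_index=1)      # matches: write succeeds
```

The design point is that the writer states which version it last saw, so a concurrent edit turns into an explicit conflict instead of a silent overwrite.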

»Service Discovery Improvements

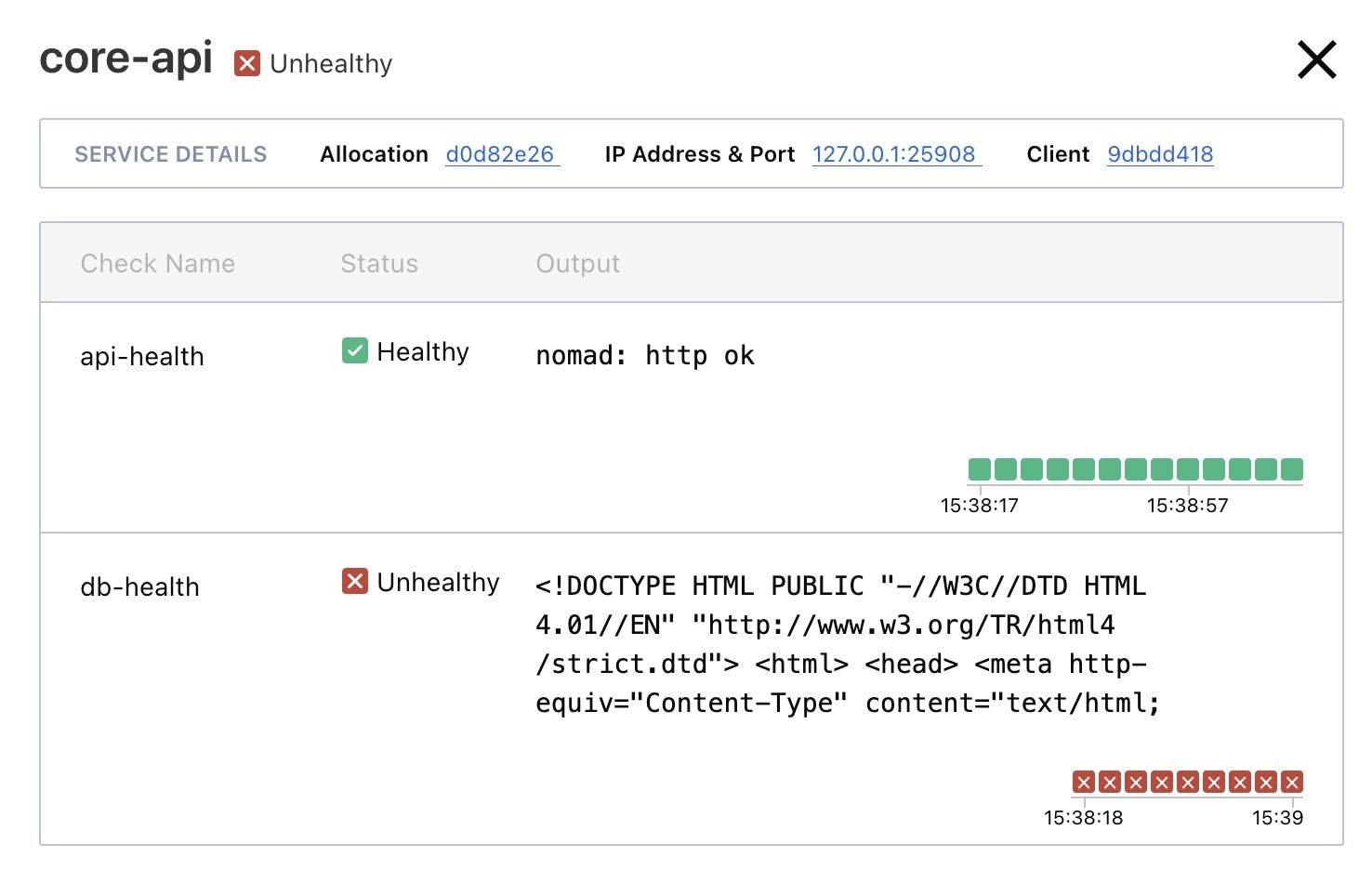

Expanding on Nomad's native service discovery support, Nomad 1.4 introduces the ability to configure health checks for native Nomad services.

Users can declare HTTP or TCP checks that monitor the health of a service using the Nomad provider. These checks inform the deployment process, so bad versions of a task are not rolled out. If a service fails a health check, it is removed from Nomad service discovery until it becomes healthy again. This gives users finer-grained control over deployments and service discovery logic, and lets more users benefit from native Nomad service discovery in production environments.

More detailed information can be found in the checks documentation.

service {

name = "web"

port = "http"

provider = "nomad"

check {

type = "http"

path = "/"

interval = "5s"

timeout = "1s"

method = "GET"

}

}
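The effect of health checks on discovery can be sketched in a few lines of Python. This is a conceptual illustration only: the function name and the instance addresses are made up, and the status strings mirror the "success" and "failure" values that appear in the CLI output in this post.

```python
from typing import Dict, List


def discoverable_instances(check_results: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the addresses whose checks all report "success".

    check_results maps an instance address to {check name: status}.
    """
    return sorted(
        address
        for address, checks in check_results.items()
        if all(status == "success" for status in checks.values())
    )


# Hypothetical instances of the "web" service and their latest check results.
results = {
    "10.0.0.1:8080": {"healthz": "success", "index-page": "success"},
    "10.0.0.2:8080": {"healthz": "failure", "index-page": "success"},
}
print(discoverable_instances(results))
```

Once the failing check on the second instance reports success again, the same function would list both addresses, which matches the "until it becomes healthy again" behavior described above.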

To help operators understand the current status of an allocation, check status information for Nomad services is now reported in the alloc status command.

$ nomad alloc status 45

Nomad Service Checks:

Service Task Name Mode Status

Database task db_tcp_probe readiness success

Web (group) healthz healthiness failure

Web (group) index-page healthiness success

When a summary of check statuses is not enough, the new alloc checks sub-command can be used to view complete check result information, including execution output from failing checks.

$ nomad alloc checks 45

Status of 3 Nomad Service Checks

ID = e0499dc450dcc5de2040a1bd7c04b736

Name = db_tcp_probe

Group = example.group[0]

Task = task

Service = database

Status = success

Mode = readiness

Timestamp = 2022-08-22T10:03:32-05:00

Output = nomad: tcp ok

ID = 19fccb5b660a6f51d420fc6808674971

Name = index-page

Group = example.group[0]

Task = (group)

Service = web

Status = success

StatusCode = 200

Mode = healthiness

Timestamp = 2022-08-22T10:03:32-05:00

Output = nomad: http ok

ID = 77fb619eb1123f3b68a26081d442be60

Name = healthz

Group = example.group[0]

Task = (group)

Service = web

Status = failure

Mode = healthiness

Timestamp = 2022-08-22T10:03:32-05:00

Output = nomad: Get "http://:9999/": dial tcp :9999: connect: connection refused

The Nomad UI has been updated to allow users to see services within the context of a job or an allocation. When looking at an Allocation page, users can view the services running within that allocation. Clicking on a service allows a user to observe the status of its health checks or jump to the related service in the Consul UI.

»ACL Roles and Token Expiration

Nomad uses an ACL system to control permissions within the cluster. Traditionally, users were granted access via an ACL token linked to one or more ACL policies. Now, instead of linking to ACL policies, a token can be associated with an ACL role.

ACL roles provide an easy way to collect ACL policies. For instance, if you have a class of users that need the policy write-to-apps-ns and the policy read-ops-ns, you could create a named role called app-engineer that would have both policies attached. If, in the future, this class of user needs an additional policy attached or a policy rescinded, simply updating the policies linked to the role would automatically change user permissions. This allows simple updates to permissions without having to issue new ACL tokens.

For more information on ACL roles, see the API documentation.
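As a rough model of why roles simplify permission changes, the following Python sketch resolves a token’s effective policies through its roles. The Token class, the effective_policies helper, and the read-metrics-ns policy are hypothetical; only the app-engineer role and its two policies come from the example above.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple


@dataclass(frozen=True)
class Token:
    """A token that references roles and, optionally, policies directly."""
    name: str
    roles: Tuple[str, ...] = ()
    policies: Tuple[str, ...] = ()


def effective_policies(token: Token, roles: Dict[str, Set[str]]) -> Set[str]:
    """Union of the token's own policies and those of every linked role."""
    resolved = set(token.policies)
    for role in token.roles:
        resolved |= roles.get(role, set())
    return resolved


roles = {"app-engineer": {"write-to-apps-ns", "read-ops-ns"}}
token = Token(name="alice", roles=("app-engineer",))
print(sorted(effective_policies(token, roles)))

# Updating the role changes what the existing token can do; no new token is issued.
roles["app-engineer"].add("read-metrics-ns")  # hypothetical extra policy
print(sorted(effective_policies(token, roles)))
```

Because the token stores only the role name, the lookup happens at resolution time, so every holder of the role picks up the change at once.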

In addition to roles, the ACL system has also been updated with ACL token expiration. When an ACL token is created, Nomad operators can now include an optional ttl value to specify the token’s time-to-live.

nomad acl token create -name="ephemeral-token" -policy=example-policy -ttl=8h

Once the token expires, it will no longer provide access to the specified role or policies.
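The expiration rule can be modeled with a short Python sketch. The ExpiringToken class is hypothetical, and treating the token as expired at the exact instant the TTL elapses is an assumption of this sketch, not a documented Nomad detail.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class ExpiringToken:
    """A token with an optional time-to-live measured from its creation time."""
    name: str
    create_time: datetime
    ttl: Optional[timedelta] = None

    def expiration_time(self) -> Optional[datetime]:
        """Return when the token expires, or None if it never does."""
        return None if self.ttl is None else self.create_time + self.ttl

    def is_expired(self, now: datetime) -> bool:
        """A token without a TTL never expires in this sketch."""
        expires = self.expiration_time()
        return expires is not None and now >= expires


created = datetime(2022, 10, 1, 9, 0, tzinfo=timezone.utc)
token = ExpiringToken("ephemeral-token", created, ttl=timedelta(hours=8))
print(token.is_expired(created + timedelta(hours=7)))  # still within the 8h TTL
print(token.is_expired(created + timedelta(hours=8)))  # TTL has elapsed
```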

ACL roles and token expiration are improvements to the core of Nomad’s ACL system. We plan to use these changes to implement single sign-on (SSO) and OIDC authentication in a future Nomad release.

»UI Improvements

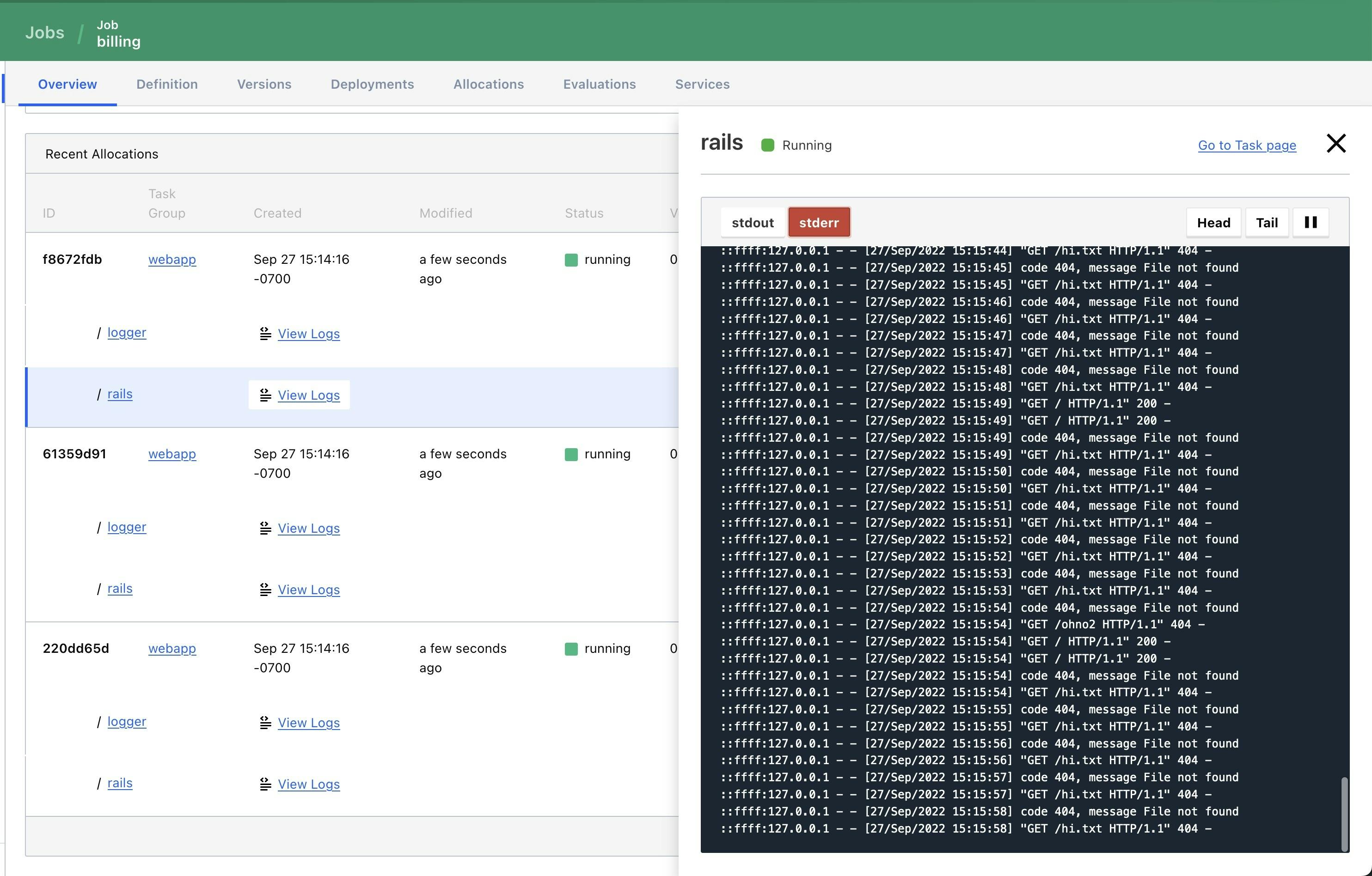

The Nomad UI has been updated to make navigating to specific tasks and viewing logs easier. From the job and task group pages, Nomad will now show links to individual tasks under each allocation. Users can navigate to a specific task with a single click and view each task’s logs without switching pages. This means that there are fewer clicks to get to the information you need.

»Other Recent Nomad Updates

Since Nomad 1.3 was released in May 2022, we have released exciting new additions to the product. In case you missed them, these recent improvements include:

»Simple Load Balancing of Nomad Services

When querying the Nomad Services API endpoint, users can now provide a new choose parameter. This value specifies the number of instances of a service to return and a key to use to select instances using rendezvous hashing.

curl 'localhost:4646/v1/service/redis?choose=2|abc123'

In practice, this allows for simple load balancing between Nomad services to be implemented using Nomad’s template functionality.

template {

data = <<EOH

# Configuration for 1 redis instance, as assigned via rendezvous hashing.

{{$allocID := env "NOMAD_ALLOC_ID" -}}

{{range nomadService 1 $allocID "redis"}}

server {{ .Address }}:{{ .Port }};

{{- end }}

EOH

}
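For readers unfamiliar with rendezvous hashing, here is a minimal Python sketch of the idea behind the choose parameter. It is an illustration under stated assumptions: SHA-256 stands in for whatever hash Nomad uses internally, and the choose function and instance addresses are hypothetical.

```python
import hashlib
from typing import List


def choose(instances: List[str], count: int, key: str) -> List[str]:
    """Pick `count` instances for `key` using rendezvous (highest-score) hashing.

    Every (key, instance) pair gets a score; the highest-scoring instances win.
    """
    def score(instance: str) -> int:
        # SHA-256 is an assumption of this sketch, not Nomad's actual hash.
        digest = hashlib.sha256(f"{key}|{instance}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    return sorted(instances, key=score, reverse=True)[:count]


redis_instances = ["10.0.0.1:6379", "10.0.0.2:6379", "10.0.0.3:6379"]
print(choose(redis_instances, 1, "abc123"))
```

The same key always maps to the same instance while that instance exists, and removing an instance that was not selected leaves the selection unchanged. Using the allocation ID as the key, as the template above does, therefore spreads allocations across instances while keeping each allocation’s choice stable.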

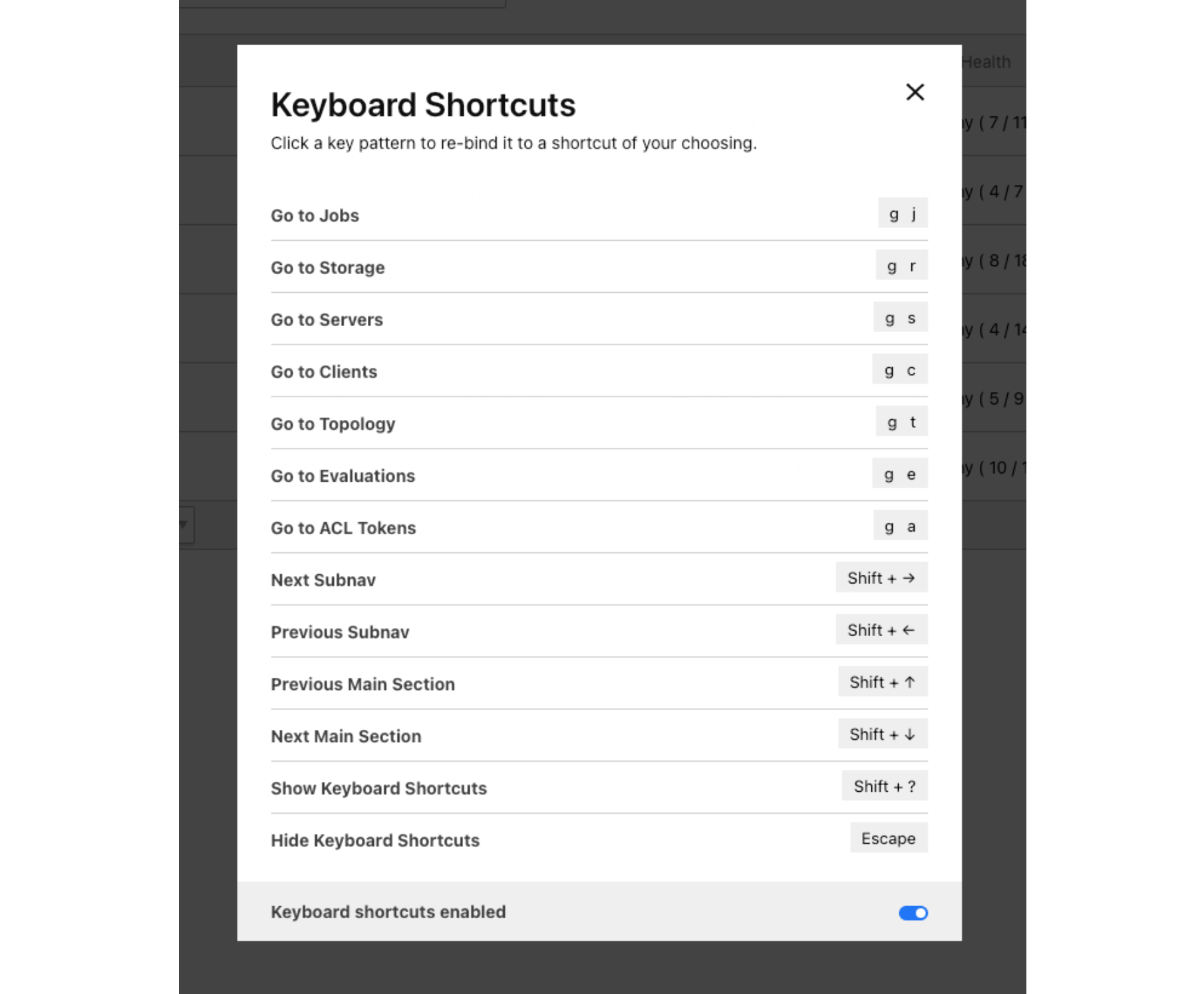

»Keyboard Navigation

A robust keyboard navigation system has been added to the Nomad UI. This allows users to quickly jump between various pages of the UI without ever touching their mouse, making navigating the UI even faster.

Keyboard shortcuts can be rebound to different keys or disabled completely. For more details, see the Keyboard Navigation Learn Guide.

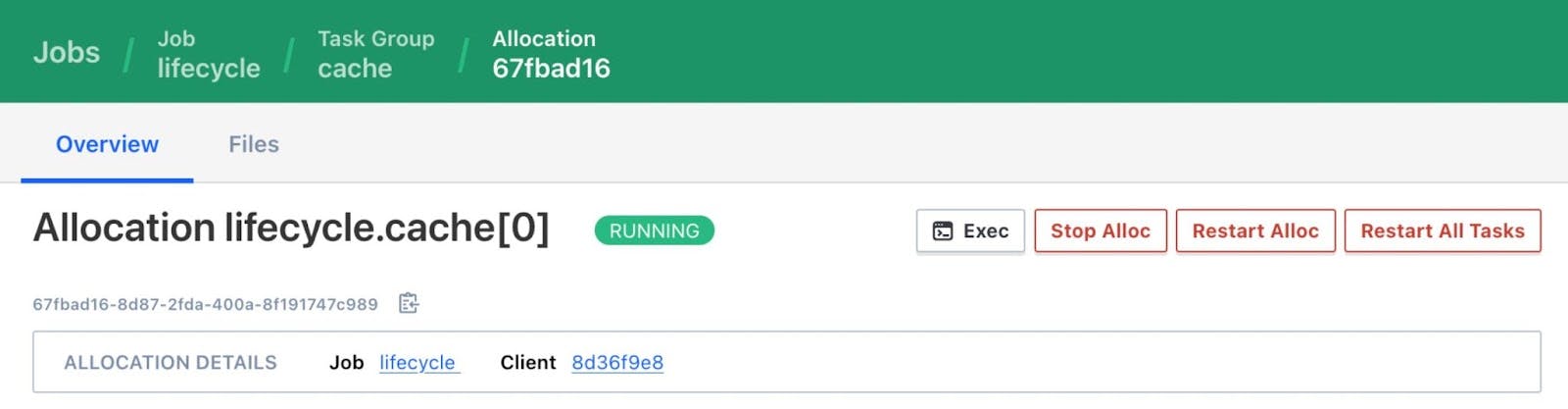

»Restart All Allocation Tasks

Nomad now includes more options to restart the tasks associated with an allocation.

Any currently running tasks in an allocation can be restarted using either the command nomad alloc restart or the “Restart Alloc” button in the Nomad UI. This matches existing behavior.

However, for certain Nomad jobs, re-running pre-start and post-start tasks can be necessary to ensure a healthy restart. To handle restarts on these jobs, we have added the ability to restart all of the tasks in an allocation, including any completed tasks or sidecars. These restarted tasks will follow the standard lifecycle order, beginning with pre-start tasks. This can be done via the new “Restart All Tasks” button in the UI, or with the new -all-tasks flag.

nomad alloc restart -all-tasks 67fbad16

»Templating Improvements

Various improvements have been made to Nomad’s template functionality:

- A template’s change_mode can be set to "script" to allow custom scripts to be executed when a template is updated. This allows Nomad tasks to respond in more ways to updates to Consul, Vault, or Nomad.

- Nomad agents now have more fault-tolerant defaults to keep Nomad workloads running even when a Nomad client loses connection to Consul, Vault, or the Nomad servers.

- Templates support uid and gid parameters to specify the template owner’s user and group on Unix systems. This allows more fine-grained control over file permissions when using Nomad’s template functionality.

»Sentinel Improvements (Enterprise)

In Nomad Enterprise, users can now use information from Nomad namespaces and ACL tokens in Sentinel policies. This allows users to write Sentinel policies that take into account information such as Namespace metadata or ACL Roles. For example, you can now write a policy to ensure that only users with a specific ACL role can use CSI volumes, or ensure a task’s user value is specified in namespace metadata.

»Operational Improvements

Various improvements have been made to Nomad cluster management:

- Scheduler configuration can be read and updated with new get-config and set-config CLI commands.

- Nomad evaluations can now be deleted via API or CLI.

- When bootstrapping a cluster, an operator-generated bootstrap token may be passed to the acl bootstrap command, as shown here:

nomad acl bootstrap path/to/root.token

»Community Updates

Nomad is committed to being an open source first project, and we’re always looking for new open source contributors. If you’re familiar with Go or interested in learning or honing your Go skills, we invite you to join the group of Nomad contributors helping to improve the product.

Looking for a place to start? Head to the Nomad contribute page for a curated list of good first issues. If you’re a returning Nomad contributor looking for an interesting problem to solve, take a glance at issues labeled “help-wanted”. For help getting started, check out the Nomad contributing documentation or comment directly on the issue.

»Next Steps

We encourage you to try out the new features in Nomad 1.4:

- Download Nomad 1.4 from the project website.

- Learn more about Nomad with tutorials on the HashiCorp Learn site.

- Contribute to Nomad by submitting a pull request for a GitHub issue with the “help wanted” or “good first issue” label.

- Participate in our community forums, office hours, and other events.
