We are excited to announce that HashiCorp Nomad 1.3 is now generally available. Nomad is a simple and flexible orchestrator used to deploy and manage containers and non-containerized applications. Nomad works across on-premises and cloud environments. It is widely adopted and used in production by organizations such as Cloudflare and Q2.

Let’s take a look at what’s new in Nomad and in the Nomad ecosystem, including:

- Native service discovery

- Edge and reconnected workload support

- CSI general availability

- Nomad Pack improvements

- New Evaluations UI

- Quality of life improvements

»Native Service Discovery

In previous versions of Nomad, service discovery could be accomplished only through Nomad’s integration with Consul or via third-party tools. With Nomad 1.3, Nomad tasks can easily make requests to other tasks by discovering address and port information through template stanzas or the Nomad API. This allows users who do not need a full service mesh, such as Consul Connect, to do simple service discovery using only Nomad.

To enable Nomad service discovery, set the new provider option in the service stanza to "nomad". This will register a new Nomad-only service when the job is deployed. By default, services use the "consul" provider to ensure backwards compatibility.

service {
  name     = "api"
  provider = "nomad"
  port     = "api"
}

Services using the "nomad" provider provide a subset of the functionality provided by Consul services. In the update stanza, only "task_states" is a valid value for the health_check parameter and additional service-level health checks are not permitted.

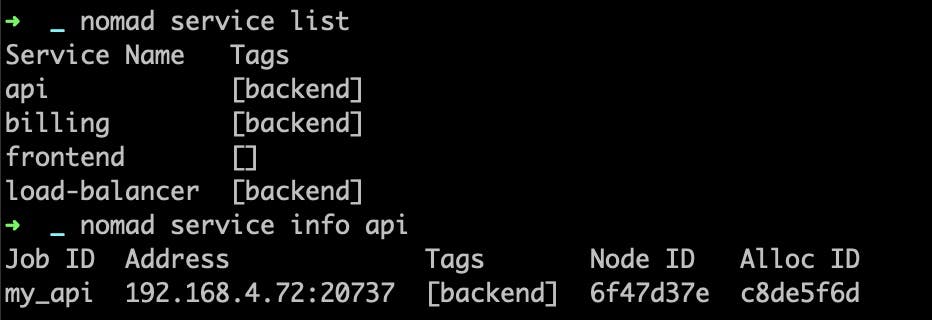

Once a task with a Nomad service has been deployed, the service can be viewed via the Nomad CLI and API.
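As a rough sketch of the API side, the Python snippet below reads a service's registrations over HTTP. It assumes the agent is reachable at `http://127.0.0.1:4646`, that registrations are served from `/v1/service/<name>`, and that each entry carries `Address` and `Port` fields. The function names are hypothetical and the sample payload is made up for illustration.

```python
import json
import urllib.request

NOMAD_ADDR = "http://127.0.0.1:4646"  # assumed local agent address


def parse_registrations(payload):
    """Return (address, port) pairs from a JSON list of service registrations."""
    return [(entry["Address"], entry["Port"]) for entry in json.loads(payload)]


def fetch_registrations(service_name, nomad_addr=NOMAD_ADDR):
    """Fetch the registrations for one service from a running Nomad agent."""
    url = f"{nomad_addr}/v1/service/{service_name}"
    with urllib.request.urlopen(url, timeout=5) as response:
        return parse_registrations(response.read())


# Illustrative payload only; a real response contains more fields.
SAMPLE = '[{"ServiceName": "api", "Address": "10.5.2.45", "Port": 32492}]'
print(parse_registrations(SAMPLE))
```

Only `parse_registrations` runs here, so the sketch works without a cluster; `fetch_registrations("api")` would need a reachable agent.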

To access the service from a different job, use the template stanza. This allows users to write service addresses to a file within each allocation, then send the task a signal or restart command if the addresses are updated. The new nomadService keyword allows users to get a list of addresses and ports associated with a Nomad service.

Here is an example template:

template {
  data = <<EOH
http {
  server {
    listen 80;
    location / {
      {{ range nomadService "api" }}
      proxy_pass http://{{ .Address }}:{{ .Port }};
      {{ end }}
    }
  }
}
EOH

  destination = "local/api-servers"
}

The template above might output the following file to configure an NGINX proxy task:

http {
  server {
    listen 80;
    location / {
      proxy_pass http://10.5.2.45:32492;
      proxy_pass http://10.2.6.61:32904;
      proxy_pass http://10.2.6.61:32847;
    }
  }
}

The task declared in this job could now access the addresses and ports of the service declared in a separate Nomad job. If the job that declared the Nomad service is re-deployed to a different node, these values will be updated automatically.
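To make the update behavior concrete, here is a small Python model of what the template step does: render the addresses into a config fragment, then compare it with the previous render to decide whether the task needs a signal or restart. This is only an illustration, not how Nomad implements templates internally, and `render_locations` and `needs_signal` are hypothetical names.

```python
def render_locations(services):
    """Render one proxy_pass line per (address, port) pair, as the template does."""
    return "\n".join(f"proxy_pass http://{addr}:{port};" for addr, port in services)


def needs_signal(previous_render, services):
    """Return True when the newly rendered text differs from the previous render."""
    return render_locations(services) != previous_render


before = render_locations([("10.5.2.45", 32492)])

# The service's job is re-deployed and lands on a different node and port.
after_move = [("10.2.6.61", 32904)]

print(needs_signal(before, after_move))               # True
print(needs_signal(before, [("10.5.2.45", 32492)]))   # False
```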

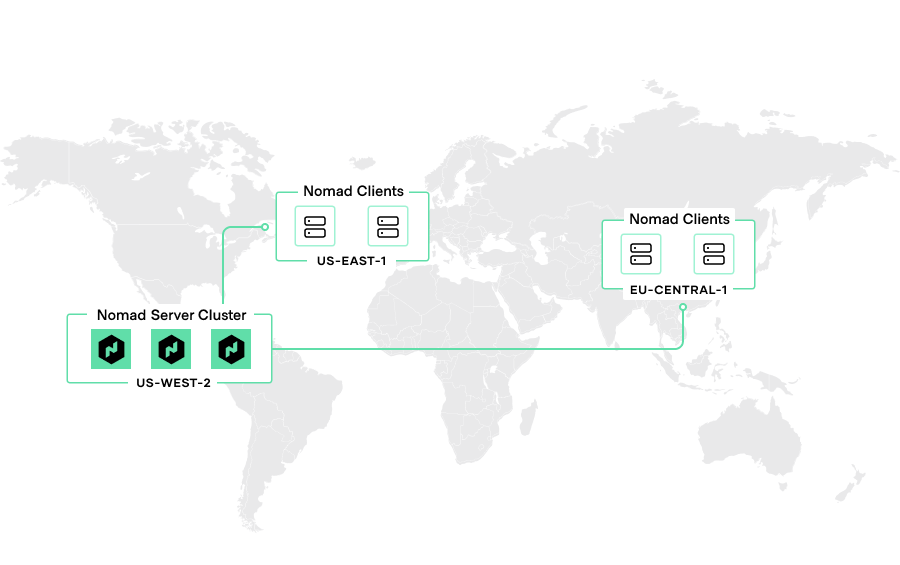

Native service discovery in Nomad is not meant to replace Consul for most users. The Nomad-Consul integration will continue to improve and expand going forward. Nomad users already on Consul are encouraged to continue using the integration, as Consul is a more fully featured service discovery and service mesh solution. Nomad service discovery is targeted at users who have simple routing needs or architectures that prohibit the use of Consul, such as geographically distributed edge workloads.

»Edge and Reconnected Workload Support

When a client is running, Nomad servers use a heartbeat mechanism to monitor the client’s health and liveness. Nomad supports deploying clients that are distributed across a significant physical distance or on a different network than their configured servers. These edge clients are more susceptible to failing their heartbeat check due to latency or loss of network connectivity. By default, if the heartbeats fail, the Nomad servers assume the client node has failed, stop assigning it new tasks, and start creating replacement allocations.

Nomad 1.3 introduces a new optional configuration attribute max_client_disconnect that allows operators to more easily start up rescheduled allocations for nodes that have experienced network latency issues or temporary connectivity loss. This option enables better support for single cluster deployments with edge clients that are geographically distributed or at risk of unstable network connections.

To enable this setting, you can set the max_client_disconnect attribute in the group stanza of your job file:

job "docs" {
  group "example" {
    max_client_disconnect = "1h"
  }
}

By default, if max_client_disconnect is not set and a node fails its heartbeat, Nomad sets the node status as down at the server and marks the allocations as lost.

If max_client_disconnect is set on an allocation’s group, and a client node has been disconnected for longer than heartbeat_grace but less than the max_client_disconnect duration, Nomad sets the client node status to disconnected and its allocations’ statuses to unknown. If the node reconnects before the max_client_disconnect duration ends, it transitions back to ready status, and the client’s allocations transition gracefully from unknown back to running instead of restarting as they would have in previous versions of Nomad.

If the client node is disconnected for longer than the max_client_disconnect duration, Nomad will mark the client node as down and the allocations as lost. Upon reconnecting, the client’s allocations will restart if they have not been rescheduled elsewhere.
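The three cases above can be summarized as a small decision function. The Python sketch below is a simplified model of the rules as described in this post, not Nomad's scheduler code: `statuses_while_disconnected` is a hypothetical name, the 10-second grace period is an assumed value, and the exact boundary (a disconnect lasting precisely max_client_disconnect) is treated as down here because the text does not specify it.

```python
from datetime import timedelta


def statuses_while_disconnected(elapsed, heartbeat_grace, max_client_disconnect=None):
    """Return (node_status, allocation_status) after `elapsed` without heartbeats."""
    if elapsed <= heartbeat_grace:
        # Still within the grace period: nothing changes yet.
        return ("ready", "running")
    if max_client_disconnect is not None and elapsed < max_client_disconnect:
        # Disconnected, but within the window the group tolerates.
        return ("disconnected", "unknown")
    # Either the option is unset or the window has been exceeded.
    return ("down", "lost")


grace = timedelta(seconds=10)  # assumed grace period
limit = timedelta(hours=1)     # matches max_client_disconnect = "1h"

print(statuses_while_disconnected(timedelta(minutes=5), grace))         # ('down', 'lost')
print(statuses_while_disconnected(timedelta(minutes=5), grace, limit))  # ('disconnected', 'unknown')
print(statuses_while_disconnected(timedelta(hours=2), grace, limit))    # ('down', 'lost')
```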

»CSI General Availability

Nomad's Container Storage Interface integration can manage external storage volumes for stateful services running inside your cluster. With Nomad 1.3, we are excited to announce that support for CSI is now generally available.

Nomad CSI now supports the topology feature. This is useful when you want to specify where a CSI volume should be placed within your cluster. For instance, the code shown below from a volume.hcl file using the AWS EBS CSI driver sets the volumes to be mounted in the zone "us-east-1b". This request is passed to the EBS CSI provider, which properly places the volume in this availability zone.

topology_request {
  required {
    topology {
      segments {
        "topology.ebs.csi.aws.com/zone" = "us-east-1b"
      }
    }
  }
}

Additionally, Nomad now supports passing secrets into CSI-related commands for creating, deleting, and listing CSI snapshots via the CLI.

$ nomad volume snapshot list -secret someKey=someValue

With these features, stability improvements, and bug fixes, CSI is ready to be used for production workloads on Nomad. We want to thank everyone in the Nomad community for their patience and invaluable feedback while CSI was in beta.

»New Evaluations UI

Nomad 1.3 introduces a new user interface for viewing evaluation information. This allows Nomad users to quickly view the status of recent evaluations for the entire cluster, and dig into information on a specific evaluation.

Clicking on the new “Evaluations” link in the sidebar will show you a list of recent evaluations across all the jobs you can see in your cluster. Evaluations can be filtered by status and type, and searched for by evaluation ID, related job ID, related node ID, or the reason for triggering.

Selecting an evaluation reveals details including:

- The evaluation ID

- The evaluation status

- Links to the associated job or node

- The evaluation priority level

- Placement failure information

- A visual display of related evaluations

These pages allow Nomad operators to troubleshoot their Nomad cluster quickly using the UI, instead of exclusively relying on the CLI or API for evaluation information.

»Nomad Pack Improvements

Since the initial release of Nomad Pack as a Tech Preview last year, we have made several quality of life improvements to Nomad Pack. With these improvements, Nomad users can better integrate Nomad Pack into CI/CD workflows and more easily write and debug new packs.

When using a CI/CD pipeline, users often need to consume secrets that are not checked into their version control system. This is often achieved by setting environment variables in build processes. Nomad Pack can now consume these environment variables as inputs when rendering a template or deploying a job. For instance, in the following command, the pack variable my_key is set through the environment variable NOMAD_PACK_VAR_my_key, then used when deploying Prometheus.

export NOMAD_PACK_VAR_my_key=shhh_its_a_secret
nomad-pack run prometheus
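The mapping from environment variables to pack variables can be pictured as a prefix filter. The Python sketch below is an illustration of that idea only, not Nomad Pack's implementation; `pack_vars_from_env` and the `api_token` variable are hypothetical.

```python
PREFIX = "NOMAD_PACK_VAR_"


def pack_vars_from_env(environ):
    """Collect entries named NOMAD_PACK_VAR_<name> as a {name: value} mapping."""
    return {
        name[len(PREFIX):]: value
        for name, value in environ.items()
        if name.startswith(PREFIX) and len(name) > len(PREFIX)
    }


# Hypothetical CI environment; only the prefixed entry becomes a pack variable.
ci_env = {"NOMAD_PACK_VAR_api_token": "shhh_its_a_secret", "HOME": "/home/ci"}
print(pack_vars_from_env(ci_env))
```

In a real pipeline the same function could be given `os.environ`, which keeps the secret out of both the command line and version control.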

Additionally, packs can now be rendered to a specific directory in the file system using the -o or --to-dir flags.

nomad-pack render prometheus -o ./my-job-files

Nomad Pack will now handle namespace inputs similarly to the Nomad CLI. Namespaces can be set using the NOMAD_NAMESPACE environment variable, the --namespace flag, or manually in the jobspec template.

To deploy a pack to the dev namespace, you can use either of the following commands:

export NOMAD_NAMESPACE=dev
nomad-pack run prometheus

nomad-pack run prometheus --namespace=dev
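One way to think about how these inputs combine is a simple precedence rule. The Python sketch below assumes the flag wins over the environment variable and that "default" is used when neither is set, mirroring how the Nomad CLI documents its own namespace flag. It does not model a namespace written directly in the jobspec template, and `resolve_namespace` is a hypothetical helper.

```python
def resolve_namespace(flag_value=None, environ=None):
    """Pick a namespace: explicit flag first, then NOMAD_NAMESPACE, then "default"."""
    environ = environ or {}
    if flag_value:
        return flag_value
    return environ.get("NOMAD_NAMESPACE") or "default"


print(resolve_namespace(environ={"NOMAD_NAMESPACE": "dev"}))   # dev
print(resolve_namespace(flag_value="dev"))                     # dev
print(resolve_namespace())                                     # default
```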

To make it simpler to get started writing custom packs, a new generate command has been added to create a basic pack or registry on your local filesystem. This gives new users a head start when adding a pack by automatically writing boilerplate code and demonstrating best practices. For example, to start writing a pack called ci-runner, you would run the following command:

nomad-pack generate pack ci-runner

Users on macOS can now install Nomad Pack using Homebrew with brew install nomad-pack, making it easier to start using packs.

Additionally, Nomad Pack has new template helper methods and better error messages, among other improvements.

Nomad Pack helps you easily run common applications, templatize your applications, and share configuration with the Nomad community. To get started, check out the Nomad Pack repository on GitHub, or read the Nomad Pack HashiCorp Learn guides for a walkthrough of using and extending Nomad Pack.

»More New Features

Nomad 1.3 also includes various quality of life improvements and bug fixes. Highlights include:

- A new address field in the service stanza that allows Nomad or Consul services to be registered with a custom .Address to advertise. The address can be an IP address or domain name.

- Easier API requests from the command line with nomad operator api

- Support for Linux Control Groups (cgroups) V2

- API endpoints for jobs, volumes, allocations, evaluations, deployments, and tokens now support pagination and a new filter parameter, allowing for more specific and efficient API queries.

- Task drivers can now be enabled or disabled for specific namespaces

- Fingerprinting is supported on DigitalOcean Droplets
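For the pagination and filter support mentioned in the list above, a query is mostly a matter of adding parameters to the list endpoint. The Python sketch below builds such a URL; it assumes the parameters are named `per_page`, `next_token`, and `filter`, and the filter expression shown is only an illustrative guess at a useful query. `list_url` is a hypothetical helper.

```python
from urllib.parse import urlencode


def list_url(path, per_page=None, next_token=None, filter_expr=None):
    """Build a list-endpoint path with optional pagination and filter parameters."""
    params = {}
    if per_page is not None:
        params["per_page"] = per_page
    if next_token:
        params["next_token"] = next_token
    if filter_expr:
        params["filter"] = filter_expr
    # urlencode percent-encodes the expression so it survives as one parameter.
    return f"{path}?{urlencode(params)}" if params else path


print(list_url("/v1/jobs", per_page=10, filter_expr='Status == "running"'))
```

Encoding the expression in code avoids the quoting mistakes that are easy to make when typing such a URL by hand in a shell.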

See the Nomad 1.3 Changelog for a full list of changes.

»Other Recent Updates

Since Nomad 1.2 was released in November 2021, we have made some exciting new additions to Nomad and its ecosystem. Some of these improvements deserve mention even though they were released prior to Nomad 1.3. So, in case you missed it, here are some of these recent improvements:

»OpenAPI Support

The Nomad OpenAPI project was released. Nomad endpoints are described using the OpenAPI (Swagger) spec, allowing for automatic generation of documentation and SDKs in various languages. Golang, Java, JavaScript, Python, Ruby, Rust, and TypeScript clients have already been generated. Visit the Nomad OpenAPI GitHub repository for information on how to contribute or write an integration of your own.

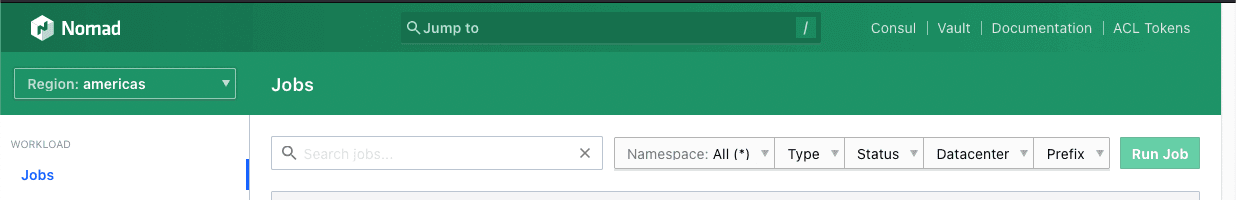

»UI Improvements

The Nomad UI received a number of upgrades:

Users can now add links to Consul and Vault in the Nomad header.

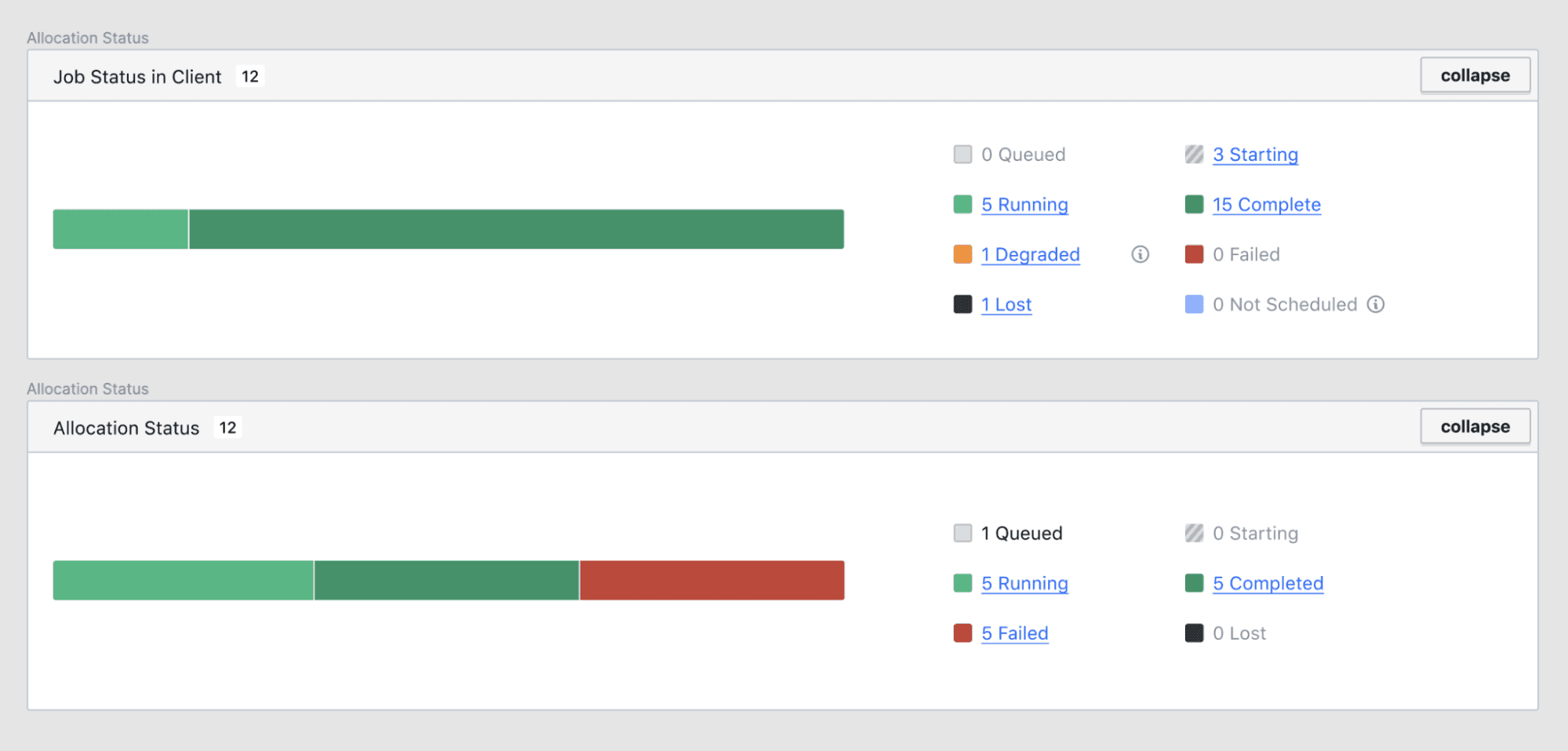

You can also jump directly to allocations filtered by status with new links on the jobs page.

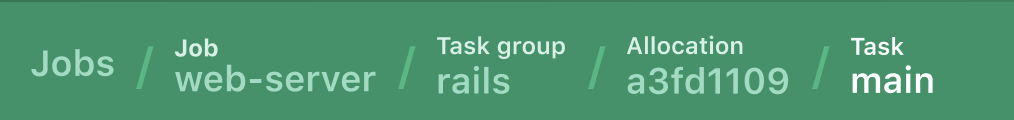

And you can navigate more easily with improved breadcrumbs.

The UI can even be completely disabled by editing the new Nomad UI config stanza.

»Evaluations and Deployments

Inspecting evaluations and deployments has gotten easier with improvements to the API endpoints. Both v1/evaluations and v1/deployments now support pagination.

nomad operator api '/v1/deployments?per_page=10'
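Walking through every page usually means repeating the request until no continuation token comes back. The Python sketch below shows that loop in isolation. It assumes each response yields a token for the next page (which the caller would send back as `next_token`), and it uses a fake in-memory listing instead of a real cluster; `iter_pages` and the `PAGES` data are hypothetical.

```python
def iter_pages(fetch_page):
    """Yield every item by following next tokens until none is returned.

    `fetch_page(next_token)` must return a tuple `(items, next_token)`.
    """
    token = None
    while True:
        items, token = fetch_page(token)
        yield from items
        if not token:
            break


# Fake three-item listing served two at a time, standing in for the API.
PAGES = {None: (["d1", "d2"], "tok-2"), "tok-2": (["d3"], None)}
print(list(iter_pages(lambda token: PAGES[token])))
```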

Additionally, users can more easily query Nomad for a list of all the evaluations in a cluster using the new command: nomad eval list.

»Advanced Operator Actions and Cluster Configuration

We also made various quality of life improvements for users configuring, troubleshooting, or fixing their Nomad clusters:

- Job and allocation shutdown delays can now be bypassed using the -no-shutdown-delay flag. This allows users to quickly shut off misbehaving jobs.

- The new -eval-priority flag can be passed into the job run and job stop commands. This allows operators to set a high priority when it is critical that a job be immediately deployed or stopped.

- By using RejectJobRegistration, Nomad operators can automatically reject any new job registration, dispatch, or scaling attempts. This can help shed load coming from automated processes or other users in the organization.

- The number of active schedulers can be configured on the fly using the /agent/schedulers/config endpoint, allowing for performance tuning without restarting servers.

- Consul Template can be more easily tuned with configurable values for consul_retry, vault_retry, max_stale, wait, wait_bounds, and block_query_wait in the Nomad client. Additionally, job specs can provide wait values to Consul Template as well.

»Next Steps

We encourage you to experiment with the new features in Nomad 1.3 and we are eager to see how these new features enhance your Nomad experience. If you encounter an issue, please file a new bug report in GitHub and we'll take a look.

Finally, on behalf of the Nomad team, I’d like to conclude with a big “thank you” to our amazing community! Your dedication and bug reports help us make Nomad better. We are deeply grateful for your time, passion, and support. From here, you can:

- Download Nomad 1.3 from the project website.

- Learn more about Nomad with tutorials on the HashiCorp Learn site.

- Walk through a step-by-step tutorial on scheduling edge services with Nomad’s native service discovery.

- Contribute to Nomad by submitting a pull request for a GitHub issue with the “help wanted” or “good first issue” label.

- Participate in our community forums, office hours, and other events.