Note: We updated the scalability benchmark with the 2 Million Container Challenge.

Intro

Cluster schedulers promise us ease of deployment with ultimate scalability. We designed an ambitious challenge to test these promises: schedule one million containers. We call this the Million Container Challenge (C1M).

HashiCorp prides itself on creating technically excellent software, and the C1M is a test to showcase this. We tested Nomad against the C1M to ensure that we meet the needs of our users at any scale.

A cluster of five Nomad servers scheduled one million containers in less than five minutes, a rate of 3,750 containers per second. Details and observations of this benchmark are explained below.

Thank you to Google for providing the credits and support necessary to run the infrastructure for the C1M on Google Cloud.

C1M Setup

We ran both a C100K (100,000 containers) and a C1M with the following cluster configurations. For each cluster configuration, we ran five Nomad servers. The cluster size below is the number of Nomad clients and does not include the five servers.

| Cluster Size | Jobs | Tasks per Job | Total Tasks |
|---|---|---|---|
| 500 | 318 | 315 | 100,170 |
| 5,000 | 1,000 | 1,000 | 1,000,000 |

Our partners at Google generously provided the credits to run this amount of compute on Google Compute Engine. The strong and consistent performance of Google Cloud made the entire testing process efficient. We used Terraform to spin up thousands of resources in minutes.

A job is a declaration of work submitted to the scheduler. A job is composed of many tasks. A task is an application to run; in this test, each task is a Docker container running a simple Go service. Nomad can also schedule other kinds of tasks, such as VMs and standalone binaries.

We could have submitted 1 job with 1,000,000 tasks for the C1M. Instead, we split the tasks evenly across many jobs, both to put more strain on the scheduler and to better represent real-world scenarios where many jobs run at once.

To make the benchmark even more strenuous and realistic, we designed the jobs to have constraints on which nodes can run the tasks. This forces the scheduler to evaluate and check constraints in addition to pure binpacking.
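To illustrate the difference between constraint checking and binpacking, here is a minimal Python sketch. It is not Nomad's scheduler: the `Node` and `Task` types, the attribute names, and the best-fit scoring rule are all assumptions made for this example. The sketch first filters out nodes that violate a task's constraints or lack capacity, then picks the feasible node that would be left with the least spare capacity.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    # Hypothetical node model: free-form attributes plus free resources.
    name: str
    attrs: dict
    cpu_free: int
    mem_free: int


@dataclass
class Task:
    # Hypothetical task model: resource needs plus required node attributes.
    name: str
    cpu: int
    mem: int
    constraints: dict = field(default_factory=dict)


def feasible(node, task):
    """A node is feasible if it meets every constraint and has enough room."""
    meets = all(node.attrs.get(k) == v for k, v in task.constraints.items())
    fits = node.cpu_free >= task.cpu and node.mem_free >= task.mem
    return meets and fits


def place(task, nodes):
    """Best-fit placement: choose the feasible node with the least leftover."""
    candidates = [n for n in nodes if feasible(n, task)]
    if not candidates:
        return None  # no node satisfies the constraints and capacity
    best = min(
        candidates,
        key=lambda n: (n.cpu_free - task.cpu) + (n.mem_free - task.mem),
    )
    best.cpu_free -= task.cpu
    best.mem_free -= task.mem
    return best.name
```

Without constraints, the scheduler only has to do the scoring step. With constraints, every candidate node must also pass the feasibility check, which is the extra work the benchmark jobs were designed to trigger.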

For full technical details of the C1M setup, including the Docker images used, the Nomad job specification, Terraform scripts, and more, please see the full technical README of the C1M. The linked repository can also be used to reproduce any of these results.

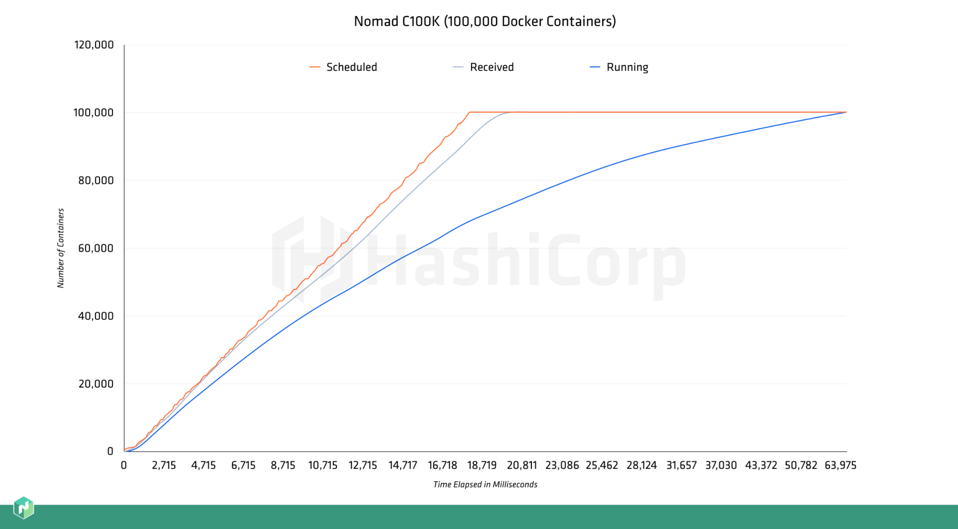

C100K Results

We begin by looking at the results for the C100K, since interesting observations can be drawn by comparing these results to the C1M results shown later.

The Y-axis is the number of containers. The X-axis is time in milliseconds. There are three lines on the graph:

• Scheduled (Orange) - The scheduler chose where the container should be running. In other words, a container has been binpacked and scheduled. At this stage, it is now ready for the client to retrieve it.

• Received (Grey) - The client has acknowledged that it received a container task and will begin starting it.

• Running (Blue) - The container completed launching and is now running. In other words, if you went to this machine and ran docker ps, you would see this container in the "running" state.

These results lead to interesting observations:

First, the performance of Nomad in scheduling is nearly linear. Nomad completes scheduling and placement of all the containers in 18.1 seconds, exceeding 5,500 placements per second.

By 19.5 seconds (less than 2 seconds after the placement is complete), all clients have acknowledged that they have received their placements. In less than 20 seconds, Nomad is now just waiting for all the containers to start.

The final line (blue) is logarithmic. At 58.2 seconds Nomad has started 99% of the containers. In our investigation we found that the clients were simply saturated as they started hundreds of containers within a few seconds.
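A figure such as "99% of the containers started by 58.2 seconds" can be derived from per-container timestamps. The sketch below shows one way to do that in Python. The function name `time_to_percent` and the sample timestamps are made up for illustration; they are not the benchmark's tooling or data.

```python
def time_to_percent(timestamps_ms, percent):
    """Return the earliest time by which `percent` of the events had occurred.

    timestamps_ms: one timestamp per container (for example, when it
    reached the "running" state), in any order.
    percent: an integer from 1 to 100.
    """
    if not timestamps_ms:
        raise ValueError("no timestamps given")
    if not 1 <= percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    ordered = sorted(timestamps_ms)
    # Integer ceiling of percent * n / 100, avoiding float rounding.
    needed = (percent * len(ordered) + 99) // 100
    return ordered[needed - 1]


# Made-up sample: 100 containers reaching "running" at 1 ms, 2 ms, ..., 100 ms.
sample = list(range(1, 101))
t99 = time_to_percent(sample, 99)
```

Reporting the 99% mark rather than the 100% mark keeps a handful of slow stragglers from dominating the result, which matters when the curve flattens out toward the end.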

C1M Results

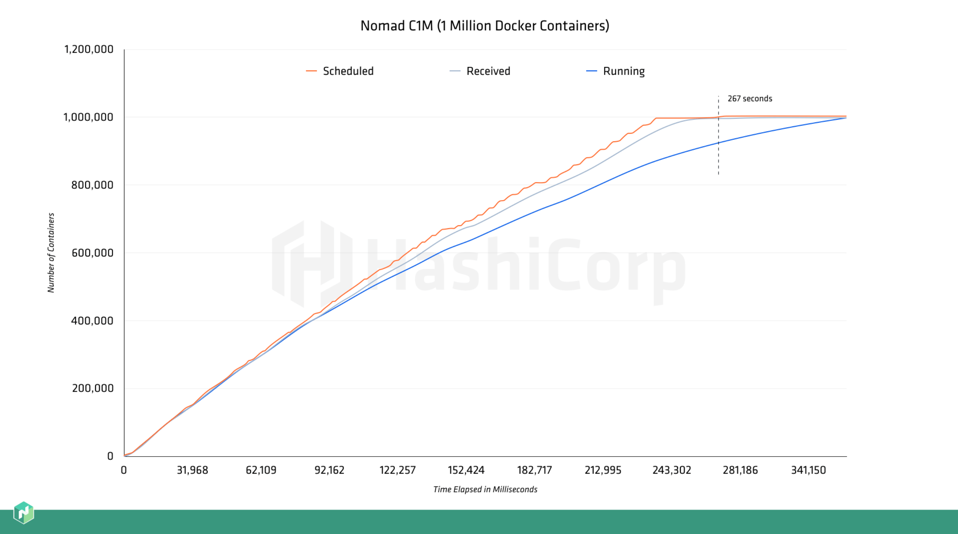

Next we look at the results for the C1M. The graph axes, line definitions, and colors are the same as the prior section.

Even at 1,000,000 containers, Nomad provides near-linear performance. Nomad completes all scheduling in 266.7 seconds (less than five minutes). This is a rate of nearly 3,750 placements per second.

The time it takes for clients to receive tasks is nearly realtime despite the vast scale. By the time a million containers have been scheduled, 99.4% of them have already been acknowledged by the clients.

99% of all containers are running in 370.5 seconds. As before, the time between client acknowledgement of placement and container start time is Nomad waiting for Docker to start the container. Still, scheduling and starting 1 million containers in just over six minutes is an impressive feat.

There is one curiosity with C1M: the graph shows that we scheduled and ran more than 1M containers. Nomad actually scheduled and ran nearly 1.003M containers. There are two reasons for this:

First, we found a bug in the Docker engine that appears to be a race condition triggered by starting so many containers in a very short period of time. We have filed an issue, and there is a potential fix in master. Because Nomad is designed to self-heal and recover from these types of failures, Nomad rescheduled and restarted the failed containers elsewhere.

Second, we ran into machine failures. At a cluster size of 5,000 nodes, we experienced hardware failure, network issues, and more. Nomad detects this as a non-functioning client and moves that client's workload to another machine. This type of self-healing behavior is critical for large scale clusters where routine failures become the norm.

Both of the above issues forced Nomad to revisit scheduling decisions and to start more containers. Ultimately, Nomad was able to complete the full benchmark.
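The headline numbers in both result sections follow from simple arithmetic, which this short Python check reproduces. The container counts and timings are taken from the text above; the 1,003,000 figure is an approximation of "nearly 1.003M" and is an assumption of this sketch.

```python
def rate_per_second(count, seconds):
    """Average placements per second over the whole run."""
    return count / seconds


# C100K: 100,170 containers scheduled in 18.1 seconds.
c100k_rate = rate_per_second(100_170, 18.1)

# C1M: 1,000,000 containers scheduled in 266.7 seconds.
c1m_rate = rate_per_second(1_000_000, 266.7)

# Time until 99% of the C1M containers were running, in minutes.
c1m_running_minutes = 370.5 / 60

# Extra containers started because of rescheduling (approximate).
overshoot_fraction = (1_003_000 - 1_000_000) / 1_000_000

print(f"C100K: {c100k_rate:.0f} placements/s")
print(f"C1M:   {c1m_rate:.0f} placements/s")
print(f"C1M 99% running after {c1m_running_minutes:.2f} minutes")
print(f"Overshoot: {overshoot_fraction:.1%}")
```

The overshoot of roughly 0.3% gives a sense of how much extra scheduling work the Docker race condition and the machine failures caused.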

Nomad Design Validation & Hardening

C1M uncovered some bugs and race conditions, which we have now fixed. The fixes are part of Nomad 0.3.1, which is the version that should be used to reproduce these results.

Thanks to the C1M, we are fully confident that Nomad is robust at dramatically large scales, and can comfortably run the largest real-world workloads.

In addition to hardening, the C1M provided validation for the design and architecture of Nomad. As clusters get larger and user jobs specify more complex placement constraints, the scheduler must spend more time finding resources to run applications. Nomad is the only free and open source optimistically concurrent scheduler, which allows it to make hundreds of scheduling decisions in parallel.
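Optimistic concurrency, in general terms, means that each scheduling worker plans against a snapshot of cluster state without holding a lock, and the plan is verified only when it is committed. A plan that conflicts with a change made in the meantime is rejected and the worker plans again. The Python sketch below illustrates that general pattern only. It is not Nomad's implementation, and `ClusterState`, `schedule_one`, and `run_workers` are hypothetical names invented for this example.

```python
import threading


class ClusterState:
    """Shared state: free container slots per node, guarded by a lock."""

    def __init__(self, free_slots):
        self._lock = threading.Lock()
        self._free = dict(free_slots)
        self.conflicts = 0  # number of rejected plans

    def snapshot(self):
        # Workers plan against a copy, which may be stale by commit time.
        with self._lock:
            return dict(self._free)

    def try_commit(self, node):
        # Verify the plan against current state; reject it on conflict.
        with self._lock:
            if self._free[node] > 0:
                self._free[node] -= 1
                return True
            self.conflicts += 1
            return False


def schedule_one(state):
    """Place one task, retrying whenever the commit is rejected."""
    while True:
        view = state.snapshot()
        candidates = [n for n, free in view.items() if free > 0]
        if not candidates:
            return None  # cluster is full
        node = min(candidates, key=lambda n: view[n])  # fill fullest node first
        if state.try_commit(node):
            return node


def run_workers(state, workers, tasks_per_worker):
    """Run several scheduling workers in parallel and collect placements."""
    results = []

    def work():
        for _ in range(tasks_per_worker):
            results.append(schedule_one(state))  # list.append is atomic in CPython

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

The planning step, which is the expensive part when constraints are complex, runs in parallel. Only the short verification step is serialized, so capacity is never oversubscribed even when workers plan from stale snapshots.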

C1M Design

We ran the C1M against Nomad, our own cluster scheduler, but the C1M is runnable against other schedulers with a little bit of work.

There are a number of other cluster schedulers available. We chose to benchmark only our own scheduler because we are not experts in operating the others, and we did not feel comfortable publishing benchmarks of systems we were not sure we had configured properly. The C1M source is public for other schedulers to use if interested.

Conclusion

At HashiCorp, we build solutions to problems that are technically sound and are a joy to use. We do not take shortcuts with the technologies we choose. The C1M is a public showcase of our work ethic and commitment to creating software designed to scale.

More forward-looking architectures, or just-in-time application summoning, will increase the demand on schedulers by orders of magnitude. We are working to enable this future, and are continuously collaborating with institutions and innovative technology companies on cutting-edge research to push the performance of Nomad further.