When we build demonstrations for conferences or events, we want to highlight unique use cases for HashiCorp’s open source tools. In this post, we outline how we built Dance Dance Automation to demonstrate HashiCorp Nomad, Terraform, and Consul, and we document some of the challenges we encountered along the way.

We debuted the first iteration of Dance Dance Automation at HashiConf 2019 and will continue to build on it. The game consists of a game server hosted on a Nomad cluster, connected with Consul Service Mesh, and provisioned by Terraform.

»Game Objectives

In Dance Dance Automation, blocks are laid out on screen to the beat of the music, and the player has to tap the corresponding pad as each block passes by. Each block corresponds to an allocation on HashiCorp Nomad. If the player times the tap correctly, the game stops the corresponding allocation. If the player stops allocations faster than Nomad can reschedule them, they earn bonus points.
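
Stopping an allocation comes down to a single call against Nomad’s HTTP API. The sketch below is a hedged illustration, not the game server’s actual code: it assumes the server talks to a Nomad agent at the default address `http://127.0.0.1:4646`, and the helper names are hypothetical. It builds requests for listing a job’s allocations (`GET /v1/job/:job_id/allocations`) and for stopping one allocation (`POST /v1/allocation/:alloc_id/stop`).

```python
import json
import urllib.parse
import urllib.request

# Assumption: the Nomad agent listens on its default HTTP address.
NOMAD_ADDR = "http://127.0.0.1:4646"


def list_allocations_request(job_id: str, addr: str = NOMAD_ADDR) -> urllib.request.Request:
    """Build a request for the allocations of one Nomad job (hypothetical helper)."""
    job = urllib.parse.quote(job_id, safe="")
    return urllib.request.Request(f"{addr}/v1/job/{job}/allocations", method="GET")


def stop_allocation_request(alloc_id: str, addr: str = NOMAD_ADDR) -> urllib.request.Request:
    """Build a request asking Nomad to stop a single allocation.

    Nomad then reschedules it, which is the race the player is trying to win.
    """
    alloc = urllib.parse.quote(alloc_id, safe="")
    return urllib.request.Request(f"{addr}/v1/allocation/{alloc}/stop", data=b"", method="POST")


def send(req: urllib.request.Request):
    """Send a request and decode the JSON body (requires a reachable agent)."""
    with urllib.request.urlopen(req, timeout=5) as resp:
        body = resp.read()
    return json.loads(body) if body else None
```

For example, `send(stop_allocation_request(alloc_id))` would stop the allocation behind a block the player just hit, assuming that block still carries a non-null allocation ID.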

The ID of each allocation flashes above its block; when there is no allocation, the label reads null. The player receives 10 points for successfully stopping an allocation and 50 points for hitting a block labeled null, which means they outpaced the scheduler. A multiplayer mode lets players compete against each other.
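
The scoring rule can be sketched in a few lines. This assumes a hit on a null block earns the 50-point bonus instead of, not in addition to, the 10 points for a stop; the post does not say how the two combine, and the function names are hypothetical.

```python
from typing import Iterable, Optional

# Point values from the post.
STOP_POINTS = 10      # hit a block that still had an allocation
OUTPACE_POINTS = 50   # hit a block labeled null: the scheduler had not caught up


def score_hit(alloc_id: Optional[str]) -> int:
    """Score one successful tap; None stands for a block labeled null.

    Assumption: the 50-point bonus replaces, rather than adds to, the 10 points.
    """
    return OUTPACE_POINTS if alloc_id is None else STOP_POINTS


def total_score(hits: Iterable[Optional[str]]) -> int:
    """Sum the score over every successfully timed tap in a game."""
    return sum(score_hit(alloc_id) for alloc_id in hits)
```

Under that assumption, `total_score(["a1b2", None, "c3d4"])` returns 70: two stops and one null block.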

Next, we take a closer look at the architecture that runs Dance Dance Automation.

»Game Server on Nomad

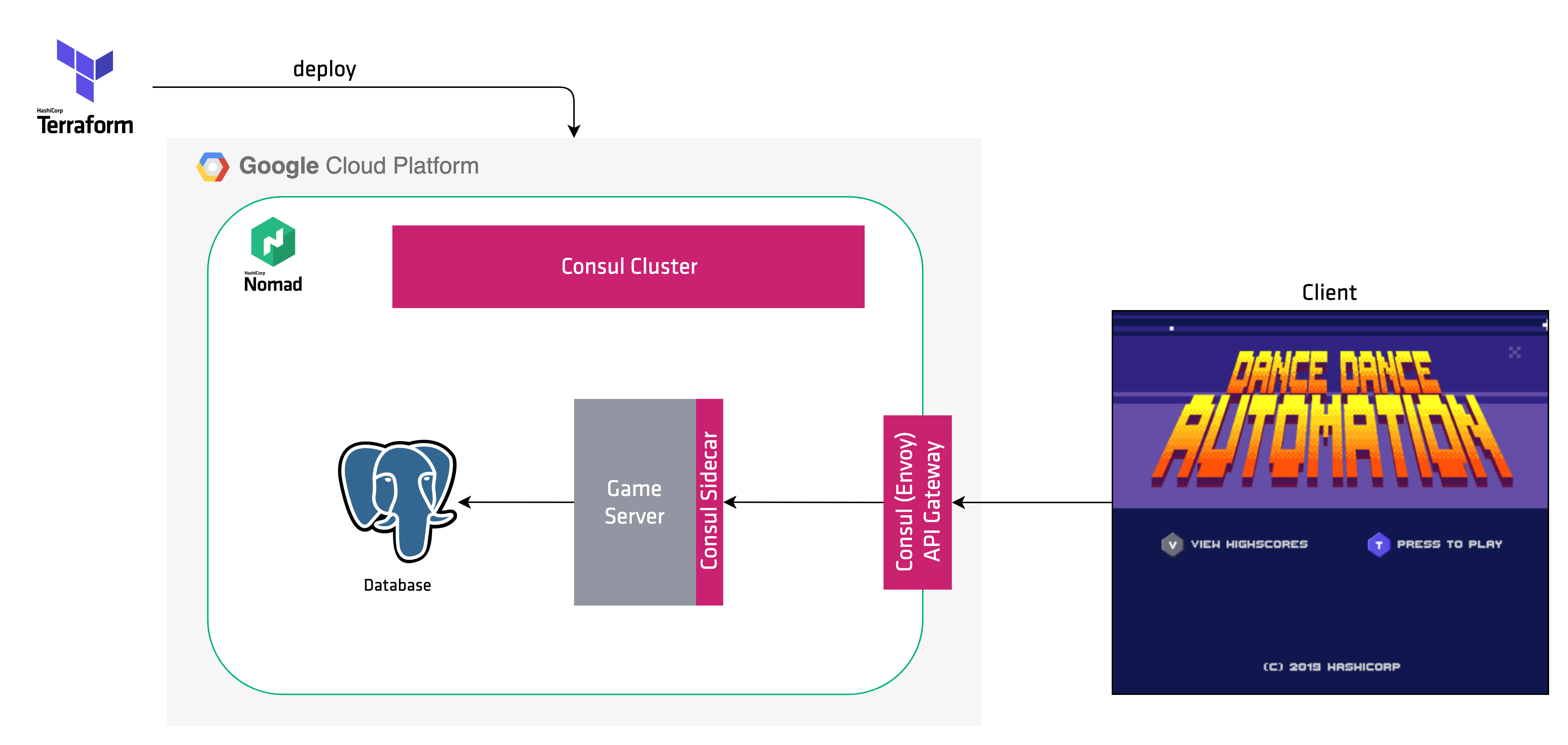

The server components of Dance Dance Automation run on a Nomad cluster residing in Google Cloud Platform. Invisible to the player, these processes track allocations, assign them to players, organize games, and manage scores.
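
The post does not describe how the server assigns allocations to players. As a purely hypothetical illustration, a round-robin policy could deal the IDs of running allocations across the players in a game:

```python
from itertools import cycle
from typing import Dict, List


def assign_allocations(alloc_ids: List[str], players: List[str]) -> Dict[str, List[str]]:
    """Deal running allocation IDs to players in round-robin order.

    Hypothetical policy for illustration only; the post does not say
    how the game server actually assigns allocations.
    """
    assignments: Dict[str, List[str]] = {player: [] for player in players}
    if not players:
        return assignments
    # zip stops as soon as the allocation list is exhausted.
    for player, alloc_id in zip(cycle(players), alloc_ids):
        assignments[player].append(alloc_id)
    return assignments
```

Round-robin keeps the number of allocations per player within one of each other, which is one simple way to keep a multiplayer game fair.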

All games, scores, and allocations are persisted in a PostgreSQL database. Players can stop allocations of the game server itself as well as of other applications in the Nomad cluster (such as Cloud Pong, which we feature in another post).

»Networking with Consul

Within the Nomad cluster, the game server uses the Mesh Gateway and Service Mesh features of Consul 1.6. We use Consul to connect the game server to the database and to observe the flow of traffic from game clients to the server. When the game server connects to the database and other instances, Consul Service Mesh encrypts all of its requests with mTLS.

When a game client (the part the player sees) connects to a game server, an API gateway built from Envoy and Consul Central Configuration proxies the request to the backend game server. As a future step, we intend to use Mesh Gateways to connect to game servers across multiple clusters in different clouds.

»Deployment with Terraform

We provisioned all of the game components, from the cluster to the game server, with Terraform Cloud. To change the configuration, we push to a repository containing the Terraform configuration; Terraform Cloud receives the webhook event from GitHub and starts a plan. This workflow was particularly useful when one of us changed the Nomad job configuration while another fixed the DNS subdomains: Terraform Cloud not only locked the state but also let us review the changes and the dependencies they affected.

»Game Client

When players enter the game, they interact with the game client, which we built with Godot, an open source game engine. When the application starts, it calls the game server on Nomad to register the player and, in multiplayer mode, to enter the lobby.

Specific actions in the game trigger API calls to the game server. For example, game completion triggers an API call to leave the game and post the high score.
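
The real client is written in Godot, so the following Python sketch only illustrates the sequence of calls. Every endpoint path, payload field, and the server address are hypothetical, since the post does not document the game server’s API.

```python
import json
import urllib.request
from typing import List

# Hypothetical game server address and endpoints; the post does not list them.
GAME_SERVER = "http://localhost:8080"


def _json_post(path: str, payload: dict, base: str = GAME_SERVER) -> urllib.request.Request:
    """Build a JSON POST request to the game server."""
    return urllib.request.Request(
        base + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def register_player_request(name: str) -> urllib.request.Request:
    """Sent when the client starts up."""
    return _json_post("/players", {"name": name})


def join_lobby_request(player_id: str) -> urllib.request.Request:
    """Sent only in multiplayer mode."""
    return _json_post("/lobby", {"player_id": player_id})


def game_over_requests(game_id: str, player_id: str, score: int) -> List[urllib.request.Request]:
    """On game completion: leave the game, then post the score."""
    return [
        _json_post(f"/games/{game_id}/leave", {"player_id": player_id}),
        _json_post("/scores", {"game_id": game_id, "player_id": player_id, "score": score}),
    ]
```

Returning request objects instead of sending them keeps the sketch testable without a running server; a client would pass each one to `urllib.request.urlopen`.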

We produced both the graphics and the audio for the game. To place the blocks that players must match, we used the MBoy Editor, an application created for a similar Godot game, to flag each note across the three tracks.

Players can control the game with a keyboard, but to make it more fun, we wanted to build a set of pads that players could “dance” on.


»Hardware

Initially, we wanted to run the game on a Raspberry Pi and connect our fabricated dance pads to its GPIO pins. However, the game did not perform well enough on the Raspberry Pi, so we settled on an alternative solution.

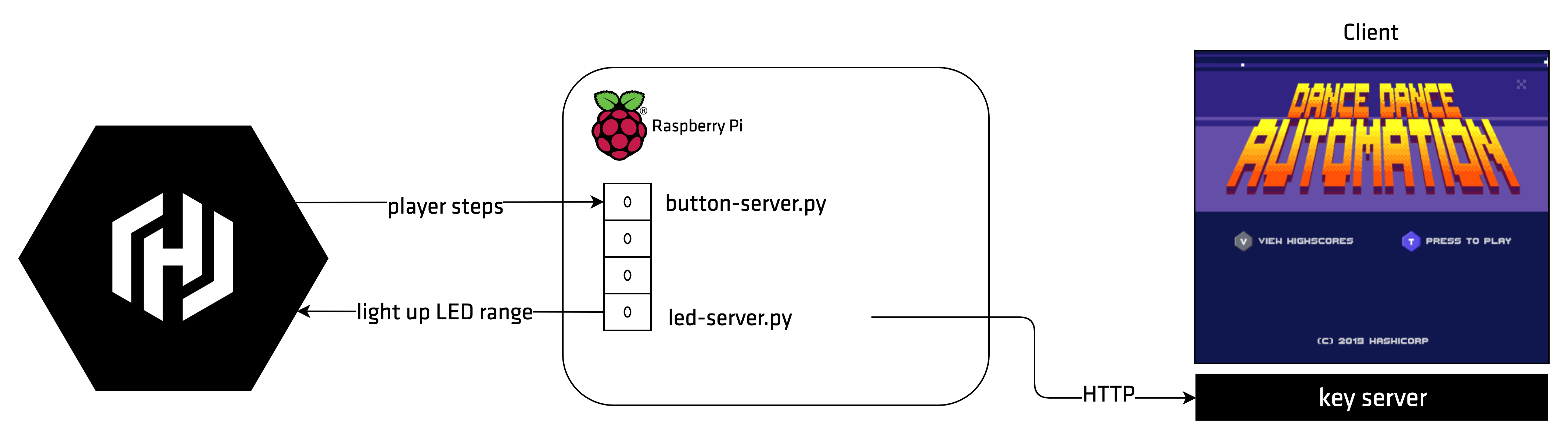

Because the game already supported keyboard controls as a backup, we mapped the signals from the dance pads to specific key presses. To run the game on more powerful hardware than the Raspberry Pi, we had to forward those key presses from the Pi to a laptop. We built a server on the Raspberry Pi (the “button server”) that translates GPIO inputs into HTTP requests and forwards them to a second server on the client machine (the “key server”), which maps each API call to a key press. The Raspberry Pi also hosts an “LED server”, which accepts HTTP calls from the client and uses GPIO to light up the corresponding dance pad.
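
The key server half of this setup can be sketched with Python’s standard library. Everything here is an assumption for illustration: the `/press?pad=<name>` route, the port, the pad names, and the key bindings. Actual keystroke injection is stubbed out in `press_key` because it depends on an OS-specific library.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hypothetical pad-to-key mapping; the real key bindings are not in the post.
PAD_TO_KEY = {"nomad": "a", "vault": "s", "packer": "d"}


def key_for_request(path: str) -> Optional[str]:
    """Map a request path such as '/press?pad=nomad' to a key, or None."""
    parsed = urlparse(path)
    if parsed.path != "/press":
        return None
    pad = parse_qs(parsed.query).get("pad", [""])[0].lower()
    return PAD_TO_KEY.get(pad)


def press_key(key: str) -> None:
    """Placeholder: a real key server would inject an OS-level key event here."""
    print(f"pressing {key!r}")


class KeyServerHandler(BaseHTTPRequestHandler):
    """Receives calls from the button server on the Raspberry Pi."""

    def do_POST(self) -> None:
        key = key_for_request(self.path)
        if key is None:
            self.send_response(404)
        else:
            press_key(key)
            self.send_response(204)
        self.end_headers()


def serve(port: int = 8000) -> None:
    """Run the key server on the laptop (blocks until interrupted)."""
    HTTPServer(("0.0.0.0", port), KeyServerHandler).serve_forever()
```

In this sketch, the button server would send `POST /press?pad=nomad` whenever the Nomad pad’s GPIO pin fires. The button server and LED server themselves depend on GPIO libraries and are left out.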

The hardware presented significant challenges, because faulty electrical contacts and physical damage affected the player’s experience. We spent quite some time soldering and repairing contacts, and even took a trip to a hardware store for additional wiring and supplies. Because some of the dance pads were defective, we had to swap out our Packer pad for other working pads (which is why you might see two Nomad or Vault pads in photographs!).


»Conclusion

When we set up the dance pads on the floor at HashiConf 2019, a few participants played successfully on them. Some players even outpaced the Nomad scheduler for a few blocks!

We will continue to improve and extend Dance Dance Automation by fixing the hardware issues of our first iteration and adding more HashiCorp tools to the architecture. We hope to integrate Vault to rotate our database credentials and to use more of Consul’s service mesh to control traffic between game servers and clients. We also plan to optimize the game for the Raspberry Pi to eliminate the need for additional computers. Once the game performs better, we might build an immutable Raspbian image with Packer so we can set up multiple clients quickly.


If you have any questions or are curious to learn more, feel free to reach out in the HashiCorp User Groups, Events, & Meetups topic on the community forum. Stay tuned for Dance Dance Automation coming to a HashiCorp community event near you!