Oscar Medina, senior cloud architect at Amazon Web Services, joined us on a live stream recently to demo a collaborative workflow to enable self-service Amazon EKS deployments for developers, using CDK for Terraform. Check out the recording of the live demo, and read on for a tutorial of the workflow that platform and developer teams can use to collaborate on Kubernetes deployments with HashiCorp Terraform, Sentinel policies, CDK for Terraform, and EKS.

»Tools Used in This Demo

This demo takes advantage of several tools and platforms to craft a collaborative workflow for platform and developer teams to define and deploy Kubernetes infrastructure configurations:

- Amazon EKS - Amazon Elastic Kubernetes Service (EKS) is a managed container service to run and scale Kubernetes applications in the cloud on AWS or on-premises.

- CDK for Terraform - Cloud Development Kit for Terraform (CDKTF) allows you to use familiar programming languages to define cloud infrastructure and provision it through Terraform. With CDKTF, you can create Terraform configurations in your choice of C#, Python, TypeScript, or Java (with experimental support for Go), and still benefit from the full ecosystem of Terraform providers and modules.

- Terraform Cloud and Policy as Code - Terraform Cloud is our managed service offering for infrastructure automation that enables practitioners, teams, and organizations to use Terraform in production. Sentinel is HashiCorp's policy as code framework, which is included in the Terraform Cloud Team & Governance and Business tiers. With Sentinel, you can enforce security, compliance, cost, and operational best practices before your users create infrastructure.

»A Collaborative Workflow for Platform and Application Teams

During the live demo, Oscar shows how a platform team working with Terraform in HashiCorp Configuration Language (HCL) can enable a developer team working with CDK for Terraform in TypeScript to deploy EKS clusters to AWS, and use Sentinel policies to ensure that all deployed clusters meet platform requirements.

As mentioned, the platform team uses Terraform with HCL, and they leverage Terraform Cloud to store and manage state, run jobs, and manage Sentinel policies and apply them to appropriate workspaces. They also leverage the EKS Blueprints for Terraform to provision Kubernetes clusters.

The developer team has adopted CDKTF because they prefer using TypeScript to define infrastructure configurations. In this application, they use TypeScript to define a configuration that deploys three Kubernetes objects: a Deployment, a Service, and an Ingress.

In this scenario, the platform team wants to manage costs by preventing users from deploying services of the LoadBalancer type. So they have established Sentinel policies that only allow developer teams to deploy services of type NodePort or ClusterIP in EKS. In the demo walkthrough, you can see that when a developer tries to use an unapproved service type, it triggers a Sentinel policy error, alerting the developer and helping them resolve the issue before the change gets deployed. Using policy as code, platform teams can feel secure that their business and security rules around provisioning infrastructure will still be respected, even when developer teams are given more freedom and autonomy to deploy resources themselves.
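The real rule is written in Sentinel and lives in the policy repository, but its logic is easy to illustrate in TypeScript. The following is only a sketch of the allow-list check described above: the `PlannedService` shape, the function name, and the sample data are hypothetical and are not taken from the demo repositories or from the Sentinel data model.

```typescript
// Hypothetical, simplified shape of a Kubernetes Service found in a plan.
interface PlannedService {
  serviceName: string;
  serviceType: string;
}

// Service types the platform team allows in this scenario.
const ALLOWED_SERVICE_TYPES: string[] = ["NodePort", "ClusterIP"];

// Returns every planned service whose type is not on the allow-list.
function findServiceTypeViolations(services: PlannedService[]): PlannedService[] {
  return services.filter(
    (svc) => ALLOWED_SERVICE_TYPES.indexOf(svc.serviceType) === -1
  );
}

// Illustrative input: one disallowed and one allowed service.
const plannedServices: PlannedService[] = [
  { serviceName: "skiapp-service", serviceType: "LoadBalancer" },
  { serviceName: "internal-api", serviceType: "ClusterIP" },
];

const violations = findServiceTypeViolations(plannedServices);
for (const v of violations) {
  console.log(`Service "${v.serviceName}" uses disallowed type ${v.serviceType}`);
}
```

An empty result means the plan would pass this particular rule. Any entry in the result corresponds to the kind of failure the developer sees in the policy check.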

The demo shows how leveraging all these tools together enables a workflow that platform and developer teams can use to collaborate on Kubernetes deployments and enable self-service deployments for developers, while ensuring that essential guardrails remain in place.

»Experience the Demo

You can reference the code used for this demo in the repos below, and follow along as you watch the recording or follow the steps outlined in the next sections.

- sharepointoscar/team-platform-aws-eks: Platform team repo that deploys EKS clusters to AWS, using the Terraform CLI and HCL.

- sharepointoscar/developers-team-aws-eks: Developer team repo with a CDK for Terraform TypeScript application that deploys a workload to the EKS cluster previously provisioned by the platform team.

- sharepointoscar/terraform-guides: Sentinel policies that maintain security guardrails, ensuring that workloads deployed by the developer team adhere to all requirements.

»Platform Team Deploys EKS Clusters to AWS

NOTE: Clone the GitHub repositories listed above before you begin. The instructions below assume you are working from your own clones.

»Configure Terraform Cloud Workspaces

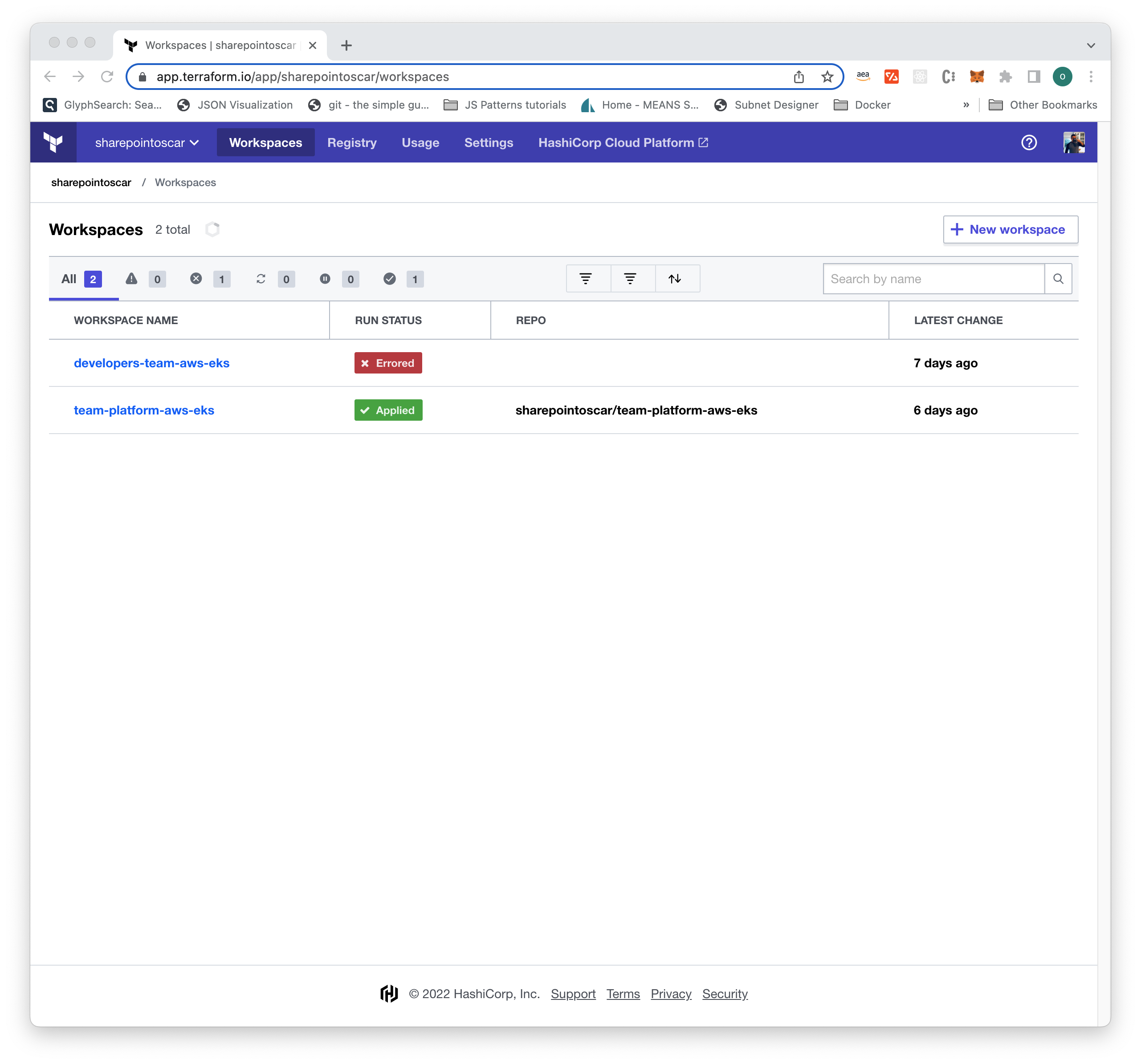

For this scenario, we have two Terraform Cloud workspaces, one for the platform team and another one for the developer team. Each workspace needs to be configured with Sentinel Policies so that when any Terraform Plan is executed, the Policy Check uses our custom Sentinel Policies. First, let’s create the two workspaces.

»Create Terraform Cloud Workspaces

Each of the two workspaces is configured differently. Let’s go over the creation for the platform team workspace.

»Create Platform Team Workspace

- Click on New workspace.

- Under Choose Type, select Version Control workflow.

- Under Connect to VCS, click on GitHub.

- Under Choose a repository, select your clone of the team-platform-aws-eks repository.

- Under Configure settings, type the name as platform-team-aws-eks and click on Create workspace.

- Under Settings, ensure Execution mode is set to Remote.

NOTE: Please ensure that under Workspace Settings, the Terraform Working Directory is set to examples/eks-cluster-with-argocd.

»Create Developer Team Workspace

This workspace is configured with a different workflow. It does not use version control. It uses the Remote execution mode.

- Click on New workspace.

- Under Choose Type, select CLI-driven workflow.

- Under Configure settings, type the name developers-team-aws-eks.

- Click on Create workspace.

- You should see instructions on configuring your backend. For example, after creating this workspace you’ll see something similar to this:

    terraform {
      cloud {
        organization = "sharepointoscar"
        workspaces {
          name = "developers-team-aws-eks"
        }
      }
    }

These are the organization and workspace values you will use for the RemoteBackend in the developers-team-aws-eks repository you cloned (more on this later).
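If you manage several workspaces, it can help to generate this block rather than copy it by hand. The sketch below is a hypothetical helper, not part of Terraform or CDKTF, that renders the same `cloud` block from an organization and workspace name. `my-org` is a placeholder for your own organization.

```typescript
// Hypothetical helper: renders a Terraform `cloud` block as HCL text.
// JSON.stringify is used only to quote the values; this is adequate for
// simple organization and workspace names without special characters.
function renderCloudBlock(organization: string, workspace: string): string {
  return [
    "terraform {",
    "  cloud {",
    `    organization = ${JSON.stringify(organization)}`,
    "    workspaces {",
    `      name = ${JSON.stringify(workspace)}`,
    "    }",
    "  }",
    "}",
  ].join("\n");
}

// "my-org" is a placeholder; substitute your Terraform Cloud organization.
const cloudBlock = renderCloudBlock("my-org", "developers-team-aws-eks");
console.log(cloudBlock);
```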

Once you’ve created both workspaces, they should both be displayed in Terraform Cloud as shown here:

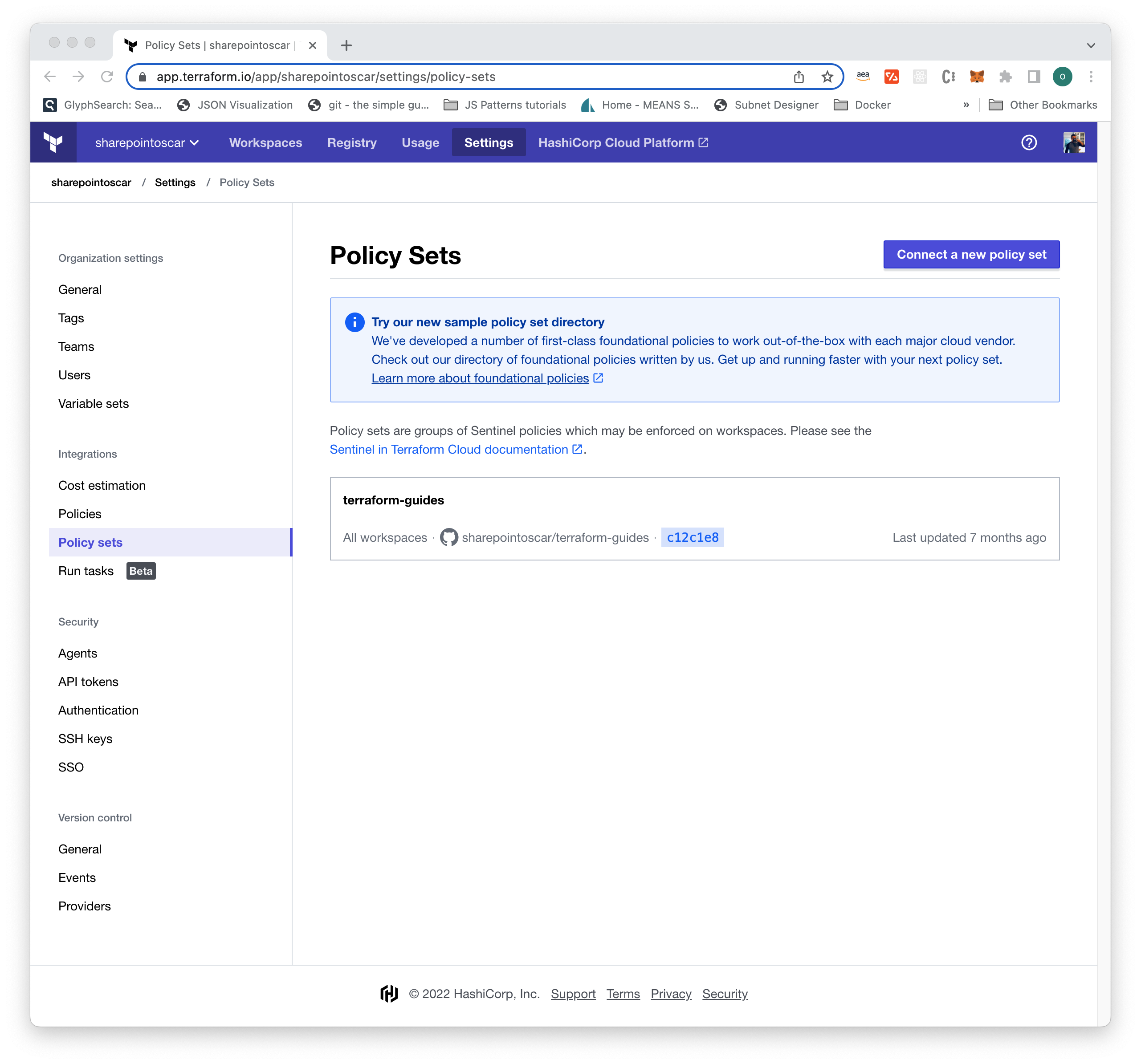

»Configure Policy Sets

Now, let’s configure the developer team workspace to use an existing GitHub repository, which contains your EKS policies:

- Once signed into Terraform Cloud, go to Settings > Policy sets

- Click on the Connect a new policy set button.

- Select GitHub as the version control provider.

- In the Choose a repository screen, select your clone of the terraform-guides repository (if it does not appear, try typing it in the text box at the bottom).

- Click on the right arrow to select the repository.

- Under Scope of Policies, select Policies enforced on all workspaces.

Your settings should look similar to those shown here:

You have now configured our Terraform Cloud instance to use a custom GitHub repository where our policies reside. Next, you need to configure AWS credentials for each of your workspaces so that you can properly connect to your AWS account.

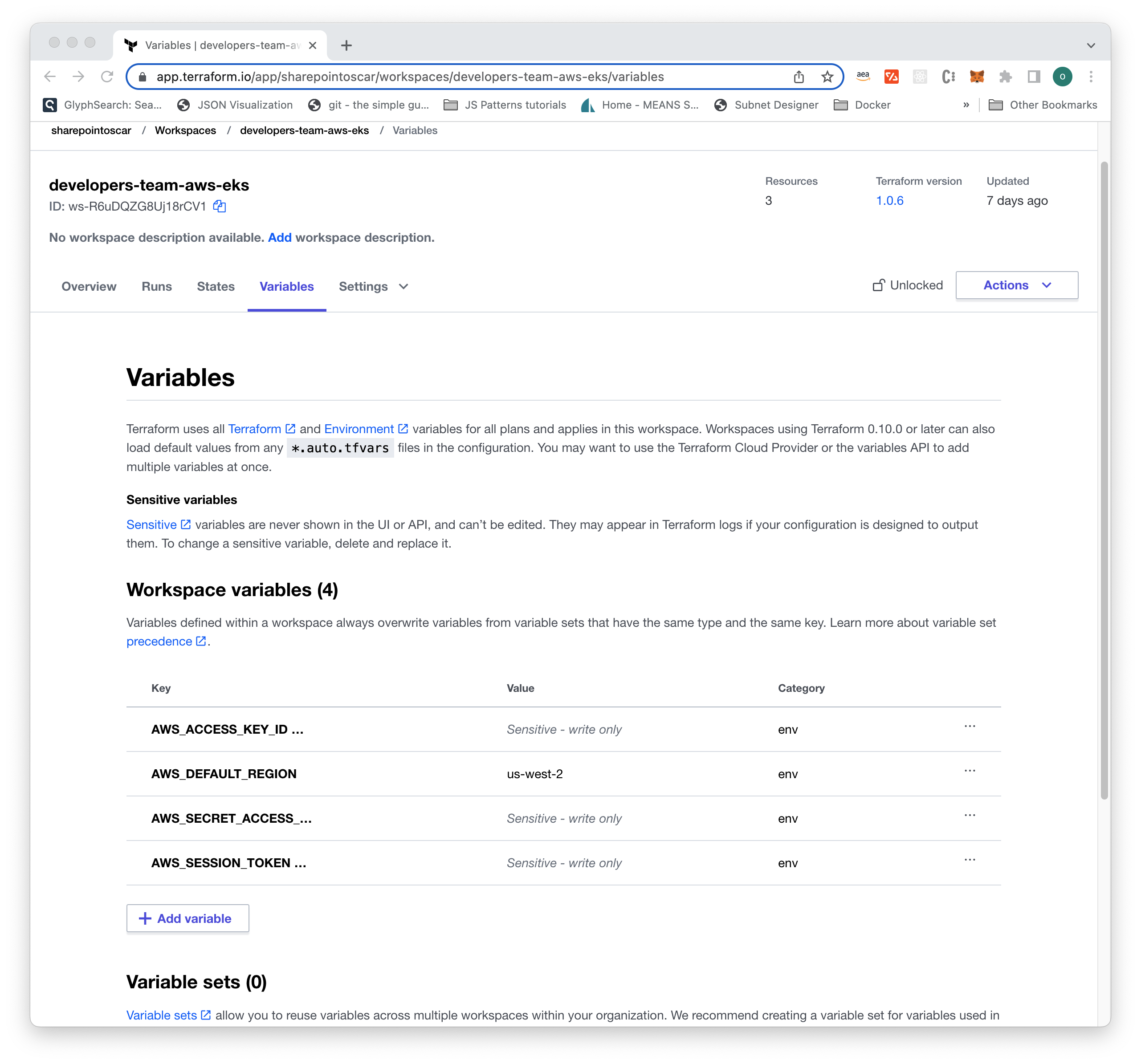

»Configure AWS Credentials

Depending on your environment, you may or may not use short-lived credentials. This example uses short-lived AWS credentials, and each workspace is configured accordingly before running any plans.

The process to configure credentials is the same for both workspaces:

- In the Workspaces page in Terraform Cloud, click on developers-team-aws-eks workspace.

- Click on Variables.

- Click + Add variable, and under Select variable category, select Environment variable.

- Next, in the Key textbox, type AWS_ACCESS_KEY_ID and in the Value textbox type or paste in the value.

- Click on the Save variable button.

- Click + Add variable, and under Select variable category, select Environment variable

- Next, in the Key textbox, type AWS_SECRET_ACCESS_KEY, and in the Value textbox type or paste in the value.

- Click + Add variable, and under Select variable category, select Environment variable

- Next, in the Key textbox, type AWS_SESSION_TOKEN, and in the Value textbox type or paste in the value.

NOTE: If you are not using short-lived credentials, skip the AWS_SESSION_TOKEN variable (the last two steps above).
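Before running a plan, it is worth double-checking that nothing was missed. The sketch below shows one way to express that check in TypeScript. The function name and the `shortLived` flag are hypothetical, and the values are placeholders rather than real credentials.

```typescript
// Variables every workspace needs, per the steps above.
const REQUIRED_AWS_KEYS: string[] = ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"];
// Only needed when using short-lived credentials.
const SESSION_TOKEN_KEY = "AWS_SESSION_TOKEN";

// Returns the names of expected variables that are unset or empty.
function missingAwsVariables(
  vars: Record<string, string | undefined>,
  shortLived: boolean
): string[] {
  const expected = shortLived
    ? REQUIRED_AWS_KEYS.concat([SESSION_TOKEN_KEY])
    : REQUIRED_AWS_KEYS;
  return expected.filter((key) => !vars[key]);
}

// Placeholder values only; never hard-code real credentials.
const workspaceVars: Record<string, string | undefined> = {
  AWS_ACCESS_KEY_ID: "example-access-key-id",
  AWS_SECRET_ACCESS_KEY: "example-secret-access-key",
};

console.log(missingAwsVariables(workspaceVars, true));
console.log(missingAwsVariables(workspaceVars, false));
```

With short-lived credentials the check reports the session token as missing. Without them, the two long-lived variables are sufficient.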

Once you’ve configured AWS credentials for your workspaces, it should look something like this:

»Deploying the EKS Cluster

Using the Terraform CLI and HCL, the platform team deploys EKS clusters to AWS so that they can be used by development teams. The repository team-platform-aws-eks contains many examples of deploying clusters. We will use the following example to show how to deploy your cluster.

- Change the directory to examples/eks-cluster-with-argocd.

- On the

main.tf, change the backend to reflect your Terraform Cloud workspace (lines 21 to 25). - On the

main.tf, change the region you would like to use (line 45). - If you wish to change the name of the cluster, modify

main.tflines 57 to 69. - Select the VPC CIDR that reflects your AWS environment (line 73).

- That’s it, we can now execute a

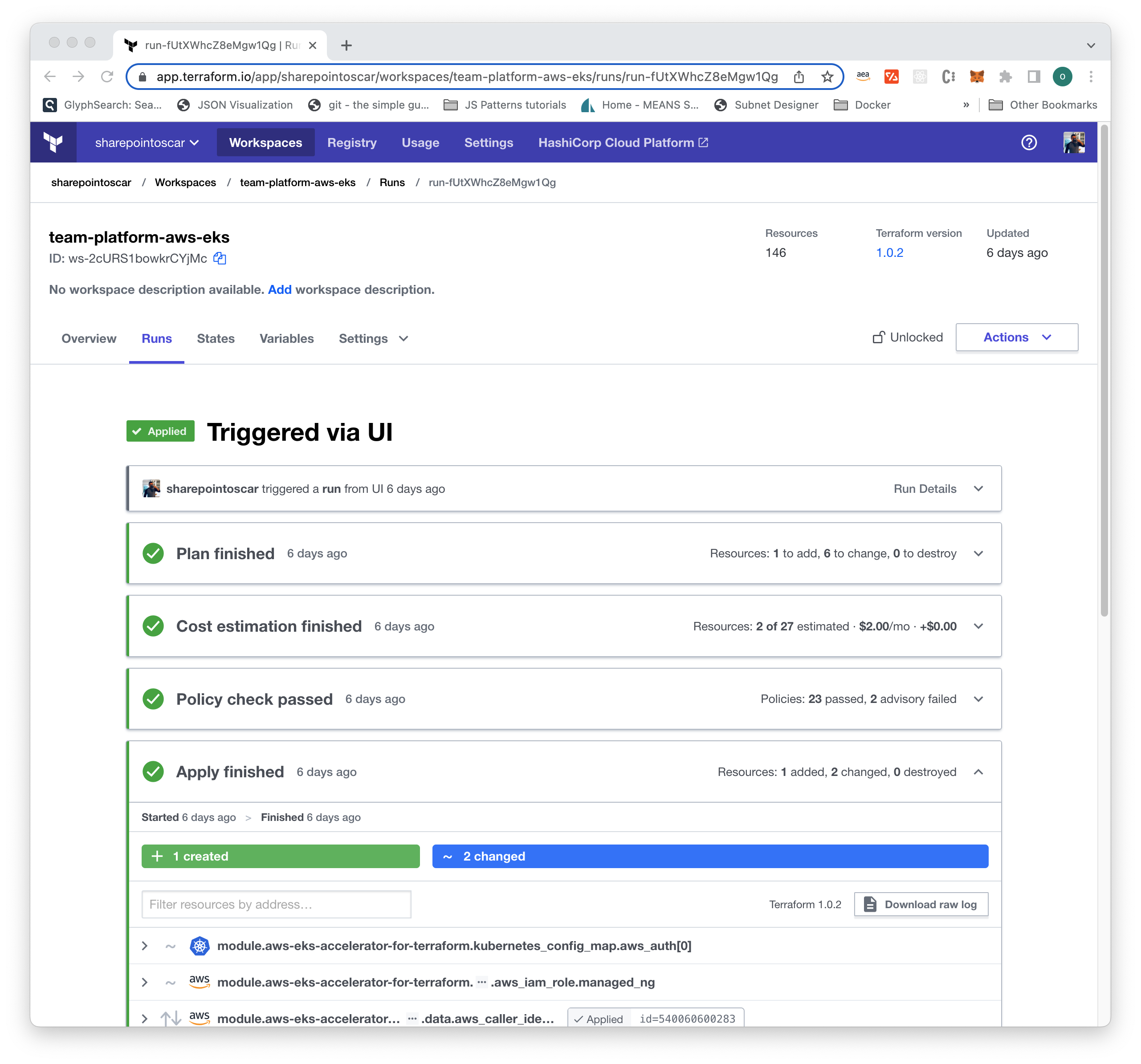

terraform plan, this triggers a speculative plan that allows you to ensure the plan will succeed. - To provision the cluster, go to the Terraform Cloud UI, under the team-platform-aws-eks workspace, click Actions, Start new run.

- Under Choose run type, select Plan and apply.

Whenever you execute a terraform plan or terraform apply, the logs are streamed to your terminal, but you can also click on the link to the Terraform Cloud active run for a more appealing visual as shown here:

Once your cluster is created, and Terraform Cloud workspaces are configured with Sentinel Policy Sets, we are ready to deploy a sample workload.

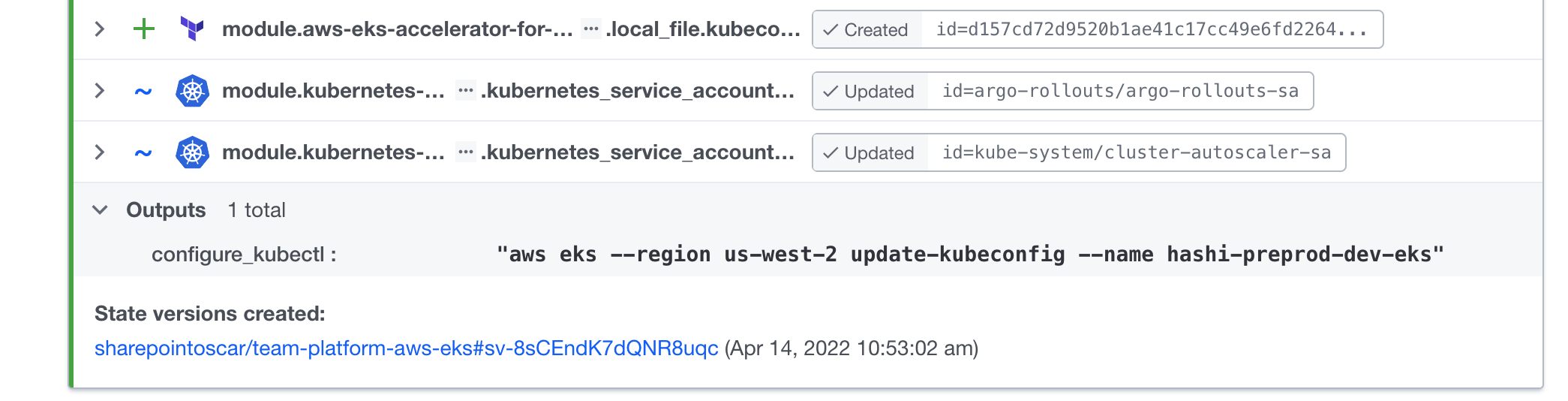

As the platform team, you want to provide your developer teams with the cluster name, so that they can deploy their workloads to it. The output of the cluster provisioning has the cluster name as shown below. For platform team members, Terraform Cloud allows easy copy-and-paste to your kubeconfig.

»Developer Team Deploys Workload via CDKTF and Terraform Cloud

You are now ready to deploy a workload to the shared EKS cluster. For these steps you will use the developers-team-aws-eks GitHub repository.

The workload you are deploying is a basic static website. It uses the ALB Ingress Controller, SSL, and a FQDN. It includes Deployment, Service, and Ingress Kubernetes objects, which are defined using TypeScript.

Please be sure to have AWS credentials set up in the developer team’s Terraform Cloud workspace before the next step.

»Change Workload Based on Your Terraform Cloud Environment

The workload, as cloned, uses a different Terraform Cloud account and organization. You need to change this to reflect your environment including the previously created EKS cluster name.

- You need to change the RemoteBackend to the one you obtained when creating the developer workspace. Once you have that, go to the main.ts file, lines 19 to 26, and be sure to enter your organization and workspace name.

- Specify the previously created EKS cluster name in lines 29 and 32.

- Modify the Ingress host to a domain you host and have control over (line 126).

NOTE: If you choose not to use SSL certificates or domain, you need to do the following:

- Remove all the annotations in the Ingress object, except kubernetes.io/ingress.class: "alb"

- Remove the host entry from the ingress spec.
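The two changes in the note above amount to a small transformation of the Ingress definition. The sketch below illustrates it on a plain TypeScript object. The `IngressSketch` shape is a hypothetical simplification, not the CDKTF Kubernetes provider type, and the sample annotations and host are illustrative rather than copied from the demo repository.

```typescript
// Hypothetical, simplified view of an Ingress definition.
interface IngressRuleSketch {
  host?: string;
  path: string;
}

interface IngressSketch {
  annotations: Record<string, string>;
  rules: IngressRuleSketch[];
}

const INGRESS_CLASS_KEY = "kubernetes.io/ingress.class";

// Keeps only the ingress class annotation and drops the host from every rule.
function withoutSslAndHost(ingress: IngressSketch): IngressSketch {
  const annotations: Record<string, string> = {};
  const ingressClass = ingress.annotations[INGRESS_CLASS_KEY];
  if (ingressClass !== undefined) {
    annotations[INGRESS_CLASS_KEY] = ingressClass;
  }
  const rules = ingress.rules.map((rule): IngressRuleSketch => ({ path: rule.path }));
  return { annotations, rules };
}

// Illustrative input; replace with the values from your own configuration.
const originalIngress: IngressSketch = {
  annotations: {
    "kubernetes.io/ingress.class": "alb",
    "alb.ingress.kubernetes.io/scheme": "internet-facing",
    "alb.ingress.kubernetes.io/certificate-arn": "<your-certificate-arn>",
  },
  rules: [{ host: "example.com", path: "/" }],
};

const simplifiedIngress = withoutSslAndHost(originalIngress);
console.log(JSON.stringify(simplifiedIngress, null, 2));
```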

»Deploy Workload

Now that you have made all the required changes, execute cdktf synthesize in the root of the repository to ensure your work compiles. Your output should look similar to this:

    ➜ cdktf synthesize
    Newer version of Terraform CDK is available [0.10.1] - Upgrade recommended
    Generated Terraform code for the stacks: developers-team-aws-eks

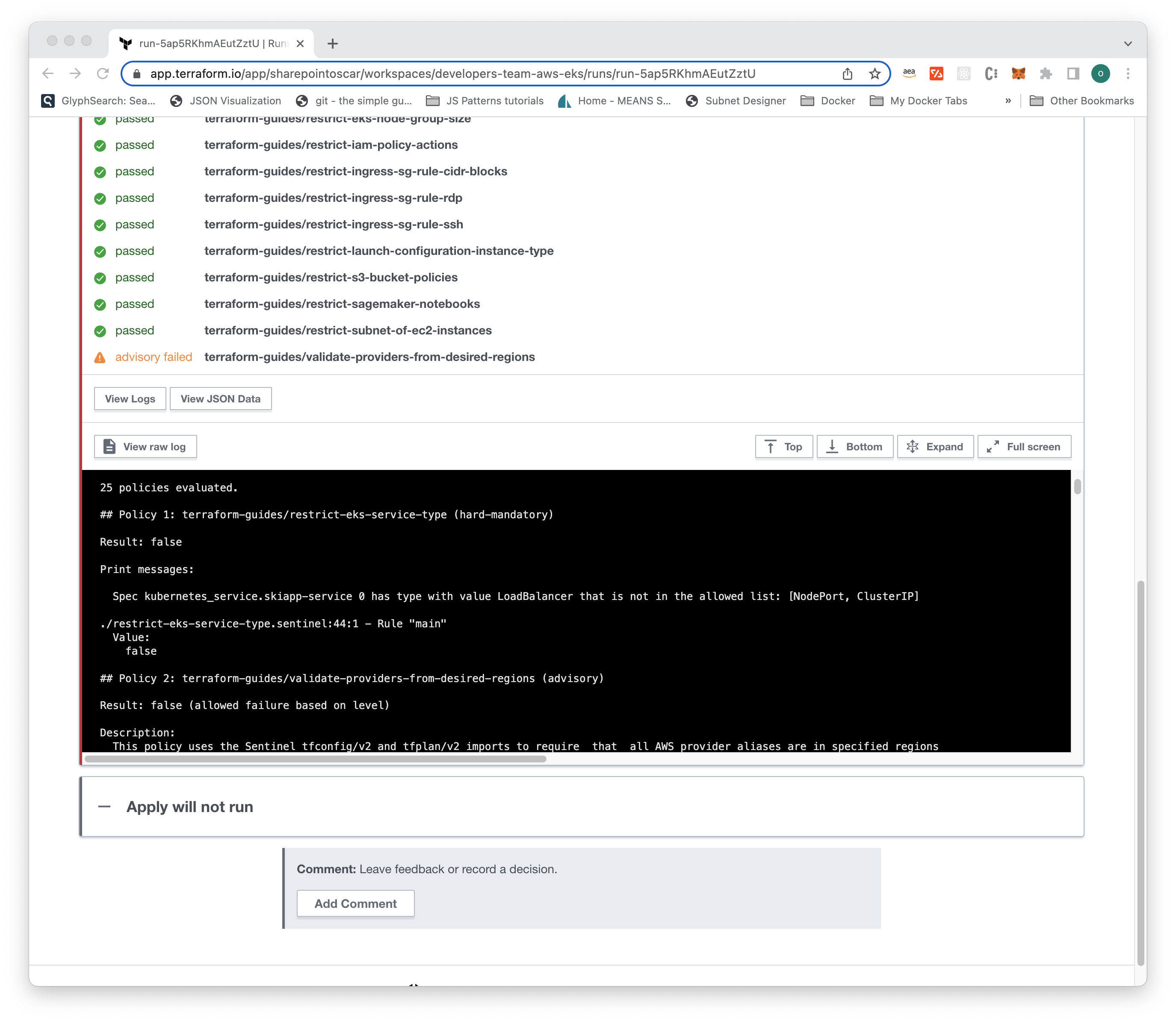

»Violating the Sentinel EKS Policies

On this first attempt, you will deploy the workload with the LoadBalancer service type, which is not allowed. The plan should fail and tell you what the problem is.

In the main.ts file, line 104, change the service type from NodePort to LoadBalancer.

Then deploy the workload by executing cdktf deploy. You should see output as follows in the Terraform Cloud console.

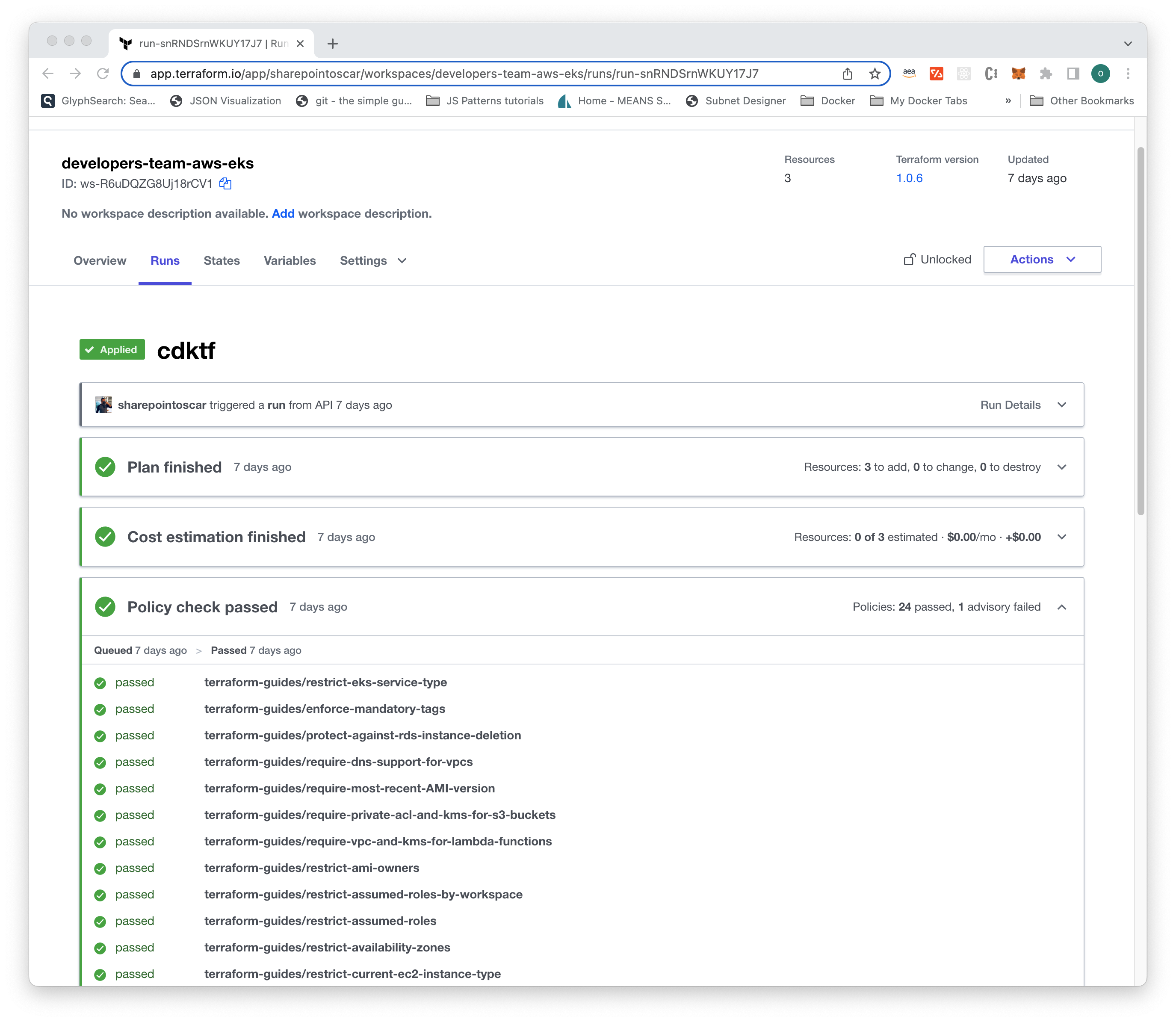

»A Successful Run

Next, in the main.ts file, line 104, change the service type from LoadBalancer back to NodePort and run cdktf deploy.

The plan lists the Kubernetes resources to be created. Type yes to proceed. After a couple of minutes you should see output like this:

    ➜ cdktf deploy
    Running plan in the remote backend. To view this run in a browser, visit:
    https://app.terraform.io/app/sharepointoscar/workspaces/developers-team-aws-eks/runs/run-snRNDSrnWKUY17J7

    Deploying Stack: developers-team-aws-eks
    Resources
     ✔ KUBERNETES_DEPLOYMEN skiapp-deployment kubernetes_deployment.skiapp-deployment
     ✔ KUBERNETES_INGRESS skiapp-ingress kubernetes_ingress.skiapp-ingress
     ✔ KUBERNETES_SERVICE skiapp-service kubernetes_service.skiapp-service

    Summary: 3 created, 0 updated, 0 destroyed.

You can see below that the Terraform Cloud console shows the policy restrict-eks-service-type passed!

»Learn More

Check out the links below to learn more about the tools used in this demo.

»Try CDK for Terraform

If you’re new to the project, the tutorials for CDK for Terraform on HashiCorp Learn are the best way to get started, or you can check out this overview of CDKTF in our documentation.

»Explore Policy as Code with Terraform Cloud

Learn how to set up a Terraform Cloud account and explore how to write, test, and implement Sentinel policies with these tutorials on HashiCorp Learn.

»Amazon EKS

EKS Blueprints are a great way to get started with EKS. This framework aims to accelerate the delivery of a batteries-included, multi-tenant container platform on top of Amazon EKS. You can use this framework to implement the foundational structure of an EKS Blueprint according to AWS best practices and recommendations.