Consul’s Ingress gateways and Terminating gateways (sometimes called “Egress gateways”) are incredibly useful for integrating workloads that are not service mesh enabled. As your organization matures, you will find that most workloads can integrate with Consul (it supports both containerized and non-containerized platforms). However, there will likely always be legacy applications or third-party software that cannot.

Ingress and Terminating gateways bridge that gap by safely allowing traffic into and out of the mesh, while still enforcing mTLS and network policies for the mesh’s internal services. These gateways let operators see the security and visibility benefits of a service mesh from day one while integrating their applications and infrastructure iteratively over time.

The other topic of this post, L7 traffic management, enables application deployment and testing strategies such as canary and blue-green deployments.

Before we dive in further, let’s review the high-level benefits of Consul’s service mesh features.

Consul Service Mesh and Hybrid Environments

The service mesh capabilities of Consul enable secure deployment best practices with automatic service-to-service encryption and identity-based authorization.

Consul uses the registered service identity (rather than IP addresses) to enforce access control with intentions (network policy). This makes access control easier to reason about, and policies keep working when orchestrators such as Kubernetes and HashiCorp Nomad reschedule services onto new addresses. Intention enforcement is network agnostic, so Consul works with physical networks, cloud networks, software-defined networks, multi-cloud, and more.
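To make identity-based authorization concrete, here is a simplified Python model of intention evaluation. It is a sketch under stated assumptions, not Consul's implementation: rules are keyed by source and destination service names (never IPs), `*` is a wildcard, the most specific matching rule wins with an exact destination outranking an exact source (following the ordering of Consul's documented precedence table), and the `default_allow` flag is a hypothetical stand-in for the cluster's default policy.

```python
from dataclasses import dataclass
from typing import List

WILDCARD = "*"

@dataclass(frozen=True)
class Intention:
    source: str       # calling service's identity, or "*"
    destination: str  # called service's identity, or "*"
    action: str       # "allow" or "deny"

def precedence(rule: Intention) -> int:
    """Higher is more specific; an exact destination outranks an exact source."""
    return (2 if rule.destination != WILDCARD else 0) + (1 if rule.source != WILDCARD else 0)

def matches(rule: Intention, source: str, destination: str) -> bool:
    return rule.source in (source, WILDCARD) and rule.destination in (destination, WILDCARD)

def authorize(rules: List[Intention], source: str, destination: str,
              default_allow: bool = False) -> bool:
    """Decide whether `source` may connect to `destination` using names only.

    No IP addresses are involved, so a rescheduled pod with a new IP
    gets the same answer as before.
    """
    candidates = [r for r in rules if matches(r, source, destination)]
    if not candidates:
        return default_allow  # hypothetical stand-in for the default policy
    return max(candidates, key=precedence).action == "allow"

rules = [
    Intention("*", "*", "deny"),                             # deny everything by default
    Intention("ingress-gateway", "go-movies-app", "allow"),  # except gateway -> app
]
print(authorize(rules, "ingress-gateway", "go-movies-app"))  # True
print(authorize(rules, "k8s-transit-app", "go-movies-app"))  # False
```

Because the decision only looks at names, moving `go-movies-app` to a different node or IP does not change the result, which is the property the paragraph above describes.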

Consul can also provide service discovery for heterogeneous environments where applications can register and discover healthy instances of one another via a global catalog. This is done via Consul’s DNS interface and health checking.

I’ve written about these two use cases in the past and discussed their high-level benefits for hybrid environments (K8s and virtual machines).

Demo Repository

If you are a hands-on learner, you can follow along with the repo and README that I use to deploy the demo infrastructure for this post.

NOTE: The demo will automatically deploy all of the infrastructure and applications by default. This post will walk through the configurations involved and how they were used together. If you would like to understand the actual execution of commands, then start here.

The Consul configuration files that we will review are applied in the demo here.

With the basics out of the way, let’s dig into some more advanced service mesh features to understand how they are used and their benefits.

Ingress Gateways

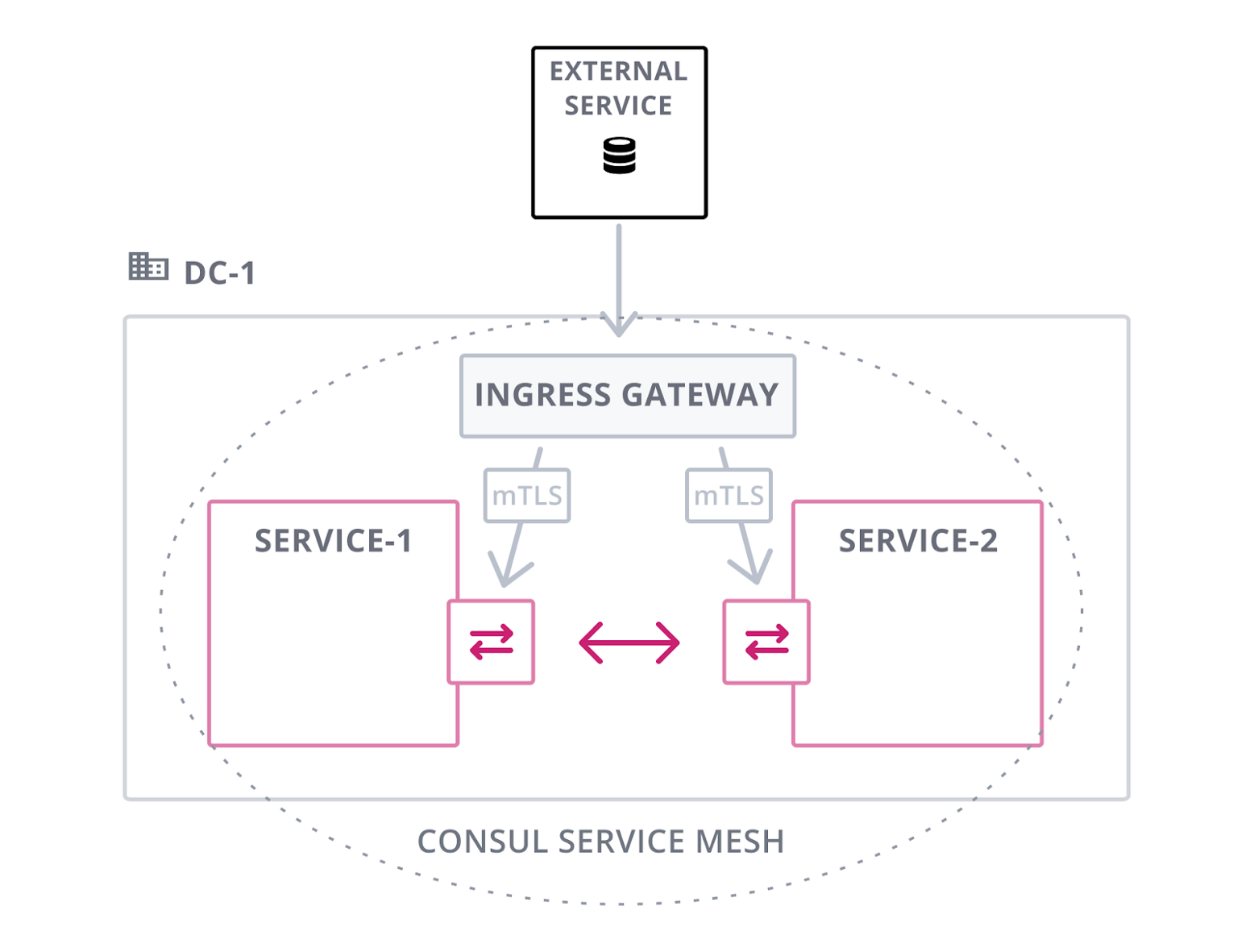

First, Consul provides both Ingress and Terminating gateways that will allow traffic to safely enter and exit the mesh. Ingress gateways are configured as services in Consul and act as the entry point for traffic that is inbound from non-mesh services.

In our Kubernetes example, the Consul Helm chart deploys the Ingress gateway (an Envoy proxy configured by Consul) as a pod exposed outside the cluster.

Once traffic from an external service reaches the Ingress gateway, Consul service discovery is used to load balance traffic across healthy instances of a particular service. This traffic is sent through the Envoy proxies, where security features like mTLS and network policy (intentions) are enforced. The diagram above shows the high-level flow.
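The idea of load balancing only across healthy instances can be sketched in a few lines of Python. This is a simplified model, assuming a plain round-robin policy and a hypothetical in-memory list of instances whose `healthy` flag stands in for Consul's health-check status; the real Envoy and Consul behavior is richer than this.

```python
import itertools
from dataclasses import dataclass

@dataclass(frozen=True)
class Instance:
    address: str   # hypothetical pod IP:port
    healthy: bool  # stands in for Consul's aggregated health-check status

class RoundRobinBalancer:
    """Cycle through the healthy instances of one service."""

    def __init__(self, instances):
        healthy = [i for i in instances if i.healthy]  # drop failing instances
        if not healthy:
            raise RuntimeError("no healthy instances available")
        self._cycle = itertools.cycle(healthy)

    def pick(self) -> Instance:
        return next(self._cycle)

catalog = [
    Instance("10.0.0.11:8080", healthy=True),
    Instance("10.0.0.12:8080", healthy=False),  # failing health check: never chosen
    Instance("10.0.0.13:8080", healthy=True),
]
lb = RoundRobinBalancer(catalog)
print([lb.pick().address for _ in range(4)])
# ['10.0.0.11:8080', '10.0.0.13:8080', '10.0.0.11:8080', '10.0.0.13:8080']
```

In Consul the healthy set is updated continuously as checks pass or fail; the sketch takes a one-time snapshot for simplicity.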

In our example, we can find the IP address of the Consul Ingress gateway via kubectl once the demo has finished deploying.

```
$ kubectl get svc consul-consul-ingress-gateway
NAME                            TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)                         AGE
consul-consul-ingress-gateway   LoadBalancer   10.15.255.211   35.202.227.148   5000:30992/TCP,8080:31993/TCP   8m18s
```

IMPORTANT: Make sure to add the EXTERNAL-IP to the /etc/hosts file on your laptop if you would like to access the demo from a browser such as Chrome or Firefox.

```
# Your /etc/hosts file
35.202.227.148 go-movies-app.ingress.dc1.consul
```

The ingress pod deployment was configured via the official Consul Helm chart. Specifically, we enabled the Ingress gateway and exposed it as a cloud load balancer service.

```yaml
# From Helm values.yaml
ingressGateways:
  enabled: true
  defaults:
    replicas: 1
    service:
      type: LoadBalancer
      ports:
        - port: 5000
          nodePort: null
        - port: 8080
          nodePort: null
```

Next, we configure the Consul Ingress gateway via an HCL configuration file. This file defines the services, ports, and protocols for the Ingress gateway’s listeners.

```hcl
# HCL config file for the ingress gateway
Kind = "ingress-gateway"
Name = "ingress-gateway"

Listeners = [
  {
    Port     = 5000
    Protocol = "tcp"
    Services = [
      {
        Name = "k8s-transit-app"
      }
    ]
  },
  {
    Port     = 8080
    Protocol = "http"
    Services = [
      {
        Name = "go-movies-app"
      }
    ]
  }
]
```

Applications are found via Consul’s built-in service discovery, and Consul’s health checks ensure that traffic is routed only to healthy instances. The service names “go-movies-app” and “k8s-transit-app” in the Services blocks are registered in Consul from their K8s deployment specs (covered further below).
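For HTTP listeners, Consul's ingress gateway by default matches the request's Host header against `<service-name>.ingress.*`, which is why the demo uses the hostname go-movies-app.ingress.dc1.consul and needs the /etc/hosts entry. A TCP listener has no Host header to inspect, so it fronts a single service. The Python sketch below models that lookup for the two listeners above; it is a simplified illustration (it ignores custom `Hosts` settings), not Consul's code.

```python
from typing import Dict, List, Optional, Tuple

# port -> (protocol, service names), mirroring the ingress-gateway config entry
LISTENERS: Dict[int, Tuple[str, List[str]]] = {
    5000: ("tcp", ["k8s-transit-app"]),
    8080: ("http", ["go-movies-app"]),
}

def select_service(port: int, host: Optional[str] = None) -> Optional[str]:
    """Return the mesh service an ingress request on `port` is forwarded to."""
    if port not in LISTENERS:
        return None
    protocol, services = LISTENERS[port]
    if protocol == "tcp":
        # No Host header on raw TCP: the listener fronts exactly one service.
        return services[0]
    if host is None:
        return None
    hostname = host.split(":", 1)[0].lower()  # drop any ":8080" port suffix
    for name in services:
        # Default HTTP match: "<service-name>.ingress.<anything>"
        if hostname.startswith(f"{name}.ingress."):
            return name
    return None

print(select_service(8080, "go-movies-app.ingress.dc1.consul:8080"))  # go-movies-app
print(select_service(8080, "35.202.227.148:8080"))                    # None
print(select_service(5000))                                           # k8s-transit-app
```

The second call shows why browsing to the bare external IP does not reach the app: no service name appears in the Host header, so no route matches.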

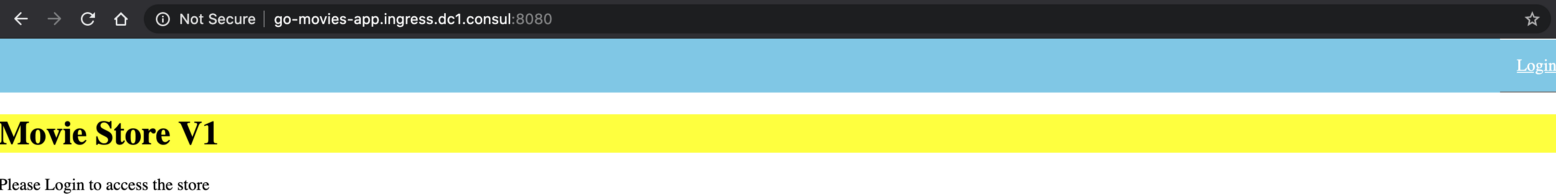

Now we can test the Ingress gateway. Once you have added the Ingress gateway's external IP to your /etc/hosts file, open the application in your browser on port 8080. (Port 5000 serves another demo application that uses HashiCorp Vault for secrets; see this blog post for details.)

http://go-movies-app.ingress.dc1.consul:8080

If you refresh your browser a couple of times, you will notice that two different versions of the application are returned. The Ingress gateway has been configured to split traffic between two versions of the app. This brings us to the more advanced Layer 7 features that we will cover shortly.

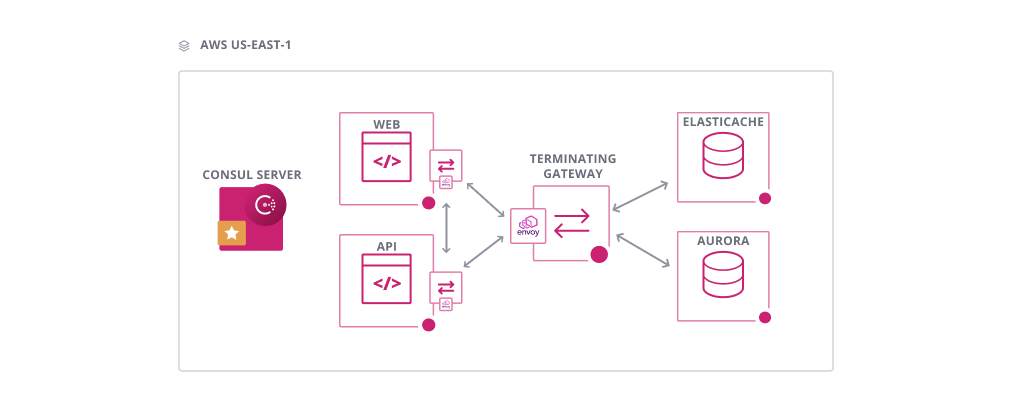

Terminating Gateways

Terminating gateways solve the inverse of the Ingress problem. They allow service mesh traffic to exit the mesh and reach non-mesh applications, with network policy still enforced. In most large organizations (think Global 2000 or Fortune 500), there will very likely be stateful or existing applications that will not be containerized. Consul accommodates these hybrid environments through its simple architecture and through gateways for apps that have not been (or cannot be) added to the mesh.

Terminating gateways provide connectivity to external destinations by terminating Consul mTLS connections, enforcing Consul intentions, and forwarding requests to appropriate destination services.

These are not covered in today’s demo. However, the Consul Helm chart can deploy them in the same manner as the Ingress gateway.

L7 Traffic Management

Beyond enforced mTLS and network policy, Consul’s service mesh offers several L7 traffic management features.

Some examples include HTTP routing based on path (or on headers and query parameters), traffic shifting, and traffic splitting (which we’ve already seen above).

These features enable advanced rollout methods like canary testing and blue-green deployments.

Overview

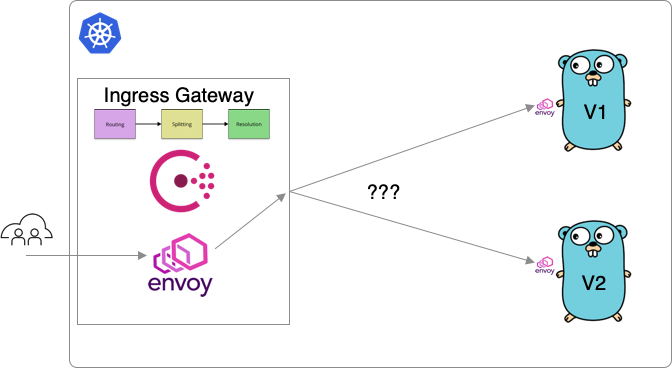

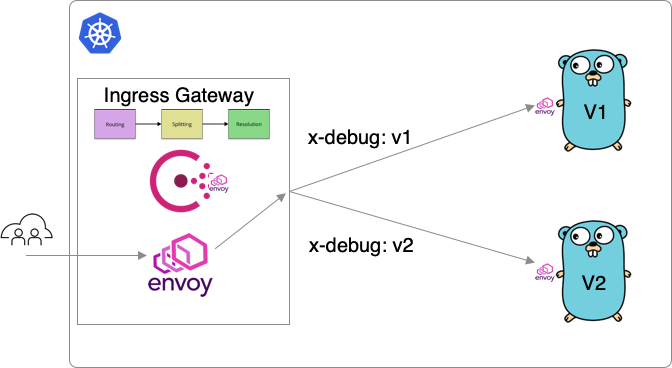

Consul and Envoy implement these features with a three-stage process, which Consul calls the discovery chain, to resolve upstream services (the Kubernetes apps we are trying to send traffic to).

The three stages are routing, splitting, and resolution. We will break down each one with a concrete configuration example. Each stage can optionally take a configuration entry; if it is omitted, a default behavior is used.

All three stages are evaluated at the proxy layer: Consul compiles the configuration and pushes the result to every Envoy proxy in the mesh. In our example, the proxy that matters is the Ingress gateway, which uses this three-stage logic to resolve the correct backend service to connect to (in this case, go-movies-app).
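The order of the three stages, and the defaults used when a stage has no config entry, can be sketched as a small Python pipeline. This is a hypothetical simplification: Consul compiles config entries into a discovery chain for Envoy rather than calling Python functions, and the sketch assumes that a route which names a subset goes straight to resolution, while a route without a subset (or the default route) passes through the splitter. The stage functions and pod names are illustrative stand-ins.

```python
from typing import Callable, Dict, List, Optional, Tuple

Request = Dict[str, str]            # simplified: just the HTTP headers
Target = Tuple[str, Optional[str]]  # (service name, subset name or None)

def discover(
    service: str,
    request: Request,
    router: Optional[Callable[[Request], Optional[Target]]] = None,
    splitter: Optional[Callable[[str], Target]] = None,
    resolver: Optional[Callable[[Target], List[str]]] = None,
) -> List[str]:
    """Walk the routing -> splitting -> resolution stages for one request."""
    # Stage 1: routing. No router, or no matching route, means the service itself.
    target = router(request) if router else None
    if target is None:
        target = (service, None)
    # Stage 2: splitting. Only applies when the route did not pin a subset.
    if target[1] is None and splitter:
        target = splitter(target[0])
    # Stage 3: resolution. Without a resolver, every healthy instance qualifies.
    if resolver is None:
        return [f"any healthy {target[0]} instance"]
    return resolver(target)

# Hypothetical stand-ins for the three config entries covered below.
def header_router(req: Request) -> Optional[Target]:
    return ("go-movies-app", "v2") if req.get("x-debug") == "2" else None

def always_v1(service: str) -> Target:
    return (service, "v1")

def tag_resolver(target: Target) -> List[str]:
    pods = {"v1": ["pod-v1-a"], "v2": ["pod-v2-a"]}  # hypothetical pod names
    return pods[target[1] or "v1"]                    # default subset: v1

print(discover("go-movies-app", {}))  # ['any healthy go-movies-app instance']
print(discover("go-movies-app", {"x-debug": "2"}, header_router, always_v1, tag_resolver))  # ['pod-v2-a']
print(discover("go-movies-app", {}, header_router, always_v1, tag_resolver))  # ['pod-v1-a']
```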

Our example setup:

Kubernetes Deployment Spec

Before we start configuring L7 features, we need to add Consul annotations to our Kubernetes Deployment spec. Behind the scenes, Consul uses a mutating admission webhook (deployed by the Helm chart) that reads these annotations when pods are created. This lets Consul register the workload as a service in its catalog and inject an Envoy sidecar proxy into the application’s pod.

The Kubernetes Deployment spec can be found here. The important line for our example is the “service-tags” annotation, which tags the service in Consul; these tags are used in the discovery stages described above. Version 2 of the app looks similar and is in the same directory.

```yaml
# Annotations from the v1 Deployment spec (excerpt)
consul.hashicorp.com/connect-inject: "true"
consul.hashicorp.com/connect-service-protocol: "http"
consul.hashicorp.com/service-tags: "v1"
consul.hashicorp.com/connect-service-upstreams: "vault:8200, pq-postgresql:5432, go-movies-favorites-app:8081"
```

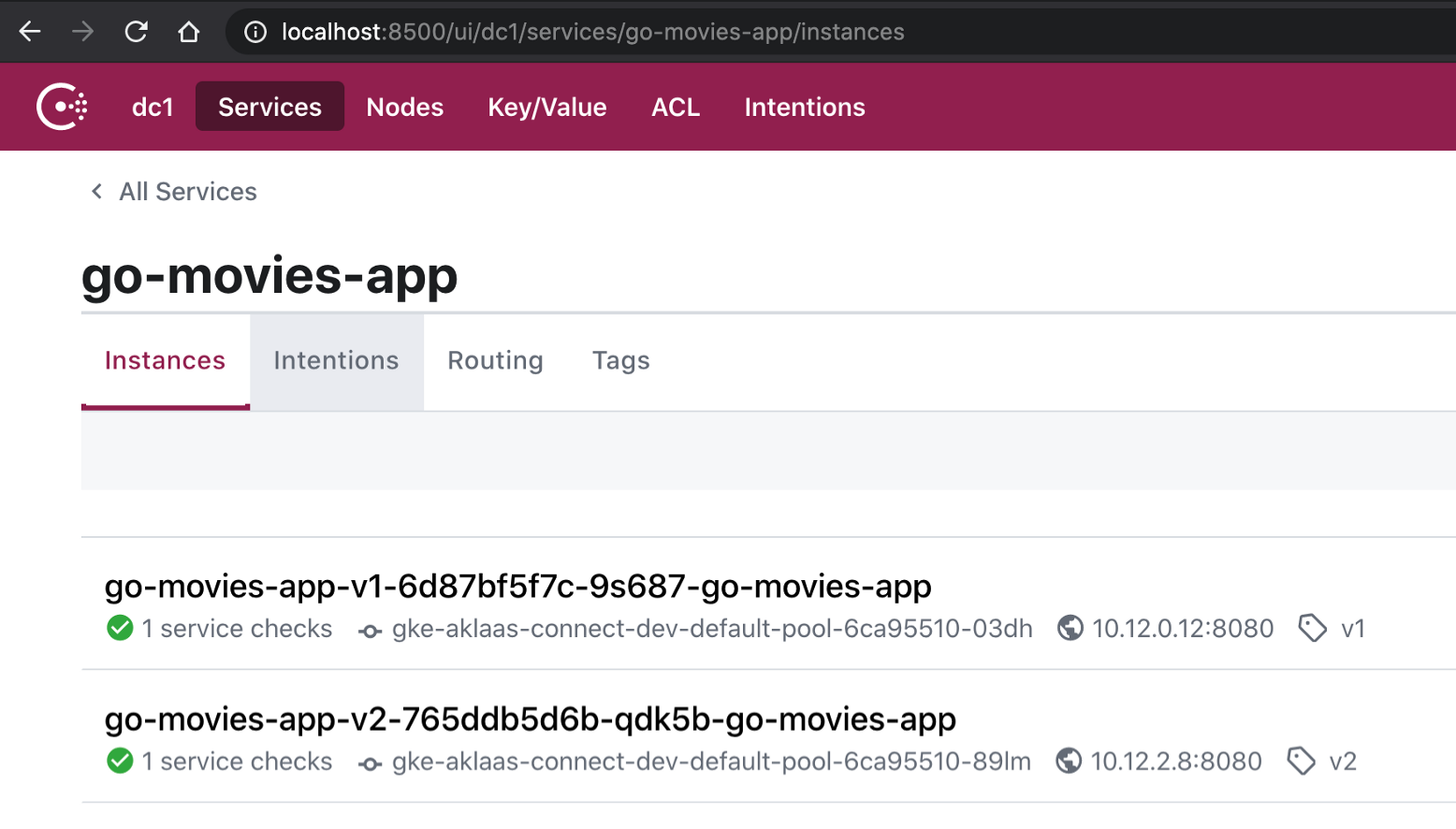

The deployment is registered in Consul as the service called “go-movies-app”. This is done via the “name” key under metadata (this is configurable). Both versions of the application should appear as running and healthy in the Consul UI. See the repo README for details on how to access it.

Traffic Resolution

Although it runs counter to the order in the three-stage (Routing > Splitting > Resolution) diagram above, I’m explaining the discovery stages in reverse because I find that easier to follow when first learning these concepts.

Now that both versions of our Kubernetes app have been deployed and registered/tagged in Consul, we are ready to start leveraging advanced L7 features.

The resolver config enables us to define subsets of instances of a service that will satisfy service discovery requests in Consul.

Resolution is configured with the following file. All we’ve done here is create subsets of “go-movies-app” based on the service tag annotations we set in the Kubernetes Deployment spec above. When the Deployment spec was applied, Consul automatically registered the service; this config carves that pool of instances into two logical subsets. You can see them by clicking the “go-movies-app” service in the Consul UI.

```hcl
kind           = "service-resolver"
name           = "go-movies-app"
default_subset = "v1"

subsets = {
  "v1" = {
    filter = "v1 in Service.Tags"
  }
  "v2" = {
    filter = "v2 in Service.Tags"
  }
}
```

More service resolver options are listed here.
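To show what the subset filters do, here is a Python sketch that evaluates only the one expression form used above, `<tag> in Service.Tags`, against hypothetical registered instances, and falls back to `default_subset` when no subset is requested. Consul's real filter language supports many more operators; this parser is deliberately minimal.

```python
import re
from typing import Dict, List, Optional

# Matches only the simple form used in the resolver above, e.g. "v1 in Service.Tags".
FILTER_RE = re.compile(r'^\s*"?([\w.-]+)"?\s+in\s+Service\.Tags\s*$')

def build_subsets(instances: List[dict], subsets: Dict[str, str]) -> Dict[str, List[str]]:
    """Group instance IDs into named subsets using '<tag> in Service.Tags' filters."""
    result: Dict[str, List[str]] = {}
    for subset_name, expr in subsets.items():
        m = FILTER_RE.match(expr)
        if not m:
            raise ValueError(f"unsupported filter: {expr!r}")
        tag = m.group(1)
        result[subset_name] = [i["id"] for i in instances if tag in i["tags"]]
    return result

def resolve(groups: Dict[str, List[str]], subset: Optional[str] = None,
            default_subset: str = "v1") -> List[str]:
    # An unnamed subset falls back to default_subset, as in the config above.
    return groups[subset or default_subset]

instances = [
    {"id": "go-movies-app-v1-abc", "tags": ["v1"]},  # hypothetical registrations
    {"id": "go-movies-app-v2-xyz", "tags": ["v2"]},
]
groups = build_subsets(instances, {"v1": "v1 in Service.Tags", "v2": "v2 in Service.Tags"})
print(groups)          # {'v1': ['go-movies-app-v1-abc'], 'v2': ['go-movies-app-v2-xyz']}
print(resolve(groups)) # ['go-movies-app-v1-abc']
```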

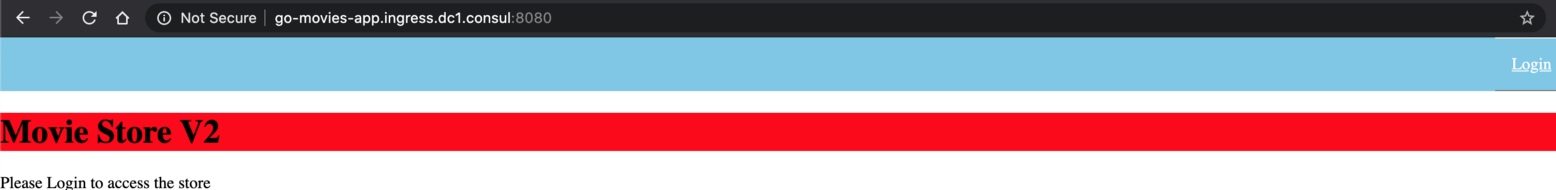

With both our app versions running in Kubernetes, registered in Consul (as “go-movies-app”), and organized into subsets (v1 and v2), we can test some deployment strategies.

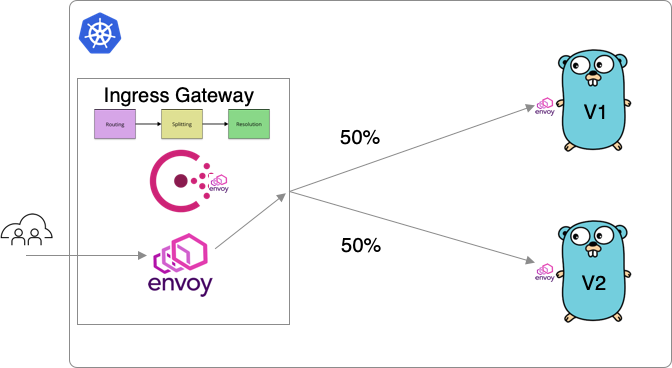

Traffic Splitting

We saw this in action when we refreshed our browser earlier in the demo. Traffic splitting does what its name describes: it splits percentages of traffic across different subsets of a service. In this case, traffic is split 50/50 across both versions of the app.

```hcl
kind = "service-splitter"
name = "go-movies-app"

splits = [
  {
    weight         = 50
    service_subset = "v1"
  },
  {
    weight         = 50
    service_subset = "v2"
  },
]
```

In this configuration, all we've done is assign weights to the service subsets we defined earlier. You can also test this with repeated curl commands:

```shell
curl http://go-movies-app.ingress.dc1.consul:8080/
```

All service splitting options are listed here.
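A weighted split can be modeled in Python as a random draw against cumulative weights. The sketch assumes, as Consul requires, that the weights add up to 100, and uses a seeded `random.Random` so runs are repeatable. Envoy's actual weighted-cluster selection is implemented differently, so treat this as an illustration of the 50/50 behavior rather than a reproduction of it.

```python
import random
from collections import Counter
from typing import Callable, List, Tuple

def make_splitter(splits: List[Tuple[float, str]], rng: random.Random) -> Callable[[], str]:
    """Return a function that picks a subset according to percentage weights."""
    total = sum(w for w, _ in splits)
    if abs(total - 100) > 1e-9:
        raise ValueError(f"split weights must add up to 100, got {total}")

    def pick() -> str:
        roll = rng.uniform(0, 100)
        cumulative = 0.0
        for weight, subset in splits:
            cumulative += weight
            if roll < cumulative:
                return subset
        return splits[-1][1]  # guards against a roll of exactly 100.0
    return pick

pick = make_splitter([(50, "v1"), (50, "v2")], random.Random(42))
counts = Counter(pick() for _ in range(10_000))
print(counts)  # roughly 5,000 requests per subset
```

Shifting the weights over time (for example 90/10, then 50/50, then 0/100) is how the same config entry supports a gradual traffic shift toward a new version.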

Traffic Routing

Traffic routing is actually the first stage in the upstream discovery process. However, I find it easier to explain last, once we understand how our services were registered, organized into subsets, and resolved.

A router config entry lets a user intercept traffic using L7 criteria such as path prefixes or HTTP headers, and change its behavior by sending it to a different service or service subset.

By default, the demo writes a configuration that routes requests to specific subsets based on an HTTP header. This is useful for canary deployments, where you want to call and test a new version of the application while the old version keeps serving traffic.

```hcl
Kind = "service-router"
Name = "go-movies-app"

Routes = [
  {
    Match {
      HTTP {
        Header = [
          {
            Name  = "x-debug"
            Exact = "1"
          },
        ]
      }
    }
    Destination {
      Service       = "go-movies-app"
      ServiceSubset = "v1"
    }
  },
  {
    Match {
      HTTP {
        Header = [
          {
            Name  = "x-debug"
            Exact = "2"
          },
        ]
      }
    }
    Destination {
      Service       = "go-movies-app"
      ServiceSubset = "v2"
    }
  },
]
```

This routing config maps each value of the x-debug HTTP header to a go-movies-app service subset (defined in the resolver step above). All available configuration options are listed here.
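Route evaluation can be sketched in Python: routes are checked in order, the first route whose match criteria hold wins, and a request that matches nothing falls through to the default route (here, the 50/50 splitter). The sketch models only the `Exact` header match used above and compares header names case-insensitively, as HTTP does; the route table is a hypothetical hand copy of the HCL.

```python
from typing import Dict, List, Optional, Tuple

# Each route: (header name, exact value, destination subset), mirroring the router above.
HEADER_ROUTES: List[Tuple[str, str, str]] = [
    ("x-debug", "1", "v1"),
    ("x-debug", "2", "v2"),
]

def route_by_header(headers: Dict[str, str]) -> Optional[str]:
    """Return the subset of the first matching route, or None for the default route."""
    normalized = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    for name, exact, subset in HEADER_ROUTES:
        if normalized.get(name.lower()) == exact:
            return subset
    return None  # no match: fall through to the splitter

print(route_by_header({"x-debug": "1"}))  # v1
print(route_by_header({"X-Debug": "2"}))  # v2
print(route_by_header({}))                # None
```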

We can test this via curl.

```
$ curl -H "x-debug: 1" http://go-movies-app.ingress.dc1.consul:8080/
<!doctype html>
<html lang="en">
<head>
. . .
<h1>Movie Store V1</h1>
Please Login to access the store
</body>
</html>
```

And Version 2 . . .

```
$ curl -H "x-debug: 2" http://go-movies-app.ingress.dc1.consul:8080/
<!doctype html>
<html lang="en">
. . .
<h1>Movie Store V2</h1>
Please Login to access the store
</body>
</html>
```

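The router can also match on path prefixes instead of headers. The following configuration (router-path.hcl in the demo) sends /v1 and /v2 requests to their respective subsets.

```hcl
Kind = "service-router"
Name = "go-movies-app"

Routes = [
  {
    Match {
      HTTP {
        PathPrefix = "/v1"
      }
    }
    Destination {
      Service       = "go-movies-app"
      ServiceSubset = "v1"
    }
  },
  {
    Match {
      HTTP {
        PathPrefix = "/v2"
      }
    }
    Destination {
      Service       = "go-movies-app"
      ServiceSubset = "v2"
    }
  },
]
```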

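This config is not deployed by default, so we must first write it to Consul. Because a service has only one service-router config entry, writing it replaces the header-based router above. Make sure you are in the right directory (the one containing the demo/ folder).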

```shell
consul config write demo/consul/router-path.hcl
```

Next, we can test via curl (or your browser).

```
$ curl http://go-movies-app.ingress.dc1.consul:8080/v1
...
<h1>Movie Store V1</h1>
```

Version 2 . . .

```
$ curl http://go-movies-app.ingress.dc1.consul:8080/v2
...
<h1>Movie Store V2</h1>
```
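The path-prefix router follows the same first-match rule, just on the URL path. The minimal Python sketch below uses a route table hand-copied from router-path.hcl. Note that a plain prefix test also matches paths such as /v10, because `PathPrefix` is a string prefix rather than a path-segment match; that is worth keeping in mind when choosing prefixes.

```python
from typing import List, Optional, Tuple

# (path prefix, destination subset), copied from the router-path.hcl routes.
PATH_ROUTES: List[Tuple[str, str]] = [
    ("/v1", "v1"),
    ("/v2", "v2"),
]

def route_by_path(path: str) -> Optional[str]:
    """Return the subset for the first route whose prefix starts the path."""
    for prefix, subset in PATH_ROUTES:
        if path.startswith(prefix):
            return subset
    return None  # e.g. "/" falls through to the default route

for p in ["/v1", "/v2/login", "/", "/v10"]:
    print(p, route_by_path(p))
# /v1 v1
# /v2/login v2
# / None
# /v10 v1
```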

Summary

Consul’s service mesh is a powerful tool for improving your deployment workflows. In this post, we went over Ingress gateways and L7 features for advanced traffic management. However, there are many other benefits like enforced TLS encryption, network policy, observability, tracing, and more. See the resources below to learn more about Consul.

Further Reading

L7 Traffic Management in Consul

Check out HashiCorp’s tutorial site for guides on Consul topics and walkthroughs.

Also, see our Consul tracks at Instruqt for hands-on learning with actual Consul deployments.

Consul in hybrid computing environments (K8s and VMs)