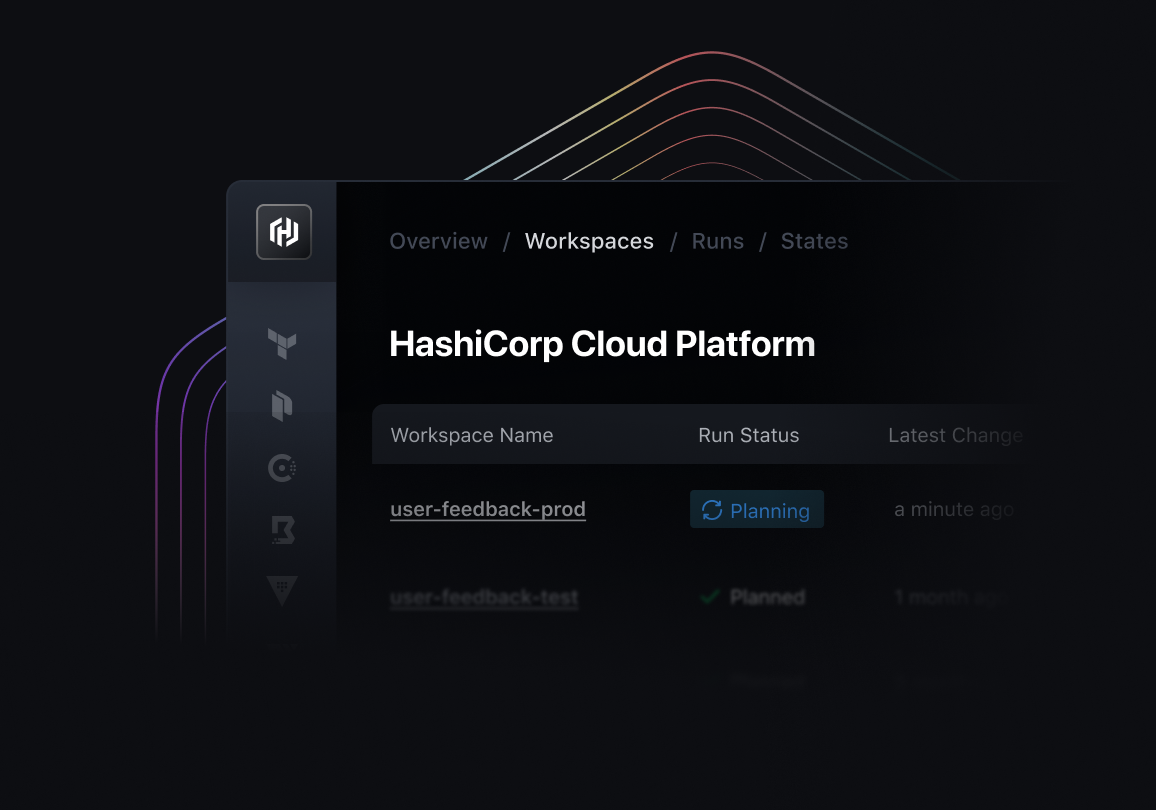

Do cloud right with the HashiCorp Cloud Platform

Delivered as an integrated suite of SaaS services, the HashiCorp Cloud Platform (HCP) is the fastest way to get started with The Infrastructure Cloud. It’s designed to bring Infrastructure and Security Lifecycle Management together into one smooth, consistent operating model.

With HCP, you can provision infrastructure, secure access, and enforce policies in minutes. It’s built to scale from small teams all the way up to enterprise-wide operations across on-premises, hybrid, and multi-cloud environments.

490+ customers in the Global 2000

500M annual downloads

Your fastest path to getting value from the cloud

HCP helps you see value from the cloud quickly by speeding up delivery, lowering costs, and reducing risk, so you can innovate faster without compromising security or your budget.

Accelerate delivery and innovation

Drive innovation by automating provisioning, integrating security from Day 1, and providing a unified operating model for platform and developer teams.

Join thousands using HCP to build faster, secure, optimized cloud programs

The HashiCorp Cloud Platform is built for enterprises that want to move fast, so you can focus on growth instead of building custom tools.

HCP Terraform Europe is now available for customers in the European region to help with latency and provide geographic service locality for their infrastructure deployments. Contact Sales to learn more.

- Push-button deployments: Accelerate cloud adoption with production-grade infrastructure, built-in security, and pay-as-you-go pricing.

- One workflow across clouds: Enable consistency and flexibility with centralized identity, policies, and virtual networks.

- Fully managed infrastructure: Increase productivity and reduce costs by letting HashiCorp experts manage, monitor, upgrade, and scale your clusters.

Infrastructure Lifecycle Management

Use infrastructure as code to build, deploy, and manage the infrastructure that underpins cloud applications.

Security Lifecycle Management

Use identity-based access controls to manage the security lifecycle of your secrets, users, and services.

Trusted with critical global applications

Enforce fine-grained, logic-based policy decisions with Sentinel, HashiCorp’s embeddable policy as code framework.

Role-based access control grants permissions only to those who need them. Manage access to sensitive resources with SAML single sign-on integrations with Okta and Azure AD.

HashiCorp products power the largest modern applications in the world. Highly available and scalable solutions help you avoid downtime and meet your recovery time and recovery point objectives.

Manage the lifecycle of your infrastructure deployments with continuous validation and drift detection. Streamline your auditing process with comprehensive log files.

Amplify team productivity with a private registry where developers can find and share reusable code, policy libraries, and other resources in one place.

End-to-end security across all environments

HashiCorp’s enterprise products and managed services are backed by comprehensive security features and compliance certifications so you can meet regulatory, industry, and internal requirements. And with 24/7 monitoring and support, customers have peace of mind knowing their data and infrastructure are protected.

Let’s build your cloud automation platform

HashiCorp has more than a decade of experience and deployments at thousands of large organizations worldwide. HashiCorp Validated Designs use that expertise to outline best practices for deploying and operating HashiCorp solutions. Our service and customer success teams collaborate with certified partners to design and implement a cloud automation platform tailored to your business needs.

Take the next step

Start using the HashiCorp Cloud Platform today with a $500 trial credit.*

*North America region customers only