Operating Terraform for Multiple Teams and Applications

This guide provides an operational framework for organizations looking to establish an infrastructure provisioning workflow leveraging the collaboration and governance features of Terraform Enterprise.

Multi-Cloud Infrastructure Provisioning

The foundation for adopting the cloud is infrastructure provisioning. HashiCorp Terraform is the world's most widely used cloud provisioning product and can be used to provision infrastructure for any application using an array of providers for any target platform.

To achieve shared services for infrastructure provisioning, IT teams should start by implementing reproducible infrastructure as code practices, and then layer compliance and governance workflows on top to ensure appropriate controls.

Terraform Enterprise automates the infrastructure provisioning workflow across an organization. This document outlines how organizations can set up Terraform Enterprise to build complex, interdependent infrastructure for multiple teams and initiatives using modules and workspaces.

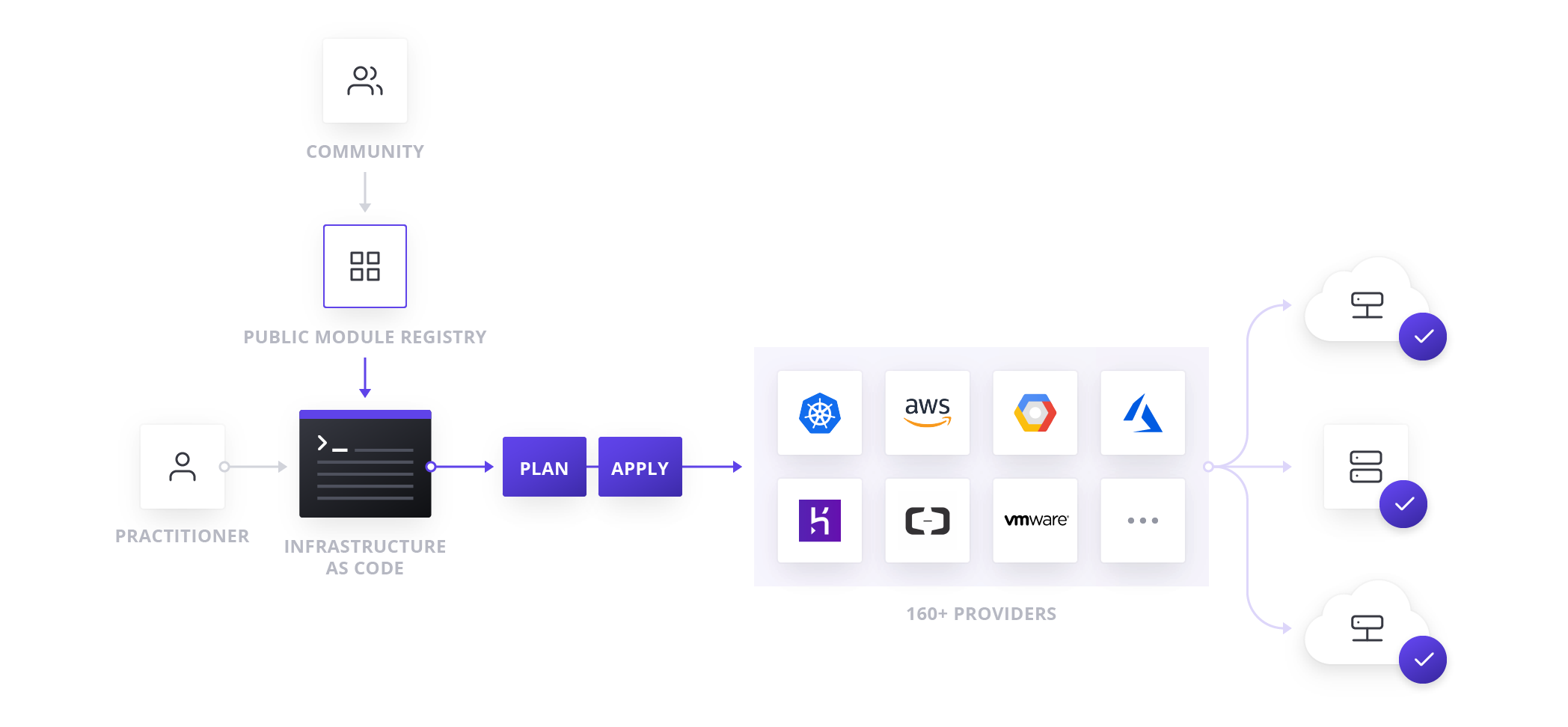

Organizations using Terraform Open Source should be familiar with this workflow:

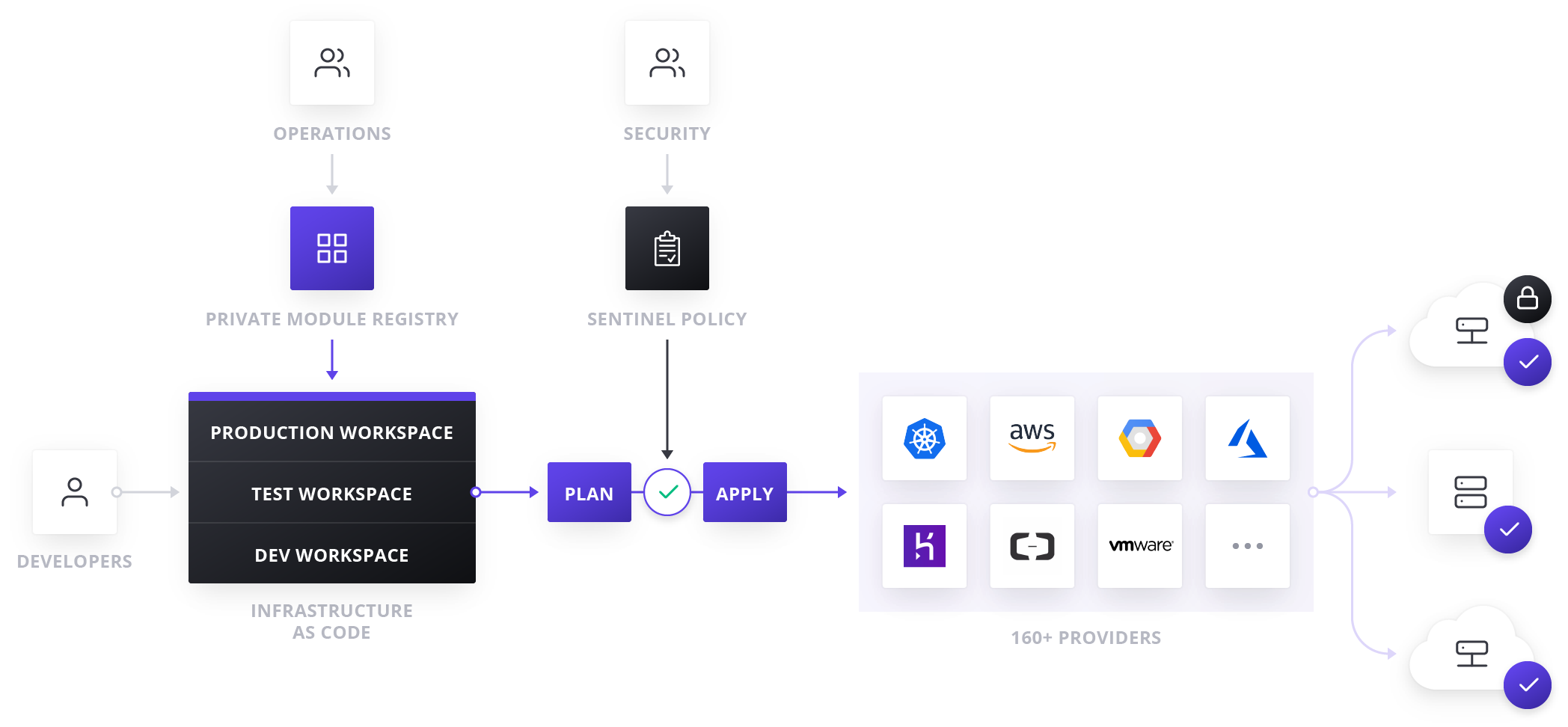

This guide will provide an operational framework to establish a new workflow using the collaboration and governance features of Terraform Enterprise.

Goals & Assumptions

The goal of this model is to give organizations a clear path to supporting multiple teams provisioning infrastructure with Terraform Enterprise. Most organizations gradually adopt Terraform, often starting the adoption journey with a small pilot team that proves out and validates the use case. After this pilot, Terraform Enterprise is usually brought under the management of a central IT, operations, or cloud team. Different organizations will have different structures that best suit their needs, but generally the team managing Terraform Enterprise should be infrastructure experts; hereafter this team will be referred to as the infrastructure team. This infrastructure team facilitates and enables the larger organization to provision infrastructure.

Assuming the Terraform pilot is successful, the infrastructure team should have a working understanding of Terraform’s features. For reference, here are a few key terms to be familiar with:

Infrastructure as Code is the approach that Terraform uses to build infrastructure. Users define their desired infrastructure in HCL (HashiCorp Configuration Language) and Terraform builds, manages, and tracks the state of that infrastructure.
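As a minimal sketch of what "desired infrastructure in HCL" looks like, the configuration below declares a single AWS instance. The region, instance type, resource name, and the `ami_id` variable are illustrative assumptions, not values from this guide.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

provider "aws" {
  region = "us-east-1" # assumed region, for illustration only
}

# Hypothetical input: supply a real image ID for your account and region.
variable "ami_id" {
  type        = string
  description = "AMI used to launch the example instance."
}

# Terraform compares this declaration with its state file and creates,
# updates, or destroys the instance so that reality matches the code.
resource "aws_instance" "web" {
  ami           = var.ami_id
  instance_type = "t3.micro"

  tags = {
    Name = "example-web"
  }
}
```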

Workspaces are the unit of isolation in Terraform. Workspaces are associated with a set of Terraform configuration files, variables, settings, and a state file. Similar to how monolithic applications are decomposed into microservices, monolithic infrastructure is decomposed into workspaces. Workspaces have separate owners, access controls, and scope. We recommend having at least one workspace per deployment environment (e.g. Dev, Test, Prod) per configuration.

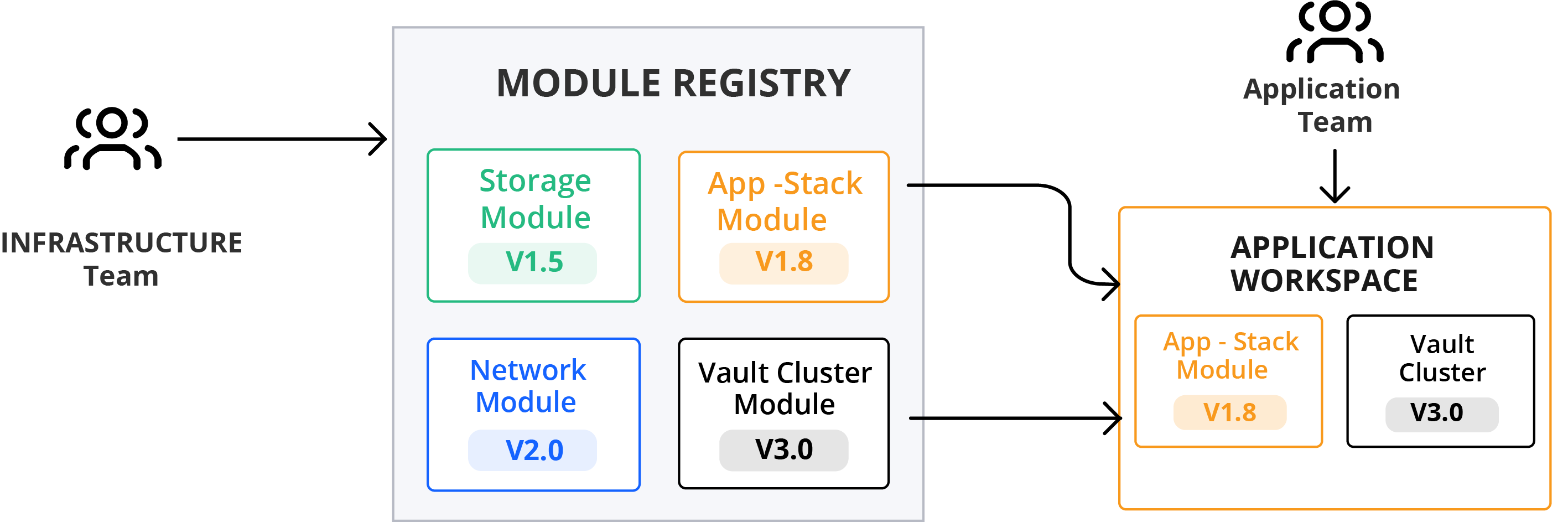
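One possible way to express the "one workspace per environment per configuration" recommendation is to manage the workspaces themselves with the `tfe` provider. This is a sketch under stated assumptions: the hostname `tfe.example.com`, the organization `example-org`, and the workspace naming scheme are all hypothetical.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

provider "tfe" {
  # Hypothetical Terraform Enterprise hostname. The API token is expected
  # to be supplied outside the configuration (for example, via TFE_TOKEN).
  hostname = "tfe.example.com"
}

locals {
  environments = ["dev", "test", "prod"]
}

# Creates example-app-dev, example-app-test, and example-app-prod.
resource "tfe_workspace" "app" {
  for_each     = toset(local.environments)
  name         = "example-app-${each.key}"
  organization = "example-org" # hypothetical organization name
}
```

Repeating this pattern per configuration (network, database, application) yields the per-environment, per-configuration layout described above.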

Modules are re-usable infrastructure components. Similar to how libraries are reused across microservices, modules are reused across workspaces.

With working knowledge of Terraform’s functionality, the next task for the infrastructure team is to understand the relationships between the teams and applications they will support with Terraform. With that understanding, the infrastructure team can then enable the most secure and efficient infrastructure provisioning for those teams and applications.

Addressing Legacy Workflows and Applications

Making sense of legacy workflows and applications helps motivate people and teams to adopt new ones. Infrastructure provisioning has changed dramatically over the past few years, moving from hardware to virtual machines to cloud-based infrastructure. This transition is one from static, homogeneous resources to dynamic, diverse ones. Many infrastructure teams will be familiar with the point-and-click provisioning tooling and workflows made popular for provisioning and managing virtual machines. However, this approach involves slow, error-prone manual effort. Many new tools attempt to recreate this same workflow for cloud environments. While these tools help bridge the gap, Infrastructure as Code tools provide automation, efficiency, and safety that point-and-click tools cannot replicate. For organizations that are most comfortable with tools like cloud management platforms, we recommend a “better together” approach that retains the comfort of GUIs while providing the opportunity to get up to speed with Infrastructure as Code.

Understanding Relationships Between Teams and Applications

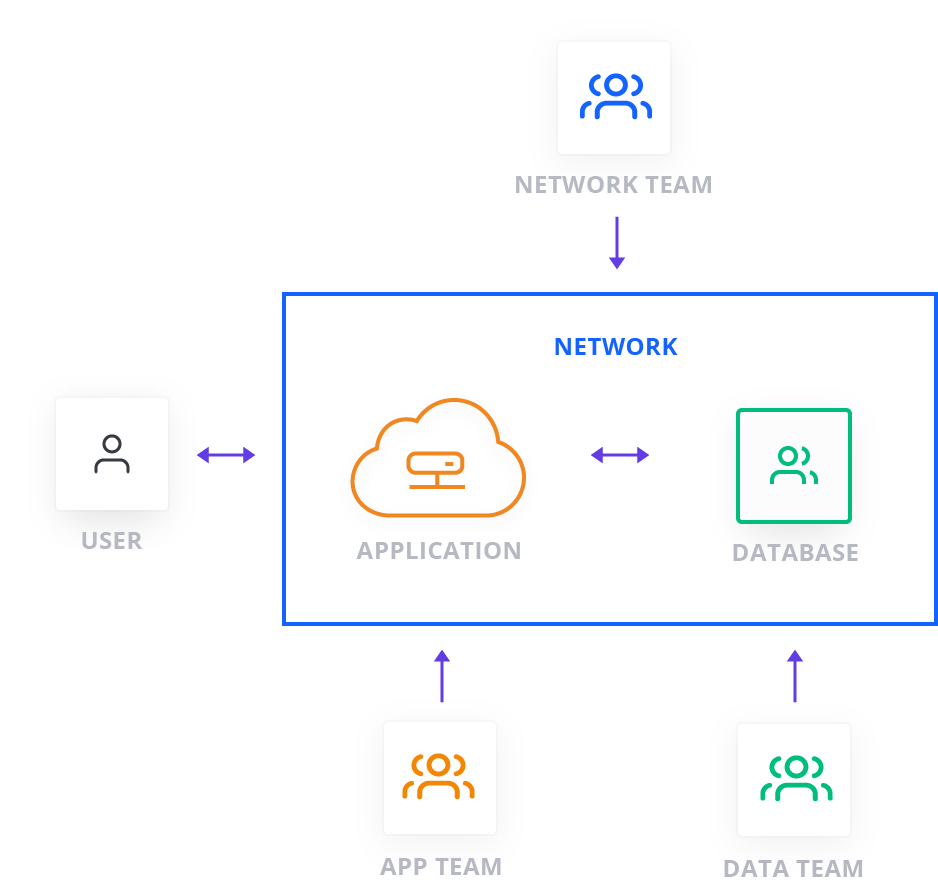

A small team (or individual) using Terraform has the benefit of a tight feedback loop between key team members and responsibilities. For example, the same person might be responsible for building infrastructure, writing application code, and securing that application. In this scenario, operating with a single, monolithic configuration file might be sufficient. However, as organizations grow and build more complex applications running on top of more complex infrastructure, that feedback loop loosens dramatically: networking teams don’t have perfect insight into the needs of development teams, and security teams want to slow operations for auditing purposes while operations teams want to provide speed and efficiency. This motivates the infrastructure team to create boundaries between components, both to harden the overall topology against breakage and to allow work to proceed in parallel.

For the infrastructure team managing Terraform, it is important to understand relationships between teams and applications. They should work with teams to understand communication channels between teams, interdependencies between infrastructure components, and interdependencies between the services running on top of the infrastructure. A simple application might leverage a network, an app server, and a database, each owned by a distinct team.

Other important considerations include both the rate of change of these components and the scale of those changes. Slower-changing components such as VPCs can, and should, be treated differently than more rapidly changing components like compute instances. Likewise, by classifying releases (as major or minor, at the least), teams can establish processes appropriate to each kind of change.

Determining Workspace Structure and Function

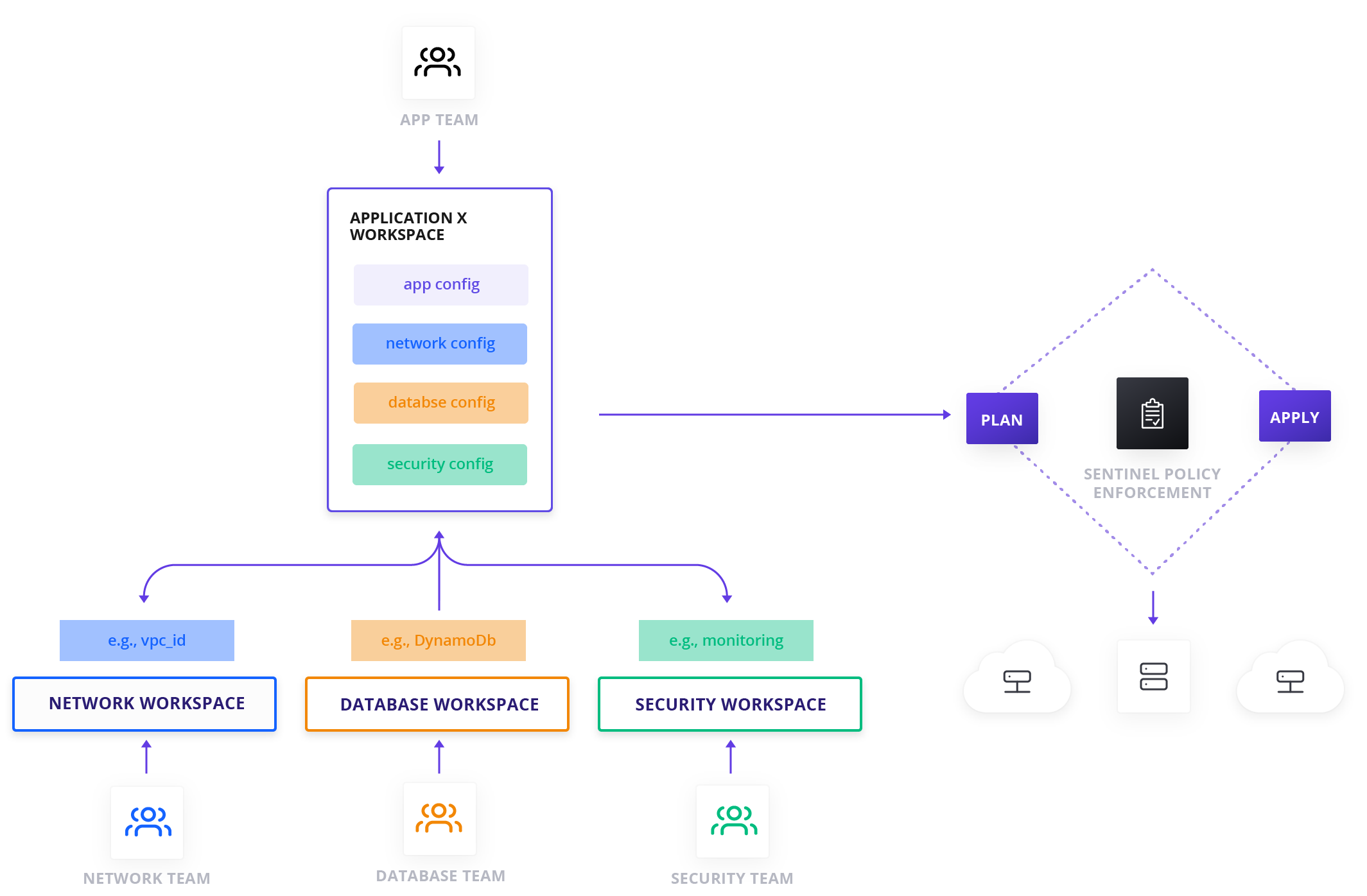

After understanding the relationships between teams and applications, the infrastructure team should break down existing infrastructure to match those relationships using workspaces within Terraform. Decomposing infrastructure with workspaces allows teams to efficiently work in parallel, while minimizing the blast radius of changes and ensuring proper access controls.

A topology might include many applications and services existing in respective workspaces with appropriate access controls in place. From the example above, the following workspaces would provide helpful decomposition:

Our recommended practice is that the networking workspace be managed by the networking team and expose outputs that can be consumed by the application workspace (and by any other components, services, and applications deployed on the network). The networking workspace should allow “write” access for the networking team, but limited permissions for other teams. Other middleware and shared services (e.g. security, logging, monitoring, or database tooling) employed by multiple applications should get their own workspaces, with “write” access available to the teams managing those services and “read” access for teams consuming those resources. This allows application teams to quickly make use of these services without special knowledge or setup. Likewise, each application should get its own workspaces with similarly appropriate access control.
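The producer/consumer relationship between the networking and application workspaces can be sketched as two separate configurations. This is an illustration, not a prescribed layout: the hostname, organization, workspace name, CIDR ranges, and the `ami_id` variable are hypothetical, and the consuming workspace must be permitted to read the networking workspace's state outputs.

```hcl
# --- Configuration for the networking workspace (owned by the networking team) ---

resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_subnet" "private" {
  vpc_id     = aws_vpc.main.id
  cidr_block = "10.0.1.0/24"
}

# Outputs are the published interface of the networking workspace.
output "vpc_id" {
  value = aws_vpc.main.id
}

output "private_subnet_id" {
  value = aws_subnet.private.id
}

# --- Configuration for the application workspace (owned by the app team) ---

# Reads the outputs of the networking workspace without being able to change it.
data "terraform_remote_state" "network" {
  backend = "remote"

  config = {
    hostname     = "tfe.example.com" # hypothetical Terraform Enterprise host
    organization = "example-org"     # hypothetical organization
    workspaces = {
      name = "networking-prod"       # hypothetical workspace name
    }
  }
}

variable "ami_id" {
  type        = string
  description = "AMI used for the application server (hypothetical input)."
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = "t3.micro"
  subnet_id     = data.terraform_remote_state.network.outputs.private_subnet_id
}
```

With this split, the application team changes only its own configuration, and the networking team can evolve the network freely as long as the output names stay stable.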

Workspace-based decomposition allows Application and Dev teams to quickly iterate/build, while only modifying a small amount of Terraform code. The infrastructure team is tasked with ensuring stability of infrastructure (and sub-teams are responsible for sub-components like network or security), while the application team is only concerned with building a stable application.

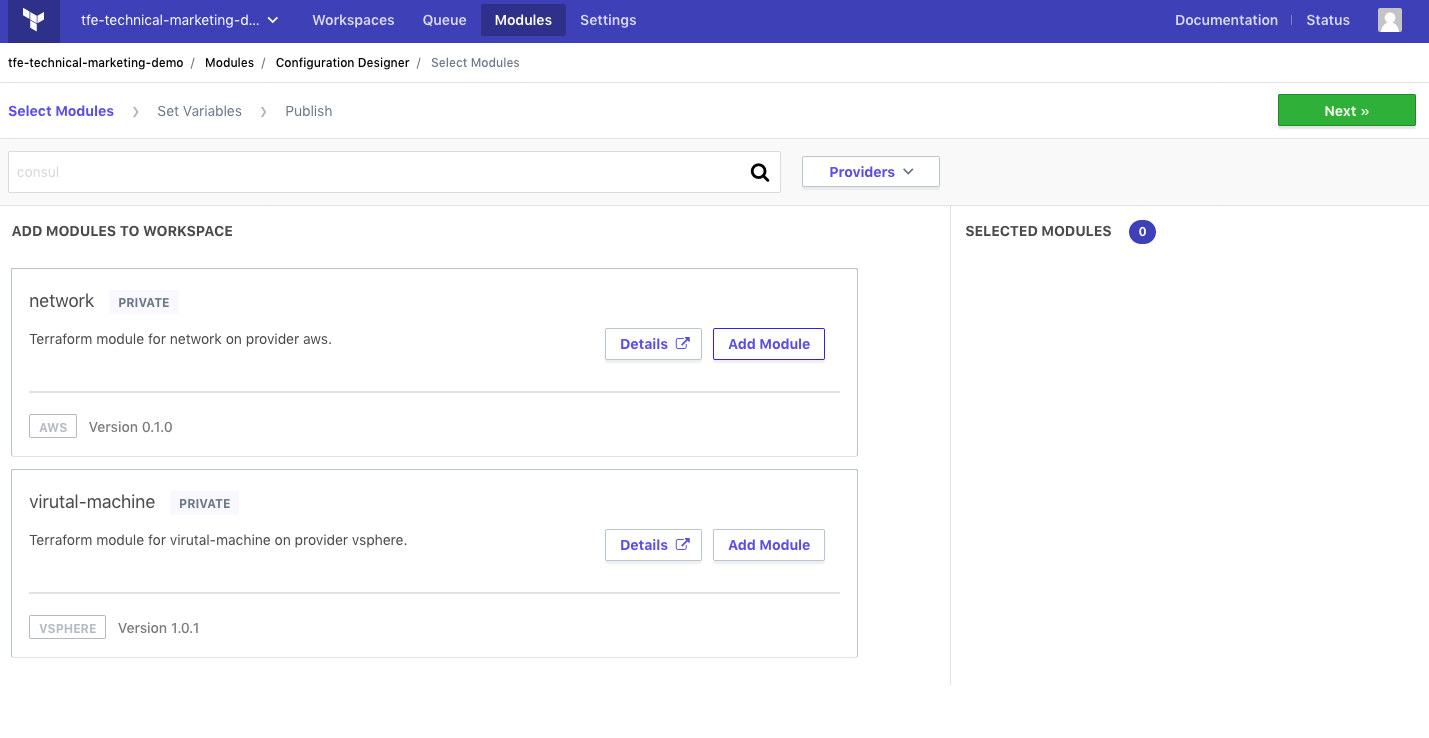

This model also promotes self-service infrastructure provisioning. Terraform Enterprise provides a Private Module Registry with a corresponding Configuration Designer. Using the Terraform Enterprise UI, an app team can use the Configuration Designer to combine and customize modules created by the infrastructure team (with inputs and definitions from the network and security teams). See the Self-Service Infrastructure section below for more.

Building Initial Workspaces

With structure and relationships understood, teams will need to work with the infrastructure team to create their workspaces. In some cases, the infrastructure team will work with component or application teams to write the Infrastructure as Code that defines the needed components. In other cases, the infrastructure team will vet and review Infrastructure as Code configuration files written by the respective component or application team. Visit the Terraform learning center or docs for more resources on writing Infrastructure as Code.

Coordinating Changes and Promotions in Workspaces

After initial workspaces are built out, teams need to coordinate releases and changes: minor releases might be isolated in impact and not require coordination with other workspaces or teams, while major releases might require informing and aligning with affected teams. For example, a minor change might create a new input for a configuration to allow more specific tuning, while providing a default value to avoid a breaking change. A major change might be a refactor that introduces different outputs that result in existing workflows failing if the changes aren’t planned for.
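A minor, non-breaking change of the kind described above might look like the following sketch, in which a module gains a new tuning input with a default. The variable name and default value are hypothetical.

```hcl
# New optional input added in a minor release. Because it has a default,
# existing callers that do not set it continue to plan and apply unchanged.
variable "idle_timeout_seconds" {
  type        = number
  description = "Idle connection timeout, in seconds, for the load balancer."
  default     = 60
}
```

By contrast, removing or renaming an existing output would break any workspace that reads it, which is why such a change belongs in a coordinated major release.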

Promotion of Infrastructure as Code (from development to production, for example) also needs to be considered. Earlier, we recommended building workspaces based on environment and configuration, which means you would have dev, test, and prod workspaces for each infrastructure component. To promote configurations across those workspaces, our recommended practice is to include a variable in the configuration that users set to the current stage of promotion (i.e., dev, test, or prod). This variable can be linked to a map of other inputs that define what each of those environments should look like; this is a declarative approach to capturing these environments. For example, setting the promotion variable to “dev” within a dev workspace would limit the VM size or the number of read replicas provisioned for that workspace, while setting it to “prod” would provide larger VMs and more read replicas.

Promotion in this fashion limits the changes made to configuration files, which prevents errors and increases confidence in promotions to production environments. Some circumstances might rule out this method (e.g., if production environments use hardware load balancers and development environments use software load balancers), but we recommend avoiding code changes during promotion when possible.
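The promotion-variable approach can be sketched as follows. The variable name `environment`, the instance types, the replica counts, and the `ami_id` input are illustrative assumptions; each workspace would set `environment` to its own stage while sharing the same configuration files.

```hcl
variable "environment" {
  type        = string
  description = "Promotion stage for this workspace: dev, test, or prod."

  validation {
    condition     = contains(["dev", "test", "prod"], var.environment)
    error_message = "The environment must be one of: dev, test, prod."
  }
}

variable "ami_id" {
  type        = string
  description = "AMI used for the application server (hypothetical input)."
}

locals {
  # Declarative description of what each environment looks like.
  environment_settings = {
    dev  = { instance_type = "t3.small", read_replica_count = 0 }
    test = { instance_type = "t3.medium", read_replica_count = 1 }
    prod = { instance_type = "m5.large", read_replica_count = 2 }
  }

  # Settings selected for the current workspace.
  settings = local.environment_settings[var.environment]
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = local.settings.instance_type
}

# Exposed so a database configuration could size its replicas accordingly.
output "read_replica_count" {
  value = local.settings.read_replica_count
}
```

Promoting from dev to prod then means applying the same code in the prod workspace, where `environment` is already set to `prod`, rather than editing the configuration.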

Governance Through Modules and Sentinel Policy as Code

Terraform modules provide a way to standardize how components are built across an organization. By codifying components into modifiable, reusable modules, organizations can avoid maintaining multiple variants of the same component, which could result in insecure configurations. For many organizations, modules act as a layer of governance to ensure that common components are always built according to operational best practices, while allowing customization if needed.

Governance can also be enforced programmatically through Sentinel policy as code. The infrastructure team should work with a security team (CIO, CISO, etc.) to ensure workspaces have proper access permissions and to create policy as code for infrastructure. The security team could write and implement their own policy as code, or work with the infrastructure team to develop and enforce policies. For example, many regulations govern how sensitive data is handled and where it is stored. To meet such a requirement, the security team could write a policy that prevents building infrastructure outside specified availability zones. For more on Sentinel, refer to our Sentinel guide or the Sentinel tracks in the Terraform learning center.

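```sentinel
# Assumption: the original snippet did not show where aws_instances comes
# from. This sketch takes it from the tfplan (v1) import, root module only.
import "tfplan"

aws_instances = tfplan.resources.aws_instance

# Allowed availability zones
allowed_zones = [
	"us-east-1a",
	"us-east-1b",
	"us-east-1c",
	"us-east-1d",
	"us-east-1e",
	"us-east-1f",
]

# Rule to restrict availability zones
region_allowed = rule {
	all aws_instances as _, instances {
		all instances as index, r {
			r.applied.availability_zone in allowed_zones
		}
	}
}

# Main rule that requires other rules to be true.
# "else true" lets the policy pass when region_allowed is undefined,
# for example when the plan contains no aws_instance resources.
main = rule {
	(region_allowed) else true
}
```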

This policy restricts AWS instances to the listed us-east-1 availability zones.

Using Modules for Self-Service Infrastructure

Some organizations seek to establish self-service infrastructure for teams building applications. In this model the infrastructure team can develop modules for common infrastructure components that application teams can rapidly customize and deploy.

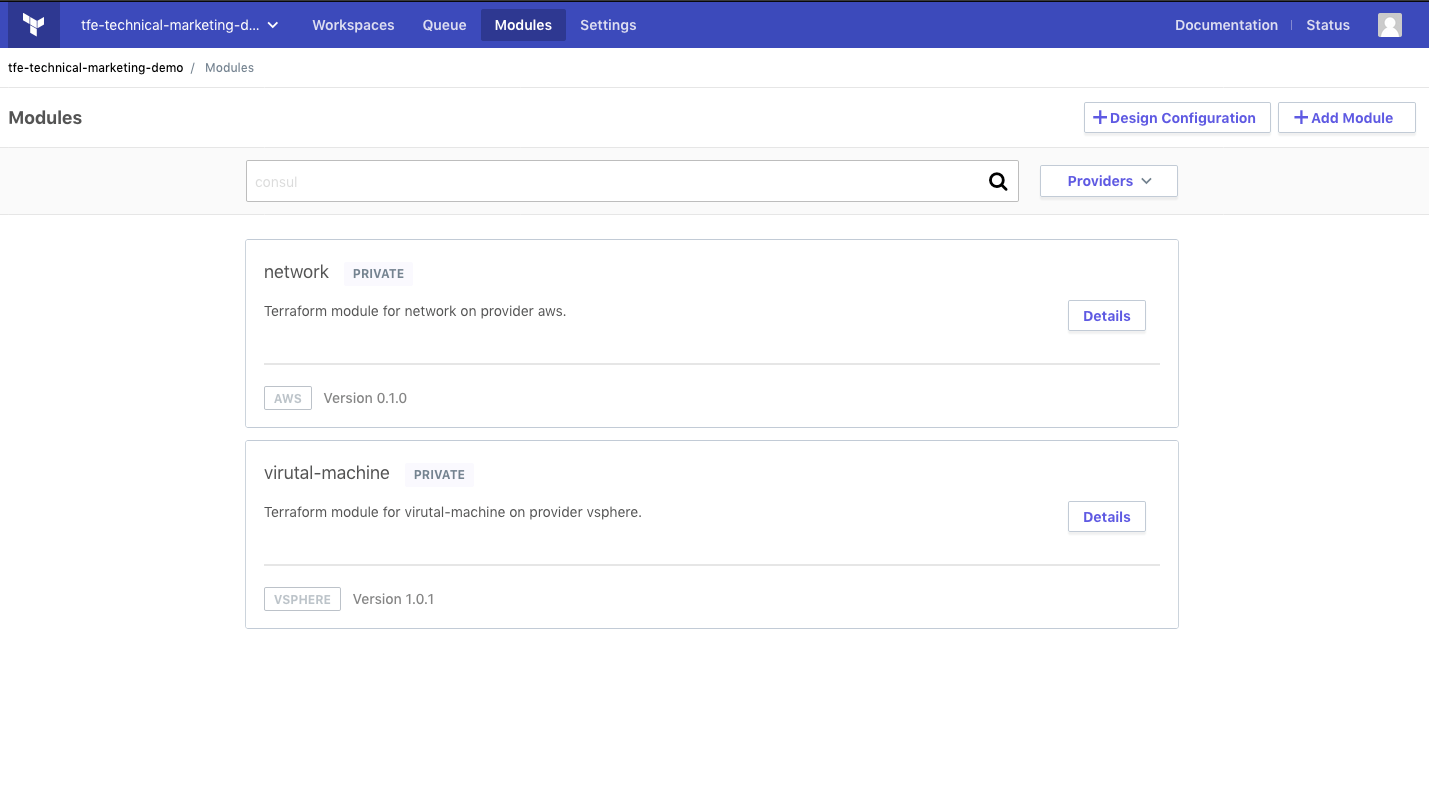

These modules are stored in the Private Module Registry (PMR). Terraform Enterprise users have access to both the public registry filled with community-built modules and a private module registry, where proprietary modules can be securely stored and shared internally.
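Consuming a module from a private registry might look like the sketch below. The hostname, organization, module name, version constraint, and the `cidr_block` input are hypothetical; the actual inputs depend on the module the infrastructure team publishes.

```hcl
module "app_network" {
  # Private registry source format: <HOSTNAME>/<ORGANIZATION>/<NAME>/<PROVIDER>
  source = "tfe.example.com/example-org/network/aws"

  # Pinning a version range lets the infrastructure team release fixes
  # without forcing breaking changes on consumers.
  version = "~> 1.0"

  # Hypothetical module input.
  cidr_block = "10.0.0.0/16"
}
```

Application teams then customize only the exposed inputs, while the module body encodes the organization's standards.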

Many application teams will have knowledge and preferences about the infrastructure they’re deploying applications to; however, not every application team will have this knowledge. Terraform allows these teams to still rapidly deploy infrastructure with the module registry and configuration designer.

Teams with no Infrastructure as Code experience can select the modules they would like to use, customize them in configuration designer, and receive Infrastructure as Code to provision that infrastructure.

Conclusion

As companies continue to build complex applications on top of complex infrastructure, teams tasked with managing that infrastructure and facilitating its provisioning can use Terraform Enterprise to decompose, automate, and secure it. By understanding relationships and dependencies, the infrastructure team empowers application and service teams to work in parallel to develop, iterate, and promote infrastructure components (and their respective applications), while isolating and minimizing the impact of unexpected errors and bugs. With proper workspace organization and policy as code guardrails in place, organizations can work with speed while remaining confident that they’re following operational best practices. Likewise, the infrastructure team can enable the larger organization to provision infrastructure in a self-service fashion through the module registry and configuration designer.