In Consul 1.8, we introduced three new service mesh features: ingress gateways, terminating gateways, and WAN federation via mesh gateways. In this post, we explain how WAN federation can be used to connect multiple Kubernetes clusters across different environments.

Connecting services across multiple Kubernetes clusters raises several challenges. How do we handle overlapping network addresses, NAT, multiple cloud providers, and securing the ingress for each service? The easiest approach would be to send encrypted traffic over the internet without having to worry about it being compromised.

Leveraging mesh gateways in HashiCorp Consul, we are able to securely connect multiple Kubernetes clusters together, even with gateways exposed on the public internet. This provides a service mesh to manage and secure the traffic between services, no matter where they are running. In this post, we will examine how to deploy Consul in two Kubernetes clusters, using WAN Federation to secure communication between services without networking complexities.

»Prerequisites

We start with two Kubernetes clusters already provisioned. The Kubernetes clusters do not have to be in the same region or hosted on the same cloud provider. In this example, we use one Google Kubernetes Engine (GKE) cluster and one Azure Kubernetes Service (AKS) cluster. We have not peered networks or performed any configuration on these clusters.

We need Consul, Helm, and kubectl on our development machine.

Consul provides a Helm chart repository, which we need to add to Helm.

$ helm repo add hashicorp https://helm.releases.hashicorp.com
"hashicorp" has been added to your repositories

We are working from an empty directory that we can write files into to store the configuration files that we create.

»Deploy Consul to GKE

We override the default values for the Consul Helm chart and store them in values-gke.yaml. We set image to consul:1.8.0-beta1 to use the new WAN federation feature. We then set the datacenter name to gke and enable ACLs, TLS, and gossip encryption to secure our cluster. We enable the federation and meshGateway functionality. Finally, we set the connectInject toggle to true to deploy the mutating admission webhook, which injects the Connect sidecar proxy into annotated pods.

global:
  name: consul
  image: consul:1.8.0-beta1
  datacenter: gke
  tls:
    enabled: true
  acls:
    manageSystemACLs: true
    createReplicationToken: true
  federation:
    enabled: true
    createFederationSecret: true
  gossipEncryption:
    secretName: consul-gossip-encryption-key
    secretKey: key
connectInject:
  enabled: true
meshGateway:
  enabled: true

Then, we create the gossip encryption key and deploy the values-gke.yaml configuration with Helm. The helm install command can take up to 10 minutes depending on your cloud provider, due to provisioning persistent volumes and attaching those to the host.

$ kubectl config use-context gke
Switched to context "gke".

$ export TOKEN=$(consul keygen)
$ kubectl create secret generic consul-gossip-encryption-key \
    --from-literal=key="$TOKEN"
secret/consul-gossip-encryption-key created

$ helm install consul -f values-gke.yaml hashicorp/consul --wait
NAME: consul
LAST DEPLOYED: Tue May 12 12:19:48 2020
NAMESPACE: default
STATUS: deployed
REVISION: 1
NOTES:
Thank you for installing HashiCorp Consul!
# omitted for clarity

$ kubectl get pods
NAME                                                         READY   STATUS    RESTARTS   AGE
consul-79nw8                                                 1/1     Running   0          4m42s
consul-98pdb                                                 1/1     Running   0          4m43s
consul-connect-injector-webhook-deployment-d9467d674-gjnbz   1/1     Running   0          4m42s
consul-hzc8p                                                 1/1     Running   0          4m42s
consul-mesh-gateway-7474d78cbc-22x9l                         2/2     Running   0          4m42s
consul-mesh-gateway-7474d78cbc-mzpwd                         2/2     Running   0          4m42s
consul-server-0                                              1/1     Running   5          4m42s
consul-server-1                                              1/1     Running   5          4m42s
consul-server-2                                              1/1     Running   5          4m42s
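Before storing the gossip key in a Kubernetes secret, it can be worth confirming that it is well formed. The following is a minimal sketch, not part of Consul: the helper name gossip_key_ok is hypothetical, and it assumes the key is a base64 string that decodes to a valid AES key length of 16, 24, or 32 bytes.

```shell
# Hypothetical helper (not shipped with Consul): succeed only if the argument
# is base64 text that decodes to 16, 24, or 32 bytes.
gossip_key_ok() {
  # Decode the key and count the resulting bytes; a failed decode yields a
  # count that matches none of the accepted sizes.
  bytes=$(printf '%s' "$1" | base64 --decode 2>/dev/null | wc -c)
  case $((bytes)) in
    16|24|32) return 0 ;;
    *)        return 1 ;;
  esac
}

# Example use with the TOKEN variable from the session above:
#   gossip_key_ok "$TOKEN" || echo "unexpected gossip key length" >&2
```

Running the check before kubectl create secret catches an empty or truncated TOKEN early, rather than during server startup.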

Consul now runs in the GKE cluster. Next, we export the federation secret so that we can deploy it to our AKS cluster.

$ kubectl get secret consul-federation -o yaml > consul-federation-secret.yaml
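The exported file should contain every key that the AKS configuration later reads from the consul-federation secret (caCert, caKey, replicationToken, gossipEncryptionKey, and serverConfigJSON). As a rough sketch, the hypothetical helper below lists the key names under the data map of the exported manifest. It assumes the two-space indentation that kubectl normally emits with -o yaml.

```shell
# Hypothetical helper: print the key names found under the top-level `data:`
# map of a Secret manifest exported with `kubectl get secret ... -o yaml`.
secret_data_keys() {
  awk '
    /^data:/             { in_data = 1; next }            # entering the data map
    in_data && /^[^ ]/   { in_data = 0 }                  # next top-level key ends it
    in_data && /^  [^ ]/ { sub(/:.*/, "", $1); print $1 } # strip ":" and print the name
  ' "$1"
}

# Example use:
#   secret_data_keys consul-federation-secret.yaml
```

Only the key names are printed, so the output is safe to paste into a ticket or chat without leaking the secret values.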

In a separate shell that we leave open in the background, we port-forward to the Consul UI.

$ kubectl port-forward svc/consul-ui 8501:443
Forwarding from 127.0.0.1:8501 -> 8501
Forwarding from [::1]:8501 -> 8501

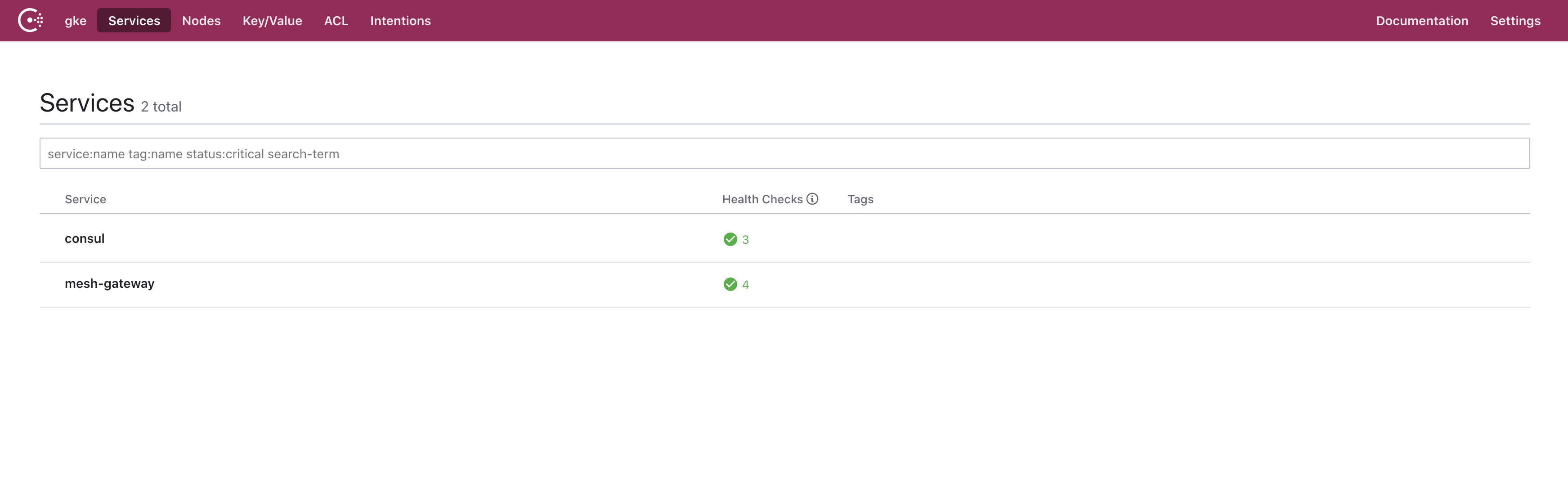

We now open the browser and visit https://localhost:8501 to view the cluster.

»Deploy Consul to AKS

Next, we configure our aks cluster and save the configuration in values-aks.yaml.

In addition to the options we specified for our gke cluster, we configure aks to use the consul-federation secret that already has the secrets generated by the gke cluster.

global:
  name: consul
  image: consul:1.8.0-beta1
  datacenter: aks
  tls:
    enabled: true
    caCert:
      secretName: consul-federation
      secretKey: caCert
    caKey:
      secretName: consul-federation
      secretKey: caKey
  acls:
    manageSystemACLs: true
    replicationToken:
      secretName: consul-federation
      secretKey: replicationToken
  federation:
    enabled: true
  gossipEncryption:
    secretName: consul-federation
    secretKey: gossipEncryptionKey
connectInject:
  enabled: true
meshGateway:
  enabled: true
server:
  extraVolumes:
    - type: secret
      name: consul-federation
      items:
        - key: serverConfigJSON
          path: config.json
      load: true

We deploy Consul to aks much as we did to gke, with one extra step: applying the federation secret we exported.

$ kubectl config use-context aks
Switched to context "aks".

$ kubectl apply -f consul-federation-secret.yaml
secret/consul-federation created

$ helm install consul -f values-aks.yaml hashicorp/consul --wait
NAME: consul
LAST DEPLOYED: Tue May 12 12:41:07 2020
NAMESPACE: default
STATUS: deployed
REVISION: 1
NOTES:
Thank you for installing HashiCorp Consul!
# omitted for clarity

$ kubectl get pods
NAME                                                         READY   STATUS    RESTARTS   AGE
consul-4w7zc                                                 1/1     Running   0          3m56s
consul-5klsj                                                 1/1     Running   0          3m56s
consul-connect-injector-webhook-deployment-8db6bc89b-978dr   1/1     Running   0          3m56s
consul-fwpth                                                 1/1     Running   0          3m56s
consul-mesh-gateway-67fddc99ff-8jfdb                         2/2     Running   0          3m56s
consul-mesh-gateway-67fddc99ff-xfdqh                         2/2     Running   0          3m56s
consul-server-0                                              1/1     Running   0          3m56s
consul-server-1                                              1/1     Running   0          3m56s
consul-server-2                                              1/1     Running   0          3m56s

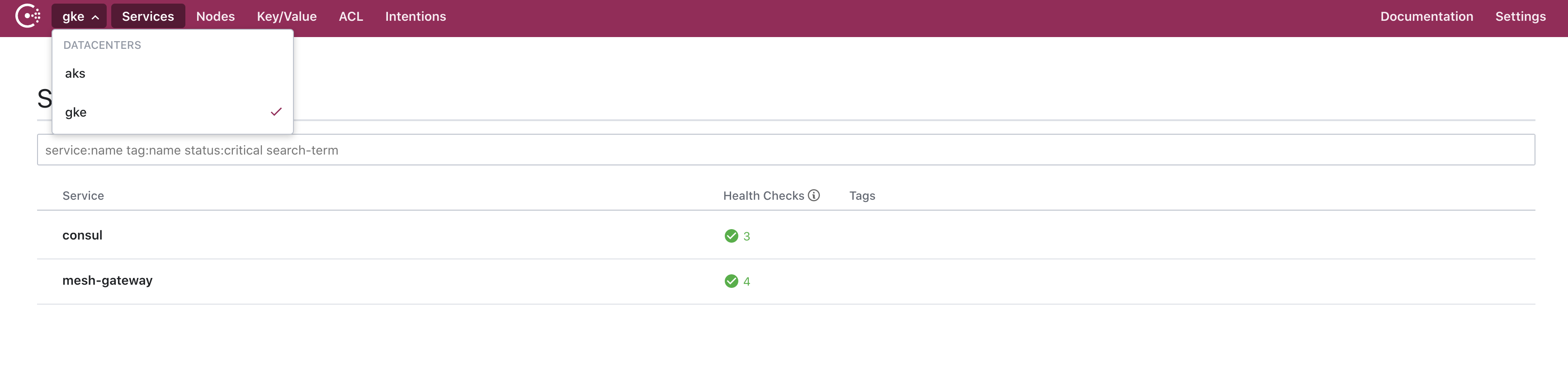

We now have Consul deployed and federated in both the gke and aks clusters. When we examine the Consul UI, we can see both datacenters in the dropdown.

»Cross-Cluster Communication between Services

With the two Kubernetes clusters federated and mesh gateways running in each, we can deploy a backend service to the aks cluster and a frontend service to the gke cluster.

We create a pod definition for the backend counting service named counting.yaml.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: counting
---
apiVersion: v1
kind: Pod
metadata:
  name: counting
  annotations:
    "consul.hashicorp.com/connect-inject": "true"
spec:
  containers:
    - name: counting
      image: hashicorp/counting-service:0.0.2
      ports:
        - containerPort: 9001
          name: http
  serviceAccountName: counting

The consul.hashicorp.com/connect-inject annotation tells Consul that we want to automatically add this pod as a Consul service. By registering the pod as a Consul service, the mesh gateway can identify and resolve the service without registering it as a Kubernetes service.

We deploy this pod to the aks cluster.

$ kubectl create -f counting.yaml
serviceaccount/counting created
pod/counting created

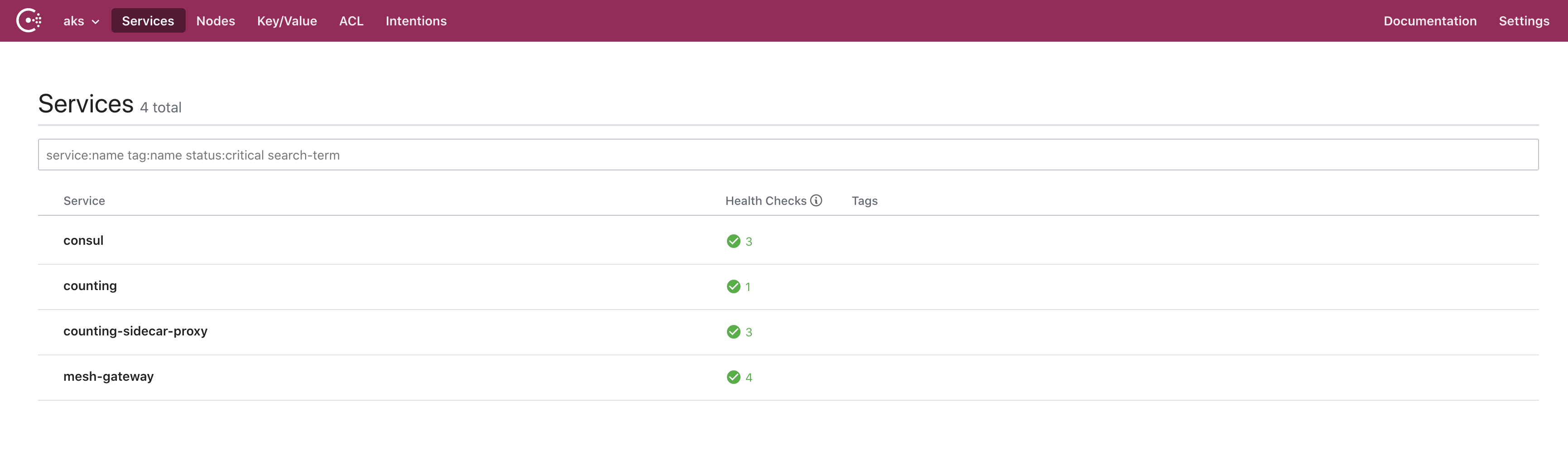

The Consul UI demonstrates that the new counting service is registered and available.

Next, we create the pod definition for the dashboard service and its load balancer in dashboard.yaml.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard
---
apiVersion: v1
kind: Pod
metadata:
  name: dashboard
  labels:
    app: "dashboard"
  annotations:
    "consul.hashicorp.com/connect-inject": "true"
    "consul.hashicorp.com/connect-service-upstreams": "counting:9001:aks"
spec:
  containers:
    - name: dashboard
      image: hashicorp/dashboard-service:0.0.4
      ports:
        - containerPort: 9002
          name: http
      env:
        - name: COUNTING_SERVICE_URL
          value: "http://localhost:9001"
  serviceAccountName: dashboard
---
apiVersion: "v1"
kind: "Service"
metadata:
  name: "dashboard-service-load-balancer"
  namespace: "default"
  labels:
    app: "dashboard"
spec:
  ports:
    - protocol: "TCP"
      port: 80
      targetPort: 9002
  selector:
    app: "dashboard"
  type: "LoadBalancer"

Once again, we add the consul.hashicorp.com/connect-inject annotation. The dashboard service connects to the counting service by configuring it as an upstream endpoint with the consul.hashicorp.com/connect-service-upstreams annotation. The value is formatted as "[service-name]:[port]:[optional datacenter]". Here, counting:9001:aks exposes the counting service from the aks datacenter on local port 9001, which is why COUNTING_SERVICE_URL points at http://localhost:9001. We can add additional annotations for other Consul Connect configurations.
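To make the upstream format concrete, here is a small sketch that splits a single annotation value into its three parts. The helper name parse_upstream and the "<local>" placeholder for an omitted datacenter are illustrative choices, not anything Consul defines.

```shell
# Hypothetical helper: split one "[service-name]:[port]:[optional datacenter]"
# value into its parts. The body runs in a subshell so the IFS change stays local.
parse_upstream() (
  IFS=:
  set -- $1   # unquoted on purpose: split the value on ":"
  echo "service=$1 port=$2 datacenter=${3:-<local>}"
)

parse_upstream "counting:9001:aks"   # service=counting port=9001 datacenter=aks
parse_upstream "counting:9001"       # service=counting port=9001 datacenter=<local>
```

When the third field is left out, the upstream is resolved in the pod's own datacenter, which is why the dashboard in gke has to name aks explicitly.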

We switch to the gke cluster and deploy the dashboard frontend into the default namespace.

$ kubectl config use-context gke
Switched to context "gke".

$ kubectl create -f dashboard.yaml
serviceaccount/dashboard created
pod/dashboard created
service/dashboard-service-load-balancer created

In the Consul UI, we can see that the dashboard service and mesh-gateway pods are registered and running.

We can check if our frontend service successfully communicates with the backend by accessing its dashboard. We retrieve the public IP address of the load balancer attached to the dashboard service.

$ kubectl get svc
NAME                              TYPE           CLUSTER-IP    EXTERNAL-IP     PORT(S)                                                                   AGE
consul-connect-injector-svc       ClusterIP      10.0.27.19    <none>          443/TCP                                                                   12m15m
consul-dns                        ClusterIP      10.0.31.172   <none>          53/TCP,53/UDP                                                             12m15m
consul-mesh-gateway               LoadBalancer   10.0.20.181   34.67.131.248   443:32101/TCP                                                             12m15m
consul-server                     ClusterIP      None          <none>          8501/TCP,8301/TCP,8301/UDP,8302/TCP,8302/UDP,8300/TCP,8600/TCP,8600/UDP   12m15m
consul-ui                         ClusterIP      10.0.29.208   <none>          443/TCP                                                                   12m15m
dashboard-service-load-balancer   LoadBalancer   10.0.26.155   34.68.5.220     80:32608/TCP                                                              2m14s
kubernetes                        ClusterIP      10.0.16.1     <none>          443/TCP                                                                   102m
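Rather than reading the address off the table by eye, the external IP can be pulled out with a short filter. This is only a sketch: external_ip_of is a hypothetical helper that reads the default kubectl get svc table on stdin and prints the fourth column (EXTERNAL-IP) for the named service.

```shell
# Hypothetical helper: read `kubectl get svc` output on stdin and print the
# EXTERNAL-IP column (4th field) of the row whose NAME matches the argument.
external_ip_of() {
  awk -v name="$1" '$1 == name { print $4 }'
}

# Example use:
#   kubectl get svc | external_ip_of dashboard-service-load-balancer
```

While the cloud provider is still provisioning the load balancer, kubectl shows <pending> in this column, so the helper would print that instead of an address. Querying the service with -o jsonpath='{.status.loadBalancer.ingress[0].ip}' is another option that avoids parsing the table.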

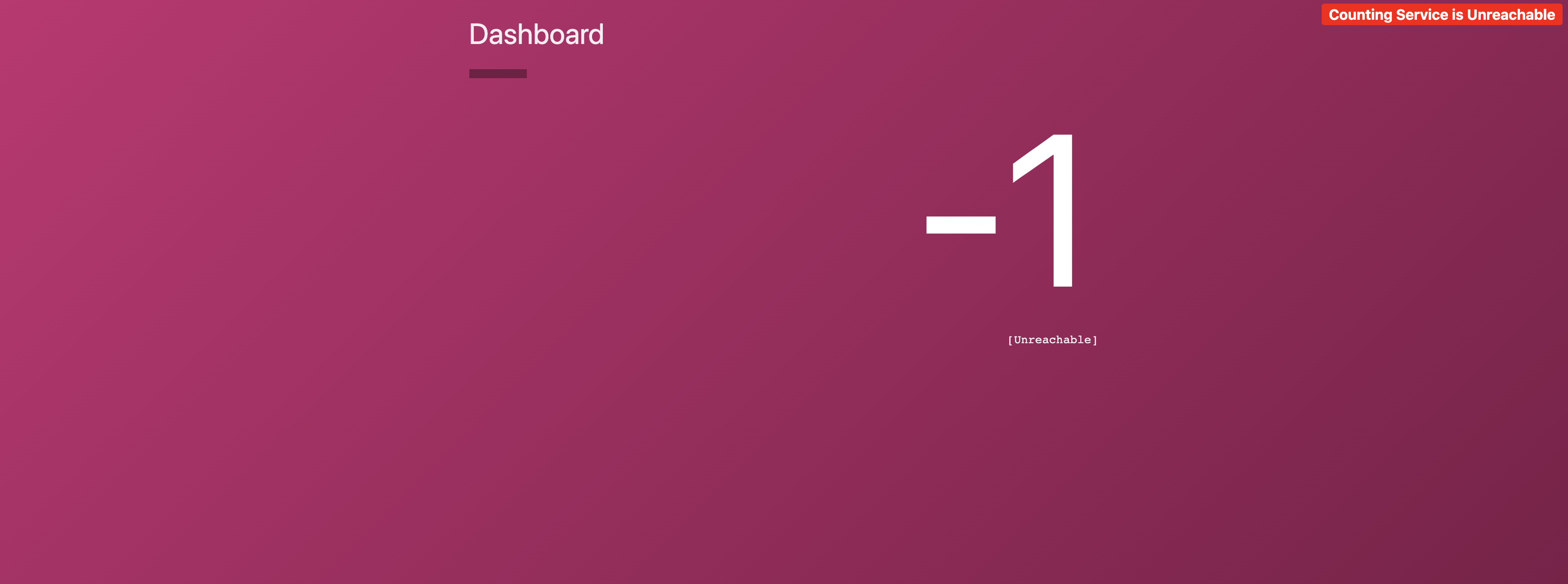

When we access http://34.68.5.220, we notice the dashboard service is failing to connect to the counting service. Because we enabled ACLs in our cluster, Consul denies service-to-service communication by default until an intention allows it.

»Allow Service Communication

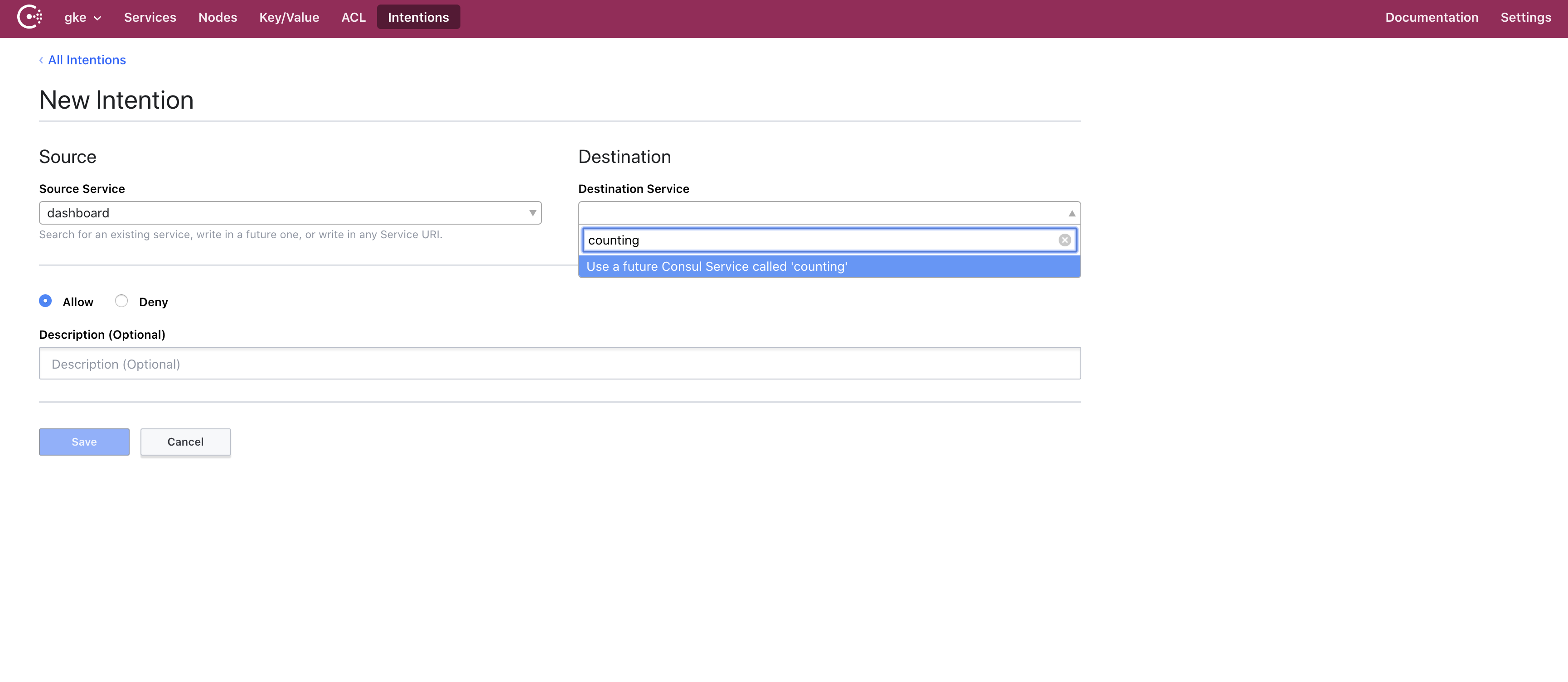

To allow traffic, we need to create an intention between the dashboard service and the counting service.

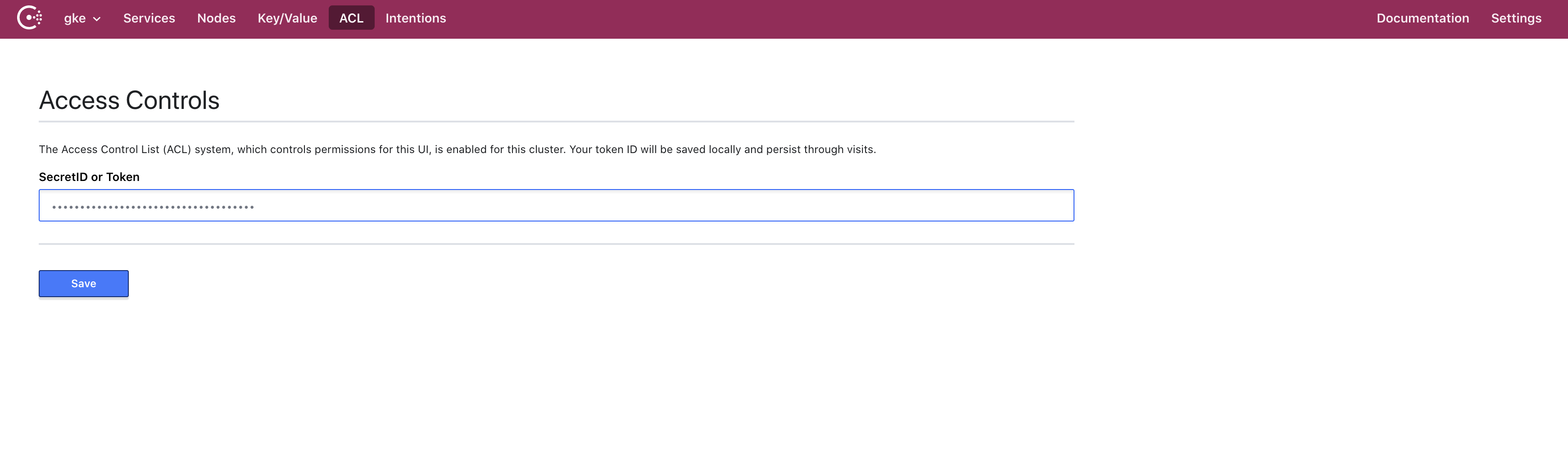

First, we need a token to access the Consul ACL UI. A bootstrap token was generated when we created our cluster, so we can use that token.

$ kubectl get secret consul-bootstrap-acl-token --template='{{.data.token}}' | base64 --decode
f57615c0-bffd-d286-6658-32d64f815b2a

Note: If this command fails, ensure that you are on the primary cluster, the gke cluster in this case.

Now in the UI we click on ACL and paste this token in.

Now we click on Intentions and then Create. We specify dashboard as the source and counting as the destination. The gke datacenter doesn't know about the counting service yet, so we type its name in manually.

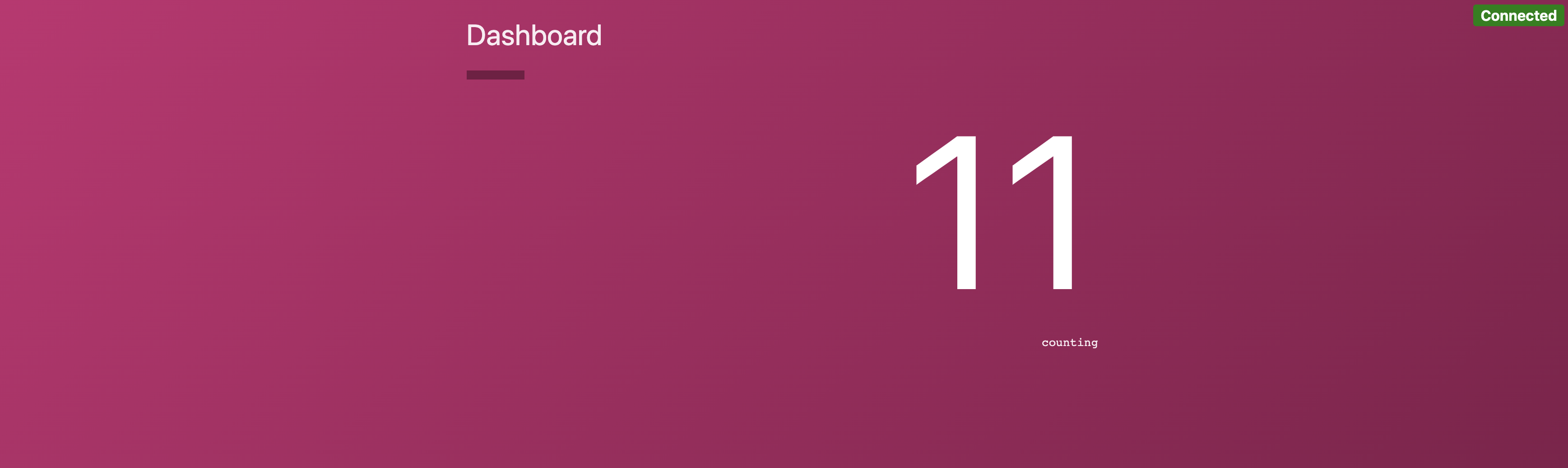
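The same intention can also be created from the command line with consul intention create. The sketch below is an assumption-laden alternative to the UI steps, not the method used in this post: the helper name create_allow_intention and the RUN switch are hypothetical. It also assumes the port-forward to https://localhost:8501 is still open and that CONSUL_HTTP_TOKEN is already exported with the bootstrap token. Certificate verification is skipped for brevity, which is only reasonable for a local demo.

```shell
# Hypothetical helper: print the CLI equivalent of the UI step, or run it when RUN=1.
# Assumes CONSUL_HTTP_TOKEN is already exported with the bootstrap token.
create_allow_intention() {
  src="$1"
  dst="$2"
  set -- consul intention create -allow "$src" "$dst"
  if [ "${RUN:-0}" = "1" ]; then
    # Point the CLI at the port-forwarded UI/API endpoint (assumed address).
    CONSUL_HTTP_ADDR="${CONSUL_HTTP_ADDR:-https://localhost:8501}" \
    CONSUL_HTTP_SSL_VERIFY="${CONSUL_HTTP_SSL_VERIFY:-false}" \
      "$@"
  else
    echo "$*"   # dry run: show the command without contacting Consul
  fi
}

# Example use:
#   create_allow_intention dashboard counting          # dry run
#   RUN=1 create_allow_intention dashboard counting    # actually create it
```

The dry-run default makes it easy to review the command before it changes anything in the primary datacenter.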

Now we go back to our browser window for the dashboard service and we see that the dashboard service is communicating with the counting service.

»Conclusion

In this post, we covered how to deploy Consul via Helm into multiple Kubernetes clusters using the new WAN federation features available in Consul 1.8. We then connected two services between clusters via mesh gateways and created an intention to allow the services to communicate with one another. This was all completed easily and securely despite the clusters being run on totally different clouds and connected across the public internet.

To learn more, refer to the official documentation for Consul Kubernetes, Consul WAN Federation, and Connect mesh gateways.

Post questions about this blog to our community forum!