Nomad is a powerful and flexible scheduler designed for long-running services and batch jobs. Through a wide range of drivers, Nomad can schedule container-based workloads, raw binaries, Java applications, and more. Nomad is easy to operate and scale, and integrates seamlessly with HashiCorp Consul for service-to-service communication and HashiCorp Vault for secrets management.

Nomad provides developers with self-service infrastructure. Nomad jobs are described in a high-level declarative syntax that can be version controlled and promotes infrastructure as code. Once a job is submitted, Nomad is responsible for deploying the service and keeping it available. One benefit of running Nomad is increased reliability and resiliency of your computing infrastructure.

Welcome to our series on Building Resilient Infrastructure with Nomad, where we explore how Nomad handles unexpected failures, outages, and routine maintenance of cluster infrastructure, often without requiring operator intervention.

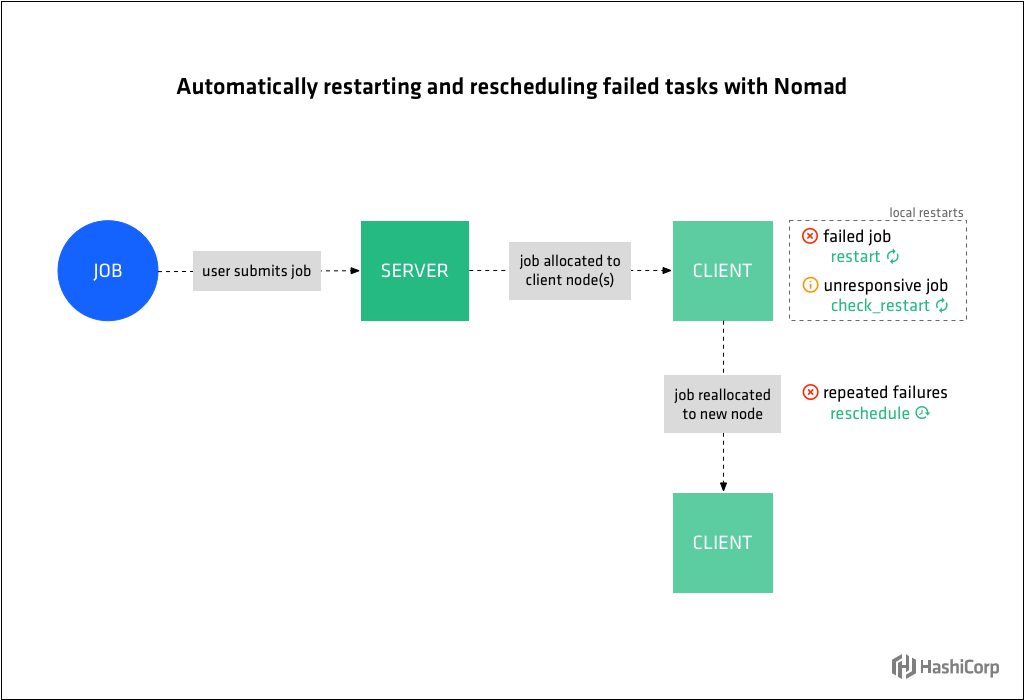

In this first post, we'll look at how Nomad automates the restart of failed and unresponsive tasks, as well as the rescheduling of repeatedly failing tasks to other nodes.

»Tasks and job declaration

A Nomad task is a command, service, application, or other workload executed on Nomad client nodes by their driver. Tasks can be short-lived batch jobs or long-running services such as a web application, database server, or API.

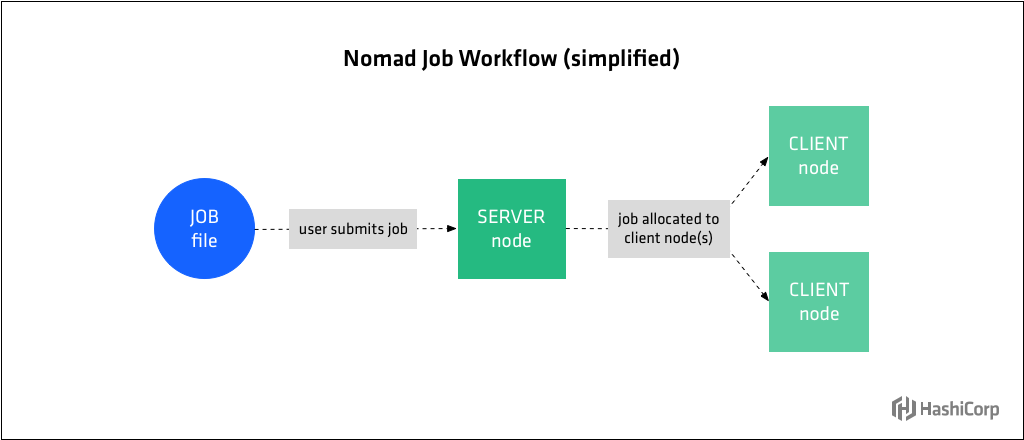

Tasks are defined in a declarative jobspec written in HCL. Job files are submitted to the Nomad server, which determines where and how the tasks defined in the job file should be allocated to client nodes. Another way to conceptualize this: the jobspec represents the desired state of the workload, and the Nomad server creates and maintains the actual state.

The hierarchy for a job is: job → group → task. Each job file contains a single job; a job may have multiple groups, and each group may have multiple tasks. A group contains a set of tasks that are co-located on the same node.
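To make the job → group → task nesting concrete, here is a minimal sketch of a job with two groups, one of which holds two tasks. The job, group, and task names and the `example/...` images are hypothetical and chosen only for illustration.

```hcl
# Hypothetical skeleton illustrating job -> group -> task nesting.
job "web-stack" {
  datacenters = ["dc1"]

  # Both tasks in this group are placed together on the same node.
  group "frontend" {
    task "app" {
      driver = "docker"

      config {
        image = "example/app:1.0" # hypothetical image
      }
    }

    task "log-shipper" {
      driver = "docker"

      config {
        image = "example/log-shipper:1.0" # hypothetical image
      }
    }
  }

  # A separate group may be placed on a different node than "frontend".
  group "cache" {
    task "redis" {
      driver = "docker"

      config {
        image = "redis:3.2"
      }
    }
  }
}
```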

Here is a simplified job file defining a Redis workload:

job "example" {
  datacenters = ["dc1"]
  type = "service"

  constraint {
    attribute = "${attr.kernel.name}"
    value = "linux"
  }

  group "cache" {
    count = 1

    task "redis" {
      driver = "docker"

      config {
        image = "redis:3.2"
      }

      resources {
        cpu = 500 # 500 MHz
        memory = 256 # 256MB
      }
    }
  }
}

Job authors can define constraints as well as resources for their workloads. Constraints limit the placement of workloads on nodes by attributes such as kernel type and version. Resource requirements include the memory, network, CPU, etc. required for the task to run.
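As a sketch of how a version-based constraint might sit alongside resource requirements, the fragment below restricts placement to Linux nodes whose kernel is at least a given version. It assumes the `version` constraint operator and the `attr.kernel.version` node attribute as described in Nomad's constraint documentation; the version threshold itself is an arbitrary, illustrative value.

```hcl
task "redis" {
  driver = "docker"

  # Only place this task on Linux nodes...
  constraint {
    attribute = "${attr.kernel.name}"
    value     = "linux"
  }

  # ...whose kernel version is at least 3.19 (illustrative threshold).
  constraint {
    attribute = "${attr.kernel.version}"
    operator  = "version"
    value     = ">= 3.19"
  }

  config {
    image = "redis:3.2"
  }

  resources {
    cpu    = 500 # MHz
    memory = 256 # MB
  }
}
```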

There are three types of jobs: system, service, and batch. The type determines which scheduler Nomad uses for the tasks in the job. The service scheduler is designed for long-lived services that should never go down. Batch jobs are much less sensitive to short-term performance fluctuations and are short-lived, finishing in a few minutes to a few days. The system scheduler is used to register jobs that should run on all clients that meet the job's constraints. It is also invoked when clients join the cluster or transition into the ready state.
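The job type is selected with the `type` attribute. As a minimal sketch, a batch job might look like the following; the job name and command path are hypothetical, and the `exec` driver is assumed to be available on the client nodes.

```hcl
# Hypothetical batch job: runs to completion rather than staying up.
job "nightly-report" {
  datacenters = ["dc1"]
  type        = "batch" # other values: "service", "system"

  group "report" {
    task "generate" {
      driver = "exec"

      config {
        command = "/usr/local/bin/generate-report" # hypothetical binary
      }
    }
  }
}
```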

Nomad makes task workloads resilient by allowing job authors to specify strategies for automatically restarting failed and unresponsive tasks as well as automatically rescheduling repeatedly failing tasks to other nodes.

»Restarting failed tasks

Task failure can occur when a task fails to complete successfully, as in the case of a batch job, or when a service fails due to a fatal error or runs out of memory.

Nomad will restart failed tasks on the same node according to the directives in the restart stanza of the job file. Operators specify the number of restarts allowed with attempts, how long Nomad should wait before restarting the task with delay, and the time window in which those restart attempts are counted with interval. Use mode (the failure mode) to specify what Nomad should do if the job is still not running after all restart attempts within the given interval have been exhausted.

The default failure mode is fail, which tells Nomad not to attempt to restart the job. This is the recommended value for non-idempotent jobs, which are not likely to succeed after a few failures. The other option is delay, which tells Nomad to wait the amount of time specified by interval before restarting the job.

The following restart stanza tells Nomad to attempt a maximum of 2 restarts within 30 minutes, delaying 15s between each restart and not to attempt any more restarts after those are exhausted.

group "cache" {
  ...

  restart {
    attempts = 2
    interval = "30m"
    delay = "15s"
    mode = "fail"
  }

  task "redis" {
    ...
  }
}
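For comparison, here is a sketch of the same group using the delay failure mode instead of fail. The numeric values are illustrative, not recommendations.

```hcl
group "cache" {
  restart {
    attempts = 3       # allow up to 3 restarts...
    interval = "10m"   # ...counted within a 10-minute window
    delay    = "30s"   # wait 30 seconds before each restart
    mode     = "delay" # once attempts are exhausted, wait for the interval, then try again
  }

  task "redis" {
    driver = "docker"

    config {
      image = "redis:3.2"
    }
  }
}
```

With mode set to delay, the task is never marked as failed by the restart policy alone; Nomad keeps retrying locally after each interval.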

This local restart behavior is designed to make tasks resilient against bugs, memory leaks, and other ephemeral issues. This is similar to using a process supervisor such as systemd, upstart, or runit outside of Nomad.

»Restarting unresponsive tasks

Another common scenario is needing to restart a task that is not yet failing but has become unresponsive or otherwise unhealthy.

Nomad will restart unresponsive tasks according to the directives in the check_restart stanza. This works in conjunction with Consul health checks. Nomad will restart tasks when a health check has failed limit times. A value of 1 causes a restart on the first failure. The default, 0, disables health check based restarts.

Failures must be consecutive. A single passing check will reset the count, so services alternating between a passing and failing state may not be restarted. Use grace to specify a waiting period to resume health checking after a restart. Set ignore_warnings = true to have Nomad treat a warning status like a passing one and not trigger a restart.

The following check_restart policy tells Nomad to restart the Redis task after its health check has failed 3 consecutive times, to wait 90 seconds after restarting the task to resume health checking, and to restart upon a warning status (in addition to failure).

task "redis" {
  ...

  service {
    check_restart {
      limit = 3
      grace = "90s"
      ignore_warnings = false
    }
  }
}
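Because check_restart acts on Consul health checks, the service also needs at least one check defined. The fuller sketch below shows one way this might look, with check_restart nested inside a specific check. The service name, check name, and the `db` port label are hypothetical; the port is assumed to be declared elsewhere in the task's network resources.

```hcl
task "redis" {
  driver = "docker"

  config {
    image = "redis:3.2"
  }

  service {
    name = "redis-cache" # hypothetical service name registered in Consul
    port = "db"          # assumes a port labeled "db" is declared for this task

    check {
      name     = "redis-alive" # hypothetical check name
      type     = "tcp"
      interval = "10s"
      timeout  = "2s"

      # Restart the task after 3 consecutive failed checks,
      # and wait 90s after a restart before counting failures again.
      check_restart {
        limit           = 3
        grace           = "90s"
        ignore_warnings = false
      }
    }
  }
}
```

With a 10-second check interval and a limit of 3, an unresponsive task would be restarted after roughly 30 seconds of consecutive failures once the grace period has passed.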

In a traditional data center environment, restarting failed tasks is often handled by a process supervisor, which needs to be configured by an operator. Automatically detecting and restarting unhealthy tasks is more complex and requires either custom scripts to integrate monitoring systems or operator intervention. With Nomad, both happen automatically with no operator intervention required.

»Rescheduling failed tasks

Tasks that are not running successfully after the specified number of restarts may be failing due to an issue with the node they are running on such as failed hardware, kernel deadlocks, or other unrecoverable errors.

Using the reschedule stanza, operators tell Nomad under what circumstances to reschedule failing jobs to another node.

Nomad prefers to reschedule to a node not previously used for that task. As with the restart stanza, you can specify the number of reschedule attempts with attempts, how long Nomad should wait between reschedule attempts with delay, and the time window in which those attempts are counted with interval.

Additionally, use delay_function to specify how the delay for subsequent reschedule attempts is calculated after the initial delay. The options are constant, exponential, and fibonacci. For service jobs, fibonacci scheduling has the nice property of fast rescheduling initially to recover from short-lived outages while slowing down to avoid churn during longer outages. When using the exponential or fibonacci delay functions, use max_delay to set an upper bound beyond which the delay will not increase. Set unlimited to true to allow an unlimited number of reschedule attempts, or to false to limit them to attempts within interval.

To disable rescheduling completely, set attempts = 0 and unlimited = false.
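A minimal fragment that disables rescheduling as just described (the group's tasks are omitted here for brevity):

```hcl
group "cache" {
  # Never move failed allocations of this group to another node.
  reschedule {
    attempts  = 0
    unlimited = false
  }
}
```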

The following reschedule stanza tells Nomad to try rescheduling the task group an unlimited number of times and to increase the delay between subsequent attempts exponentially, with a starting delay of 30 seconds up to a maximum of 1 hour.

group "cache" {
  ...

  reschedule {
    delay = "30s"
    delay_function = "exponential"
    max_delay = "1h"
    unlimited = true
  }
}
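To see how the delay functions differ, here is a sketch of the same stanza using fibonacci, with the resulting delays worked out in comments. The sequences assume that exponential doubles the previous delay and that fibonacci adds the two previous delays, each capped at max_delay; treat them as an illustration of the growth pattern rather than exact timings.

```hcl
group "cache" {
  reschedule {
    delay          = "30s"
    delay_function = "fibonacci"
    max_delay      = "1h"
    unlimited      = true
  }
}

# Approximate delay before each successive reschedule attempt:
#   constant:    30s, 30s, 30s, 30s, 30s, ...
#   exponential: 30s, 1m, 2m, 4m, 8m, 16m, 32m, then 1h (capped)
#   fibonacci:   30s, 30s, 1m, 1m30s, 2m30s, 4m, 6m30s, ... up to 1h
```

Fibonacci grows more slowly than exponential at first, which is why it recovers quickly from short outages while still backing off during long ones.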

The reschedule stanza does not apply to system jobs because they run on every node.

As of Nomad version 0.8.4, the reschedule stanza applies during deployments.

In a traditional data center, node failures would be detected by a monitoring system and trigger an alert for operators. Then operators would need to manually intervene either to recover the failed node, or migrate the workload to another node. With the reschedule feature, operators can plan for the most common failure scenarios and Nomad will respond automatically, avoiding the need for manual intervention. Nomad applies sensible defaults so most users get local restarts and rescheduling without having to think about the various restart parameters.

»Summary

In this first post in our series Building Resilient Infrastructure with Nomad, we covered how Nomad provides resiliency for computing infrastructure through automated restarting and rescheduling of failed and unresponsive tasks.

Operators specify Nomad’s local restart strategy for failed tasks with the restart stanza. When used in conjunction with Consul and the check_restart stanza, Nomad will locally restart unresponsive tasks according to the restart parameters. Operators specify Nomad’s strategy for rescheduling failed tasks with the reschedule stanza.
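Putting the three stanzas together, a job that uses all of the mechanisms covered in this post might look like the sketch below. It reuses the values from the earlier examples; the service name, check name, and `db` port label are hypothetical, and the port is assumed to be declared in the task's network resources.

```hcl
job "example" {
  datacenters = ["dc1"]
  type        = "service"

  group "cache" {
    count = 1

    # Local restarts on the same node.
    restart {
      attempts = 2
      interval = "30m"
      delay    = "15s"
      mode     = "fail"
    }

    # Once local restarts are exhausted, move to another node.
    reschedule {
      delay          = "30s"
      delay_function = "exponential"
      max_delay      = "1h"
      unlimited      = true
    }

    task "redis" {
      driver = "docker"

      config {
        image = "redis:3.2"
      }

      service {
        name = "redis-cache" # hypothetical service name
        port = "db"          # assumes a port labeled "db" is declared for this task

        check {
          name     = "redis-alive" # hypothetical check name
          type     = "tcp"
          interval = "10s"
          timeout  = "2s"

          # Restart the task when it is running but unresponsive.
          check_restart {
            limit           = 3
            grace           = "90s"
            ignore_warnings = false
          }
        }
      }

      resources {
        cpu    = 500 # MHz
        memory = 256 # MB
      }
    }
  }
}
```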

In the next post we’ll look at how the Nomad client enables fast and accurate scheduling as well as self-healing through driver health checks and liveness heartbeats.