At HashiConf 2017, we announced Sentinel, a framework for treating policy as code. Sentinel is built into the HashiCorp Enterprise products so that automation guardrails, business requirements, legal compliance, and other policies can be actively enforced by running systems in real time.

Using HashiCorp Terraform Enterprise and the Kubernetes provider, we can use Sentinel to apply fine-grained policy enforcement to Kubernetes resources before any changes are applied to the cluster. This blog post explores using Sentinel in Terraform Enterprise to manage Kubernetes clusters and enforce Kubernetes service types and namespace naming conventions.

To learn more about how to use Sentinel in Terraform Enterprise, please read our previous blog post, "Sentinel and Terraform Enterprise". For an overview of the Kubernetes provider, please read our previous blog post, "Managing Kubernetes with Terraform".

»Policy Enforcement on a Service

A Kubernetes service is an abstraction that Kubernetes provides for accessing the underlying pods. A service can have one of several “types”, including ClusterIP, NodePort, and LoadBalancer. By default, a service of type LoadBalancer creates a public-facing load balancer through the cloud provider that hosts the Kubernetes cluster. Organizations with governance requirements often do not want to expose Kubernetes services directly to the public internet through a cloud provider’s load balancers. Operators in those organizations might want to prevent services of type LoadBalancer from being created, or at least to review any request that would provision a public-facing load balancer. Let us look at a Sentinel policy that enforces this restriction. Consider an example pod and service that are managed using Terraform:

```hcl
provider "kubernetes" {}

resource "kubernetes_pod" "nginx" {
  metadata {
    name = "nginx-pod"

    labels {
      app = "nginx"
    }
  }

  spec {
    container {
      image = "nginx:1.7.8"
      name  = "nginx"

      port {
        container_port = 80
      }
    }
  }
}

resource "kubernetes_service" "nginx" {
  metadata {
    name = "nginx"
  }

  spec {
    selector {
      # NGINX pod labels are being referenced
      app = "${kubernetes_pod.nginx.metadata.0.labels.app}"
    }

    port {
      port        = 80
      target_port = 80
    }

    type = "${var.service_type}"
  }
}

variable "service_type" {
  default = "LoadBalancer"
}
```

The above example defines an NGINX pod and exposes it on port 80 using the “nginx” service of type LoadBalancer. Using Sentinel, we can enforce that only services of type NodePort or ClusterIP are created in Kubernetes. The following Sentinel policy does this:

```sentinel
import "tfplan"

allowed_service_types = [
	"NodePort",
	"ClusterIP",
]

// service_type_for returns the value of 'type' in the applied spec
// of a single kubernetes_service instance
service_type_for = func(service) {
	for service.applied.spec as _, spec {
		return spec["type"]
	}

	return false // false when service 'type' is not specified
}

// kubernetes_services returns a list of every 'kubernetes_service'
// instance in the Terraform plan
kubernetes_services = func() {
	services = []

	// tfplan.resources is keyed by resource type, then resource name,
	// then count index, so collect each instance individually. Returning
	// the per-type map as a single item would check only the first service.
	for tfplan.resources as type, resources {
		if type is "kubernetes_service" {
			for resources as _, instances {
				for instances as _, service {
					services += [service]
				}
			}
		}
	}

	return services // Every Kubernetes service found in the Terraform plan
}

// main is the entry point of the execution of Sentinel policies
main = rule {
	// Iterate over all Kubernetes services in the Terraform plan
	all kubernetes_services() as service {
		// For each Kubernetes service check the service type is allowed
		service_type_for(service) in allowed_service_types
	}
}
```

The above policy goes through all Kubernetes services that are part of a Terraform plan and passes only if each of them is of type NodePort or ClusterIP.

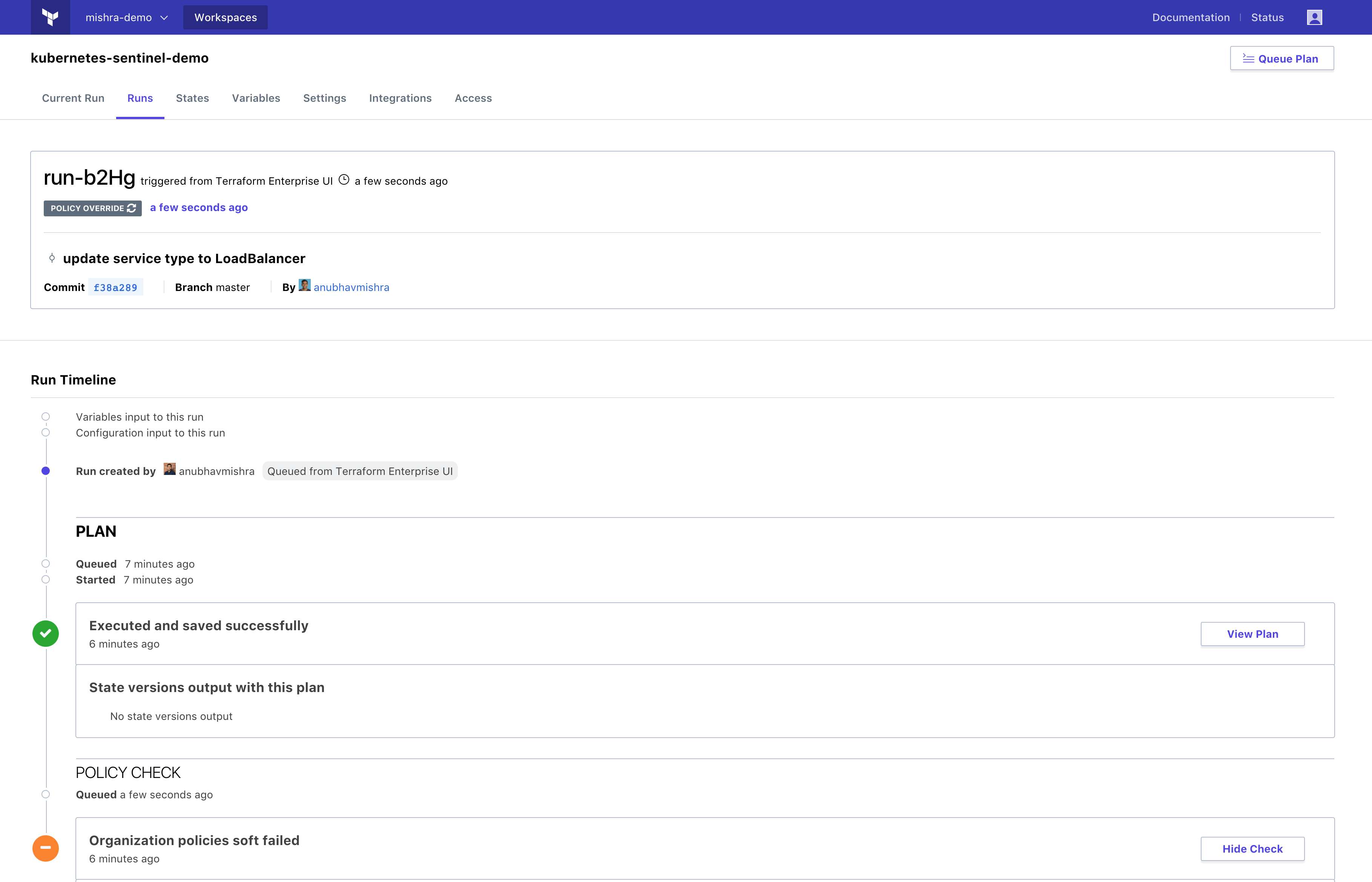

An operator can use enforcement modes to determine what happens if the policy fails. In this case, they might want to use “Soft mandatory”, which requires an operator with appropriate permissions to override any policy failure.

This enforcement mode provides a great way to validate and gatekeep the services that users request. Below is a screencast of this policy enforcement using Terraform Enterprise and Sentinel.

»Policy Enforcement on a Replication Controller

Using replication controllers in Kubernetes is a common way to keep a certain number of pods always running in the cluster. This number is defined by the replicas field of the replication controller. However, the user submitting the request to Kubernetes can provide an incorrect value for replicas. Such an error can cause the cluster to be over-utilized by the resources reserved for the pods that the replication controller manages. An operator might therefore want to enforce a maximum number of replicas allowed in a replication controller. Let us see how a Sentinel policy can enforce such a restriction. Consider a replication controller that is managed using Terraform:

```hcl
provider "kubernetes" {}

resource "kubernetes_replication_controller" "nginx" {
  metadata {
    name = "nginx-example"

    labels {
      app = "nginx-example"
    }
  }

  spec {
    replicas = "${var.replicas}"

    selector {
      app = "nginx-example"
    }

    template {
      container {
        image = "nginx:1.7.8"
        name  = "nginx"

        port {
          container_port = 80
        }

        resources {
          limits {
            cpu    = "0.5"
            memory = "512Mi"
          }

          requests {
            cpu    = "250m"
            memory = "50Mi"
          }
        }
      }
    }
  }
}

# NGINX kubernetes service specification
resource "kubernetes_service" "nginx" {
  metadata {
    name = "nginx-example"
  }

  spec {
    selector {
      # Replication controller labels are being referenced
      app = "${kubernetes_replication_controller.nginx.metadata.0.labels.app}"
    }

    port {
      port        = 80
      target_port = 80
    }

    type = "NodePort"
  }
}

variable "replicas" {
  default = 15
}
```

The above example defines a replication controller that manages an NGINX pod. The replication controller specifies that 15 replicas of the pod should run in the Kubernetes cluster. The “nginx” service is used to access the NGINX pods. An operator can enforce a maximum number of replicas for a replication controller by using the following Sentinel policy:

```sentinel
import "tfplan"

// replicas_for returns the value of 'replicas' in the applied spec
// of a single kubernetes_replication_controller instance
replicas_for = func(replication_controller) {
	for replication_controller.applied.spec as _, spec {
		return int(spec["replicas"])
	}

	return false // false when replicas are not specified
}

// kubernetes_replication_controllers returns a list of every
// 'kubernetes_replication_controller' instance in the Terraform plan
kubernetes_replication_controllers = func() {
	replication_controllers = []

	// tfplan.resources is keyed by resource type, then resource name,
	// then count index, so collect each instance individually. Returning
	// the per-type map as a single item would check only the first one.
	for tfplan.resources as type, resources {
		if type is "kubernetes_replication_controller" {
			for resources as _, instances {
				for instances as _, replication_controller {
					replication_controllers += [replication_controller]
				}
			}
		}
	}

	// Every Kubernetes replication controller found in the Terraform plan
	return replication_controllers
}

main = rule {
	// Iterate over all replication controller resources in the Terraform plan
	all kubernetes_replication_controllers() as replication_controller {
		// For each replication controller check if the replicas is less than 10
		replicas_for(replication_controller) < 10
	}
}
```

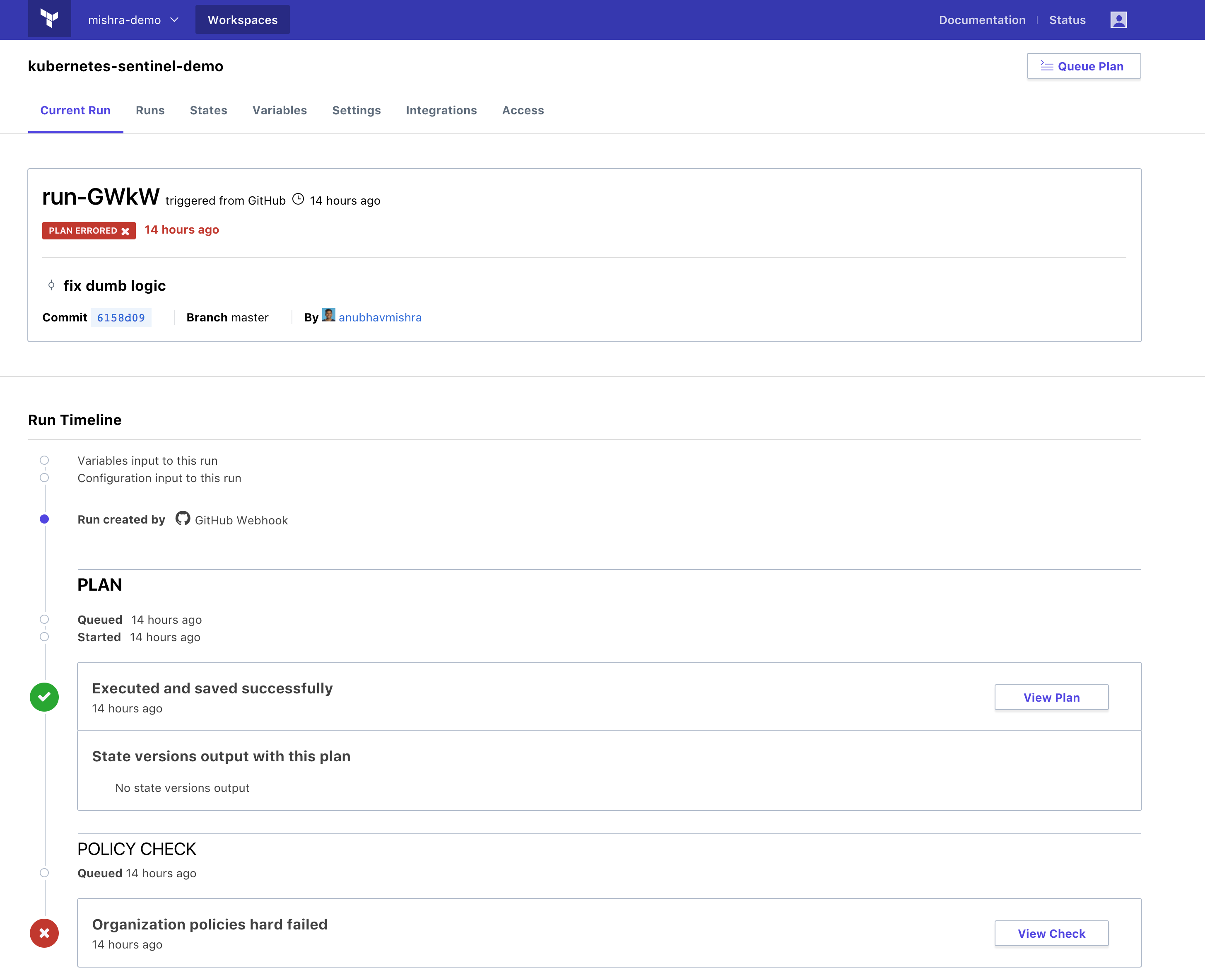

The above policy helps keep resource reservation in a Kubernetes cluster under control and helps prevent a situation where new workloads cannot be scheduled on the cluster. Because this policy is so critical, an operator may want to use “Hard mandatory” enforcement, which does not allow overrides on failure: the policy must pass before any changes are applied.
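Because a failure here cannot be overridden, it helps if the policy also says which replication controller broke the limit. The following is a minimal sketch of that idea, not the policy from the kubernetes-sentinel repository. The names `max_replicas` and `within_limit` are hypothetical, and the sketch assumes the same `tfplan.resources` layout (resource type, then name, then count index) that the policy above relies on.

```sentinel
import "tfplan"

// Hypothetical setting: replica counts must stay below this value,
// matching the "< 10" check in the policy above.
max_replicas = 10

// within_limit prints a message for every replication controller that
// reaches or exceeds max_replicas and returns false if any were found.
within_limit = func() {
	ok = true

	// Fall back to an empty map when the plan has no replication controllers.
	controllers = tfplan.resources.kubernetes_replication_controller else {}

	for controllers as name, instances {
		for instances as _, rc {
			replicas = int(rc.applied.spec[0]["replicas"])
			if replicas >= max_replicas {
				print("kubernetes_replication_controller." + name + " requests " + string(replicas) + " replicas")
				ok = false
			}
		}
	}

	return ok
}

main = rule { within_limit() }
```

Collecting every violation before returning, rather than stopping at the first one, lets the person who submitted the plan fix all offending resources in one pass.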

Below is a screencast of this policy enforcement using Terraform Enterprise and Sentinel.

»Other Examples

Other Sentinel policy examples can be found in the kubernetes-sentinel GitHub repository, including the following:

- limit-range - Validates a Kubernetes pod’s CPU limits
- namespace - Validates a Kubernetes namespace naming convention

Feel free to fork the kubernetes-sentinel GitHub repository to your GitHub account and try out the examples that are covered in the post.
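To give a sense of what a naming-convention check can look like, here is a minimal sketch. It is not the policy from the repository: the pattern in `name_pattern` is a hypothetical convention chosen for illustration, and the sketch assumes that the namespace name is exposed at `applied.metadata[0].name`, in the same way that `spec` is exposed as a list in the policies above.

```sentinel
import "tfplan"

// Hypothetical convention: a lowercase team prefix, a hyphen, and an
// environment suffix, for example "payments-dev".
name_pattern = "^[a-z]+-(dev|staging|prod)$"

// Fall back to an empty map when the plan has no namespaces.
namespaces = tfplan.resources.kubernetes_namespace else {}

main = rule {
	// Outer loop: resource names. Inner loop: count indexes.
	all namespaces as _, instances {
		all instances as _, ns {
			ns.applied.metadata[0].name matches name_pattern
		}
	}
}
```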

»Why Use Sentinel with Kubernetes?

»Fine-Grained Policy Control and Consistent Workflow

Kubernetes provides a flexible platform to deploy and manage modern containerized applications. While access controls are supported to restrict who can use the cluster, fine-grained policy is much more complex. When using Terraform to orchestrate Kubernetes, we get the benefits of infrastructure as code and a consistent workflow for provisioning infrastructure. In addition, Terraform Enterprise enables us to use Sentinel to enforce fine-grained policies on top of any existing access controls. This lets us enforce security policies and operational best practices, and avoid compliance issues.

»Plan Simulation

Sentinel provides the tfplan import, which gives a policy access to the Terraform plan. The plan simulates the effects of allowing the given changes. Policy enforcement can therefore use this simulated state to validate a resource as it would look after the changes are applied, before anything reaches the cluster.
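The shape of that simulated state can be illustrated without a real plan. The sketch below uses a hand-written map named `resources` as a simplified stand-in for the part of `tfplan.resources` that the policies in this post read. The data is hypothetical, and a list stands in for the count index level. Real plan data comes from the tfplan import and contains many more attributes.

```sentinel
// Hypothetical stand-in for tfplan.resources:
// resource type, then resource name, then count index, then "applied".
resources = {
	"kubernetes_service": {
		"nginx": [
			{
				"applied": {
					"metadata": [{"name": "nginx"}],
					"spec":     [{"type": "LoadBalancer"}],
				},
			},
		],
	},
}

// The value a policy would see for the service type after the plan is applied.
planned_type = resources["kubernetes_service"]["nginx"][0]["applied"]["spec"][0]["type"]

// Fails for this data, because LoadBalancer is not in the allowed list.
main = rule { planned_type in ["NodePort", "ClusterIP"] }
```

Nested blocks such as `metadata` and `spec` appear as lists, which is why the policies in this post loop over `applied.spec` instead of reading it as a single map.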

»Simplicity

The Sentinel policy language is designed to be simple. It is easy to understand and doesn’t require formal knowledge of programming. Unlike admission hooks in Kubernetes, these policies don’t require you to write Go code to enforce them. No modifications need to be made to the Kubernetes cluster either, since enforcement happens in Terraform Enterprise before the Kubernetes API is called. The Sentinel language makes it easy for policymakers to create and enforce policies on Kubernetes resources.

»Conclusion

Sentinel gives organizations a granular way to enforce policies on Kubernetes resources, with the automation necessary to scale efficiently. This post discusses only two examples of Sentinel policies, covering services and replication controllers, but the same approach can be applied to many other resources that are supported by the Terraform Kubernetes provider.

If you are already using Terraform Enterprise, you can try the two examples shown in the post. To learn more and get started with Terraform Enterprise or request a free trial, visit https://www.hashicorp.com/products/terraform.