Nomad 0.11 introduces a Technical Preview of the HashiCorp Nomad Autoscaler, a new open source project that automatically scales workloads running on a Nomad cluster. The autoscaler can itself be deployed on Nomad, and it uses a plugin system that lets operators perform autoscaling in whatever way is appropriate for their workloads.

The Nomad Autoscaler is being released as a technical preview in order to get early feedback from the community. As a result, some of this content is subject to change. However, we're excited about this capability, and we know that first-class autoscaling support is something that the community is excited about as well.

This blog post gives an overview of the Nomad Autoscaler architecture, as well as the changes in Nomad 0.11 that support this and other autoscaler efforts. You can also check out the autoscaler preview video from Nomad Virtual Day or register to watch a live technical demo.

»Autoscaling Overview

Autoscaling refers to the automatic scaling of computational resources. Autoscaling is an important tool for many of our users. Applications and clusters must be given sufficient resources to meet business SLAs. However, resource utilization in excess of that need can result in unnecessary costs and/or scheduling contention. Complicating this balance is the reality that application and cluster loads can change dramatically over time, necessitating automatic adjustments capable of responding to real-time demands.

There are multiple types of autoscaling of interest to Nomad operators. In particular, horizontal application scaling is concerned with changing the desired count for a Nomad task group, scaling up/down the number of instances of an application. This tech preview for the Nomad Autoscaler is concerned solely with horizontal application autoscaling. However, we're planning to support other autoscaling paradigms in future releases, such as horizontal cluster autoscaling and vertical application autoscaling.

»Nomad Autoscaler Architecture

The Nomad Autoscaler has been implemented as an external process which interacts with a new Scaling Policy API in Nomad. We decided on this approach for a number of reasons. A separate binary decouples our release lifecycle, which is particularly helpful as we finalize the architecture of the autoscaler. Furthermore, this means that the scaling policy API introduced in Nomad 0.11 is available for third-party autoscalers to use as well.
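To make the "external process" idea concrete, here is a minimal Go sketch of how a third-party autoscaler might prepare a scaling request for Nomad. The endpoint path (`/v1/job/<job>/scale`) and the payload fields (`Count`, `Target.Group`, `Message`) are written from memory of Nomad's HTTP API and should be treated as assumptions to check against the API documentation for your Nomad version. The helper name `newScaleRequest` is hypothetical, and the request is only built, never sent.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/url"
)

// scaleBody mirrors the JSON payload assumed for Nomad's job scale endpoint.
// Field names are an assumption; verify them against the Nomad API docs.
type scaleBody struct {
	Count   int64             `json:"Count"`
	Target  map[string]string `json:"Target"`
	Message string            `json:"Message"`
}

// newScaleRequest builds (but does not send) a request asking Nomad to set
// the desired count of one task group. It returns the request and the
// encoded payload so callers can log or inspect what would be sent.
func newScaleRequest(nomadAddr, jobID, group string, count int64, reason string) (*http.Request, []byte, error) {
	payload, err := json.Marshal(scaleBody{
		Count:   count,
		Target:  map[string]string{"Group": group},
		Message: reason,
	})
	if err != nil {
		return nil, nil, err
	}
	endpoint := nomadAddr + "/v1/job/" + url.PathEscape(jobID) + "/scale"
	req, err := http.NewRequest(http.MethodPost, endpoint, bytes.NewReader(payload))
	if err != nil {
		return nil, nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, payload, nil
}

func main() {
	// The address, job, and group names reuse the examples from this post.
	req, payload, err := newScaleRequest(
		"http://nomad.service:4646", "web-app", "example", 5,
		"hypothetical third-party autoscaler",
	)
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.String())
	fmt.Println(string(payload))
}
```

Because the autoscaler talks to Nomad only through HTTP calls like this one, any process that can reach the Nomad API could in principle play the same role.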

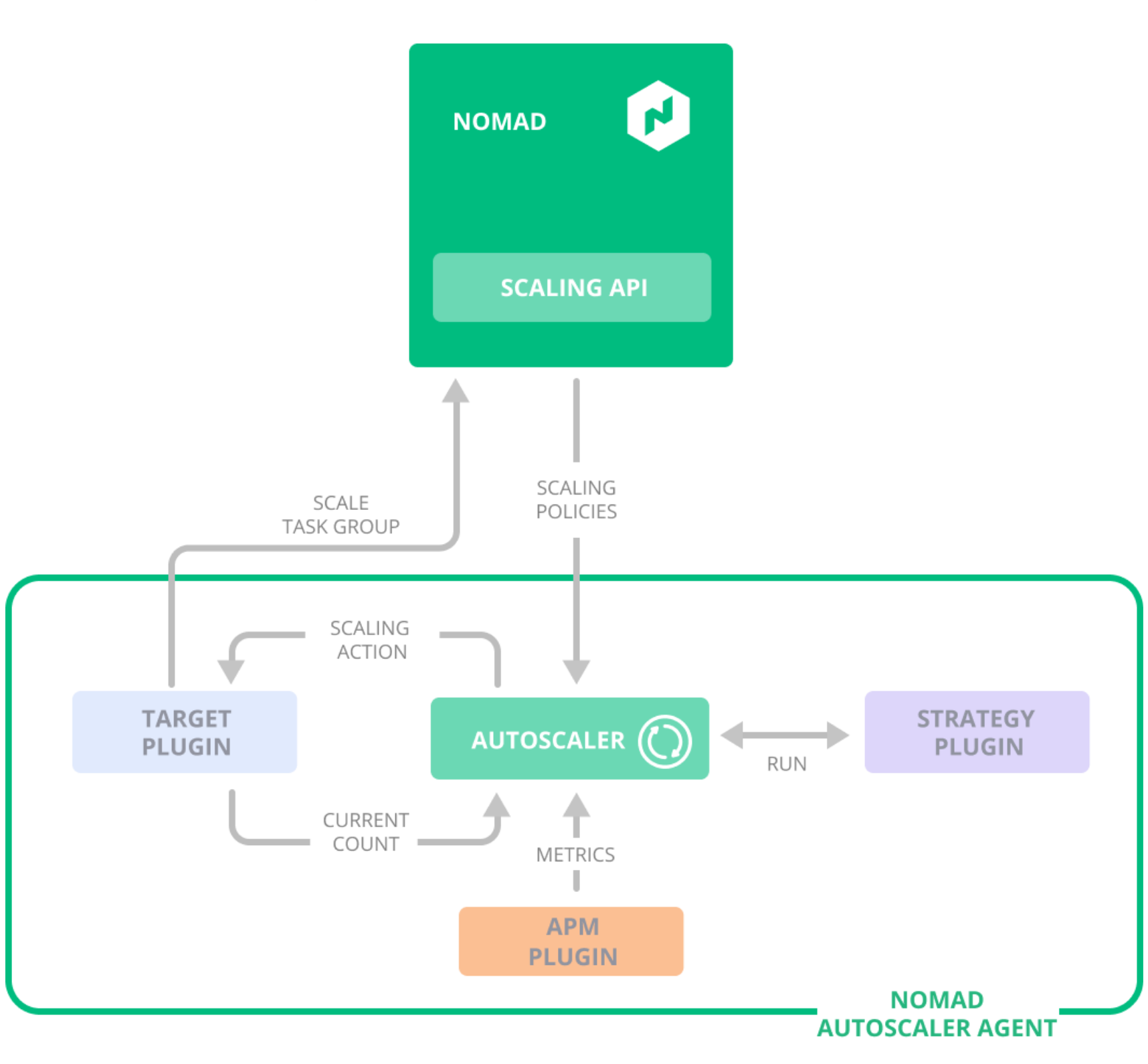

The operation of the Nomad Autoscaler is as follows: the Nomad Autoscaler pulls scaling policies from Nomad, and then implements a generic control loop, feeding application metrics into autoscaling strategies in order to determine the appropriate scaling actions for a particular target. Because effective scaling strategy varies widely from application to application, the Nomad Autoscaler provides multiple plugin systems enabling users to customize the operation of the autoscaler:

- APM plugins allow interfacing with different application performance metric systems, in order to pull the metrics necessary to make scaling decisions. The tech preview ships with plugins for Prometheus and native Nomad metrics.

- Target plugins provide the interface for performing scaling actions. The tech preview ships with a single target plugin, for Nomad task groups. This interface will become more significant in the future as we develop other autoscaling paradigms (e.g. cluster autoscaling).

- Strategy plugins implement the logic dictating when and how to scale a particular target, based on the current scaling level, the provided scaling strategy, and the observed metrics. The output from the scaling strategy is a set of scaling actions that is passed to the associated target plugin.

This interaction is illustrated in the figure below.

Illustration of the interaction between the Nomad Autoscaler, its plugins, and Nomad's APIs.
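The division of labor between the three plugin types can be sketched in Go as a single pass of a control loop. This is an illustration only: the interface names, method signatures, and the `stepStrategy` stand-in are hypothetical and are not the autoscaler's actual plugin API. The in-memory fakes exist so the sketch runs without Nomad or Prometheus.

```go
package main

import "fmt"

// APM is a hypothetical metrics source, e.g. a Prometheus client.
type APM interface {
	Query(query string) (float64, error)
}

// Strategy is a hypothetical decision function: given the current count and
// an observed metric, it proposes a new count.
type Strategy interface {
	Run(current int64, metric float64, config map[string]float64) int64
}

// Target is a hypothetical scalable object, e.g. a Nomad task group.
type Target interface {
	Count() (int64, error)
	Scale(count int64, reason string) error
}

// Policy carries the fields needed to evaluate one scaling policy.
type Policy struct {
	Query    string
	Min, Max int64
	Config   map[string]float64
}

// evaluate performs one pass of the control loop for a single policy.
func evaluate(p Policy, apm APM, s Strategy, t Target) error {
	current, err := t.Count()
	if err != nil {
		return fmt.Errorf("reading target count: %w", err)
	}
	metric, err := apm.Query(p.Query)
	if err != nil {
		return fmt.Errorf("querying APM: %w", err)
	}
	desired := s.Run(current, metric, p.Config)
	// Keep the result inside the policy's min/max bounds.
	if desired < p.Min {
		desired = p.Min
	}
	if desired > p.Max {
		desired = p.Max
	}
	if desired == current {
		return nil // nothing to do
	}
	return t.Scale(desired, fmt.Sprintf("metric %.1f, count %d -> %d", metric, current, desired))
}

// staticAPM always reports the same metric value.
type staticAPM float64

func (a staticAPM) Query(string) (float64, error) { return float64(a), nil }

// stepStrategy adds or removes one instance depending on which side of
// config["target"] the metric falls.
type stepStrategy struct{}

func (stepStrategy) Run(current int64, metric float64, config map[string]float64) int64 {
	switch target := config["target"]; {
	case metric > target:
		return current + 1
	case metric < target:
		return current - 1
	default:
		return current
	}
}

// memTarget keeps its count in memory instead of calling Nomad.
type memTarget struct{ count int64 }

func (m *memTarget) Count() (int64, error) { return m.count, nil }

func (m *memTarget) Scale(count int64, reason string) error {
	fmt.Println("scaling:", reason)
	m.count = count
	return nil
}

func main() {
	policy := Policy{Query: "example_query", Min: 1, Max: 10, Config: map[string]float64{"target": 20}}
	target := &memTarget{count: 3}
	if err := evaluate(policy, staticAPM(30), stepStrategy{}, target); err != nil {
		panic(err)
	}
	fmt.Println("count after one pass:", target.count)
}
```

Keeping the metric source, the decision logic, and the scaling action behind separate interfaces is what lets each one be swapped independently, which is the point of the plugin design described above.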

»Operator User Experience

The architecture of the Nomad Autoscaler was designed to streamline the user experience for operators already familiar with Nomad:

- Scaling policies targeting a task group are provided in the job specification as part of a new scaling block.

- The autoscaler itself can be run as a job on the Nomad cluster.

- Observability of scaling events happens through the Nomad API.

The steps below illustrate the workflow for running and using the autoscaler.

»Step 1. Deploy the Nomad Autoscaler

Deploy the autoscaler on Nomad. HashiCorp will release the Nomad Autoscaler through official raw binaries and Docker images, enabling the autoscaler to be run using either the exec or docker task drivers. Below is an example deploying the autoscaler using the docker task driver.

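    job "nomad-autoscaler" {
      # Placeholder datacenter; adjust to match your cluster.
      datacenters = ["dc1"]

      # Tasks must live inside a group; the group name here is illustrative.
      group "autoscaler" {
        task "autoscaler-pool" {
          driver = "docker"

          config {
            image = "hashicorp/nomad-autoscaler:tech-preview"
            args  = ["-config=config.hcl"]
          }

          # Render the autoscaler's own configuration file into the task.
          template {
            destination = "config.hcl"
            data        = <<EOH
    plugin_dir    = "./plugins"
    scan_interval = "5s"

    nomad {
      address = "http://nomad.service:4646"
    }

    apm "prometheus" {
      driver = "prometheus"
      config = {
        address = "http://prometheus.service:9090"
      }
    }

    strategy "target-value" {
      driver = "target-value"
    }
    EOH
          }
        }
      }
    }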

The ability to run the autoscaler on Nomad means that all the capabilities of the Nomad runtime are available, including downloading autoscaler plugins using artifact blocks and using template blocks to configure the autoscaler.

»Step 2. Configure a Nomad Job with an Autoscaling Policy

Once the autoscaler is running and connected to Nomad, it will query for scaling policies. Scaling policies for a task group are provided as part of the new scaling block, like so:

    job "web-app" {
      group "example" {
        scaling {
          enabled = true
          min     = 1
          max     = 10

          policy {
            source = "prometheus"
            query  = "scalar(avg(haproxy_server_current_sessions))"

            strategy = {
              name   = "target-value"
              config = { target = 20 }
            }
          }
        }

        task "server" {...}
      }
    }

This example policy refers to the "prometheus" source defined in the autoscaler configuration, as well as the "target-value" strategy plugin, specifying that the task group should be scaled so that the average number of connections to the service is 20.

»Getting Started

The Nomad Autoscaler, along with the source for its plugins, is available on GitHub. The repo includes instructions for building and running the autoscaler, as well as example usage. We'll be working with the HashiCorp education team to provide guides for best-practice deployment of the autoscaler and for building plugins. In the meantime, feel free to try it out and give us feedback in the issue tracker. To see this feature in action, register for the upcoming live demo session.

As a tech preview feature, autoscaling may not be functionally complete, and we are not providing enterprise support for Nomad Enterprise users running autoscaling in production. We’re excited to share this new architecture with the community to gather feedback, and we will officially add it to our support for Nomad Enterprise once the feature is generally available.