We are pleased to announce a tech preview of cluster peering, which will be available in the upcoming release of HashiCorp Consul 1.13. Cluster peering is a whole new model for how Consul handles cross-cluster federation: it enables connectivity between independently managed Consul clusters or Admin Partitions.

In larger enterprises, platform teams are often tasked with providing a standard networking solution to independent teams across the organization. Often these teams have made their own choices about cloud providers and runtime platforms, but the platform team still needs to enable secure cross-team connectivity. To make it work, the organization needs a shared networking technology it can build upon that works everywhere.

»Before Cluster Peering

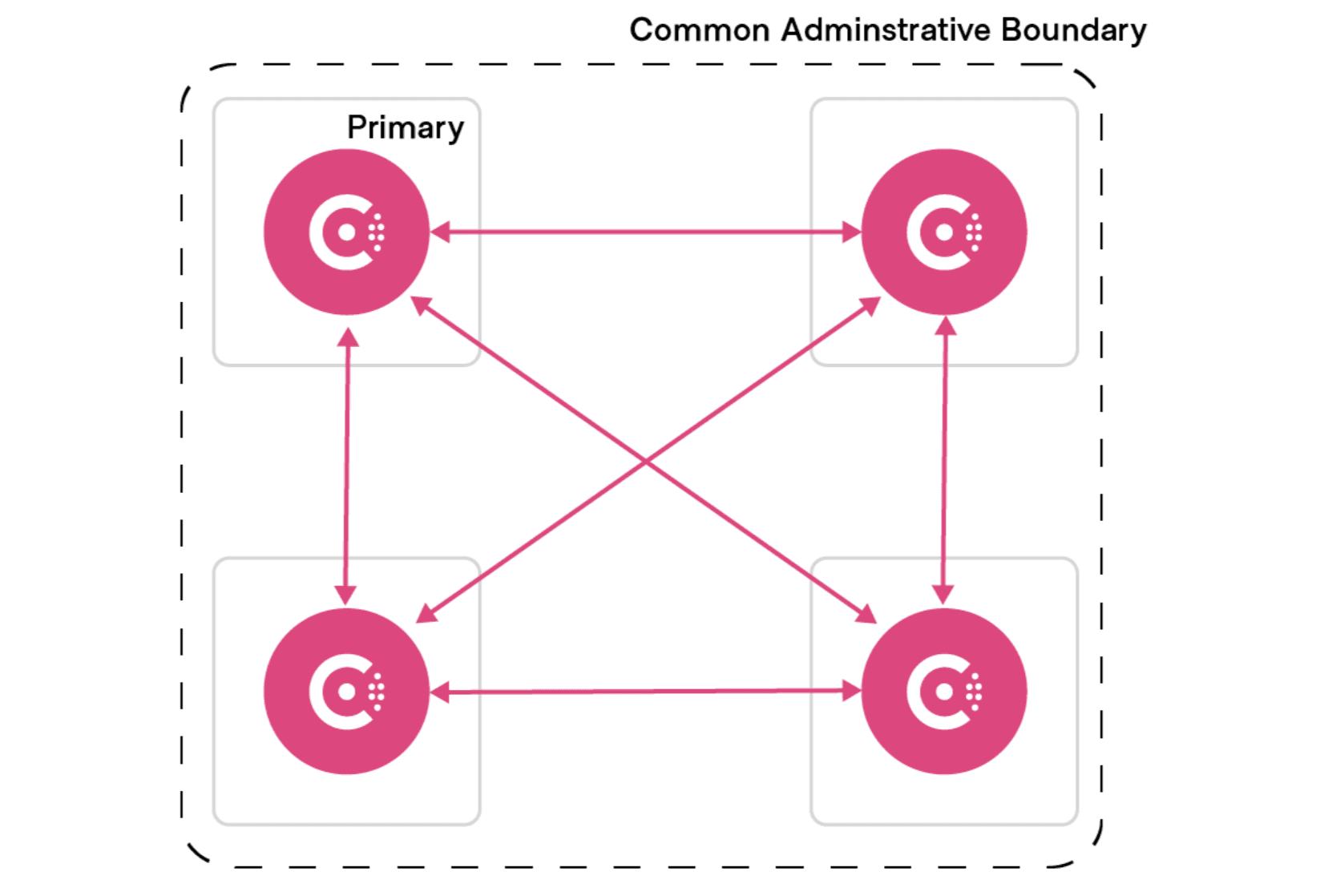

Consul’s current federation model is based on the idea that all Consul datacenters (also known as clusters) are under common administrative control. Security keys, policies, and upgrade activities are assumed to be coordinated across the federation. Mesh configuration and service identities are also global, which means that service-specific configurations, routing, and intentions are assumed to be managed by the same team, with a single source of truth across all datacenters.

This model also requires a full mesh of network connectivity between datacenters, as well as relatively stable connections to remote datacenters. If that matches how you manage infrastructure, WAN federation provides a relatively simple solution: with a small amount of configuration every service can connect to every other service across all your datacenters.
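To make the full-mesh requirement concrete: every pair of datacenters must be able to reach each other, so n datacenters need n(n-1)/2 links, a count that grows quadratically. The helper below is a hypothetical illustration of that arithmetic only; it is not part of Consul.

```python
from itertools import combinations


def full_mesh_links(datacenters):
    """Return every datacenter pair that needs connectivity in a full mesh (hypothetical helper)."""
    return list(combinations(datacenters, 2))


dcs = ["dc1", "dc2", "dc3", "dc4", "dc5"]
links = full_mesh_links(dcs)
print(len(links))  # 10, i.e. 5 * 4 / 2
```

Adding a sixth datacenter would raise the count to 15, which is why stable connectivity between all sites becomes harder to guarantee as a federation grows.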

The advantages of WAN federation include:

- Shared keys

- Coordinated upgrades

- Static primary datacenter

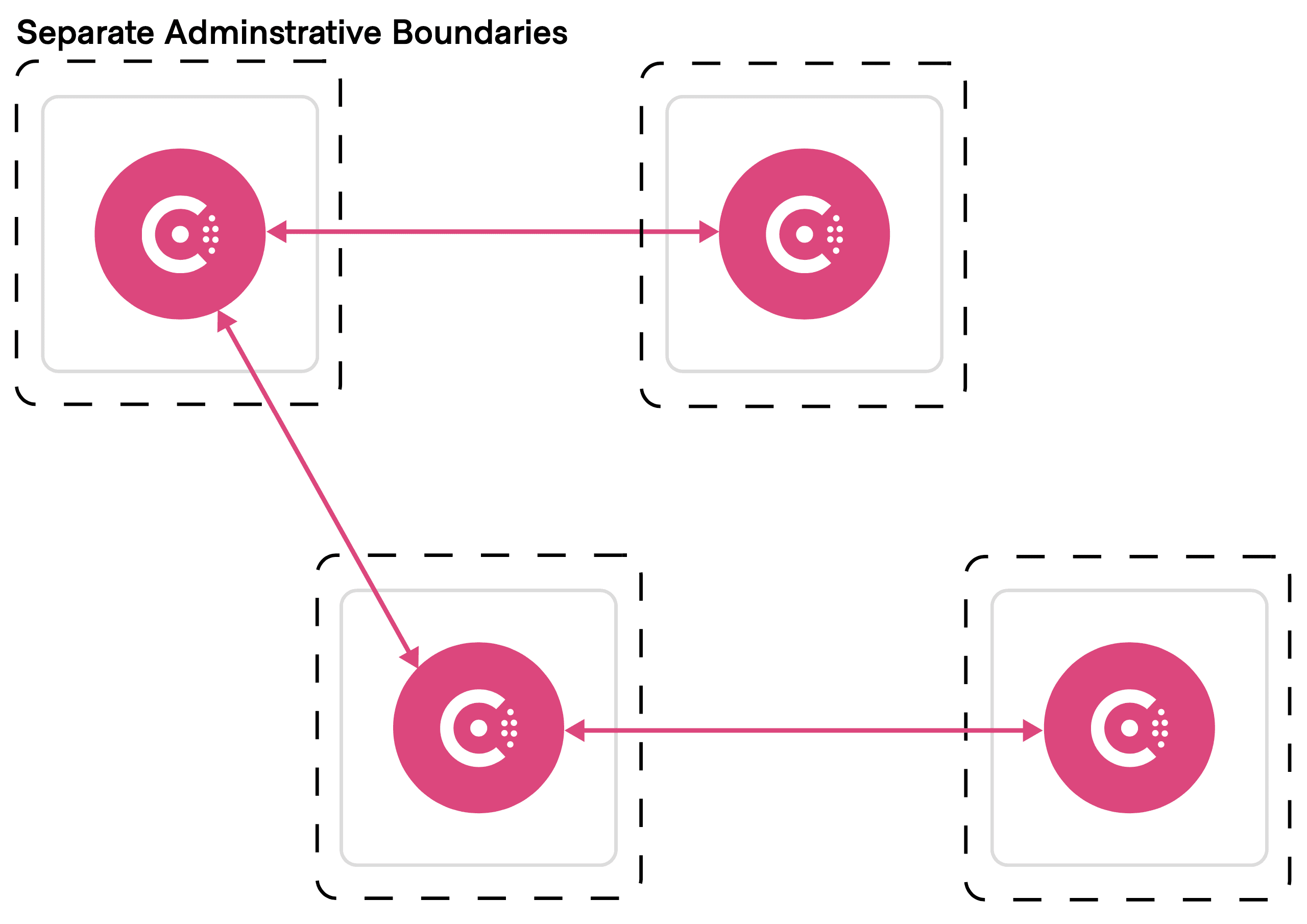

However, many organizations are deploying Consul into environments managed by independent teams across different network boundaries. These teams often need service connectivity with some, but not all, clusters, and they need the operational autonomy to define service mesh configuration specific to their needs without conflicting with configuration defined in other clusters across the federation. Those requirements expose several limitations of the WAN federation model:

- Reliance on connectivity to a primary datacenter.

- Assumption of resource sameness across the federation.

- Difficulty supporting complex hub-and-spoke topologies.

»With Cluster Peering

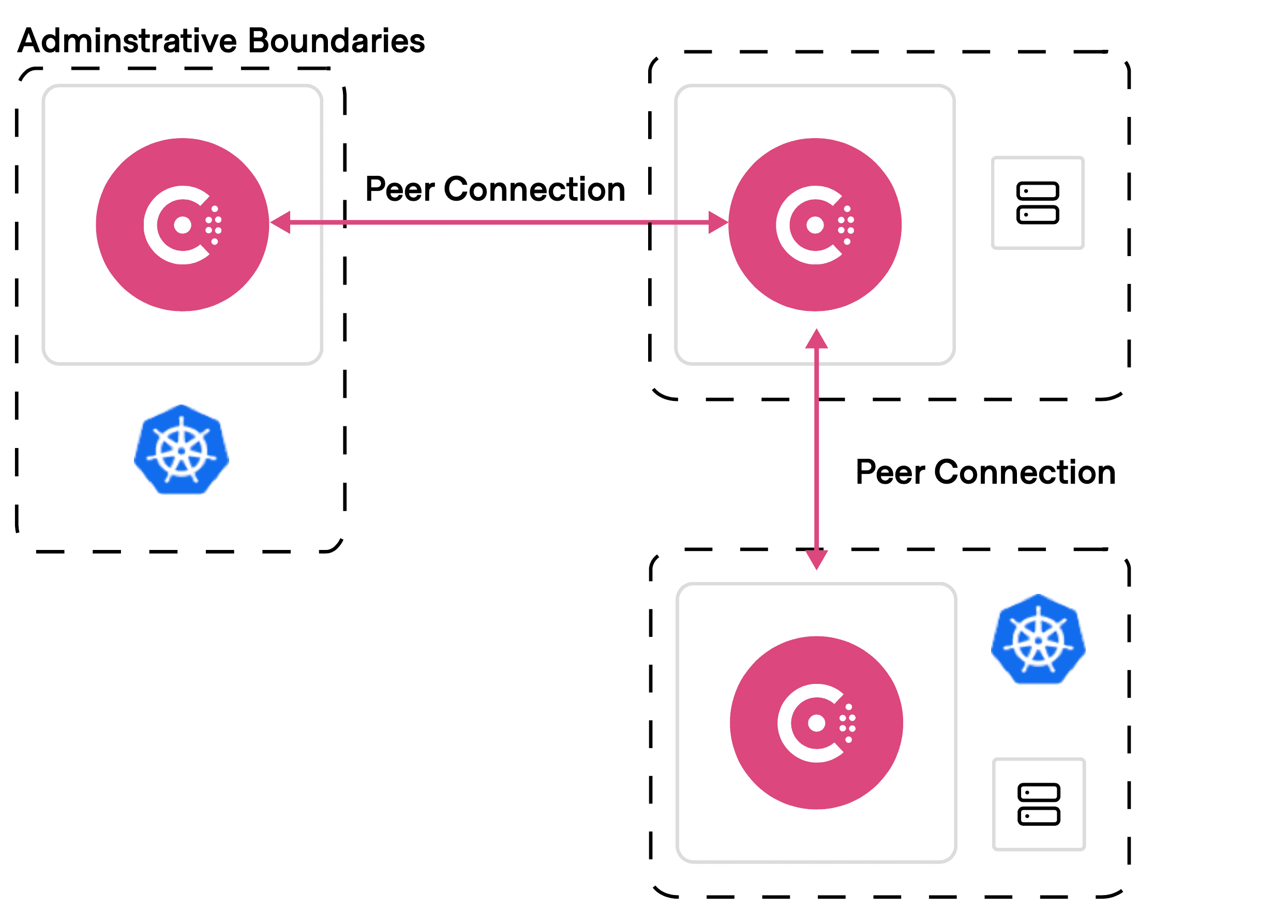

With cluster peering, each cluster is autonomous with its own keys, catalog, and access control list (ACL) information. There is no concept of a primary datacenter.

Cluster administrators explicitly establish relationships (or “peerings”) with clusters they need to connect to. Peered clusters automatically exchange relevant catalog information for the services that are explicitly exposed to other peers.
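As an illustration of the rule described above, here is a hypothetical Python model (not Consul's API or implementation) in which a cluster sees a remote service only when the two clusters are explicitly peered and the remote cluster has exported that service to it. The `Cluster` class and the `establish_peering` and `imported_services` functions are invented names for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical autonomous cluster: its own catalog, its own peerings, its own exports."""
    name: str
    services: set[str] = field(default_factory=set)
    peers: set[str] = field(default_factory=set)
    # Maps a local service name to the set of peer names it is exported to.
    exports: dict[str, set[str]] = field(default_factory=dict)

    def export(self, service: str, peer_name: str) -> None:
        """Explicitly expose one local service to one named peer."""
        if service not in self.services:
            raise ValueError(f"{service} is not registered in {self.name}")
        self.exports.setdefault(service, set()).add(peer_name)


def establish_peering(a: Cluster, b: Cluster) -> None:
    """Record an explicit peering relationship on both sides."""
    a.peers.add(b.name)
    b.peers.add(a.name)


def imported_services(consumer: Cluster, provider: Cluster) -> set[str]:
    """Services the consumer can see from the provider: nothing unless peered,
    and only the services the provider exported to this consumer."""
    if provider.name not in consumer.peers:
        return set()
    return {svc for svc, targets in provider.exports.items() if consumer.name in targets}


payments = Cluster("payments", services={"billing", "ledger"})
web = Cluster("web", services={"frontend"})
establish_peering(payments, web)
payments.export("billing", "web")
print(imported_services(web, payments))  # {'billing'}
```

In this model, `ledger` stays private to the `payments` cluster, and a third cluster that never peered with `payments` sees nothing at all, mirroring the opt-in exchange the paragraph describes.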

In the open source version of Consul 1.13, cluster peering will enable operators to establish secure service connectivity between Consul datacenters, no matter what the network topology or team ownership looks like.

Cluster peering provides the flexibility to connect services across any combination of team, cluster, and network boundaries. The benefits include:

- Fine-grained connectivity

- Minimal coupling

- Operational autonomy

- Support for hub-and-spoke peering relationships

Cluster peering enables customers to securely connect applications across internal and external organizational boundaries while preserving both the security of mutual TLS and the autonomy of independent service meshes.

»Consul Enterprise

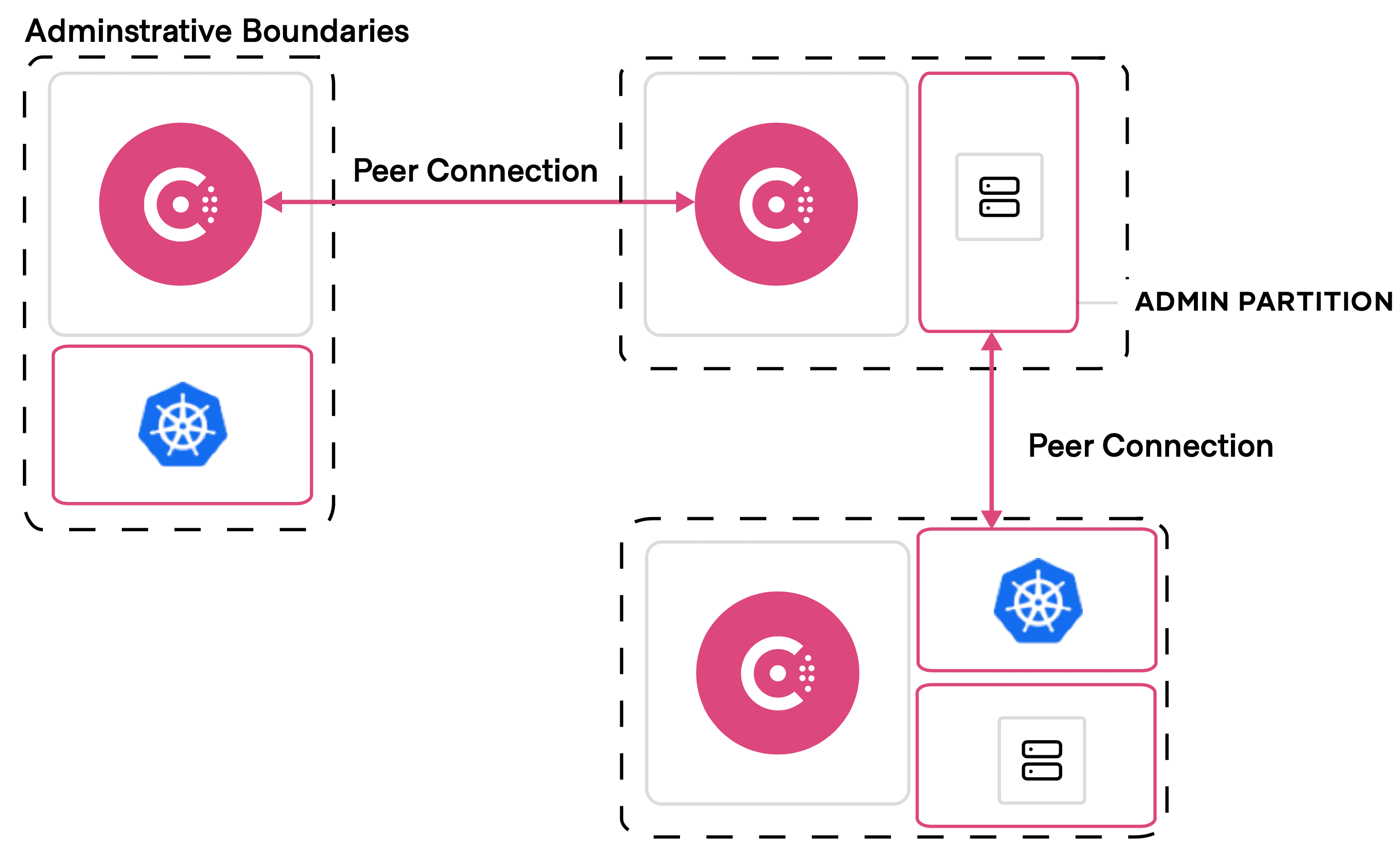

Admin Partitions, introduced in Consul 1.11, provides improved multi-tenancy, letting different teams develop, test, and run their production services on a single, shared Consul control plane. Admin Partitions lets your platform team operate shared servers to support multiple application teams and clusters. However, in Consul 1.11, Admin Partitions supports connecting only partitions served by the same servers in a single region.

Now, with cluster peering, partition owners can establish peering with clusters or partitions located in the same Consul datacenter or in different regions. Peering relationships are independent from any other team’s partitions, even if they share the same Consul servers.
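The independence described above can be pictured with a small hypothetical model (not Consul's implementation): one set of shared servers hosts several partitions, and each partition keeps its own peering list, so a peering created by one team never appears in another team's partition. The class name, partition names, and the `"eu-west/team-c"` remote label are all invented for illustration.

```python
class SharedServers:
    """Hypothetical model: shared Consul servers hosting several admin partitions,
    each with an independent set of peerings."""

    def __init__(self, datacenter: str, partitions: list[str]):
        self.datacenter = datacenter
        # One independent peering set per partition.
        self.peerings = {p: set() for p in partitions}

    def add_peering(self, partition: str, remote: str) -> None:
        """Record a peering only for the partition that established it."""
        self.peerings[partition].add(remote)


servers = SharedServers("us-east", ["team-a", "team-b"])
servers.add_peering("team-a", "eu-west/team-c")
print(servers.peerings["team-b"])  # set()
```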

»Next Steps

Cluster peering is an exciting new addition to Consul that gives operators greater flexibility in connecting services across organizational boundaries.

Note, however, that cluster peering is not intended to immediately replace WAN federation. Cluster peering will need additional functionality over time before it reaches feature parity with WAN federation. If you are already using WAN federation, there is no immediate need to migrate your existing clusters to cluster peering.

If you are interested in testing cluster peering, download the Consul 1.13 tech preview, then head over to consul.io/docs/connect/cluster-peering to get started configuring the feature.

The Consul team would love your feedback on cluster peering. Please share your thoughts with the team using this Consul feedback form.