HashiCorp Consul’s latest enterprise feature, Administrative Partitions, addresses resource inefficiencies that many customers face when they provision a dedicated service mesh for each team instead of sharing a single set of service mesh control plane resources. Running many separate meshes adds management work, undermines consistent governance, increases costs, and wastes resources.

This blog post will discuss many of these challenges and how Admin Partitions can solve them, delving into the details of how they work. For hands-on experience with Admin Partitions, please see the step-by-step tutorials in the HashiCorp Learn guide for Multi-Tenancy with Administrative Partitions.

»Why Use Multi-Tenancy?

In a world where cloud resources can easily be spun up by almost anyone (and often forgotten about), cloud efficiency and cost reduction have become a major focus for many organizations. One way to optimize resource usage is multi-tenancy. It lets groups within an organization share and consume the same resources while keeping each group securely isolated. This improves efficiency and reduces management overhead. In fact, multi-tenancy is a core practice at hyper-scale cloud vendors, where the underlying compute, network, and storage infrastructure is abstracted and shared among many end customers.

Admin Partitions, introduced in HashiCorp Consul 1.11, provide improved multi-tenancy for different teams to develop, test, and run their production services within a single Consul datacenter deployment. In addition to reducing the costs and complexity of managing multiple individual service meshes, Admin Partitions also strengthen security while enabling centralized governance.

»The Challenges Without Multi-Tenancy on a Service Mesh

Prior to Admin Partitions, disparate teams within an organization seeking to improve service discovery, apply zero trust security practices between services, and control traffic shaping were likely to deploy and manage their own service mesh solution. Running their own service mesh gives teams the freedom to work autonomously and manage their own resources.

However, consistent oversight across the many service mesh deployments throughout an organization becomes a challenge when different teams are applying their own security rules. Furthermore, the ability to quickly deploy managed Kubernetes clusters like Microsoft Azure Kubernetes Service (AKS), Amazon Elastic Kubernetes Service (EKS), and Google Kubernetes Engine (GKE) in the public cloud can accelerate the sprawl of individual service meshes, exacerbating the issue. Organizations are faced with issues around:

»Management Overhead

Operating a Consul service mesh deployment may not involve a lot of active management, but following best practices for scaling, governance, consistent security enforcement, high availability, and upgrades may require additional effort from each team. For some smaller organizations, a few teams managing their own Consul datacenters may not be too challenging, but as organizations scale to dozens of individual teams managing their own service mesh deployments, it can lead to unnecessary overhead. In cases where multiple deployments exist, it makes sense to centralize the operations and management.

»Resource Constraints

Many organizations have an existing central operations team dedicated to managing service discovery or service mesh platforms. However, even with a centralized management model, the centralized operations team still needs to manage potentially dozens of service mesh deployments across the organization. Not many organizations have the resources for that. Smaller organizations may have only a single individual responsible for the management of an entire fleet of deployments.

»Governance and Compliance

When different development teams manage their own service mesh deployments, there can be a lack of oversight, control, consistency, and security. There is a need to enforce a common set of security measures across all service meshes while still allowing individual teams to layer on their own security policies.

»High Costs

Many service meshes include control planes that run on underlying resources like virtual machines or Kubernetes nodes. The costs of these resources may not be significant individually, but at scale they can add up to considerable costs. In addition, Consul Enterprise licenses are calculated on a per Consul datacenter basis. This can be cost prohibitive if organizations have many teams deploying dozens of separate service meshes.

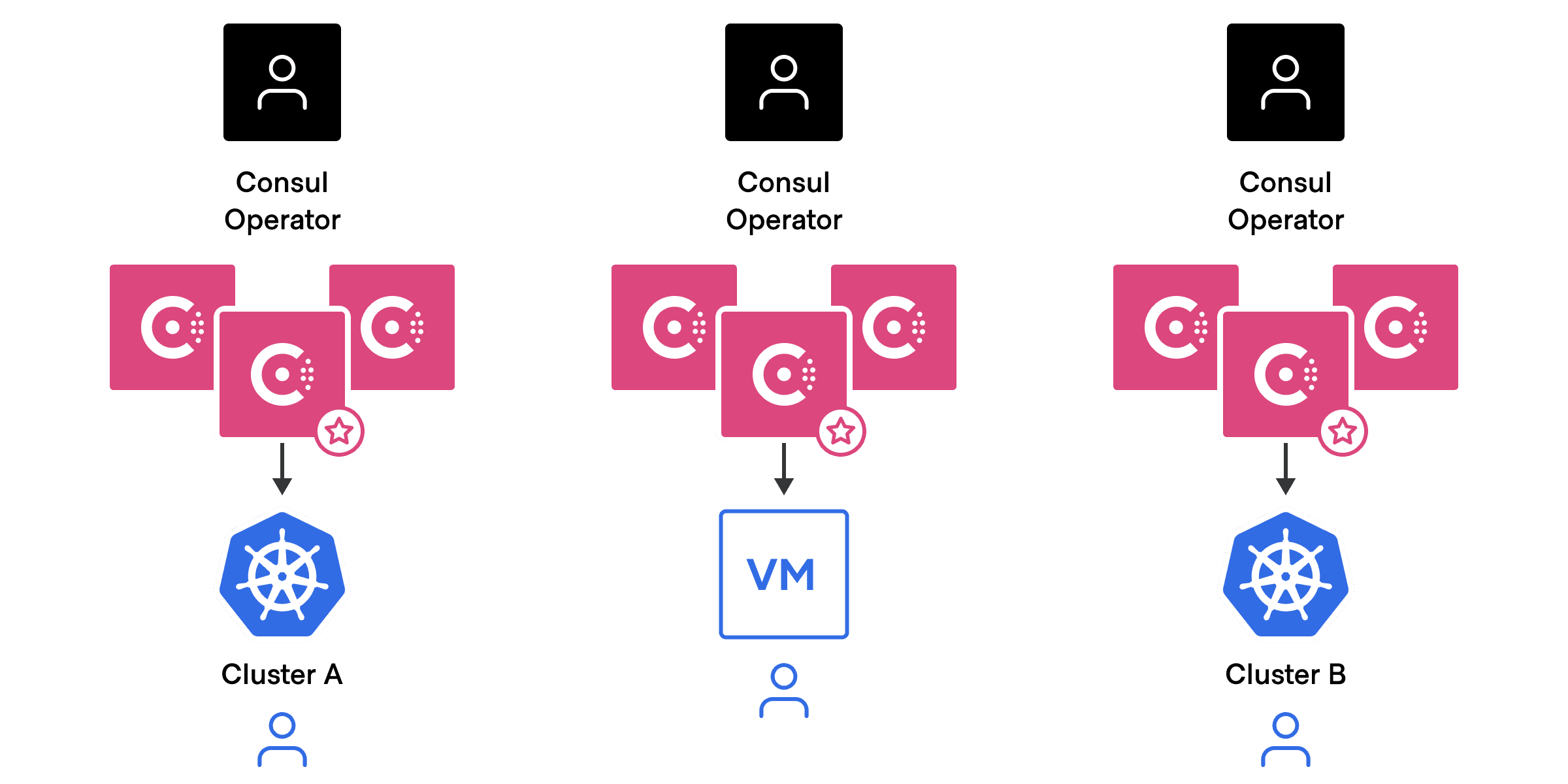

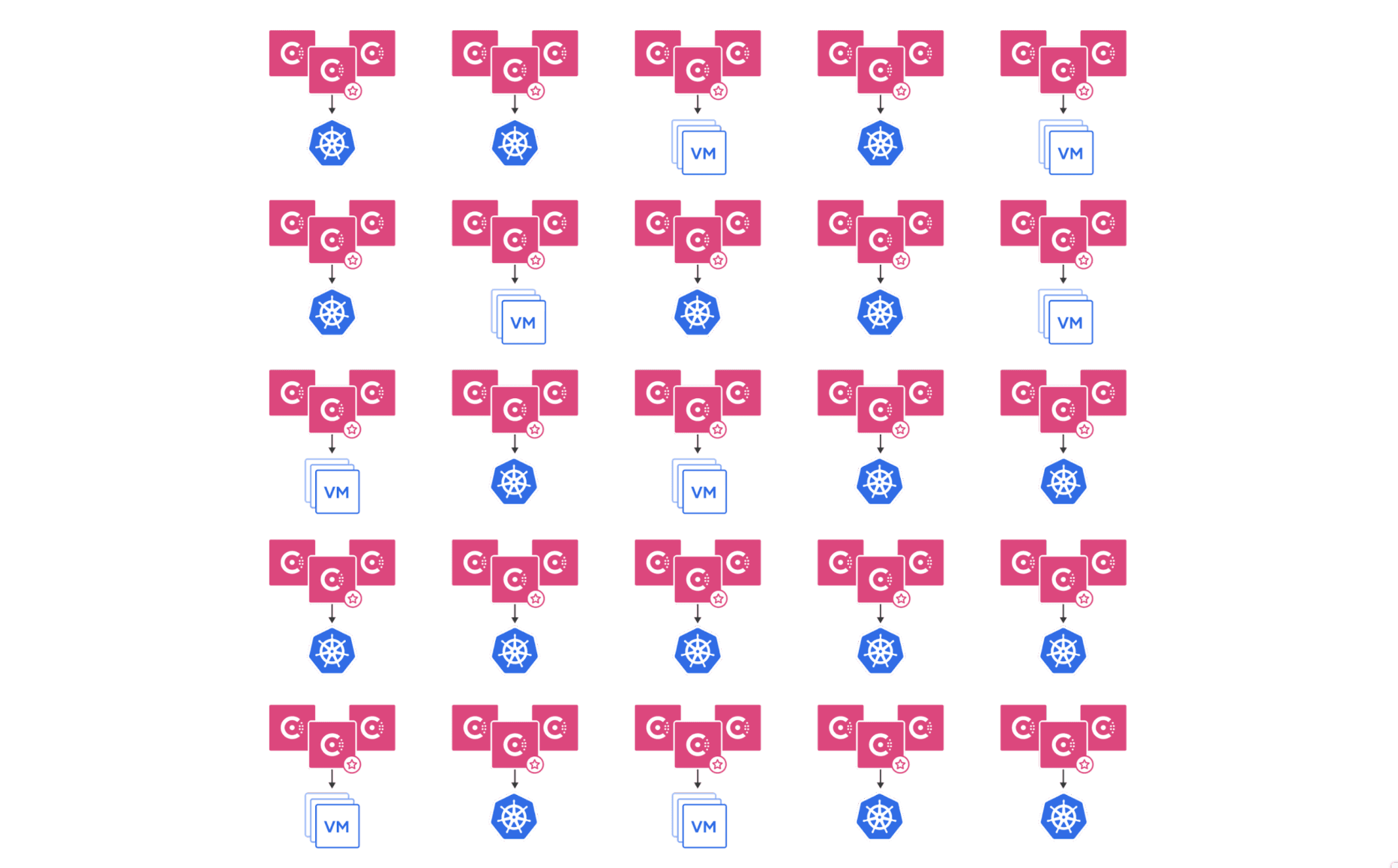

Small scale with a few Consul deployments can be manageable:

At a larger scale, management and cost become a consideration:

Consul already addresses some of these challenges by allowing multiple Kubernetes clusters to run within a single Consul datacenter. Consul Enterprise also offers namespaces, which provide a degree of resource separation.

While these existing capabilities help reduce the management overhead, costs, and resource constraints mentioned above, Consul still requires non-overlapping pod network IP address ranges between the Kubernetes clusters for services requiring cross-cluster communication. That runs counter to how most customers are designing and implementing Kubernetes today. In addition, it places a burden on the different teams to continually coordinate in order to ensure their networks do not conflict. This can impact their workflow, productivity, and ability to work autonomously.
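To make the coordination burden concrete, here is a minimal Python sketch (standard library only) of the kind of pre-flight check teams had to agree on before joining clusters to one Consul datacenter without partitions. It flags every pair of clusters whose pod CIDRs overlap. The cluster names and ranges are hypothetical, and this is an illustration rather than a Consul tool.

```python
import ipaddress
from itertools import combinations

# Hypothetical pod network ranges for clusters that would join a single
# Consul datacenter (without Admin Partitions).
POD_CIDRS = {
    "cluster-a": "10.0.0.0/16",
    "cluster-b": "10.0.128.0/17",  # falls inside cluster-a's range
    "cluster-c": "172.16.0.0/16",
}


def find_overlaps(cidrs: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of clusters whose pod CIDRs overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(networks), 2)
        if networks[a].overlaps(networks[b])
    ]


if __name__ == "__main__":
    for a, b in find_overlaps(POD_CIDRS):
        print(f"conflict: {a} and {b} share pod addresses")
```

Every new cluster means re-running a check like this against all the others, which is the cross-team dependency that partitions remove.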

»The Solution: Administrative Partitions

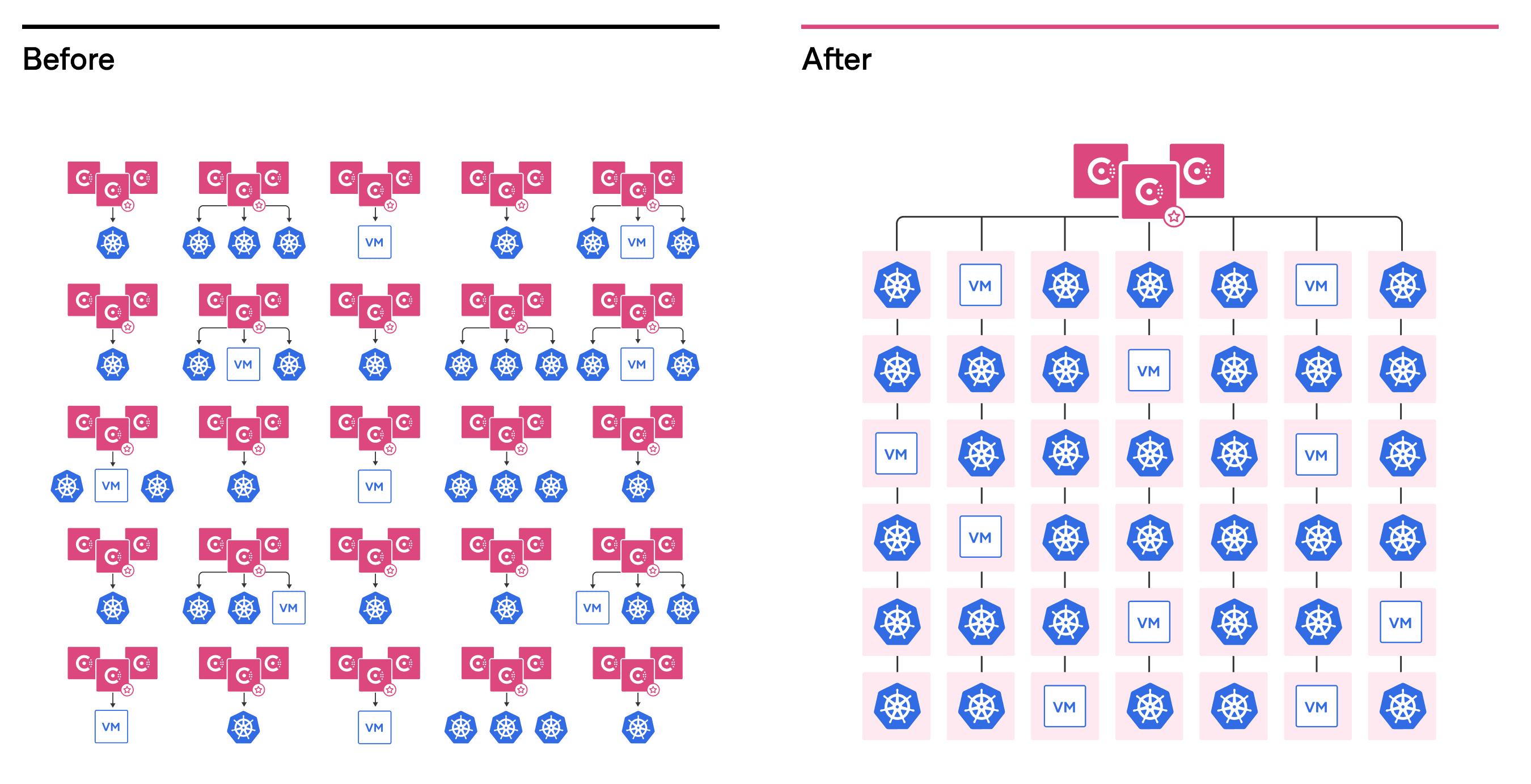

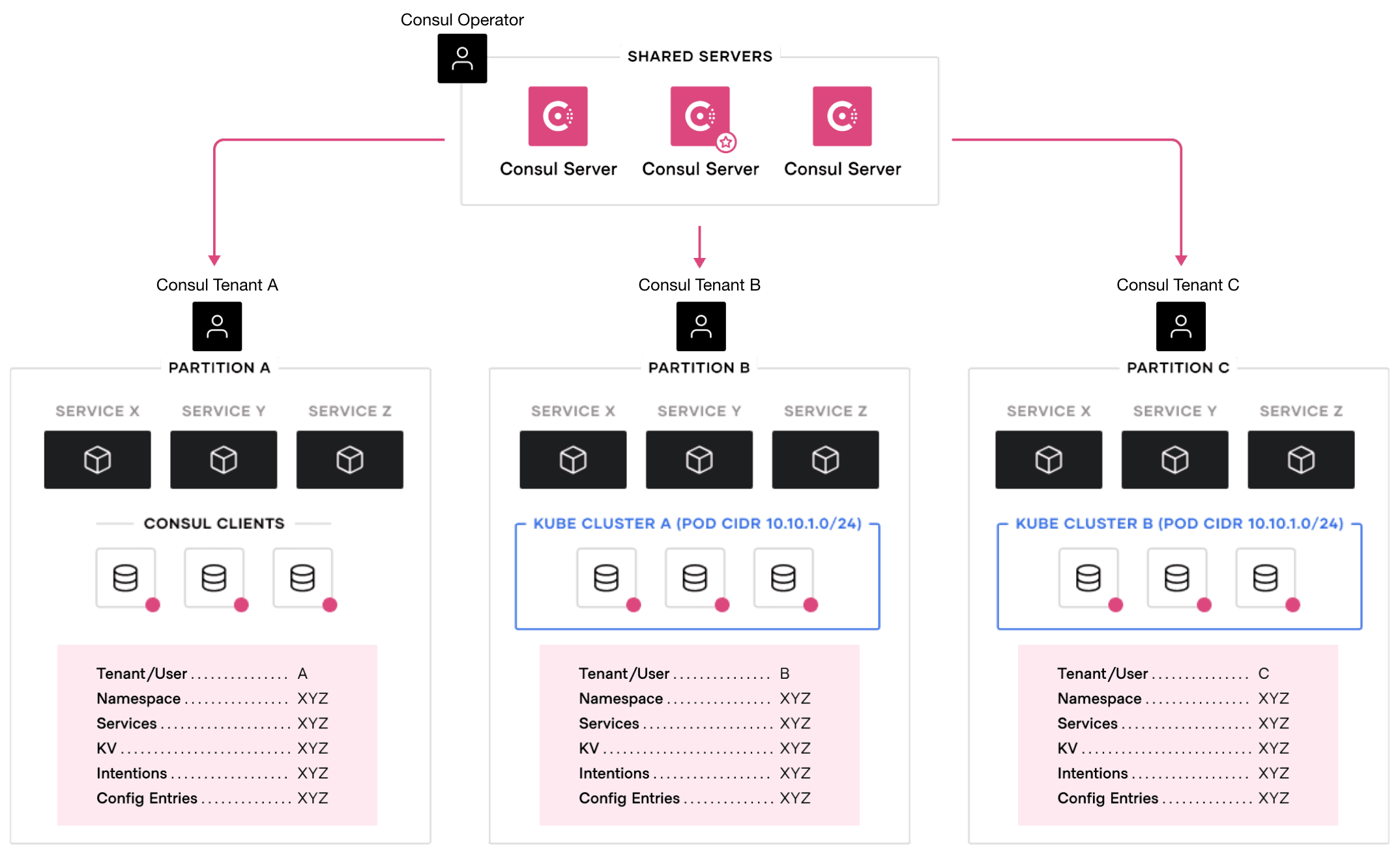

Admin Partitions solve these challenges by consolidating multiple Consul deployments onto a single Consul control plane and providing tenant separation by placing Consul clients into different logical groups, called partitions. Consolidating onto a single set of Consul servers helps to significantly reduce management and cost overhead. This model also enables a single centralized operations team or individual to manage and operate many separate partitions within a single Consul datacenter.

The centralized operations team can ensure that consistent governance and the appropriate policies are applied across the many partitions within the Consul datacenter. Tenants in each partition still keep their autonomy, with discrete ownership of the underlying VMs or Kubernetes clusters that run their services. They can operate as if they were running their own Consul deployment, without worrying about the overall health or maintenance of the service mesh control plane.

»Overlapping IPs

Admin Partitions also isolate tenants strongly enough that Kubernetes clusters in different partitions of the same Consul datacenter can have pod networks with overlapping IP address ranges. Before Admin Partitions, customers worked around overlapping IPs by deploying a separate Consul datacenter for each Kubernetes cluster and connecting those datacenters with mesh gateways. This worked, but it increased management complexity and cost. Admin Partitions remove that overhead by allowing these Kubernetes clusters to reside in the same Consul datacenter regardless of how their networks are laid out.

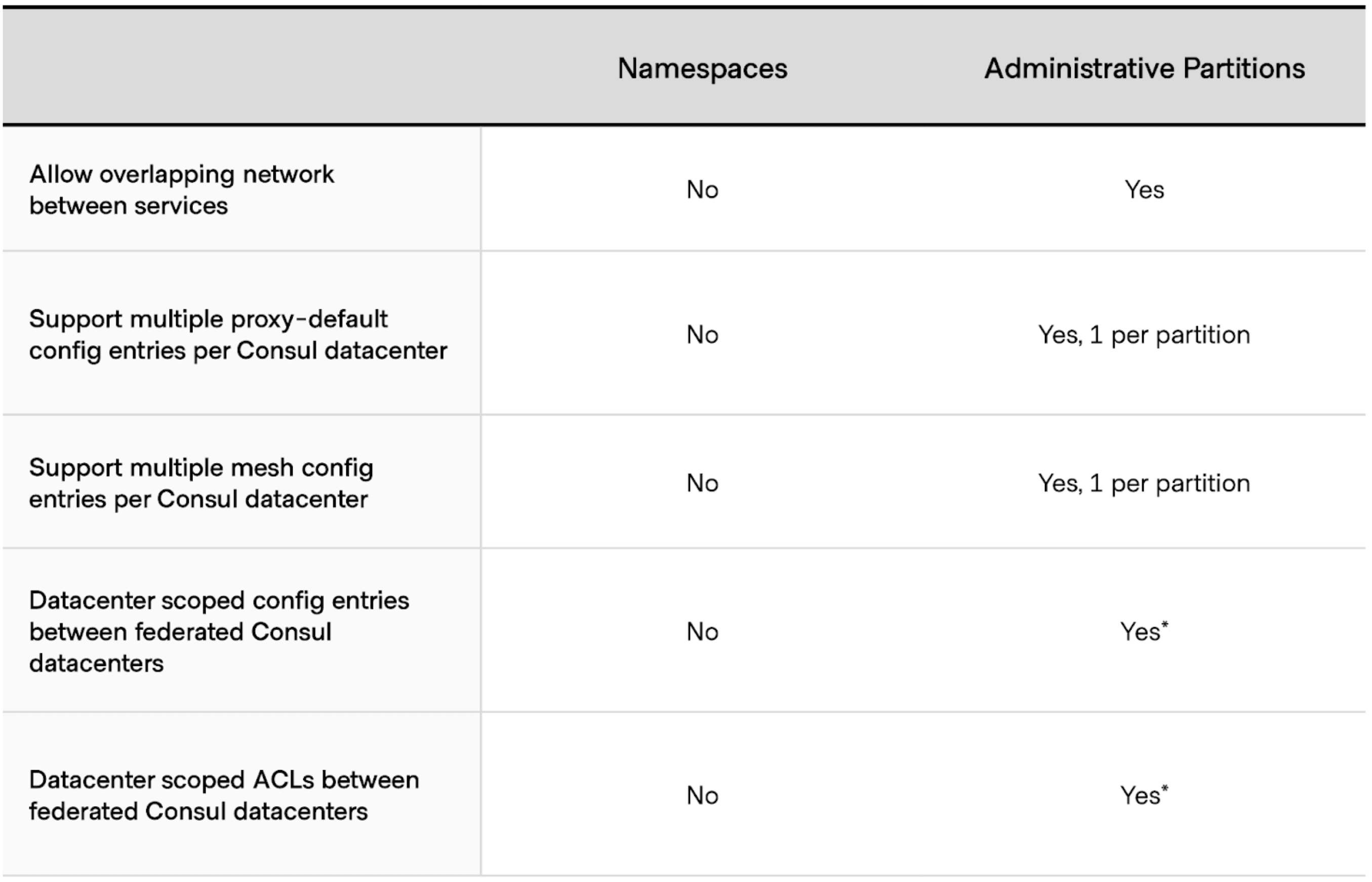

»Administrative Partitions vs Namespaces

The Consul namespaces feature, released in Consul Enterprise 1.7, provided some multi-tenancy capabilities. It allowed multiple tenants to reside in the same Consul datacenter by separating their resources into distinct logical namespaces. While this provided robust isolation, namespaces still required non-overlapping IP ranges between tenants.

In addition, some service mesh configurations, like proxy-defaults, apply globally regardless of namespace. This was inflexible when one tenant needed a different configuration from the others, even though each tenant had its own namespace.

Lastly, namespaces span across federated deployments of Consul clusters, so any configuration entry applied to a namespace of one Consul cluster will also apply to the same namespace of the remote Consul cluster.

Admin Partitions address these gaps with a stronger level of isolation between tenants. In fact, Admin Partitions are a superset of namespaces. Each partition will have its own set of resources including namespaces, key/value pairs, ACLs, etc.
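As an illustration of per-partition resources, the Python sketch below (standard library only) builds one proxy-defaults configuration entry per partition so that two tenants can use different default protocols. This is something a single global proxy-defaults entry could not express. The partition names and protocols are hypothetical. The Kind, Name, Partition, and Config fields follow the config entry format as we understand it for Consul Enterprise 1.11, so check the configuration entry reference for your version before applying anything.

```python
import json

# Hypothetical partitions and the default protocol each tenant wants.
PARTITION_PROTOCOLS = {"team-a": "http", "team-b": "grpc"}


def proxy_defaults(partition: str, protocol: str) -> dict:
    """Build a proxy-defaults config entry scoped to a single partition."""
    return {
        "Kind": "proxy-defaults",
        "Name": "global",        # proxy-defaults entries are named "global"
        "Partition": partition,  # Enterprise field that scopes the entry
        "Config": {"protocol": protocol},
    }


if __name__ == "__main__":
    for name, proto in PARTITION_PROTOCOLS.items():
        # Each JSON document could be saved to a file and applied with
        # `consul config write <file>` using a token for that partition.
        print(json.dumps(proxy_defaults(name, proto), indent=2))
```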

Note: Administrative Partitions are not yet supported in a federated topology in version 1.11. Support is expected in an upcoming release.

»How Do They Work?

Admin Partitions allow multiple tenants to reside in the same Consul datacenter and share a common set of Consul servers. When a new Consul datacenter is deployed with Admin Partitions enabled on the server nodes, a default partition is automatically created.

Each tenant can configure its Consul clients to join the datacenter in either the default partition or a newly defined partition. In the case of VM clients, the partition parameter can be set in the configuration file of each VM. For Kubernetes clients, the partition parameter is set in the Helm values file.
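As a rough sketch of where the partition setting lives, the Python below emits a client agent configuration for a VM and a partial set of consul-k8s Helm values for a Kubernetes cluster. The partition, datacenter, and server address are hypothetical placeholders. The Helm values are only a subset: a real client-only cluster will typically also need TLS, ACL, and join settings, so consult the Helm chart reference for your Consul version.

```python
import json

# Hypothetical placeholders: substitute your own values.
DATACENTER = "dc1"
PARTITION = "team-a"
SERVER_ADDRESS = "consul-server.example.internal"


def vm_client_config() -> dict:
    """Consul client agent configuration (JSON) that places a VM in a partition."""
    return {
        "datacenter": DATACENTER,
        "partition": PARTITION,  # Enterprise-only agent setting
        "retry_join": [SERVER_ADDRESS],
    }


def helm_values() -> dict:
    """Partial consul-k8s Helm values for a client-only cluster in a partition."""
    return {
        "global": {
            "datacenter": DATACENTER,
            "adminPartitions": {"enabled": True, "name": PARTITION},
        },
        # Servers run elsewhere; this cluster only hosts clients.
        "server": {"enabled": False},
        "externalServers": {"enabled": True, "hosts": [SERVER_ADDRESS]},
    }


if __name__ == "__main__":
    print(json.dumps(vm_client_config(), indent=2))
    # JSON is also valid YAML, so this output can be saved as a values file.
    print(json.dumps(helm_values(), indent=2))
```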

Once joined to a Consul datacenter, the services running on the Consul clients will, by default, only communicate with services in the same partition. In addition, each partition has its own set of resources, including namespaces, key-value stores, and proxy-defaults. With the use of partition-specific ACL tokens, Consul operators can limit their tenants to only see resources within their respective partitions. Consul operators can also assign tenant administrators to each partition to manage as their own isolated service mesh.
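The default isolation rule can be illustrated with a deliberately simplified in-memory model. This is an assumption-laden sketch, not how Consul stores or resolves catalog data. Services are keyed by partition, namespace, and name, and a lookup only returns instances registered in the caller's own partition. All names and addresses are hypothetical.

```python
from dataclasses import dataclass, field

# Illustrative model only: it mimics the default visibility rule described
# above and is not Consul's actual catalog implementation.


@dataclass
class Datacenter:
    # (partition, namespace, service name) -> list of instance addresses
    services: dict = field(default_factory=dict)

    def register(self, partition: str, namespace: str, name: str, addr: str) -> None:
        self.services.setdefault((partition, namespace, name), []).append(addr)

    def lookup(self, caller_partition: str, namespace: str, name: str) -> list[str]:
        """Resolve a service as seen from a caller in `caller_partition`."""
        # By default, callers only see services in their own partition.
        return list(self.services.get((caller_partition, namespace, name), []))


if __name__ == "__main__":
    dc = Datacenter()
    # The same service name in two partitions is two distinct services.
    dc.register("team-a", "default", "api", "10.0.1.5")
    dc.register("team-b", "default", "api", "10.0.1.5")
    dc.register("team-b", "default", "backend", "10.0.2.7")
    print(dc.lookup("team-a", "default", "backend"))  # []
    print(dc.lookup("team-b", "default", "backend"))  # ['10.0.2.7']
```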

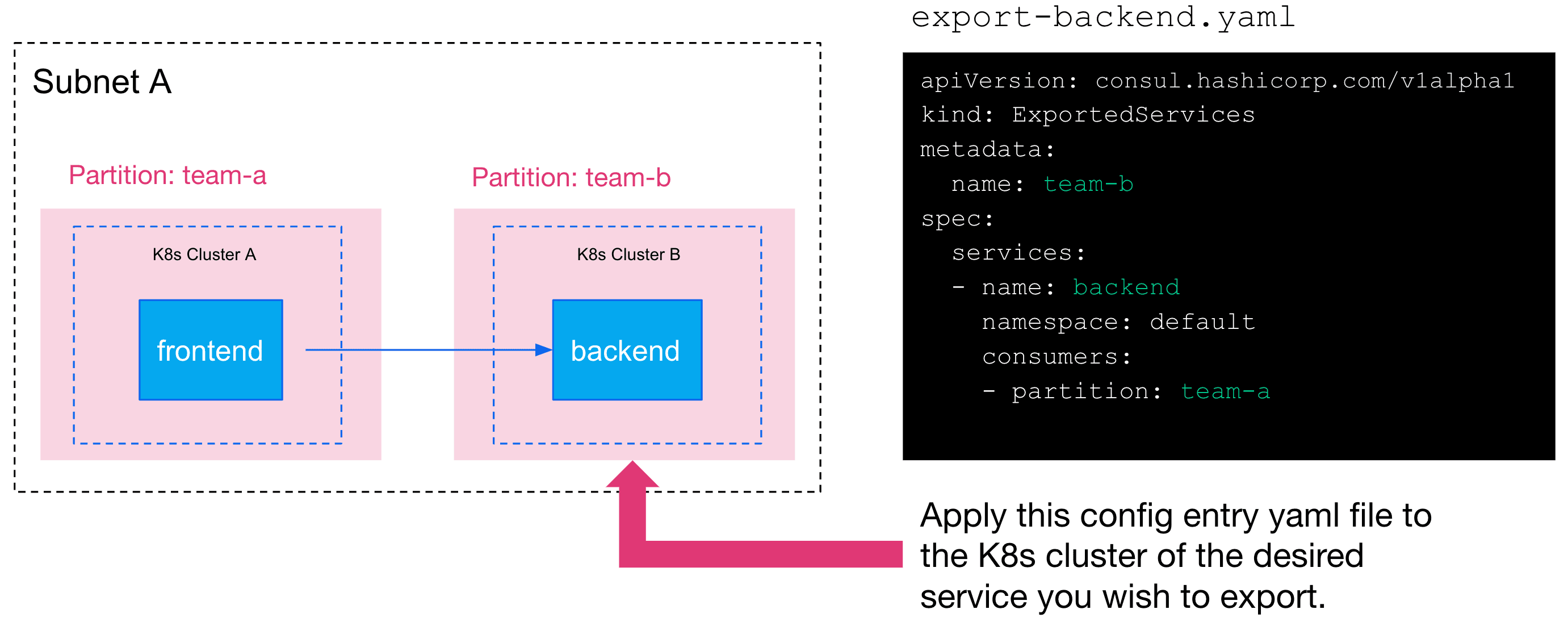

»Service-to-Service Communication Across Partitions

By design, Admin Partitions provide strict resource isolation between partitions. However, in some scenarios services in different partitions of the same Consul datacenter need to communicate. For example, Team A runs a service named frontend on Kubernetes cluster A, which belongs to partition team-a. The frontend service relies on an upstream service named backend, which runs on Kubernetes cluster B and belongs to partition team-b, managed by Team B. In this scenario, service-to-service traffic between the partitions can be allowed by deploying a configuration entry called exported-services on Kubernetes cluster B. The exported-services entry lists the services to export outside the local partition and specifies which remote partitions are allowed to consume them.
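The Python sketch below (standard library only) builds the exported-services entry for the frontend/backend example and adds a small helper that checks whether a given partition may consume a service. The field layout (Kind, Name, Partition, Services, Consumers) reflects our understanding of the Consul 1.11 entry, where the entry is named after its partition. Verify it against the configuration entry reference for your version. The is_exported_to helper is hypothetical, and the sketch covers only the exporting side.

```python
import json


def exported_services(partition: str, exports: dict[str, list[str]],
                      namespace: str = "default") -> dict:
    """Build an exported-services config entry for `partition`.

    `exports` maps each local service name to the partitions allowed to consume it.
    """
    return {
        "Kind": "exported-services",
        "Name": partition,  # in 1.11 the entry is named after its partition
        "Partition": partition,
        "Services": [
            {
                "Name": service,
                "Namespace": namespace,
                "Consumers": [{"Partition": p} for p in consumers],
            }
            for service, consumers in exports.items()
        ],
    }


def is_exported_to(entry: dict, service: str, consumer_partition: str) -> bool:
    """Hypothetical helper: does `entry` let `consumer_partition` consume `service`?"""
    return any(
        s["Name"] == service
        and any(c["Partition"] == consumer_partition for c in s["Consumers"])
        for s in entry["Services"]
    )


if __name__ == "__main__":
    entry = exported_services("team-b", {"backend": ["team-a"]})
    print(json.dumps(entry, indent=2))
    print(is_exported_to(entry, "backend", "team-a"))  # True
    print(is_exported_to(entry, "backend", "team-c"))  # False
```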

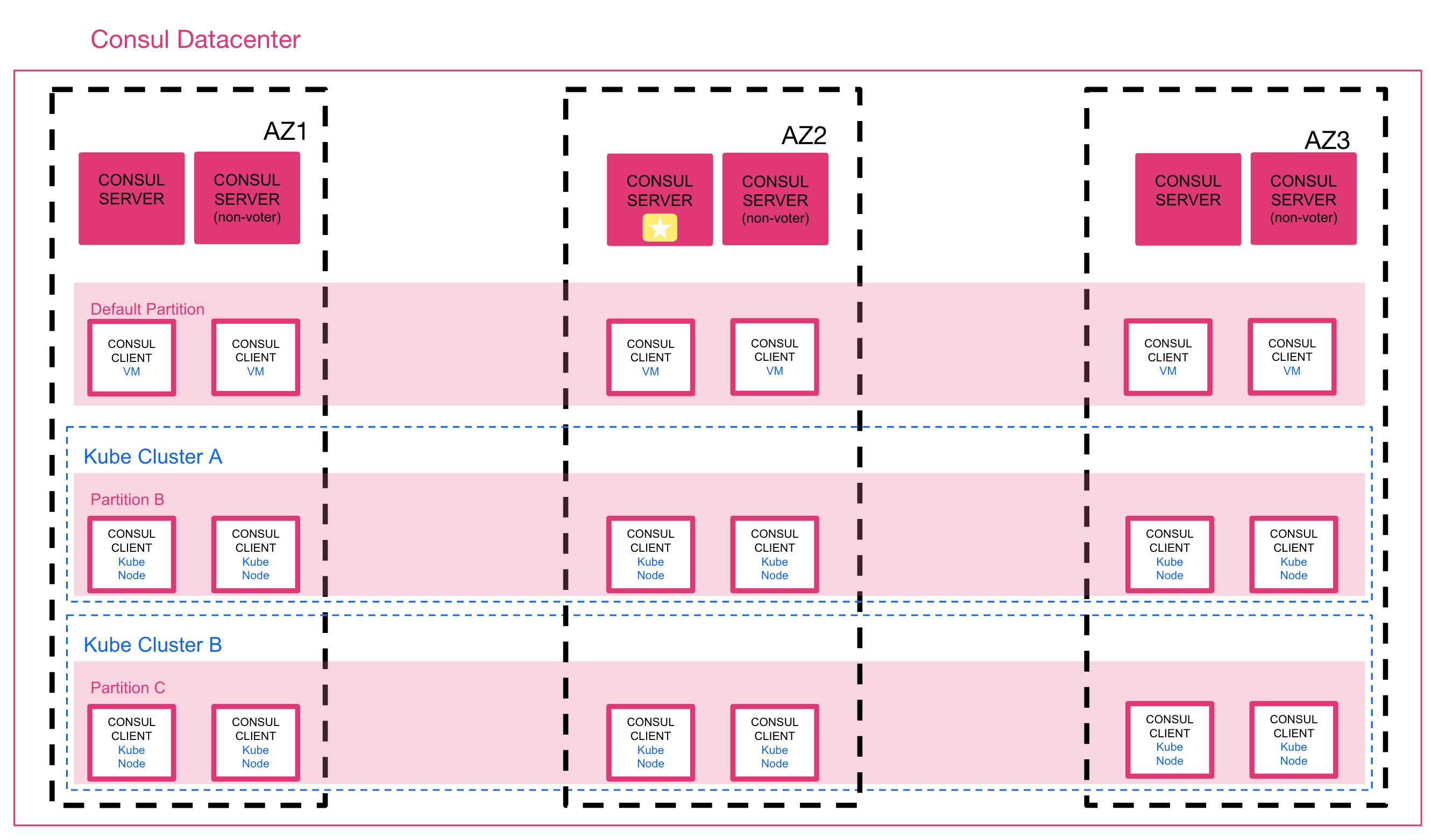

»Architecture

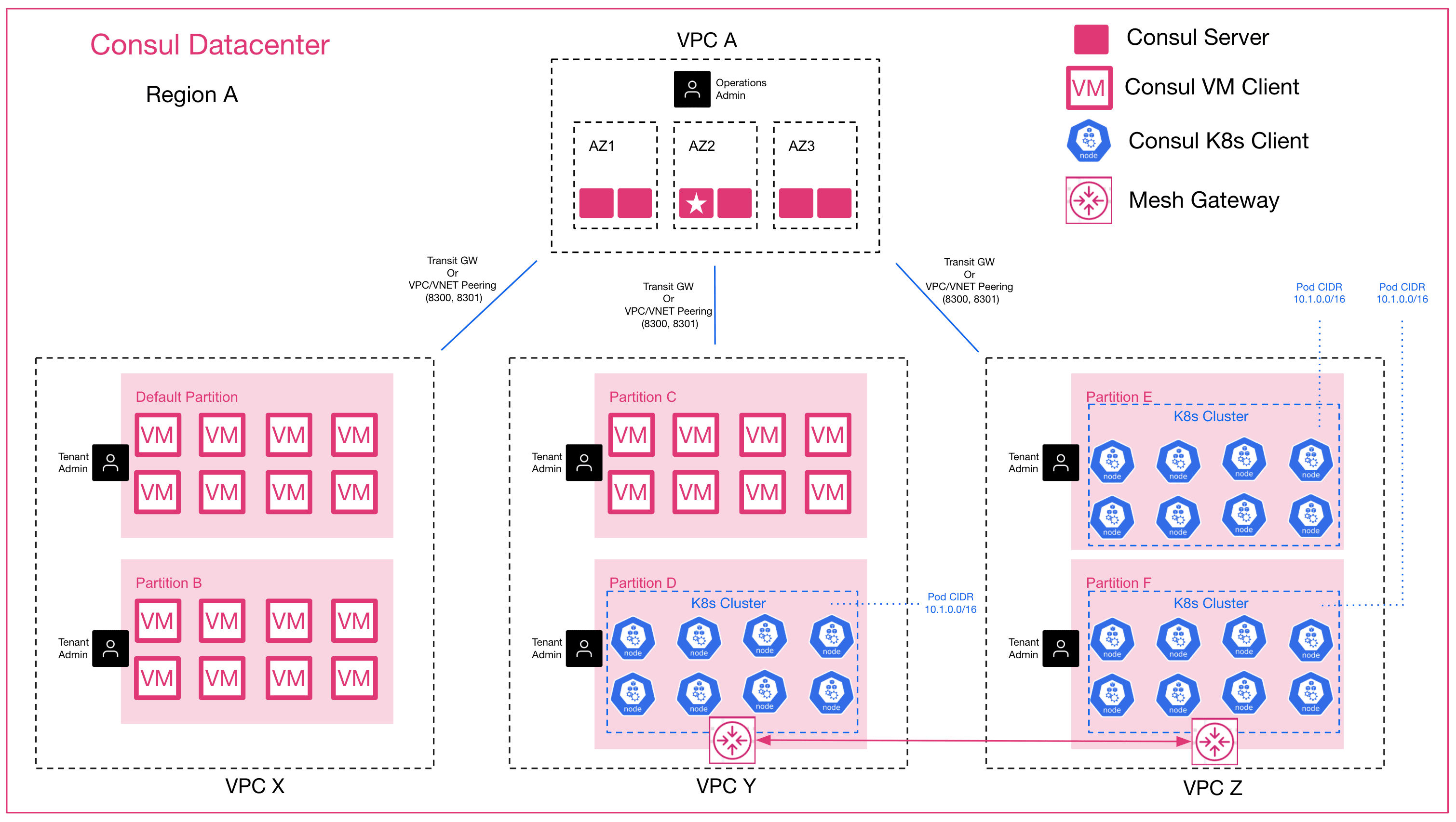

Admin Partitions do not change the existing recommended architecture for a Consul production deployment. Customers should still adhere to best practices provided in the Consul reference architecture documentation to ensure optimal performance, availability, and network connectivity. Here is an example reference to an enterprise deployment with relation to partitions:

Supported topologies and considerations for partitions include:

- Consul servers and clients may run on either VMs or on Kubernetes nodes.
- The default partition supports:
  - Multiple VMs in the default partition
  - Multiple Kubernetes clusters in the default partition
  - A mix of multiple Kubernetes clusters and multiple VMs in the default partition
- Each non-default partition supports:
  - Multiple VMs in the same partition
  - Multiple Kubernetes clusters per partition (one Kubernetes cluster per partition recommended)
  - A mix of one single Kubernetes cluster and multiple VMs in the same partition
- Kubernetes nodes within a single Kubernetes cluster must all reside in the same partition. The Kubernetes cluster cannot have nodes belonging to different partitions.

Note: A default partition is automatically created when Admin Partitions are enabled on the Consul server at time of deployment. A non-default partition is any partition created by the Consul operator or administrator.
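The topology rules above are simple enough to encode as a pre-deployment lint. The Python below is a hypothetical helper, not part of Consul. It treats a Kubernetes cluster whose nodes span partitions as an error. It treats more than one Kubernetes cluster in a non-default partition as a warning, mirroring the recommendation in the list. The node inventory is made up.

```python
from collections import defaultdict

# Hypothetical inventory: (node name, partition, kind, Kubernetes cluster or None)
NODES = [
    ("vm-1", "default", "vm", None),
    ("k8s-a-node-1", "team-a", "k8s", "cluster-a"),
    ("k8s-a-node-2", "team-a", "k8s", "cluster-a"),
    ("k8s-b-node-1", "team-b", "k8s", "cluster-b"),
    ("k8s-b-node-2", "team-c", "k8s", "cluster-b"),  # violates the rule
]


def check_topology(nodes):
    """Return (errors, warnings) for the partition placement rules."""
    errors, warnings = [], []
    cluster_partitions = defaultdict(set)
    partition_clusters = defaultdict(set)
    for _name, partition, kind, cluster in nodes:
        if kind == "k8s":
            cluster_partitions[cluster].add(partition)
            partition_clusters[partition].add(cluster)
    # Rule: all nodes of one Kubernetes cluster must share a partition.
    for cluster, parts in sorted(cluster_partitions.items()):
        if len(parts) > 1:
            errors.append(f"{cluster} spans partitions {sorted(parts)}")
    # Recommendation: one Kubernetes cluster per non-default partition.
    for partition, clusters in sorted(partition_clusters.items()):
        if partition != "default" and len(clusters) > 1:
            warnings.append(f"{partition} has {len(clusters)} Kubernetes clusters")
    return errors, warnings


if __name__ == "__main__":
    errs, warns = check_topology(NODES)
    print("errors:", errs)
    print("warnings:", warns)
```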

»Reference Diagram

The diagram below references an example hub-and-spoke design where the Consul datacenter consisting of Consul servers is managed by a global operations team in a central VPC. Tenants from various VPCs can join their Consul clients to the same Consul datacenter, resulting in separate partitions for each tenant. The global operations team can delegate partition-scoped administrative responsibilities to the respective tenant administrators.

On the control plane, network connectivity is required to join Consul clients to the Consul datacenter. If the Consul servers and Consul clients reside in different VPCs, transit gateways or VPC peering can provide the required connectivity. Keep in mind that connecting VPCs with transit gateways or other VPC peering solutions requires non-overlapping IP addresses between the Consul clients in each VPC.
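The distinction between control plane and data plane connectivity can be expressed as a small planning check. This hypothetical Python helper rests on two assumptions: each spoke VPC hosts exactly one tenant partition, and cross-partition service traffic flows through mesh gateways. Under those assumptions, it reports overlapping Consul client subnets as blocking VPC peering, while overlapping pod CIDRs are only noted as tolerated. The VPC names and ranges are made up.

```python
import ipaddress
from itertools import combinations

# Hypothetical spoke VPCs, one tenant partition each. "clients" is the subnet
# where Consul client agents run; "pods" is the Kubernetes pod CIDR.
VPCS = {
    "team-a": {"clients": "10.20.0.0/20", "pods": "100.64.0.0/16"},
    "team-b": {"clients": "10.30.0.0/20", "pods": "100.64.0.0/16"},
    "team-c": {"clients": "10.20.8.0/21", "pods": "100.65.0.0/16"},
}


def classify_overlaps(vpcs):
    """Split overlaps into ones that block peering and ones that are tolerated."""
    blocking, tolerated = [], []
    for a, b in combinations(sorted(vpcs), 2):
        # Client subnets must be unique for peering (control plane);
        # pod CIDRs may repeat across partitions (assumed mesh gateway data plane).
        for kind, bucket in (("clients", blocking), ("pods", tolerated)):
            net_a = ipaddress.ip_network(vpcs[a][kind])
            net_b = ipaddress.ip_network(vpcs[b][kind])
            if net_a.overlaps(net_b):
                bucket.append((a, b, kind))
    return blocking, tolerated


if __name__ == "__main__":
    blocking, tolerated = classify_overlaps(VPCS)
    print("blocks peering:", blocking)
    print("tolerated:", tolerated)
```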

For service-to-service traffic across partitions that reside in different VPCs, transit gateways or other peering solutions are not required: Consul uses mesh gateways to carry data plane traffic across VPCs. In addition, service-to-service connections between Kubernetes clusters with overlapping pod IP ranges are supported as long as the clusters are in different partitions.

»Conclusion

We hope that this post has provided you with a good understanding of the need for multi-tenancy, how Administrative Partitions can address these needs, and how it all works.

Consul is a networking solution that provides service discovery, secure services networking, and network infrastructure automation across multiple clouds and runtimes. Admin Partitions are one more feature that helps enterprises share Consul across many groups more cost-effectively. Beyond increasing efficiency and reducing cost through shared resources, Admin Partitions also help centralize management and enforce consistent governance. At the same time, they let an organization divide labor and distribute responsibility across its groups.

For more information and hands-on tutorials, we encourage you to visit the HashiCorp Learn guide for Multi-Tenancy with Administrative Partitions. Additional reference details can also be found in the Consul.io documentation.