This is the fourth and final post in the series Building Resilient Infrastructure with Nomad (Part 1, Part 2, Part 3). In this series, we explore how Nomad handles unexpected failures, outages, and routine maintenance of cluster infrastructure, often without operator intervention.

In this post, we’ll explore how Nomad’s design and its use of the Raft consensus algorithm provide resiliency against data loss, and how to recover from outages.

We’ll assume a production deployment with the recommended 3 or 5 Nomad servers per region. For information on deploying a Nomad cluster in production, see Bootstrapping a Nomad Cluster.

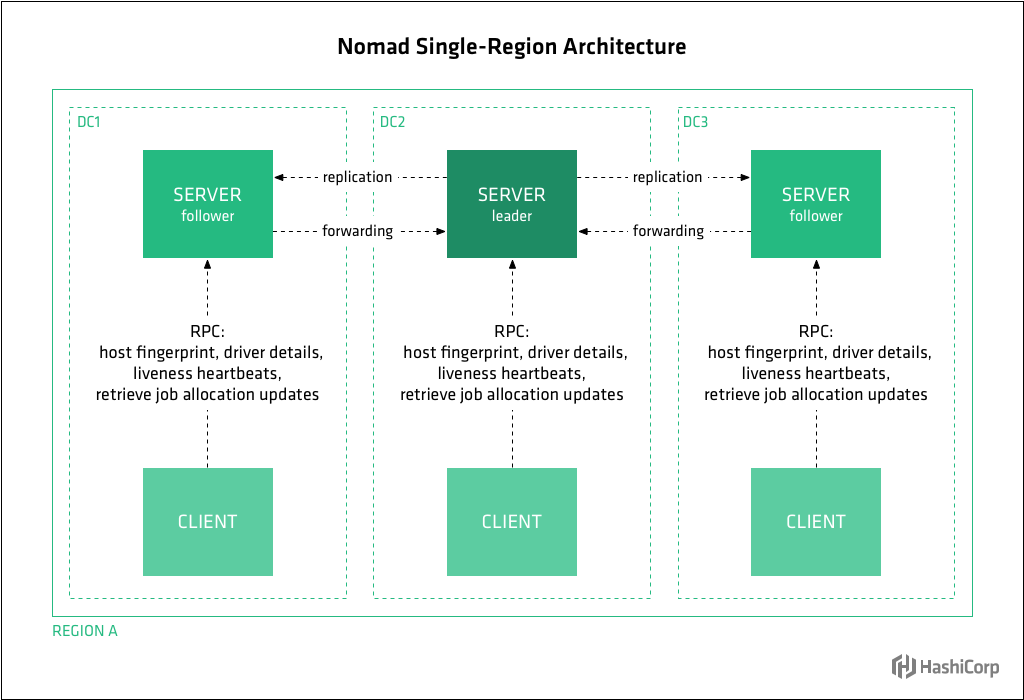

You’ll recall from Part 2 of this series that a Nomad cluster is composed of nodes running the Nomad agent in either server or client mode. Clients are responsible for running tasks, while servers are responsible for managing the cluster. Client nodes make up the majority of the cluster and are very lightweight: they interface with the server nodes and maintain very little state of their own. Each region usually has 3 or 5 server-mode agents and potentially thousands of clients.

Nomad models infrastructure as regions and datacenters. A region may contain multiple datacenters; for example, the “us” region might have “us-west” and “us-east” datacenters. Nomad servers manage state and make scheduling decisions for the region to which they are assigned.

»Nomad and Raft

Nomad uses a consensus protocol based on the Raft algorithm to provide data consistency across the server nodes within a region. Raft nodes are always in one of three states: follower, candidate, or leader. All nodes start out as followers. In this state, nodes can accept log entries from a leader and cast votes. If no entries are received for some time, a node promotes itself to the candidate state. In the candidate state, a node requests votes from its peers. If a candidate receives votes from a majority of nodes, it is promoted to leader. The leader must accept new log entries and replicate them to all of the followers.
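To make the role transitions concrete, here is a minimal Python sketch of one node’s state machine. It is a toy model under simplifying assumptions, not Nomad’s implementation (Nomad uses HashiCorp’s Go Raft library): there is no networking, no log replication, and no real timer, and the names `RaftNode`, `on_election_timeout`, `on_vote_granted`, and `on_message_from_leader` are hypothetical. It only shows the follower, candidate, and leader transitions described above, including the strict-majority rule for winning an election.

```python
from enum import Enum


class Role(Enum):
    FOLLOWER = "follower"
    CANDIDATE = "candidate"
    LEADER = "leader"


class RaftNode:
    """Toy model of Raft role transitions (hypothetical; no I/O, logs, or timers)."""

    def __init__(self, node_id: str, cluster_size: int) -> None:
        self.node_id = node_id
        self.cluster_size = cluster_size
        self.role = Role.FOLLOWER  # every node starts out as a follower
        self.term = 0
        self.votes = 0

    def on_election_timeout(self) -> None:
        # No entries from a leader for a while: become a candidate,
        # start a new term, and vote for ourselves.
        if self.role is not Role.LEADER:
            self.role = Role.CANDIDATE
            self.term += 1
            self.votes = 1

    def on_vote_granted(self) -> None:
        # Votes only matter while we are campaigning.
        if self.role is not Role.CANDIDATE:
            return
        self.votes += 1
        # Winning requires a strict majority of the whole cluster.
        if self.votes > self.cluster_size // 2:
            self.role = Role.LEADER

    def on_message_from_leader(self, term: int) -> None:
        # A leader for our term or a newer one makes us a follower again;
        # messages from older terms are ignored.
        if term >= self.term:
            self.term = term
            self.role = Role.FOLLOWER
            self.votes = 0


if __name__ == "__main__":
    node = RaftNode("server-1", cluster_size=3)
    node.on_election_timeout()  # follower -> candidate (term 1, 1 vote)
    node.on_vote_granted()      # 2 of 3 votes -> leader
    print(node.role, node.term)
```

In a 3-node cluster a candidate needs just one vote from a peer in addition to its own, which is why one failed server does not prevent an election.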

Raft-based consensus is fault-tolerant as long as a majority of nodes is available. Without a majority, it is impossible to process log entries or reason about peer membership.

For example, a Raft cluster of 3 nodes can tolerate 1 node failure, because the 2 remaining servers constitute a majority quorum. A cluster of 5 nodes can tolerate 2 node failures, and so on. The recommended configuration is to run either 3 or 5 Nomad servers per region. This maximizes availability without greatly sacrificing performance. The deployment table below summarizes the potential cluster sizes and the fault tolerance of each.

| Servers | Quorum Size | Failure Tolerance |
|---|---|---|
| 1 | 1 | 0 |
| 2 | 2 | 0 |
| 3 | 2 | 1 |
| 4 | 3 | 1 |
| 5 | 3 | 2 |
| 6 | 4 | 2 |
| 7 | 4 | 3 |
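The quorum size in the table is a strict majority, floor(n / 2) + 1, and the failure tolerance is the number of servers left over. The short Python sketch below (the function names are hypothetical) reproduces the table and makes the pattern visible: adding a fourth or sixth server raises the quorum size without raising the failure tolerance, which is one reason odd sizes such as 3 or 5 are recommended.

```python
def quorum_size(servers: int) -> int:
    # A quorum is a strict majority of the servers: floor(n / 2) + 1.
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    # How many servers can fail while a quorum still remains.
    return servers - quorum_size(servers)


if __name__ == "__main__":
    # Print the same rows as the deployment table.
    print("| Servers | Quorum Size | Failure Tolerance |")
    print("|---|---|---|")
    for n in range(1, 8):
        print(f"| {n} | {quorum_size(n)} | {failure_tolerance(n)} |")
```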

Nomad’s fault tolerance means that there are two types of outages you might experience:

- failure of a minority of the servers within a region, resulting in no loss of quorum

- failure of a majority of the servers within a region, resulting in a loss of quorum

Each scenario has a different recovery procedure.

»Recovering from the failure of a minority of servers (no loss of quorum)

Because Nomad uses Raft, the failure of a minority of servers is transparent to clients. The cluster continues to operate normally: jobs keep running on client nodes, and the remaining servers continue to schedule jobs and monitor the cluster.

However, to protect against data loss if more servers fail, the cluster should be restored to full health by repairing or replacing the failed servers and rejoining them to the cluster. This requires operator intervention, but the process is fairly straightforward.

For each failed server, first try bringing it back online and having it rejoin the cluster with the same IP address as before. If you need to build a new server to replace the failed one, have the new server join the cluster with the failed server’s IP address. Either option returns the cluster to a fully healthy state once all the failed servers have rejoined.

If the replacement server cannot use the same IP address as the one it is replacing, the failed server must be removed from the cluster. Autopilot’s dead server cleanup can do this automatically. Operators can also remove servers manually with the nomad server force-leave command. If that command can’t remove the server, use the nomad operator raft remove-peer command to remove the stale peer on the fly, with no downtime.
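The decision in the two paragraphs above can be captured as a small checklist generator. This Python sketch is illustrative only: `FailedServer` and `recovery_checklist` are hypothetical names, and the function just returns the manual steps described in this post as strings. It does not talk to Nomad or run the commands it mentions, and it deliberately omits command flags, which you should take from the Nomad CLI reference for your version.

```python
from dataclasses import dataclass


@dataclass
class FailedServer:
    name: str              # hypothetical label, e.g. "server-2"
    can_keep_old_ip: bool  # can the repaired or rebuilt server reuse the old IP?


def recovery_checklist(server: FailedServer) -> list[str]:
    """Return the manual recovery steps this post describes for one failed server."""
    if server.can_keep_old_ip:
        # Same IP: the server simply rejoins; no stale peer to clean up.
        return [
            f"bring {server.name} back online (or rebuild it) with its original IP",
            f"have {server.name} rejoin the cluster",
        ]
    # New IP: the old Raft peer entry must also be removed.
    return [
        f"rebuild {server.name} with a new IP and join it to the cluster",
        f"remove the old {server.name} peer (autopilot dead server cleanup, "
        "or nomad server force-leave)",
        "if the stale peer is still listed, run nomad operator raft remove-peer",
    ]


if __name__ == "__main__":
    for failed in [FailedServer("server-2", True), FailedServer("server-3", False)]:
        for step in recovery_checklist(failed):
            print(f"{failed.name}: {step}")
```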

»Recovering from the failure of a majority of servers (loss of quorum)

If a majority of the servers within a region fail, quorum is lost, resulting in a complete outage at the control plane level and possibly some data loss. Fortunately, partial recovery is possible using the data on the remaining servers, although this requires operator intervention. It’s also important to note that a Nomad server outage doesn’t necessarily mean workloads have stopped. If the Nomad clients and their hosts are operational, workloads continue to run while the Nomad servers are without quorum.

Briefly, the recovery procedure involves stopping all remaining servers, creating a raft/peers.json file with entries for all of them, and then restarting them. The cluster should be able to elect a leader once the remaining servers have all been restarted with an identical raft/peers.json configuration. Any new servers you introduce later (for example, to bring the region back to its full server count) can start with completely clean data directories and be joined using the nomad server join command.

In extreme cases, it should be possible to recover with just a single remaining server by starting that single server with itself as the only peer in the raft/peers.json recovery file.
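Because every surviving server must get an identical peers file, generating it from one list can help avoid hand-editing mistakes. This Python sketch assumes the Raft protocol version 3 format described in Nomad’s Outage Recovery guide: a JSON array of objects with `id`, `address`, and `non_voter` fields, where `address` is the server’s RPC address (port 4647 by default). The function name, node IDs, and IP addresses are hypothetical placeholders; take the real values from your servers and check the guide for your Nomad version, since servers using Raft protocol version 2 expect a plain JSON list of addresses instead.

```python
import json


def build_peers_json(servers: dict[str, str]) -> str:
    """Build raft/peers.json content for the surviving servers (hypothetical helper).

    `servers` maps each server's Raft node ID to its RPC address ("ip:port").
    Assumes the Raft protocol version 3 format (id / address / non_voter).
    """
    peers = [
        {"id": node_id, "address": address, "non_voter": False}
        # Sort by ID so every server receives byte-for-byte identical content.
        for node_id, address in sorted(servers.items())
    ]
    return json.dumps(peers, indent=2)


if __name__ == "__main__":
    # Hypothetical IDs and addresses for two surviving servers.
    print(build_peers_json({
        "server-b-id": "10.1.0.2:4647",
        "server-a-id": "10.1.0.1:4647",
    }))
```

For the single-server case, pass a dictionary with just one entry: the result is a one-element array that names the server as its own only peer. Copy the same output to raft/peers.json on each surviving server before restarting them.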

However, using raft/peers.json for recovery can cause uncommitted Raft log entries to be implicitly committed, so this should only be used after an outage where no other option is available to recover a lost server. Make sure you don't have any automated processes that will put the peers file in place on a periodic basis.

See Outage Recovery for complete details on recovering from either type of outage.

»Outages and regions

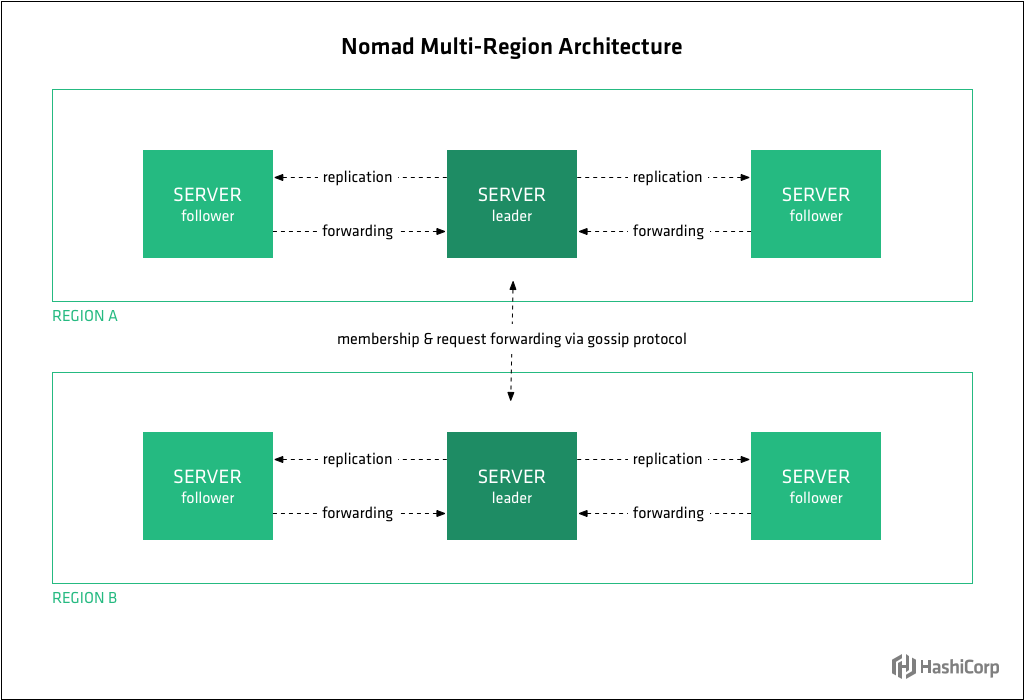

In some cases, for either availability or scalability, you may choose to run multiple regions. Nomad supports federating multiple regions together into a single cluster.

A region typically maps to a large geographic area, for example us or europe, potentially with multiple zones that map to datacenters such as us-west and us-east. Nodes belonging to the same datacenter should be colocated (they should share a local LAN connection).

Regions are the scheduling boundary. They are fully independent of each other and do not share jobs, clients, or state. They are loosely coupled using a gossip protocol over the WAN, which lets users submit jobs to any region or query the state of any region transparently. Requests are forwarded to a server in the appropriate region to be processed, and the results are returned. Data is not replicated between regions, which allows each region to act as a “bulkhead” against failures in other regions.
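The toy Python model below illustrates this forwarding behavior: each region’s server keeps only its own job state, and a request addressed to another region is handed to that region’s server rather than answered from replicated data. `RegionServer`, `federate`, and the `"pending"` status are hypothetical; real Nomad servers discover other regions through WAN gossip and forward requests over RPC, which this sketch replaces with direct method calls.

```python
class RegionServer:
    """Hypothetical stand-in for the servers of one region."""

    def __init__(self, region: str) -> None:
        self.region = region
        self.jobs: dict[str, str] = {}  # state owned by this region only
        self.peers: dict[str, "RegionServer"] = {}  # other regions we can reach

    def federate(self, other: "RegionServer") -> None:
        # Stand-in for WAN gossip: each side learns how to reach the other.
        self.peers[other.region] = other
        other.peers[self.region] = self

    def submit_job(self, region: str, job_id: str) -> str:
        if region != self.region:
            # Forward to the owning region; nothing is stored locally.
            return self.peers[region].submit_job(region, job_id)
        self.jobs[job_id] = "pending"
        return f"{job_id} registered in {self.region}"

    def job_status(self, region: str, job_id: str) -> str:
        if region != self.region:
            return self.peers[region].job_status(region, job_id)
        return self.jobs.get(job_id, "unknown")


if __name__ == "__main__":
    us, europe = RegionServer("us"), RegionServer("europe")
    us.federate(europe)
    # Submitted through a "us" server, but owned and stored by "europe".
    print(us.submit_job("europe", "web"))
    print(us.job_status("europe", "web"), "web" in us.jobs)
```

Because the "us" server never stores the job, losing the "us" servers later would not affect the "europe" job, which mirrors the bulkhead property described above.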

Clients are configured to communicate with the servers in their own region. They use remote procedure calls (RPC) to register themselves, send heartbeats for liveness, wait for new allocations, and update the status of allocations.

Because of Nomad’s two-tier approach, losing several Nomad servers within one datacenter doesn’t necessarily cause a loss of quorum, because servers in other datacenters within the same region also participate in Raft consensus and count toward quorum. If you lose a minority of the Nomad servers within a single region, your cluster will not experience an outage and you won’t have to do any data recovery. Restore your cluster to full health using the procedure described above and, in more detail, in our Outage Guide.
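Here is a quick worked check of that two-tier point as a Python sketch. Since every server in a region is a Raft peer regardless of which datacenter it sits in, what matters after a datacenter outage is how many of the region’s servers remain. The function name and the server placements are hypothetical examples, not a recommended layout.

```python
def quorum_size(servers: int) -> int:
    # Strict majority of all servers in the region.
    return servers // 2 + 1


def survives_datacenter_loss(servers_per_dc: dict[str, int], lost_dc: str) -> bool:
    """True if the region keeps quorum after every server in `lost_dc` fails."""
    total = sum(servers_per_dc.values())  # Raft membership is region-wide
    remaining = total - servers_per_dc[lost_dc]
    return remaining >= quorum_size(total)


if __name__ == "__main__":
    # Hypothetical region: 3 servers in us-west, 2 in us-east (quorum = 3).
    region = {"us-west": 3, "us-east": 2}
    print(survives_datacenter_loss(region, "us-east"))  # 3 remain
    print(survives_datacenter_loss(region, "us-west"))  # only 2 remain
```

With this layout, losing us-east leaves 3 of 5 servers and the region keeps quorum, while losing us-west leaves 2 of 5 and quorum is lost, so the majority-loss recovery procedure applies.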

If a majority of the server nodes within a region fail, quorum is lost, causing a complete outage at the control plane level and possibly some loss of data about jobs running in that region. Other regions in the cluster won’t be affected by the outage: jobs running in other regions continue to run even if they were submitted via Nomad servers in the affected region. In the affected region, as long as the Nomad clients and their hosts are operational, workloads continue to run. The recovery procedure for this scenario is explained briefly above and in our Outage Guide. Once you restore the failed servers and cluster health, Nomad becomes aware of all jobs that are still running and reconciles state.

»Summary

In this fourth and final post in our series on Building Resilient Infrastructure with Nomad (Part 1, Part 2, Part 3), we covered how Nomad’s infrastructure model and its use of the Raft consensus algorithm provide resiliency against data loss, and how to recover from outages. Nomad is designed to handle transient and permanent failures of both clients and servers gracefully, making it easy to run highly reliable infrastructure.