Exposure of secrets such as database passwords, API tokens, and encryption keys can lead to expensive breaches. For reference, the average cost of a data breach in 2025 is $4.4M, according to the Cost of a Data Breach report. Preventing secret exposure needs to be a primary focus for security teams. Verizon's 2025 Data Breach Investigations Report makes this clear, finding that 88% of attacks targeting web applications involved the use of compromised credentials.

While having reactive mitigation and recovery plans (e.g. scans and incident management) in place for security breaches is crucial, proactive always beats reactive in the cybersecurity space.

HashiCorp offers a collection of tools that are geared not only towards fixing secret sprawl, but also towards incorporating security checks directly into your development workflows to prevent secrets from being unintentionally exposed in the first place. In this blog post, we’ll explore four examples of how to proactively prevent secrets from being exposed and end secret sprawl.

»Credential injection and just-in-time (JIT) access

Traditional methods of granting system access generally involve sharing and storing static credentials. This practice increases the risk of compromise: the credentials are typically stored in plaintext in multiple locations, shared between operators, and difficult to revoke because day-to-day operations depend on them.

HashiCorp Boundary solves this problem by requiring the user to keep track of only their own personal, non-privileged credentials. Once the user is authenticated to Boundary, this secret-protecting workflow uses HashiCorp Vault's dynamic credentials for session authentication. Ideally, the dynamic credentials are scoped to a given task (e.g., read-only access to a particular database). This scoping can be accomplished with less overall administrative burden than traditional alternatives like issuing multiple long-lived credentials to the user.

Rather than having the user enter these credentials when they connect to targets, Boundary admins can create a passwordless experience by leveraging Boundary’s credential injection functionality. Credential injection automates the insertion of credentials directly into remote sessions, eliminating the need for users to handle sensitive information such as administrative credentials with elevated privileges.

With credential injection, the user never sees the credentials required to authenticate to the target. The Boundary worker node does both the session establishment and authentication on their behalf, so with this feature, you can prevent secret exposure (and secret sprawl) from a manual credential handling standpoint.

Here is an example of how the credential injection process works by using the Boundary CLI to connect to a PostgreSQL database:

First, the user authenticates to Boundary.

    > boundary authenticate
    Please enter the login name (it will be hidden):
    Please enter the password (it will be hidden):

    Authentication information:
      Account ID:      acctpw_AbcdeFGHIj
      Auth Method ID:  ampw_oM7bMLuOPv
      Expiration Time: Mon, 19 May 2025 13:37:06 EDT
      User ID:         u_abcdefg0ab

    The token name "default" was successfully stored in the chosen keyring and is not displayed here.

Next, the user requests a connection to the database target.

    > boundary connect postgres hc-andre-faria-db.boundary.lab -dbname boundary_demo

The Boundary controller fetches a single-use set of credentials from Vault’s credential store with a limited time-to-live (TTL). Boundary then injects the credentials directly into the user session.

Lastly, the user establishes a connection to the target.

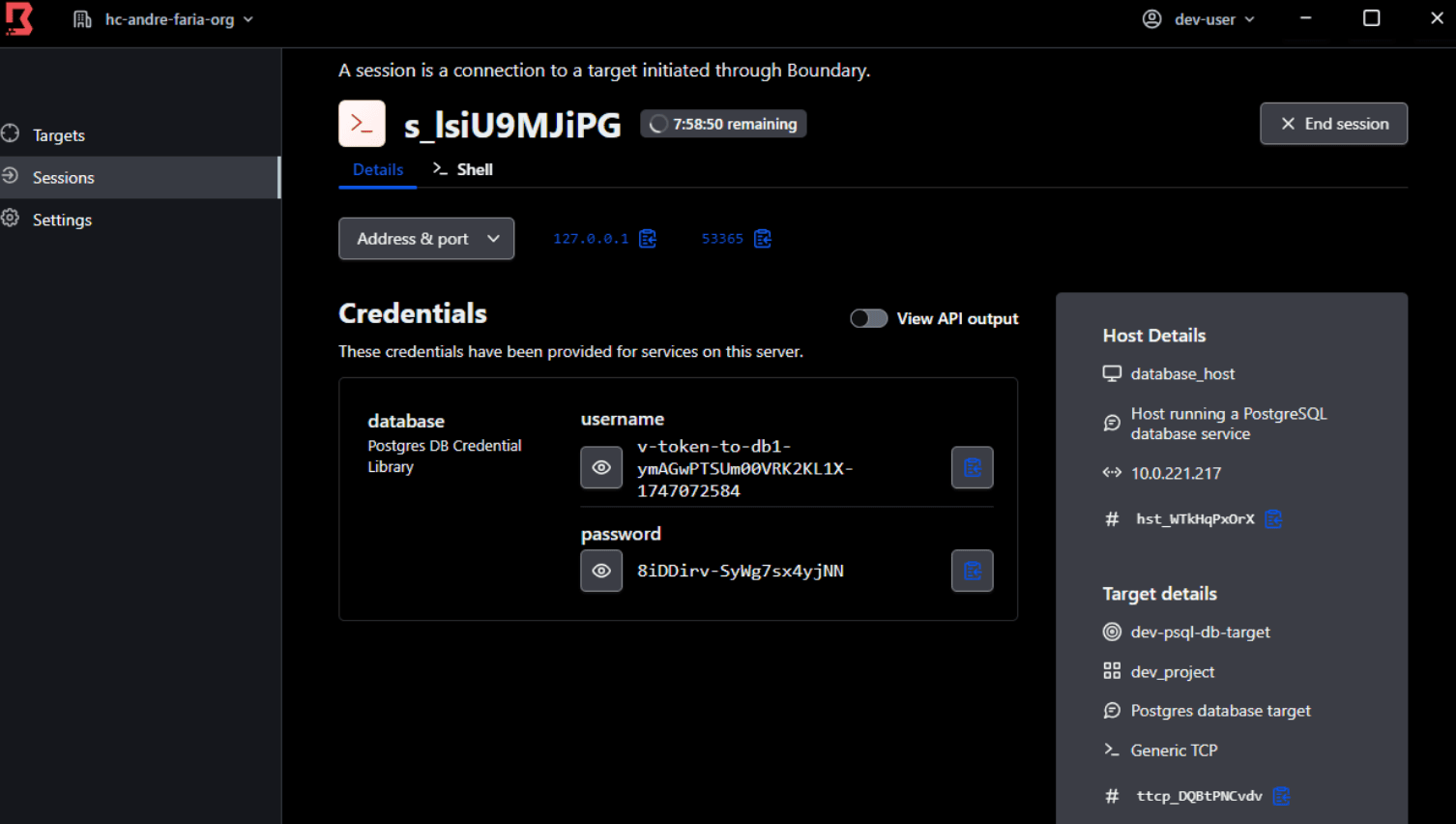

The session was established using just-in-time (JIT), dynamically generated credentials via Boundary’s Vault credential stores capability. The image below shows how you can view the brokered Vault credentials for the PostgreSQL session above through the Boundary Desktop Client.

These JIT credentials are short-lived and valid for this session only. This ensures credentials are available only when needed and are automatically revoked after use, greatly reducing the risk of credential exposure.

This Boundary sessions view shows session details such as username, host details, password, and TTL near the session name. This example currently has 7:58:50 remaining.
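For readers who manage Boundary with Terraform, the wiring between a target and Vault might look roughly like the sketch below. It is a minimal illustration rather than the configuration used in this walkthrough: the variables, resource names, target address, and the Vault role path `database/creds/readonly` are all assumptions, and the `boundary` provider configuration is omitted. The sketch attaches the credential library as a brokered credential source; the provider also exposes `injected_application_credential_source_ids` for target types that support credential injection.

```hcl
terraform {
  required_providers {
    boundary = {
      source = "hashicorp/boundary"
    }
  }
}

# Hypothetical inputs; none of these names come from the walkthrough above.
variable "project_scope_id" { type = string }
variable "vault_addr" { type = string }
variable "vault_token" {
  type      = string
  sensitive = true
}

# Credential store: tells Boundary how to reach Vault.
resource "boundary_credential_store_vault" "vault" {
  name     = "vault-store"
  scope_id = var.project_scope_id
  address  = var.vault_addr
  token    = var.vault_token
}

# Credential library: the Vault path that returns short-lived database credentials.
resource "boundary_credential_library_vault" "db_readonly" {
  name                = "postgres-readonly"
  credential_store_id = boundary_credential_store_vault.vault.id
  path                = "database/creds/readonly" # assumed Vault role path
  http_method         = "GET"
  credential_type     = "username_password"
}

# Target: the database endpoint users connect to through Boundary.
resource "boundary_target" "postgres" {
  name                = "postgres-demo"
  type                = "tcp"
  scope_id            = var.project_scope_id
  address             = "db.example.internal" # placeholder address
  default_port        = 5432
  session_max_seconds = 28800 # sessions end after at most 8 hours

  brokered_credential_source_ids = [
    boundary_credential_library_vault.db_readonly.id
  ]
}
```

With a setup along these lines, the user only ever authenticates to Boundary; the database credentials are requested from Vault per session and expire with it.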

»Preventative secret scanners

You can’t secure your secrets (credentials, tokens, keys, etc.) if you don’t know where they are. It’s up to security and platform teams to find tools that can discover and remediate secret sprawl by scanning for unmanaged and leaked credentials across a broad set of data sources. These tools need to find hard-coded, plaintext secrets not just in GitHub repos, but also in places like Slack, Jira, and Confluence. The best tools don’t just help teams take the appropriate remediation path; they also save developers from accidentally exposing secrets in the first place.

HCP Vault Radar is a great example of a secret scanner that covers all three use cases: discovery, remediation, and prevention. Vault Radar can find secrets without a lot of false positives, and it then gives you a push-button workflow to copy secrets into Vault Enterprise or HCP Vault Dedicated for secure storage and central management. It can also proactively prevent secrets from making their way into your CI/CD pipelines in the first place.

By leveraging pre-receive hooks, HCP Vault Radar allows you to implement controls that scan each commit to your version control system, preventing commits with secrets in plaintext from being added to your Git history.

Shifting left even further, Vault Radar users can also make use of pre-commit hooks, which scan code locally on the developer’s machine for hard-coded, sensitive information before any changes are committed to version control. The same scans can also run in CI/CD pipelines.

Terraform run tasks that integrate HCP Vault Radar into HCP Terraform can also be set up to scan the pre-plan or pre-apply run stages for potential plaintext secrets, preventing developers from accidentally deploying code with sensitive information (you can find an example Terraform module for this here).

Using the previous module as an example, the following run task was created within HCP Terraform:

With the run task created and configured to execute during the pre-plan stage of the Terraform run, I’ll now attempt to run a terraform plan with the following hardcoded API key in the main.tf file:

    # Token to be picked up by HCP Vault Radar
    provider "pinecone" {
      api_key = "fdfa439d-99ce-4f58-88bb-b4b04e7775d0"
    }

The run will quickly fail with the following error message during the pre-plan stage:

    PS C:\Dev\terraform-hcp-vault-radar-runtask\examples\basic\sample> terraform plan
    Running plan in HCP Terraform. Output will stream here. Pressing Ctrl-C
    will stop streaming the logs, but will not stop the plan running remotely.

    Preparing the remote plan...

    To view this run in a browser, visit:
    https://app.terraform.io/app/acfaria-hashicorp/terraform-shell-radar-runtask-h820/runs/run-KmrEXD9YjqVwMArG

    Pre-plan Tasks:

    All tasks completed! 0 passed, 1 failed (4s elapsed)

    │ hcp-radar-runtask-h820 ⸺ Failed (Mandatory)
    │ HashiCorp Vault Radar scan complete, 1 secret found!
    │ Details: https://vault-radar-portal.cloud.hashicorp.com
    │
    Error: the run failed because the run task, hcp-radar-runtask-h820, is required to succeed
    │
    │ Overall Result: Failed

    ------------------------------------------------------------------------

    │ Error: Task Stage failed.

The error message can also be visualized within the HCP Terraform UI:

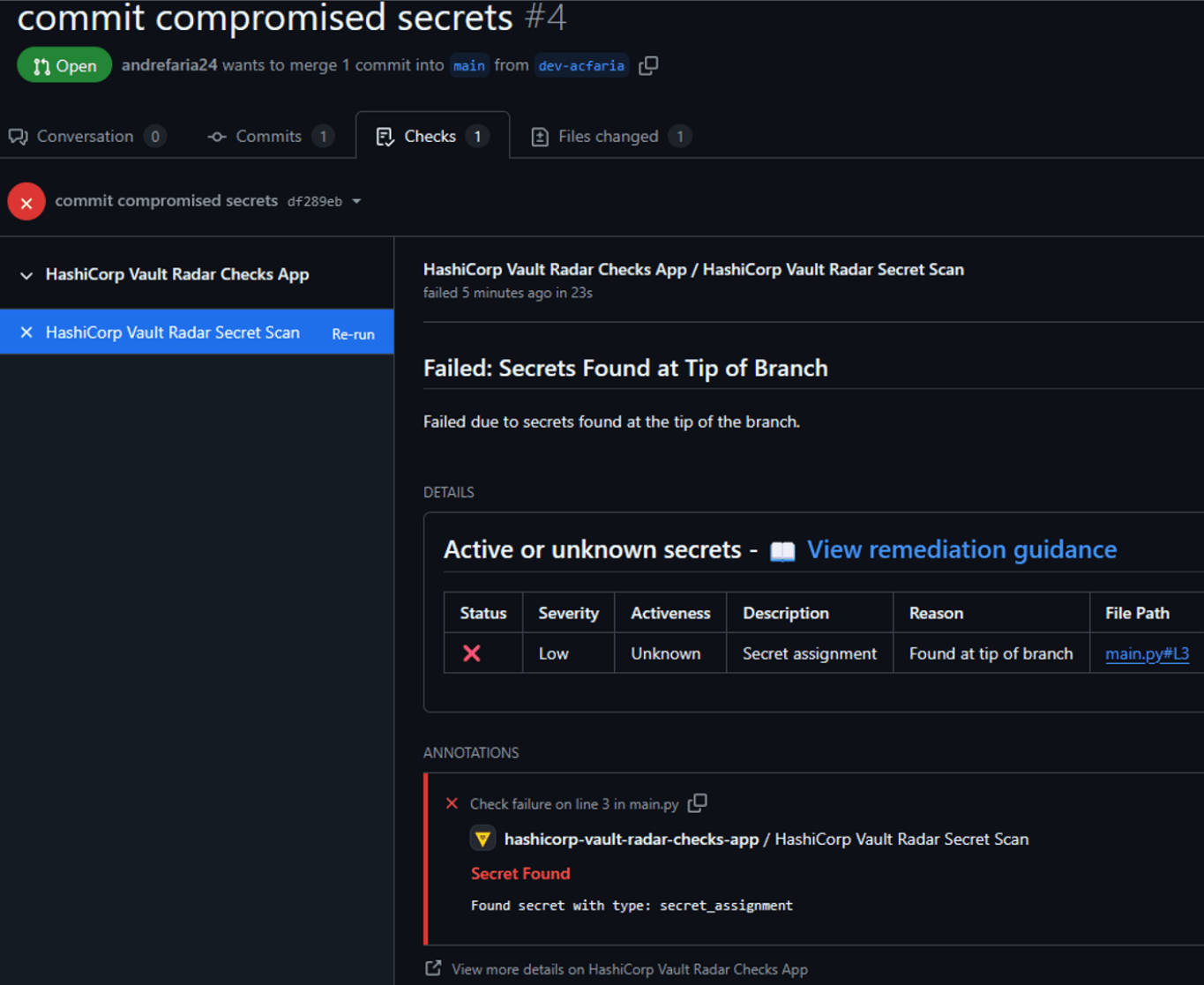
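A run task like the one that failed this run can also be declared as code with the `tfe` provider instead of through the UI. The following is a minimal sketch, not the example module's actual contents: the variable names and the task name are hypothetical, the run task URL and HMAC key would come from wherever the run task service is hosted, and the `stages` argument assumes a reasonably recent version of the provider.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# Hypothetical inputs for this sketch.
variable "organization" { type = string }
variable "workspace_id" { type = string }
variable "run_task_url" { type = string }
variable "run_task_hmac_key" {
  type      = string
  sensitive = true
}

# Registers the scanner endpoint as a run task for the organization.
resource "tfe_organization_run_task" "radar" {
  organization = var.organization
  name         = "hcp-radar-runtask"
  url          = var.run_task_url
  hmac_key     = var.run_task_hmac_key
  enabled      = true
}

# Attaches the run task to a workspace. "mandatory" means a failed scan
# fails the run, as in the output shown above.
resource "tfe_workspace_run_task" "radar_pre_plan" {
  workspace_id      = var.workspace_id
  task_id           = tfe_organization_run_task.radar.id
  enforcement_level = "mandatory"
  stages            = ["pre_plan"]
}
```

Setting `enforcement_level` to `"advisory"` instead would report findings without blocking the run, which can be a gentler way to roll the check out.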

For GitHub users, the HashiCorp Vault Radar Checks GitHub App can also be integrated with all, or select, repositories within your organization. The application will showcase any secrets found during a PR, along with recommended remediation actions, as shown in the following images:

»Secure-by-design infrastructure as code modules

One of the main benefits of adopting infrastructure as code (IaC) practices is that automating infrastructure provisioning and management reduces the risk of human error. By templatizing and versioning your infrastructure automation configuration, you’re able to prevent secret exposure every time you provision computing resources.

In IaC tools such as HashiCorp Terraform, this security hardening process is done using modules. Platform teams, operations, and security stakeholders can work together to build secure settings into modules so that errors like secret exposure never happen (see how Morgan Stanley built their set of hardened modules). Organizations can then use Terraform to require the use of secure-by-design modules when provisioning production environments.

There are numerous ways to build a module that prevents secrets exposure, but the best way is to integrate your modules with your secrets management systems. For example, you would place variable fields in a module that call on a secrets manager like HashiCorp Vault to retrieve any secrets, ensuring they are not hard-coded within the configuration, or exposed to developers.

Here is an example Terraform configuration using Vault’s KV v2 secrets engine within a Terraform module to provide the credentials needed to create an Amazon Aurora PostgreSQL database instance:

    resource "aws_rds_cluster" "test-postgresql-cluster" {
      cluster_identifier = "test-db"
      engine             = "aurora-postgresql"
      availability_zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
      master_username    = data.vault_generic_secret.postgres_db_creds.data["postgres_master_username"]
      master_password    = data.vault_generic_secret.postgres_db_creds.data["postgres_master_password"]
    }

    resource "aws_rds_cluster_instance" "test-db" {
      identifier          = "test-db-instance-1"
      cluster_identifier  = aws_rds_cluster.test-postgresql-cluster.id
      instance_class      = "db.serverless"
      engine              = aws_rds_cluster.test-postgresql-cluster.engine
      engine_version      = aws_rds_cluster.test-postgresql-cluster.engine_version
      publicly_accessible = true
      promotion_tier      = 1
    }

    data "vault_generic_secret" "postgres_db_creds" {
      path = "kv-v2/aws/postgres"
    }

This integration works by using Terraform’s Vault provider within the Terraform module, as such:

    terraform {
      required_providers {
        vault = {
          source  = "hashicorp/vault"
          version = "5.3.0"
        }
      }
    }

    provider "vault" {
      # Configuration options
    }

Note: While the above example uses a static secret, the goal for any security organization should be to migrate as many systems to using dynamic secrets as possible.
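As a rough illustration of what moving to dynamic secrets can involve, the sketch below configures Vault's database secrets engine so that it generates short-lived PostgreSQL users on request. It is a minimal example under stated assumptions, not part of the module above: the variable names, the `readonly` role, the SQL statements, and the TTL values are all hypothetical, and the `vault` provider configuration is omitted.

```hcl
# Hypothetical inputs for this sketch.
variable "db_host" { type = string }
variable "vault_db_admin_username" { type = string }
variable "vault_db_admin_password" {
  type      = string
  sensitive = true
}

# Mounts the database secrets engine.
resource "vault_mount" "database" {
  path = "database"
  type = "database"
}

# Tells Vault how to reach PostgreSQL with an account allowed to create users.
resource "vault_database_secret_backend_connection" "postgres" {
  backend       = vault_mount.database.path
  name          = "postgres"
  allowed_roles = ["readonly"]

  postgresql {
    connection_url = "postgresql://{{username}}:{{password}}@${var.db_host}:5432/postgres"
    username       = var.vault_db_admin_username
    password       = var.vault_db_admin_password
  }
}

# Each read of database/creds/readonly creates a new database user
# that expires when its lease ends.
resource "vault_database_secret_backend_role" "readonly" {
  backend = vault_mount.database.path
  name    = "readonly"
  db_name = vault_database_secret_backend_connection.postgres.name

  creation_statements = [
    "CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}';",
    "GRANT SELECT ON ALL TABLES IN SCHEMA public TO \"{{name}}\";",
  ]

  default_ttl = 3600  # seconds
  max_ttl     = 14400 # seconds
}
```

Consumers then request credentials from `database/creds/readonly` whenever they need them, so there is no long-lived password to leak or rotate by hand.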

Keep in mind that values read through a data source such as `vault_generic_secret` are still recorded in Terraform state. To keep secrets out of state, Terraform users can switch to ephemeral resources: version 5.0 of the Vault provider introduced support for them, and they are available in Terraform 1.10 and later.

In addition to secure modules, there’s another failsafe guardrail you can put in place to prevent secret exposure: policy as code. Sentinel, the policy-as-code framework available in Terraform and Vault, is the mechanism used to require the use of secure-by-design modules.

»Workload identity tokens

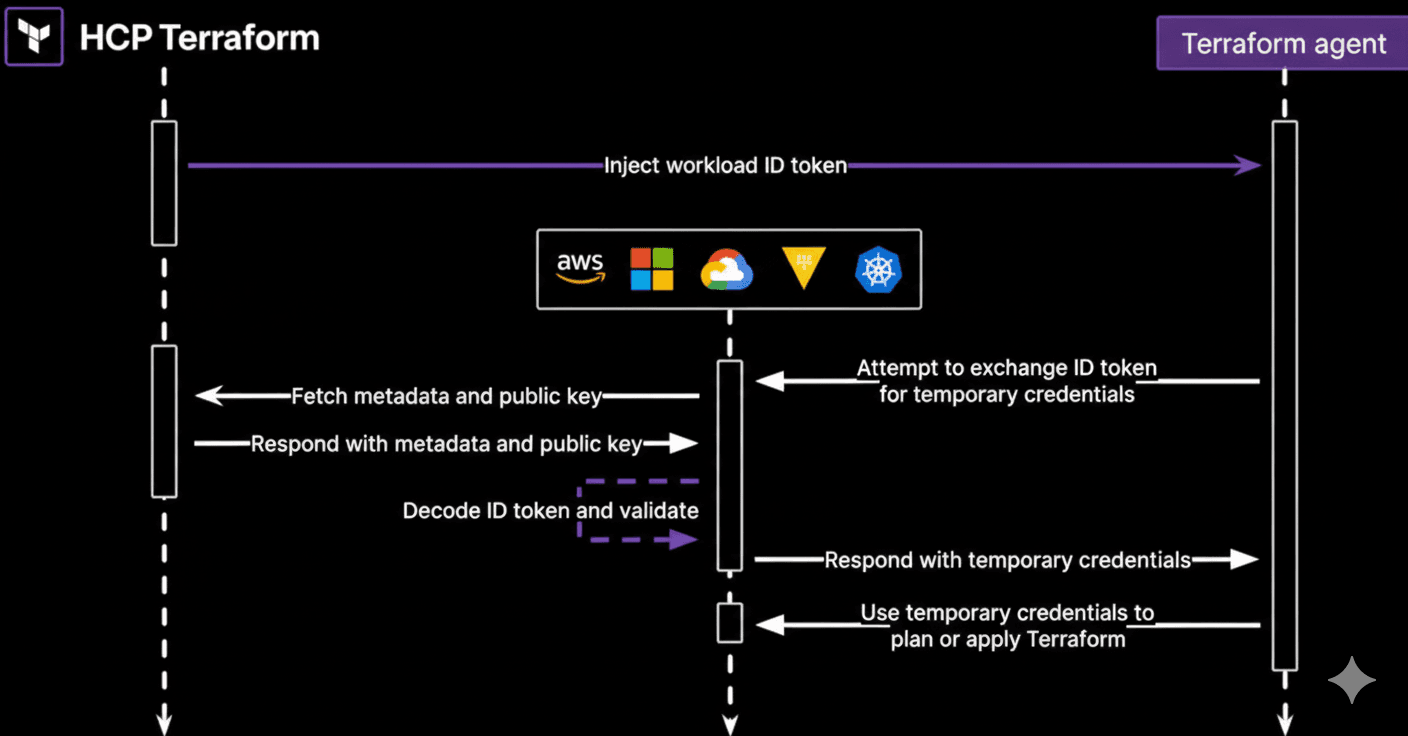

Another security tactic that’s becoming mainstream is workload identity, which some cloud providers call workload identity federation (WIF). This technique uses workload identity tokens to connect infrastructure provisioning systems to cloud providers without storing any credentials in the process. The token’s TTL is very short, and the token is used for only one deployment. For each deployment, the system auto-generates a brand new token. This makes it much harder for the credential to be found or exposed within its short lifespan.

Dynamic provider credentials, a workload identity feature in Terraform Enterprise and HCP Terraform, give you this workflow. This authentication model allows users to create JIT credentials for all major cloud vendors’ official Terraform providers, as well as for HashiCorp Vault.

The use of dynamic provider credentials within your workflow reduces the operational burden and security risks that are associated with managing static, long-lived credentials, eliminating the need to manually manage and rotate credentials across your organization. It also enables your teams to leverage cloud platforms’ authentication and authorization tool sets to define permissions based on specific metadata.

Dynamic provider credentials build on the OpenID Connect (OIDC) standard for workload identity. Terraform Enterprise / HCP Terraform is first set up as a trusted identity provider with the desired cloud platform(s) and/or Vault. Then, a signed identity token is generated for each workload and exchanged for temporary credentials, which are injected into the Terraform agent’s run environment.

Here is a more detailed model of the dynamic provider credentials process:

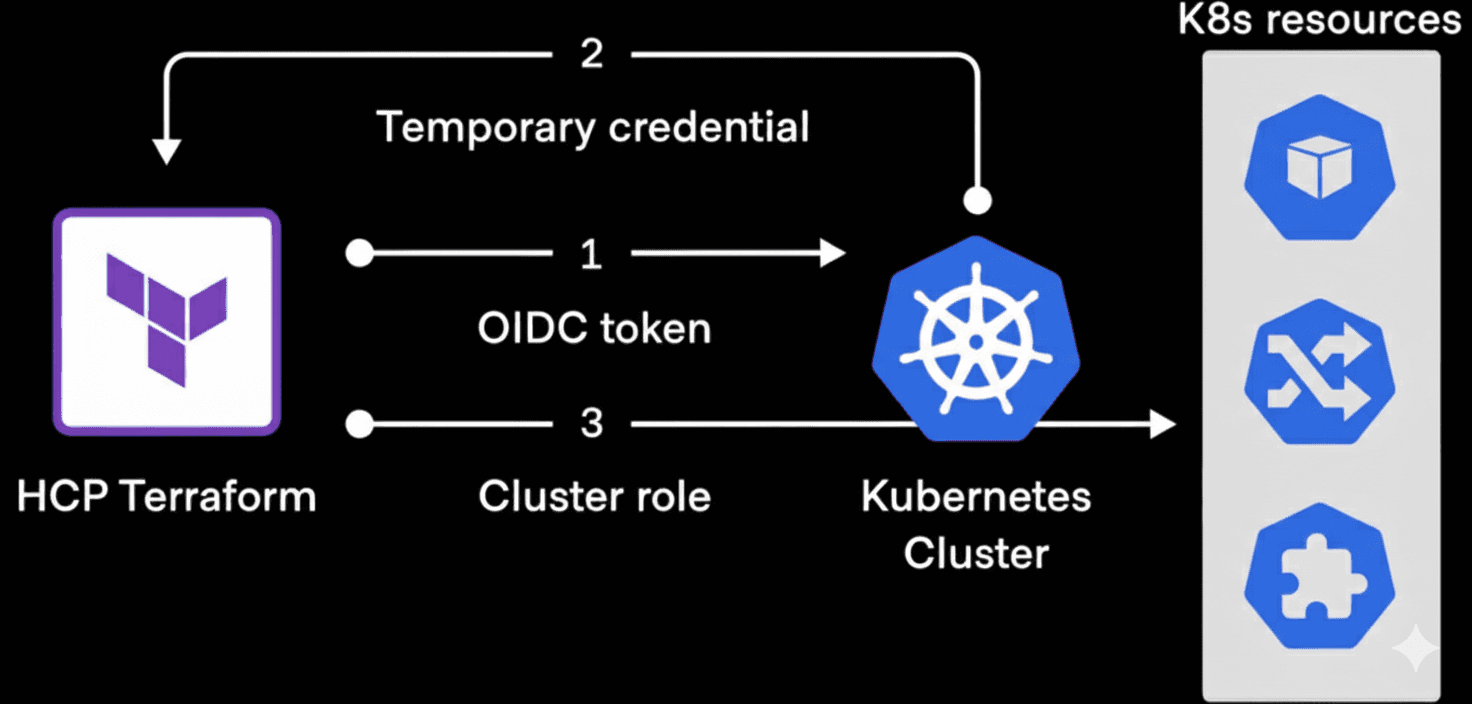
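To make the trust setup more concrete, here is a sketch of how the AWS side of dynamic provider credentials might be configured with Terraform. It assumes HCP Terraform at `app.terraform.io`, the default audience `aws.workload.identity`, and hypothetical variable and resource names; provider configuration and the role's permission policies are omitted.

```hcl
# Hypothetical inputs for this sketch.
variable "organization" { type = string }
variable "project" { type = string }
variable "workspace" { type = string }
variable "workspace_id" { type = string }

# Fetches the TLS certificate so AWS can pin the OIDC issuer.
data "tls_certificate" "hcp_terraform" {
  url = "https://app.terraform.io"
}

# Registers HCP Terraform as a trusted OIDC identity provider in AWS.
resource "aws_iam_openid_connect_provider" "hcp_terraform" {
  url             = data.tls_certificate.hcp_terraform.url
  client_id_list  = ["aws.workload.identity"]
  thumbprint_list = [data.tls_certificate.hcp_terraform.certificates[0].sha1_fingerprint]
}

# Only tokens issued for this organization, project, and workspace may assume the role.
data "aws_iam_policy_document" "trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [aws_iam_openid_connect_provider.hcp_terraform.arn]
    }

    condition {
      test     = "StringEquals"
      variable = "app.terraform.io:aud"
      values   = ["aws.workload.identity"]
    }

    condition {
      test     = "StringLike"
      variable = "app.terraform.io:sub"
      values   = ["organization:${var.organization}:project:${var.project}:workspace:${var.workspace}:run_phase:*"]
    }
  }
}

resource "aws_iam_role" "hcp_terraform" {
  name               = "hcp-terraform-dynamic-credentials"
  assume_role_policy = data.aws_iam_policy_document.trust.json
}

# Workspace environment variables that switch on dynamic credentials for the AWS provider.
resource "tfe_variable" "enable_aws_auth" {
  workspace_id = var.workspace_id
  key          = "TFC_AWS_PROVIDER_AUTH"
  value        = "true"
  category     = "env"
}

resource "tfe_variable" "aws_role_arn" {
  workspace_id = var.workspace_id
  key          = "TFC_AWS_RUN_ROLE_ARN"
  value        = aws_iam_role.hcp_terraform.arn
  category     = "env"
}
```

The `sub` condition is where the "permissions based on specific metadata" idea shows up: narrowing the pattern (for example, to `run_phase:apply`) limits which runs can obtain credentials for this role.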

For the Kubernetes and Helm providers, Terraform’s dynamic provider credentials can also be used for native JIT authentication. Terraform Enterprise / HCP Terraform’s workload identity token is exchanged for a temporary auth token, and the Terraform workload is then mapped to a Kubernetes cluster role as per the following diagram:

Dynamic provider credentials are also officially supported on cloud managed services such as Amazon Elastic Kubernetes Service (EKS) and Google Kubernetes Engine (GKE).

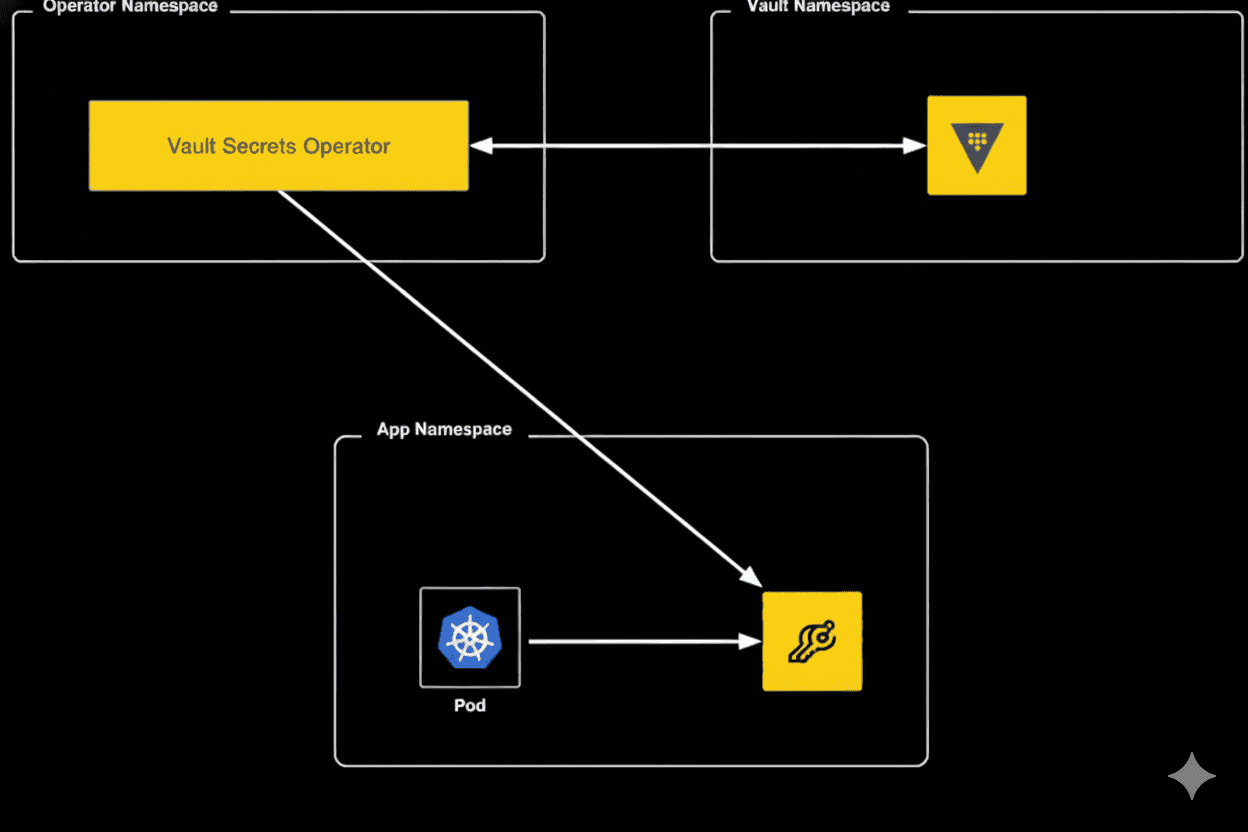
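The mapping between a Terraform workload and a cluster role could be expressed along the following lines. Treat this as a sketch under several assumptions: the cluster is already configured to trust HCP Terraform as an OIDC issuer, its groups claim is mapped to the organization name in the token, the audience value `kubernetes` matches the cluster's OIDC client ID, and the built-in `edit` ClusterRole is an appropriate level of access. The variable and resource names are hypothetical.

```hcl
# Hypothetical inputs for this sketch.
variable "workspace_id" { type = string }
variable "organization" { type = string }

# Workspace environment variables that switch on dynamic credentials
# for the Kubernetes and Helm providers.
resource "tfe_variable" "enable_k8s_auth" {
  workspace_id = var.workspace_id
  key          = "TFC_KUBERNETES_PROVIDER_AUTH"
  value        = "true"
  category     = "env"
}

resource "tfe_variable" "k8s_audience" {
  workspace_id = var.workspace_id
  key          = "TFC_KUBERNETES_WORKLOAD_IDENTITY_AUDIENCE"
  value        = "kubernetes" # assumed; must match the cluster's OIDC client ID
  category     = "env"
}

# Binds identities from the organization to a cluster role.
# Assumes the cluster maps the token's organization claim to a group.
resource "kubernetes_cluster_role_binding_v1" "hcp_terraform" {
  metadata {
    name = "hcp-terraform-oidc"
  }

  role_ref {
    api_group = "rbac.authorization.k8s.io"
    kind      = "ClusterRole"
    name      = "edit"
  }

  subject {
    api_group = "rbac.authorization.k8s.io"
    kind      = "Group"
    name      = var.organization
  }
}
```

Binding to a group keeps the example short; a stricter setup might bind individual workspaces or run phases instead, depending on how the cluster maps token claims to users.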

»Bonus: Vault Secrets Operator

If HashiCorp Vault is being used within your organization, Kubernetes native secrets can also be protected by using the Vault Secrets Operator (VSO), without requiring developers to be proficient with, or interact with, Vault at all. This is possible because the Vault Secrets Operator is a Kubernetes operator that syncs secrets from Vault into Kubernetes native secrets and keeps them up to date, allowing Vault to manage the secrets seamlessly on the backend. This ensures secrets are managed from a central, secure location rather than being scattered throughout various environments, greatly reducing exposure risk.

The Vault Secrets Operator syncs secrets from Vault into a specified Kubernetes namespace. Applications only have access to the secrets within that given namespace. The secrets are still managed by Vault, even though they are accessed via the standard method within Kubernetes.

The Vault Secrets Operator supports synchronizing a wide range of Vault secrets such as dynamic database and cloud credentials, TLS certificates, static key-values, etc.
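As an illustration, a static key-value sync could be declared from Terraform by applying one of the operator's custom resources through the Kubernetes provider. This is a minimal sketch with several assumptions: the operator and its CRDs are already installed in the cluster, a `VaultAuth` resource named `default` exists in the `app` namespace, and the mount, path, and secret names are placeholders rather than values from a real environment.

```hcl
# Asks the Vault Secrets Operator to sync one KV v2 secret into a
# Kubernetes Secret in the "app" namespace.
resource "kubernetes_manifest" "postgres_creds" {
  manifest = {
    apiVersion = "secrets.hashicorp.com/v1beta1"
    kind       = "VaultStaticSecret"

    metadata = {
      name      = "postgres-creds"
      namespace = "app" # assumed namespace
    }

    spec = {
      vaultAuthRef = "default" # assumed VaultAuth resource in the same namespace
      mount        = "kv-v2"
      type         = "kv-v2"
      path         = "aws/postgres"
      refreshAfter = "60s" # how often the operator checks Vault for changes

      destination = {
        create = true
        name   = "postgres-creds" # Kubernetes Secret that applications consume
      }
    }
  }
}
```

Applications in the namespace then read the `postgres-creds` Secret the usual Kubernetes way, while the source of truth stays in Vault.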

»The bigger picture

A proactive approach to preventing secret exposure is crucial for any modern business that wants to keep its digital assets and customer information secure. These were only a few examples of how HashiCorp products such as HCP Vault Radar, Boundary, Vault, and Terraform can provide you with robust mechanisms to keep your business proactive in security. To learn more about these solutions, and other HashiCorp products, visit the HashiCorp Developer website.

HashiCorp products are built to work together to provide a larger transformational strategy for enterprises. If your organization is interested in a more modern, holistic approach to security, governance, and compliance, share our solution brief with your colleagues: Securing and governing hybrid and multi-cloud at scale.

If you’re having trouble convincing your team or leadership why prevention of secret sprawl and exposure is worth the investment, send them a copy of our eBook on the costs of secret sprawl.

This blog post was published in June 2025 and updated October 2025.