HashiCorp Consul is a service mesh solution that provides service discovery, runtime configuration, and service segmentation for microservice applications and infrastructure. Consul supports registering and discovering services that are "internal" to your infrastructure as well as "external" ones, such as those provided by third-party SaaS platforms and other environments where it is not possible to run the Consul agent directly.

This blog post explains how to work with external services in Consul and how to use Consul ESM (External Service Monitor) to enable health checks on those services. We will cover:

- Glossary of key terms for this topic

- Internal service registration and health checks

- External service registration and health checks

- Pull vs. push health checking

- Using Consul ESM to monitor the health of external services

All of the examples in this post use Consul agent version 1.2.1, running locally in -dev mode with the Consul web UI enabled (-ui) and with the -enable-script-checks flag set to allow a simple ping-based health check. We also set the node name to web, rather than the default (the machine's hostname), to make the examples clearer.

$ consul agent -dev -enable-script-checks -node=web -ui

With the Consul dev agent running, the examples in this post use curl to interact with Consul's HTTP API, as well as the Consul web UI, available at http://127.0.0.1:8500.
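To avoid retyping the agent address in every request, the curl calls in this post could be wrapped in a small shell helper. The sketch below is our own convenience, not part of Consul: consul_api is a hypothetical function name, and it reads the CONSUL_HTTP_ADDR environment variable (the same variable the Consul CLI uses), falling back to the dev agent's 127.0.0.1:8500.

```shell
#!/usr/bin/env bash
# Hypothetical convenience wrapper around Consul's HTTP API (not part of Consul).
# Usage: consul_api METHOD PATH [DATA_FILE]
#   PATH is relative to /v1, e.g. catalog/service/web
consul_api() {
  local method="$1" path="$2" data_file="${3:-}"
  # Same variable the Consul CLI reads; default to the local dev agent.
  local addr="${CONSUL_HTTP_ADDR:-127.0.0.1:8500}"
  # Accept the address with or without a scheme.
  case "$addr" in
    http://*|https://*) ;;
    *) addr="http://$addr" ;;
  esac
  if [ -n "$data_file" ]; then
    # curl's @file syntax sends the file contents as the request body.
    curl --silent --show-error --request "$method" --data "@$data_file" "$addr/v1/$path"
  else
    curl --silent --show-error --request "$method" "$addr/v1/$path"
  fi
}
```

For example, consul_api GET catalog/service/web issues the same request as the catalog query used later in this post, and consul_api PUT agent/service/register web.json performs the same registration.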

»Glossary of key terms for this topic

- Agent: A long-running daemon that runs on every member of the Consul cluster, in either client or server mode.

- Node: A node represents the "physical" machine on which an agent is running. This could be a bare metal device, VM, or container.

- Service: A service is an application or process that is registered in Consul's catalog for discovery. Services can be internal (inside your datacenter) or external (like an RDS cluster) and may optionally have health checks associated with them.

- Check: A check is a locally run command or operation that returns the status of the object to which it is attached. Checks may be attached to nodes and services.

- Catalog: The catalog is the registry of all registered nodes, services, and checks.

»Internal service registration and health checks

First, we'll take a look at how to register internal services. In the context of Consul, internal services are those provided by nodes (machines) where the Consul agent can be run directly.

Internal services are registered via service definitions. Service definitions can be supplied by configuration files loaded when the Consul agent is started, or via the local HTTP API endpoint at /agent/service/register.

We'll register an example internal web service with the following configuration, web.json:

{
  "id": "web1",
  "name": "web",
  "tags": ["rails"],
  "port": 80,
  "check": {
    "name": "ping check",
    "args": ["ping", "-c1", "www.google.com"],
    "interval": "30s",
    "status": "passing"
  }
}

This example web service has the unique ID web1 and the logical name web, runs on port 80, and has one health check.
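Service definitions can also come from configuration files, the other route mentioned above. A minimal sketch, assuming a config directory named ./consul.d (any directory passed to -config-dir works): when loaded from a file, the same definition is wrapped in a top-level "service" key. write_web_service_config is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the web service definition as an agent config file.
# Config files wrap the definition in a top-level "service" key, unlike the
# body sent to /agent/service/register.
write_web_service_config() {
  local dir="$1"
  mkdir -p "$dir"
  cat > "$dir/web.json" <<'EOF'
{
  "service": {
    "id": "web1",
    "name": "web",
    "port": 80,
    "check": {
      "name": "ping check",
      "args": ["ping", "-c1", "www.google.com"],
      "interval": "30s",
      "status": "passing"
    }
  }
}
EOF
}

# Example (not run here):
#   write_web_service_config ./consul.d
#   consul agent -dev -enable-script-checks -node=web -ui -config-dir=./consul.d
```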

Register the example web service by calling the HTTP API with a PUT request:

$ curl --request PUT --data @web.json localhost:8500/v1/agent/service/register
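The plain curl call above prints nothing useful on success, so a malformed web.json is easy to miss. This sketch, built around a hypothetical register_agent_service function, checks the HTTP status code with curl's --write-out option; it assumes CONSUL_HTTP_ADDR, if set, is a plain host:port without a scheme.

```shell
#!/usr/bin/env bash
# Hypothetical helper: register a service definition file with the local agent
# and report a failure if the agent does not answer HTTP 200.
# Assumes CONSUL_HTTP_ADDR, if set, is a plain host:port (no scheme).
register_agent_service() {
  local def_file="$1"
  local addr="${CONSUL_HTTP_ADDR:-127.0.0.1:8500}"
  local status
  # --write-out prints only the status code; any response body is discarded.
  status="$(curl --silent --output /dev/null --write-out '%{http_code}' \
    --request PUT --data "@$def_file" "http://$addr/v1/agent/service/register")"
  if [ "$status" != "200" ]; then
    echo "registering $def_file failed with HTTP status $status" >&2
    return 1
  fi
  echo "registered $def_file"
}

# Example (not run here):
#   register_agent_service web.json
```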

To verify that our example web service has been registered, query the /catalog/service/:service endpoint:

$ curl localhost:8500/v1/catalog/service/web

[
  {
    "ID": "a2ebf70e-f912-54b5-2354-c65e2a2808de",
    "Node": "web",
    "Address": "127.0.0.1",
    "Datacenter": "dc1",
    "TaggedAddresses": {
      "lan": "127.0.0.1",
      "wan": "127.0.0.1"
    },
    "NodeMeta": {
      "consul-network-segment": ""
    },
    "ServiceID": "web1",
    "ServiceName": "web",
    "ServiceAddress": "",
    "ServiceMeta": {},
    "ServicePort": 80,
    "ServiceEnableTagOverride": false,
    "CreateIndex": 7,
    "ModifyIndex": 7
  }
]

Registering a service definition with the /agent/service/register endpoint registers the local node as the service provider. The local Consul agent on that node is responsible for running any health checks registered for the service and updating the catalog accordingly.

In our sample web service, we defined one health check with the following configuration:

"check": {
  "name": "ping check",
  "args": ["ping", "-c1", "www.google.com"],
  "interval": "30s",
  "status": "passing"
}

This health check verifies that our web service can reach the public internet by pinging www.google.com every 30 seconds. For a real web service, you would configure a more meaningful health check. Consul provides several kinds of health checks, including Script, HTTP, TCP, Time to Live (TTL), Docker, and gRPC.
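As an example of more useful checks for a real web service, the sketch below writes a variant of web.json with an HTTP check and a TCP check in a checks array. The /health path and the 10s interval and 1s timeout are assumptions to adjust for your service; neither check type runs a script, so neither depends on -enable-script-checks. write_web_definition_with_checks is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a web service definition with HTTP and TCP checks
# instead of the ping script check. The /health path is an assumed endpoint.
write_web_definition_with_checks() {
  local out="$1"
  cat > "$out" <<'EOF'
{
  "id": "web1",
  "name": "web",
  "port": 80,
  "checks": [
    {
      "name": "http check",
      "http": "http://localhost:80/health",
      "interval": "10s",
      "timeout": "1s"
    },
    {
      "name": "tcp check",
      "tcp": "localhost:80",
      "interval": "10s",
      "timeout": "1s"
    }
  ]
}
EOF
}

# Example (not run here):
#   write_web_definition_with_checks web-checks.json
#   curl --request PUT --data @web-checks.json localhost:8500/v1/agent/service/register
```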

To inspect all the health checks registered with the local agent, use the /agent/checks endpoint:

$ curl localhost:8500/v1/agent/checks

{
  "service:web1": {
    "Node": "web",
    "CheckID": "service:web1",
    "Name": "ping check",
    "Status": "passing",
    "Notes": "",
    "Output": "PING www.google.com (172.217.3.164): 56 data bytes\n64 bytes from 172.217.3.164: icmp_seq=0 ttl=52 time=21.902 ms\n\n--- www.google.com ping statistics ---\n1 packets transmitted, 1 packets received, 0.0% packet loss\nround-trip min/avg/max/stddev = 21.902/21.902/21.902/0.000 ms\n",
    "ServiceID": "web1",
    "ServiceName": "web",
    "ServiceTags": [
      "rails"
    ],
    "Definition": {},
    "CreateIndex": 0,
    "ModifyIndex": 0
  }
}

The health of individual services can be queried with /health/service/:service, and the health of nodes with /health/node/:node.
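Both health queries can be issued with curl. In this sketch (function names hypothetical), adding the passing query parameter to the service query asks Consul to return only instances whose checks are all passing, which is usually the filter a service discovery client wants.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the two health endpoints mentioned above.
# Assumes CONSUL_HTTP_ADDR, if set, is a plain host:port (no scheme).

# usage: service_health web [passing]
service_health() {
  local service="$1" filter="${2:-}"
  local addr="${CONSUL_HTTP_ADDR:-127.0.0.1:8500}"
  if [ "$filter" = "passing" ]; then
    # ?passing: only return instances whose checks are all passing.
    curl --silent "http://$addr/v1/health/service/$service?passing"
  else
    curl --silent "http://$addr/v1/health/service/$service"
  fi
}

# usage: node_health web
node_health() {
  local addr="${CONSUL_HTTP_ADDR:-127.0.0.1:8500}"
  curl --silent "http://$addr/v1/health/node/$1"
}
```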

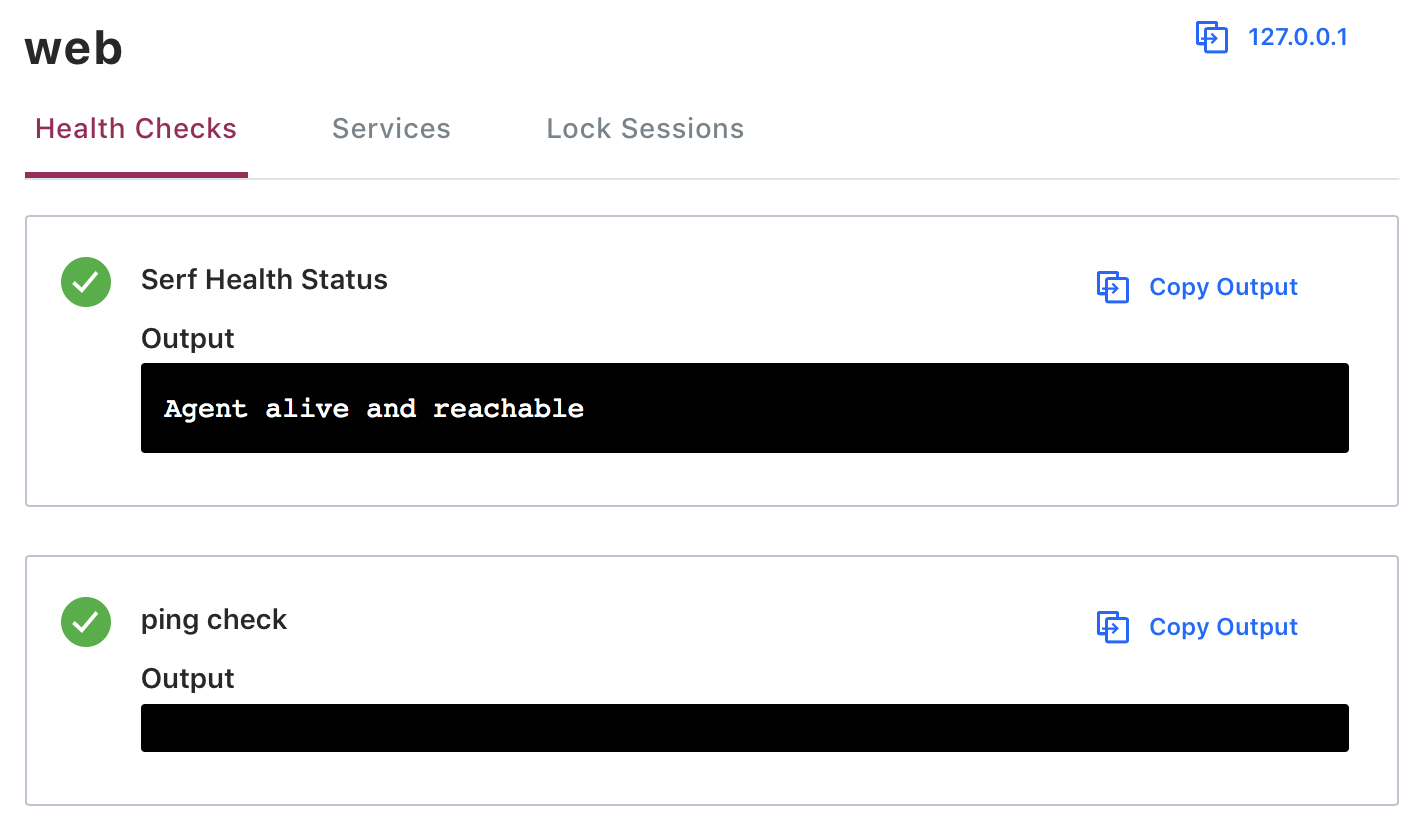

Health checks are also viewable in the Consul UI:

»External service registration and health checks

In the context of Consul, external services are those provided by nodes where the Consul agent cannot be run. These nodes might be inside your infrastructure (e.g. a mainframe, a virtual appliance, or an unsupported platform) or outside of it (e.g. a SaaS platform).

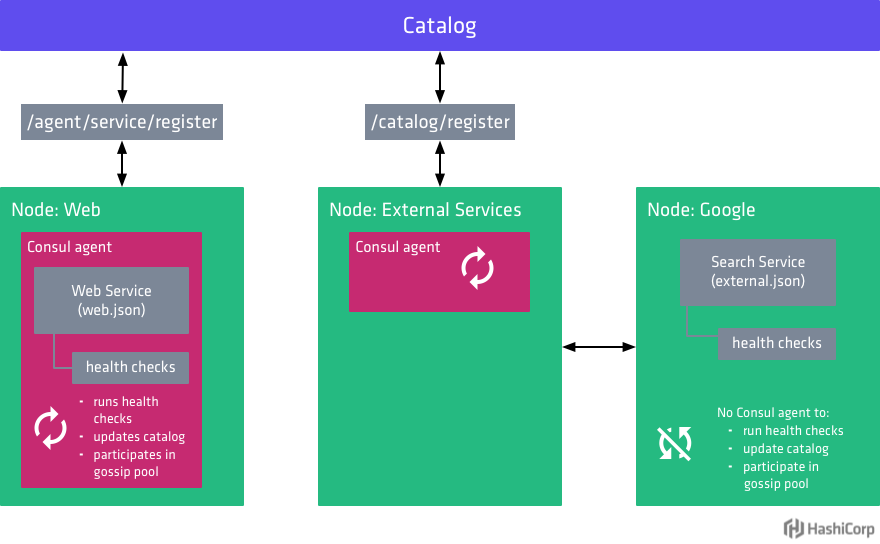

Because external services, by definition, run on nodes where no Consul agent is running, they cannot be registered with a local agent. Instead, they must be registered directly with the catalog using the /catalog/register endpoint. This endpoint works in the context of a node rather than a single service: a call to /catalog/register registers an entire node, whereas the /agent/service/register endpoint we used in our first example registers individual services in the context of the local node.

The configuration for an external service registered directly with the catalog differs slightly from that of an internal service registered via an agent:

- Node and Address are both required, since they cannot be determined automatically from the local Consul agent.

- Services and health checks are defined separately.

- If a ServiceID is provided that matches the ID of a service on that node, the check is treated as a service-level health check instead of a node-level health check.

- The Definition field can be provided with details for a TCP or HTTP health check. For more information, see the Health Checks documentation.
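To make the ServiceID rule above concrete, this sketch prints a /catalog/register payload for an external node. The helper name and argument order are hypothetical. Because the check's ServiceID matches the service's ID, Consul records it as a service-level check; deleting the "ServiceID" line would attach the same check to the node instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a /catalog/register payload for an external node.
# usage: catalog_register_payload NODE ADDRESS SERVICE_ID SERVICE PORT
catalog_register_payload() {
  local node="$1" address="$2" service_id="$3" service="$4" port="$5"
  printf '{\n'
  # Node and Address are required: there is no local agent to infer them from.
  printf '  "Node": "%s",\n' "$node"
  printf '  "Address": "%s",\n' "$address"
  printf '  "NodeMeta": {"external-node": "true", "external-probe": "true"},\n'
  printf '  "Service": {"ID": "%s", "Service": "%s", "Port": %d},\n' \
    "$service_id" "$service" "$port"
  printf '  "Checks": [{\n'
  printf '    "Name": "%s-http",\n' "$service"
  # Matching ServiceID makes this a service-level check.
  printf '    "ServiceID": "%s",\n' "$service_id"
  printf '    "Definition": {"http": "https://%s", "interval": "30s"}\n' "$address"
  printf '  }]\n'
  printf '}\n'
}
```

For example, catalog_register_payload google www.google.com search1 search 80 > search.json produces a payload close to the external.json used below, with the check explicitly tied to search1 and no initial status.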

To demonstrate how external service registration works, consider an external search service provided by Google. We register this service with the following configuration, external.json:

{
  "Node": "google",
  "Address": "www.google.com",
  "NodeMeta": {
    "external-node": "true",
    "external-probe": "true"
  },
  "Service": {
    "ID": "search1",
    "Service": "search",
    "Port": 80
  },
  "Checks": [{
    "Name": "http-check",
    "status": "passing",
    "Definition": {
      "http": "https://www.google.com",
      "interval": "30s"
    }
  }]
}

This configuration defines a node named google, available at the address www.google.com, that provides a search service with the ID search1 running on port 80. It also defines an HTTP health check to be run every 30 seconds and sets the check's initial state to passing.

Typically, external services are registered via nodes dedicated to that purpose, so we'll continue our example using the Consul dev agent (localhost) as if it were running on a different node (e.g. "External Services") from the one running our internal web service (e.g. "Web").

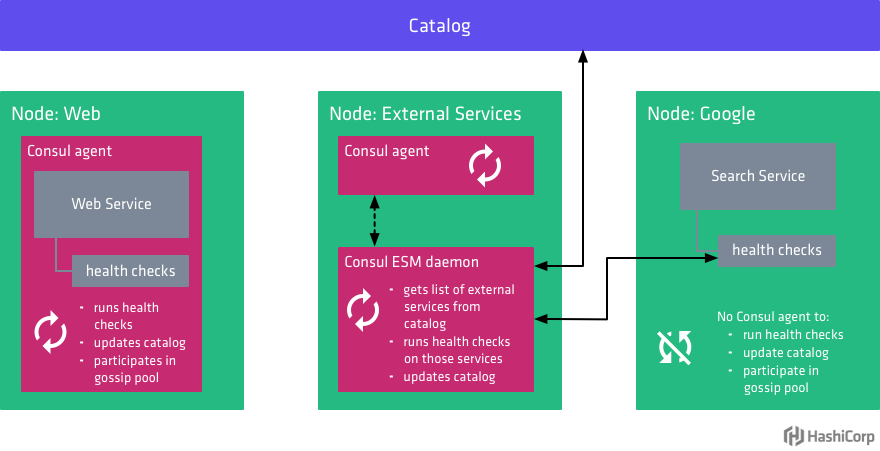

This diagram visualizes the differences between how internal and external services are registered with Consul:

Use a PUT request to register the external service:

$ curl --request PUT --data @external.json localhost:8500/v1/catalog/register

true
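If you want to reset the example later, the registration can be undone with the /catalog/deregister endpoint. In this sketch (hypothetical function name), sending only a Node removes that node along with its services and checks, while adding a ServiceID removes just that service.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove an externally registered node or service.
# usage: deregister_external NODE [SERVICE_ID]
# Assumes CONSUL_HTTP_ADDR, if set, is a plain host:port (no scheme).
deregister_external() {
  local node="$1" service_id="${2:-}"
  local addr="${CONSUL_HTTP_ADDR:-127.0.0.1:8500}" body
  if [ -n "$service_id" ]; then
    # Remove only this service from the node.
    body="$(printf '{"Node": "%s", "ServiceID": "%s"}' "$node" "$service_id")"
  else
    # Remove the node together with all of its services and checks.
    body="$(printf '{"Node": "%s"}' "$node")"
  fi
  curl --silent --request PUT --data "$body" "http://$addr/v1/catalog/deregister"
}

# Example (not run here):
#   deregister_external google search1
```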

As with internal services registered via the agent endpoint, we can verify registration of external services by querying the /catalog/service/:service endpoint:

$ curl localhost:8500/v1/catalog/service/search

[
  {
    "ID": "",
    "Node": "google",
    "Address": "www.google.com",
    "Datacenter": "dc1",
    "TaggedAddresses": null,
    "NodeMeta": {
      "external-node": "true",
      "external-probe": "true"
    },
    "ServiceID": "search1",
    "ServiceName": "search",
    "ServiceTags": [],
    "ServiceAddress": "",
    "ServiceMeta": {},
    "ServicePort": 80,
    "ServiceEnableTagOverride": false,
    "CreateIndex": 246,
    "ModifyIndex": 246
  }
]

In our internal web service example, we verified that the health check was active by querying the local agent endpoint /agent/checks. If we query it again after adding our external service, the external health check is not listed, because it was registered with the catalog rather than with the local agent. Instead, we need to query a catalog-level endpoint such as /health/service/:service, /health/node/:node, or /health/state/:state.
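For example, the /health/state/:state endpoint lists every check in a given state across the catalog. This sketch prints the IDs of all critical checks. It relies on Consul returning compact JSON (no ?pretty) and uses grep and cut only to stay dependency-free, so a JSON tool such as jq is a better choice for real scripts; critical_check_ids is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the CheckID of every check in the critical state.
# Assumes compact JSON output and a plain host:port in CONSUL_HTTP_ADDR.
critical_check_ids() {
  local addr="${CONSUL_HTTP_ADDR:-127.0.0.1:8500}"
  curl --silent "http://$addr/v1/health/state/critical" \
    | grep -o '"CheckID":"[^"]*"' \
    | cut -d'"' -f4
}
```

Running it during the outage simulated below is a quick way to see which checks Consul actually considers failed.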

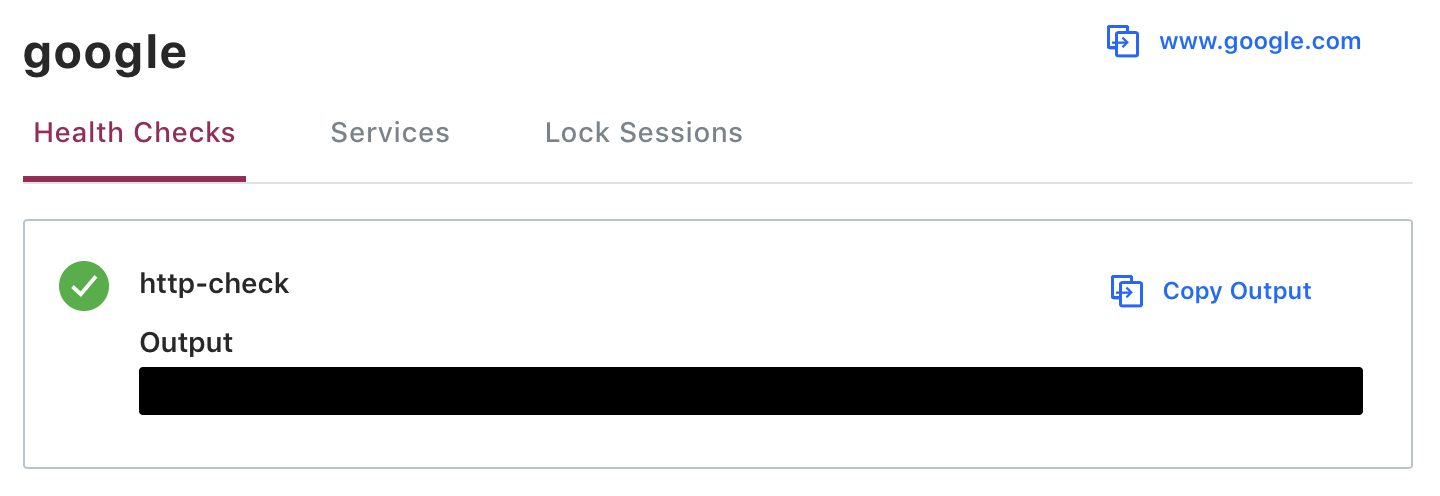

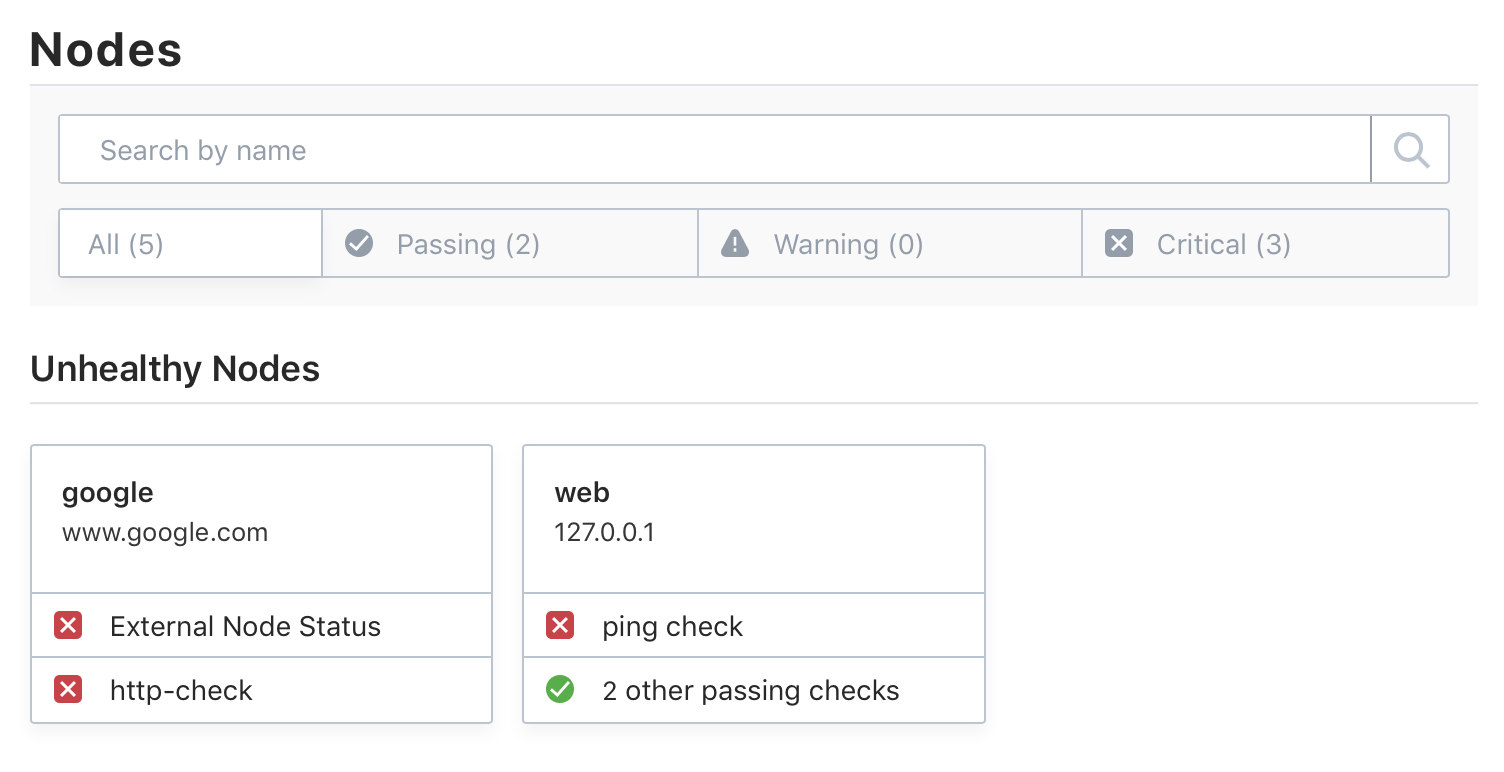

We can also see the service and its health check listed in the Consul UI:

We can see from the above that there is an entry for this health check in the catalog. However, because this node and service were registered directly with the catalog, no agent is actually running the health check, so the node's health is not monitored.

To demonstrate the difference between health checks registered directly with the catalog and those registered through a local agent, we'll check node health before and after simulating an outage.

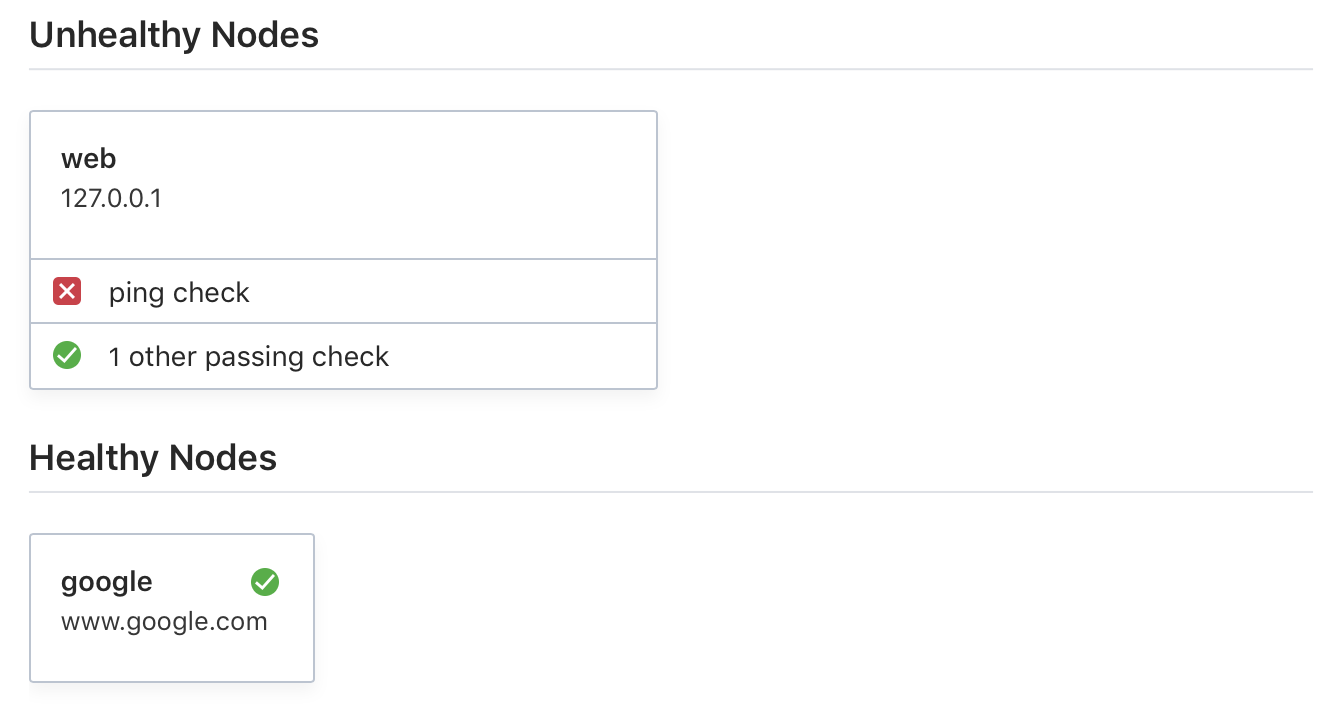

To simulate an outage, disconnect from the internet, wait a few moments, and check the Consul UI:

In the output above, the check for the internal web service is no longer passing, but the check for the external search service still shows as passing, even though it should not. Both checks should fail while we are disconnected from the internet, yet only the check for our internal web service is shown as failing.

»Pull vs. push health checking

One of the problems with traditional health checking is its use of a pull model: on a regular interval, the monitoring server asks every node whether it is healthy, and each node responds with a status. This creates a bottleneck, because traffic to the monitoring service grows with the number of nodes, congesting the local network and putting unnecessary load on the cluster.

Consul uses a push-based model in which agents send events only when a check's status changes. As a result, even large clusters generate very few requests. The drawback of edge-triggered monitoring is that there are no liveness heartbeats: in the absence of any updates, Consul does not know whether the checks are in a stable state or the server has died. Consul works around this with a gossip-based failure detector. All cluster members take part in a background gossip protocol, which places a constant load on each member regardless of cluster size. The combination of gossip and edge-triggered updates allows Consul to scale to very large clusters without being heavily loaded.
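The edge-triggered half of this design can be illustrated with a few lines of shell. This is a toy model, not Consul's implementation: the hypothetical report_transitions function reads one check result per line and emits an update only when the result differs from the previous one, so a stable check produces no traffic.

```shell
#!/usr/bin/env bash
# Toy model of edge-triggered reporting (not Consul's code): forward a check
# result only when it differs from the last result that was sent.
report_transitions() {
  local last="" status
  while read -r status; do
    if [ "$status" != "$last" ]; then
      echo "update: $status"   # stand-in for a push to the servers
      last="$status"
    fi
  done
}

# Example:
#   printf '%s\n' passing passing passing critical critical passing | report_transitions
```

Six check runs (passing, passing, passing, critical, critical, passing) produce three updates, whereas a pull-based monitor would have requested all six results.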

Because Consul's health monitoring requires a Consul agent running on the node that provides the monitored service, health checks are not performed for external services. To enable health monitoring for external services, use Consul External Service Monitor (ESM).

»Using Consul ESM to monitor external services

Consul ESM is a daemon that runs alongside Consul in order to run health checks for external nodes and update the status of those health checks in the catalog. Multiple instances of ESM can be run for availability, and ESM will perform a leader election by holding a lock in Consul. The leader will then continually watch Consul for updates to the catalog and perform health checks defined on any external nodes it discovers. This allows externally registered services and checks to access the same features as if they were registered locally on Consul agents.

The diagram from earlier is updated to show how Consul ESM works with Consul to monitor the health of external services:

Consul ESM is provided as a single binary. To install, download the release appropriate for your system and make it available in your PATH. The examples in this post use Consul ESM v0.2.0.

Open a new terminal and start Consul ESM with consul-esm.

$ consul-esm

2018/06/14 12:50:44 [INFO] Connecting to Consul on 127.0.0.1:8500...
Consul ESM running!
Datacenter: (default)
Service: "consul-esm"
Leader Key: "consul-esm/lock"
Node Reconnect Timeout: "72h0m0s"

Log data will now stream in as it occurs:

2018/06/14 12:50:44 [INFO] Waiting to obtain leadership...
2018/06/14 12:50:44 [INFO] Obtained leadership
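Because the ESM leader holds a lock in Consul, you can confirm that an instance has obtained leadership by reading the leader key printed above from the KV store. This sketch assumes ESM uses a standard Consul session lock on consul-esm/lock, in which case the KV entry carries a Session field while the lock is held; esm_lock_session is a hypothetical helper, and the grep-based extraction assumes compact JSON.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the session ID holding the ESM leader lock, or
# nothing if the key is missing or unlocked. The key name comes from the
# "Leader Key" line ESM prints at startup.
esm_lock_session() {
  local addr="${CONSUL_HTTP_ADDR:-127.0.0.1:8500}"
  curl --silent "http://$addr/v1/kv/consul-esm/lock" \
    | grep -o '"Session":"[^"]*"' \
    | cut -d'"' -f4
}
```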

If your network does not allow UDP pings, you may need to set ping_type = "socket" in a config file and launch consul-esm with that file for the examples in this post to work. If you are using macOS, you will need to run it with sudo:

$ sudo consul-esm -config-file=./consul-esm.hcl
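The config file can be as small as the single setting mentioned above. A minimal sketch that writes it to ./consul-esm.hcl, the file name used in the command above; write_esm_config is a hypothetical helper, and every other option is left at ESM's defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Consul ESM config file.
# Only ping_type is set; all other ESM options keep their defaults.
write_esm_config() {
  local out="${1:-./consul-esm.hcl}"
  cat > "$out" <<'EOF'
# Use "socket" pings for the external-probe check instead of UDP pings.
ping_type = "socket"
EOF
}

# Example (not run here):
#   write_esm_config ./consul-esm.hcl
#   sudo consul-esm -config-file=./consul-esm.hcl
```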

Included in the example external service definition are the following NodeMeta values that enable health monitoring by Consul ESM:

- "external-node": "true" identifies the node as an external one that Consul ESM should monitor.

- "external-probe": "true" tells Consul ESM to perform regular pings to the node and maintain an externalNodeHealth check for the node (similar to the serfHealth check used by Consul agents).

Once Consul ESM is running, simulate an outage again by disconnecting from the internet. This time, the Consul UI shows the health checks for our external service updated to critical, as they should be.
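To check which nodes ESM should be monitoring, the catalog can be filtered on the external-node metadata described above. This sketch uses the node-meta query parameter of /catalog/nodes, which takes a key:value pair; external_nodes is a hypothetical helper, and the grep-based extraction again assumes compact JSON output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list nodes whose metadata marks them as external.
# Assumes compact JSON output and a plain host:port in CONSUL_HTTP_ADDR.
external_nodes() {
  local addr="${CONSUL_HTTP_ADDR:-127.0.0.1:8500}"
  curl --silent "http://$addr/v1/catalog/nodes?node-meta=external-node:true" \
    | grep -o '"Node":"[^"]*"' \
    | cut -d'"' -f4
}
```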

Consul ESM supports HTTP and TCP health checks. Ping health checks are added automatically with "external-probe": "true".
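For services without a usable HTTP endpoint, a TCP check works the same way. This sketch writes a variant of external.json, under the assumed file name search-tcp.json, whose check Definition uses a tcp field with a host:port target instead of http; write_search_tcp_definition is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an external registration with a TCP check that
# Consul ESM can run. The "tcp" target is host:port.
write_search_tcp_definition() {
  local out="${1:-search-tcp.json}"
  cat > "$out" <<'EOF'
{
  "Node": "google",
  "Address": "www.google.com",
  "NodeMeta": {
    "external-node": "true",
    "external-probe": "true"
  },
  "Service": {
    "ID": "search1",
    "Service": "search",
    "Port": 80
  },
  "Checks": [{
    "Name": "tcp-check",
    "ServiceID": "search1",
    "Definition": {
      "tcp": "www.google.com:80",
      "interval": "30s"
    }
  }]
}
EOF
}

# Example (not run here):
#   write_search_tcp_definition search-tcp.json
#   curl --request PUT --data @search-tcp.json localhost:8500/v1/catalog/register
```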

»Summary

Consul internal services are those provided by nodes where the Consul agent can be run directly. External services are those provided by nodes where the Consul agent cannot be run.

Internal services are registered with service definitions via the local Consul agent. The local Consul agent on the node is responsible for running any health checks registered for the service and updating the catalog accordingly. External services must be registered directly with the catalog because, by definition, they run on nodes without a Consul agent.

Both internal and external services can have health checks associated with them. Health checking in Consul uses a push-based model in which agents send events only when a status changes. Consul provides several kinds of health checks, including Script, HTTP, TCP, Time to Live (TTL), Docker, and gRPC. Because Consul's health monitoring requires a Consul agent running on the node that provides the monitored service, health checks are not performed for external services. To enable health monitoring for external services, use Consul External Service Monitor (ESM).