Nomad 0.11 includes a new way to manage external storage volumes for your stateful workloads—support for the Container Storage Interface (CSI). We’ll provide an overview of this new capability in this blog post. You can register here to watch a live demo.

Before 0.11, if you wanted to run a stateful workload like a database in Nomad, you had a few options. If you didn't have much data, you could use the ephemeral_disk block to manage the job's data. Or you could use Docker volumes or the host_volume feature to mount a volume from the host into the container. But Docker volumes and host volumes can't be migrated between hosts, and they require you to write your own orchestration for configuring the volumes.

We wanted to improve this experience for operators, but each storage provider has its own APIs and workflows. Rather than baking these directly into Nomad, we've adopted the Container Storage Interface (CSI). CSI is an industry standard that enables storage vendors to develop a plugin once and have it work across a number of container orchestration systems. CSI plugins are third-party plugins that run as Nomad jobs and can mount volumes created by your cloud provider. Nomad is aware of CSI-managed volumes during the scheduling process, enabling it to schedule your workloads based on the availability of volumes on a specific client.

Each storage provider builds its own CSI plugin, and we can support all of them in Nomad. You can launch jobs that claim storage volumes from AWS Elastic Block Store (EBS) or Elastic File System (EFS) volumes, GCP persistent disks, DigitalOcean droplet storage volumes, Ceph, vSphere, or vendor-agnostic third-party providers like Portworx. This means that the same plugins written by storage providers to support Kubernetes also support Nomad out of the box (check the full list here)!

»CSI Plugins

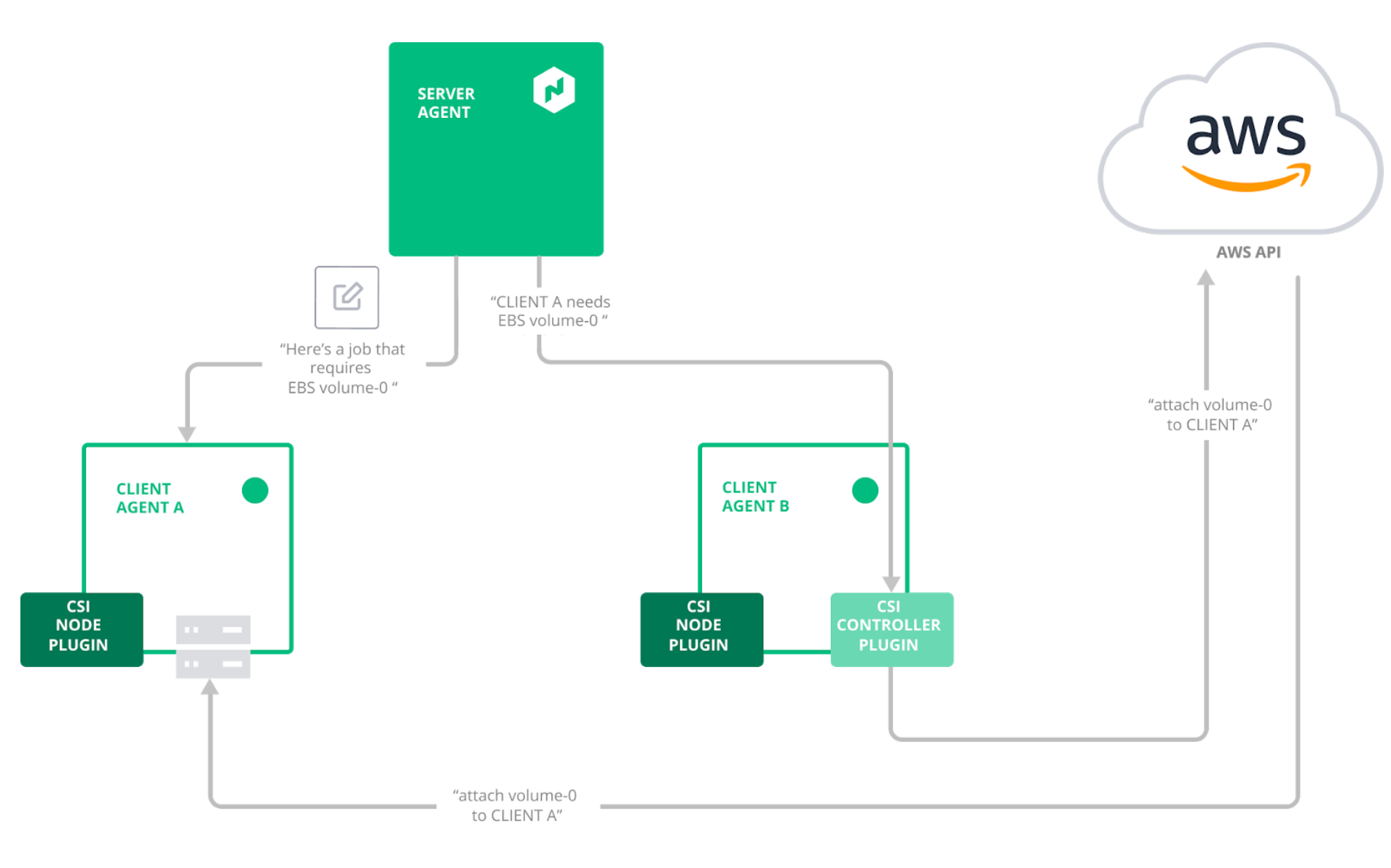

CSI Plugins run as Nomad jobs, with a new csi_plugin block to tell Nomad that the job is implementing the CSI interface. There are two types of plugins. Controller Plugins communicate with the storage provider's APIs. So for example, when you run a job that needs an AWS EBS volume, Nomad will tell the controller plugin that it needs a volume to be mounted on the client node. The controller will make the API calls to AWS to attach the EBS volume to the right EC2 instance. Node Plugins do the work on each client node, like creating bind mounts. Not every plugin provider has or needs a controller—that's specific to the provider implementation. But typically you'll run a node plugin on each Nomad client where you want to mount volumes and run one or two instances of the controller plugin.

Note that unlike Nomad task drivers and device drivers, CSI plugins are managed dynamically: you don't need to install them beforehand! We're excited to see where this model takes us towards the possibility of other dynamic plugins in the future.

In the example below, we're telling Nomad that the job exposes a plugin we want to call aws-ebs0, that it's a controller plugin, and to expect the plugin to expose its Unix domain socket at /csi inside the container.

    csi_plugin {
      id        = "aws-ebs0"
      type      = "controller"
      mount_dir = "/csi"
    }

We'll run two jobs for this plugin—a service job for the controller and a system job for the nodes so that we have one on each Nomad client. If we wanted to run the AWS EFS plugin instead, we'd look at its documentation and see it only needs a node plugin.
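As a rough sketch of the node half of that pair, a system job for the EBS plugin might look like the following. The job, group, and task names, the image tag, and the container arguments are assumptions for illustration, not taken from this post; check the plugin's own documentation for the flags your version expects. Only the csi_plugin block follows the shape shown above, with type set to node.

```hcl
# Sketch only: names, image, and args are assumptions, not from this post.
job "plugin-aws-ebs-nodes" {
  datacenters = ["dc1"]

  # A system job places one instance on every eligible Nomad client.
  type = "system"

  group "nodes" {
    task "plugin" {
      driver = "docker"

      config {
        # Assumed image name and tag; pin a specific version in practice.
        image = "amazon/aws-ebs-csi-driver:latest"

        # Assumed flags; the socket path must sit under mount_dir below.
        args = [
          "node",
          "--endpoint=unix://csi/csi.sock",
        ]

        # Node plugins mount disks on the host, which typically needs a
        # privileged container (the client must allow privileged tasks).
        privileged = true
      }

      csi_plugin {
        id        = "aws-ebs0" # same plugin ID as the controller job
        type      = "node"
        mount_dir = "/csi"
      }
    }
  }
}
```

The controller job would look much the same, with type left as the default service type and the csi_plugin type set to controller.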

»Volumes

Once you have CSI plugins running, you can register volumes from your storage provider with the new nomad volume register command. We define the volume in HCL. In the example below, we have a volume intended for use as a MySQL database storage, mapped to the external ID for an EBS volume we've created elsewhere (perhaps through Terraform).

    type            = "csi"
    id              = "mysql0"
    name            = "mysql0"
    external_id     = "vol-0b756b75620d63af5"
    access_mode     = "single-node-writer"
    attachment_mode = "file-system"
    plugin_id       = "aws-ebs0"

Every plugin has its own capabilities for access modes and attachment modes. Some plugins support multiple readers alongside a single writer, while others allow only one container to consume the volume at a time. Some plugins also support a "device mode", for when the application can handle a raw device rather than a pre-formatted file system.
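For contrast with mysql0, here is a sketch of a volume definition for a shared file system that several allocations could write to at once. The id, name, external_id, and plugin_id values are hypothetical placeholders, and whether a given plugin accepts this access mode depends on that plugin.

```hcl
# Hypothetical shared volume; every identifier below is a placeholder.
type            = "csi"
id              = "shared-assets0"
name            = "shared-assets0"
external_id     = "fs-00000000" # placeholder for the provider's own ID
access_mode     = "multi-node-multi-writer"
attachment_mode = "file-system"
plugin_id       = "aws-efs0" # assumes a plugin registered under this ID
```

A plugin that exposes raw devices would set attachment_mode to "block-device" instead. The walkthrough continues with the mysql0 definition.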

Next we register the volume:

    $ nomad volume register volume.hcl

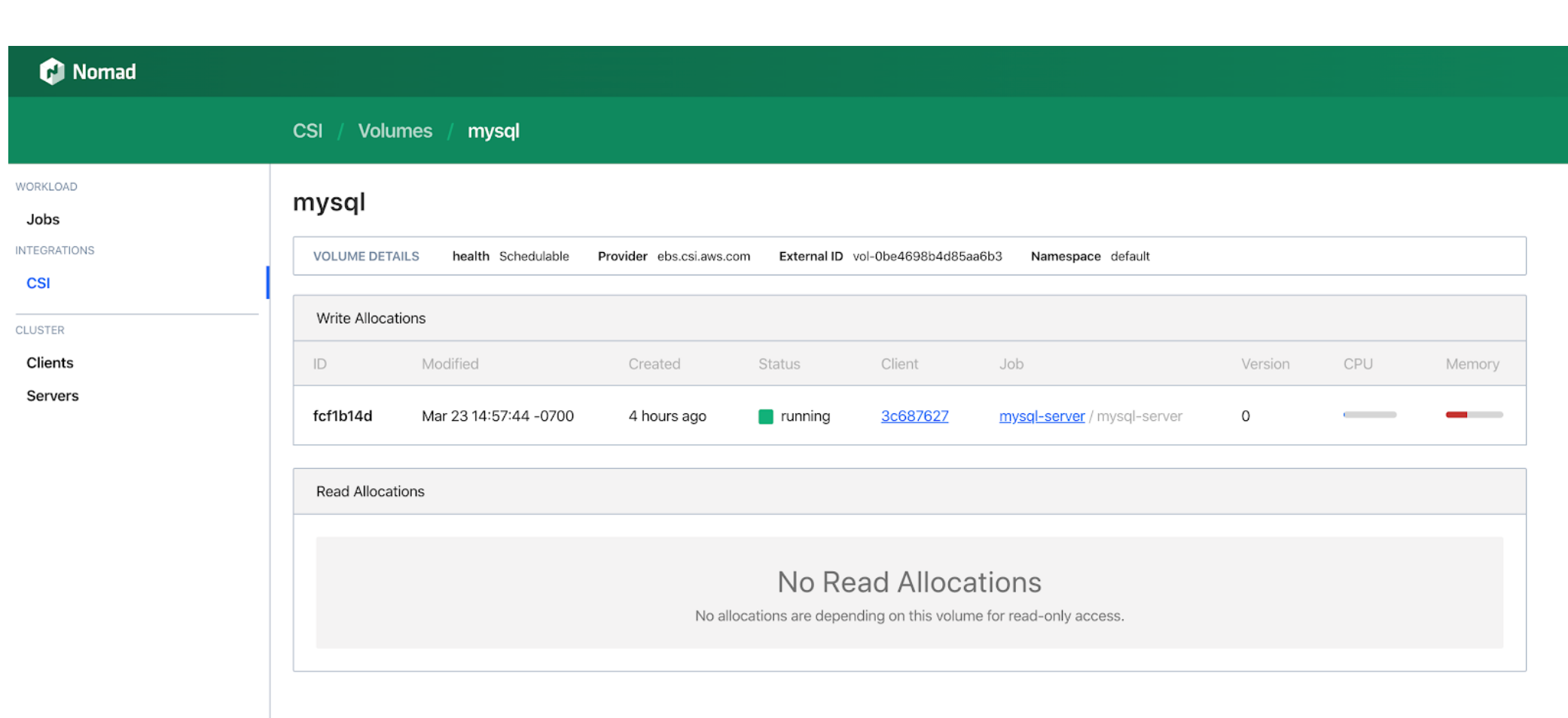

    $ nomad volume status mysql0
    ID                   = mysql0
    Name                 = mysql0
    External ID          = vol-0b756b75620d63af5
    Plugin ID            = aws-ebs0
    Provider             = ebs.csi.aws.com
    Version              = v0.6.0-dirty
    Schedulable          = true
    Controllers Healthy  = 1
    Controllers Expected = 1
    Nodes Healthy        = 2
    Nodes Expected       = 2
    Access Mode          = single-node-writer
    Attachment Mode      = file-system
    Namespace            = default

Nomad jobs can now claim this volume with the same volume and volume_mount blocks they would use for a host volume. The difference is that when we start the job, Nomad attaches the external volume to the host dynamically.

    job "mysql-server" {
      datacenters = ["dc1"]
      type        = "service"

      group "mysql-server" {
        count = 1

        # Claims the registered CSI volume "mysql0" under the local name "mysql".
        volume "mysql" {
          type      = "csi"
          read_only = false
          source    = "mysql0"
        }

        task "mysql-server" {
          driver = "docker"

          volume_mount {
            # Must match the label of the group's volume block above.
            volume      = "mysql"
            destination = "/srv"
            read_only   = false
          }

          config {
            image = "mysql:latest"
            args  = ["--datadir", "/srv/mysql"]
          }
        }
      }
    }

»Getting Started

We're releasing Nomad's Container Storage Interface integration in beta for Nomad 0.11 to get feedback from the community. We've started with well-tested support for AWS EBS and AWS EFS, and we'd like to hear what other CSI plugins you're interested in seeing. In the meantime, feel free to try it out and give us feedback in the issue tracker. To see this feature in action, please register for the upcoming live demo session here.