This Operator is now out of alpha. For current instructions, read the maintained guide: Deploy Infrastructure with the Terraform Cloud Operator for Kubernetes.

We are pleased to announce the alpha release of HashiCorp Terraform Operator for Kubernetes. The new Operator lets you define and create infrastructure as code natively in Kubernetes by making calls to Terraform Cloud.

Use cases include:

- Hardening and configuring application-related infrastructure as a Terraform module and creating it with a Kubernetes-native interface. For example, a database or queue that an application uses for data or messaging.

- Automating Terraform Cloud workspace and variable creation with a Kubernetes-native interface.

By adding the Operator to your Kubernetes namespace, you can create application-related infrastructure from a Kubernetes cluster. In this example, you will use the new Operator to deploy a message queue that an application needs before it is deployed to Kubernetes. This pattern can extend to other application infrastructure, such as DNS servers, databases, and identity and access management rules.

»Internals

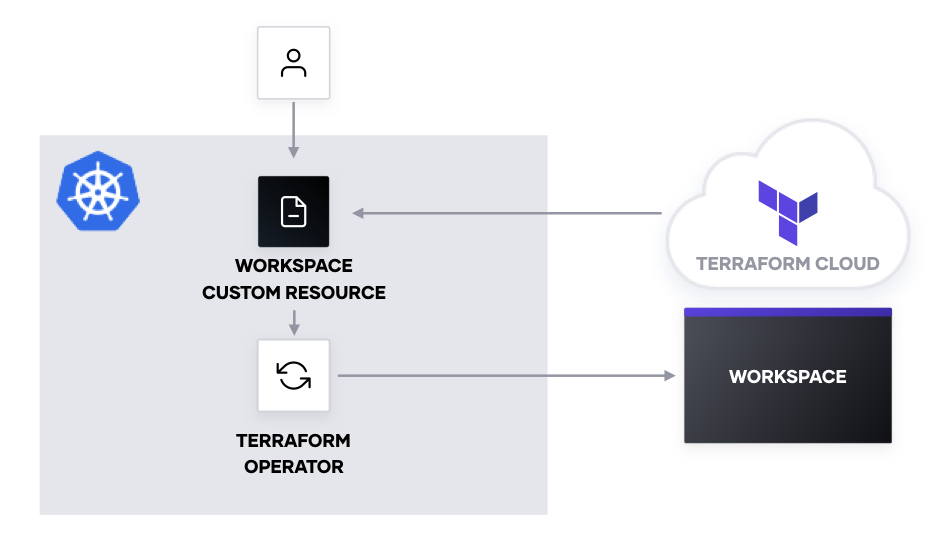

The Operator pattern extends the Kubernetes API to create and configure custom resources internal and external to the Kubernetes cluster. By using an Operator, you can capture and automate tasks to manage a set of services.

The HashiCorp Terraform Operator for Kubernetes executes a Terraform Cloud run with a module, using a custom resource in Kubernetes that defines Terraform workspaces. By using Terraform Cloud in the Operator, we leverage an existing control plane that ensures proper handling and locking of state, sequential execution of runs, and established patterns for injecting secrets and provisioning resources.

The logic for creating and updating workspaces in Terraform Cloud exists in the terraform-k8s binary. It includes the Workspace controller, which reconciles the Kubernetes Workspace custom resource with the Terraform Cloud workspace. The controller will check for changes to inline non-sensitive variables, module source, and module version. Edits to sensitive variables or variables with ConfigMap references will not trigger updates or runs in Terraform Cloud due to security concerns and ConfigMap behavior, respectively. Runs will automatically execute with terraform apply -auto-approve, in keeping with the Operator pattern.
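The change-detection rules above can be pictured as a comparison between the last reconciled specification and the new one. The Python sketch below is illustrative only: the dictionary layout follows the Workspace fields shown in this post, while `tracked_fields` and `triggers_run` are hypothetical names and not the controller's actual Go implementation.

```python
import copy


def tracked_fields(spec):
    """Return the parts of a Workspace spec that this sketch treats as run-triggering."""
    module = spec.get("module", {})
    inline_vars = {
        v["key"]: v["value"]
        for v in spec.get("variables", [])
        # Sensitive variables and ConfigMap references (no inline "value")
        # are skipped, so edits to them never change the tracked fields.
        if "value" in v and not v.get("sensitive", False)
    }
    return (module.get("source"), module.get("version"), inline_vars)


def triggers_run(old_spec, new_spec):
    """True when a tracked field differs between the two specs."""
    return tracked_fields(old_spec) != tracked_fields(new_spec)


old = {
    "module": {"source": "terraform-aws-modules/sqs/aws", "version": "2.0.0"},
    "variables": [
        {"key": "name", "value": "greetings.fifo", "sensitive": False},
        {"key": "AWS_ACCESS_KEY_ID", "sensitive": True},
    ],
}

renamed = copy.deepcopy(old)
renamed["variables"][0]["value"] = "greetings"

print(triggers_run(old, renamed))             # True: an inline, non-sensitive value changed
print(triggers_run(old, copy.deepcopy(old)))  # False: nothing tracked changed
```

Comparing only the tracked fields keeps the sketch aligned with the stated behavior: a change to a sensitive variable or a ConfigMap reference leaves the comparison unchanged.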

We package terraform-k8s into a container and deploy it namespace-scoped to a Kubernetes cluster as a Kubernetes deployment. This allows the Operator to access the Terraform Cloud API token and workspace secrets within a specific namespace. By namespace-scoping the Operator, we can isolate changes, scope secrets, and version custom resource definitions. For additional information about namespace-scoping versus cluster-scoping, see information outlined by the operator-sdk project.

Next, we create a Workspace custom resource definition (CRD) in the cluster, which defines the schema for a Terraform Cloud workspace and extends the Kubernetes API. The CRD must be deployed before we can create a Workspace custom resource in the cluster.

Finally, we apply a Workspace custom resource to build a Terraform Cloud workspace. When you create a Workspace in the Kubernetes cluster in the same namespace as the Operator, you trigger the Operator to:

- Retrieve values from the Workspace specification

- Create or update a Terraform Cloud workspace

- Create or update variables in the Terraform Cloud workspace

- Execute a run in Terraform Cloud

- Update the Workspace status in Kubernetes
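The five steps above can be sketched as a single reconcile function. This is a simplified Python illustration, not the Operator's code: `FakeTerraformCloud` is a hypothetical in-memory stand-in for the Terraform Cloud API, and the status field names are assumptions chosen to resemble the status output shown later in this post.

```python
class FakeTerraformCloud:
    """In-memory stand-in for the Terraform Cloud API (hypothetical)."""

    def __init__(self):
        self.workspaces = {}
        self.run_count = 0

    def upsert_workspace(self, organization, name):
        # Create the workspace on first use, reuse it afterwards.
        key = (organization, name)
        if key not in self.workspaces:
            self.workspaces[key] = {"id": f"ws-{len(self.workspaces) + 1}", "variables": {}}
        return self.workspaces[key]

    def start_run(self, workspace):
        # A real run is asynchronous; this fake reports it as applied at once.
        self.run_count += 1
        return {"id": f"run-{self.run_count}", "status": "applied"}


def reconcile(resource, tfc):
    # 1. Retrieve values from the Workspace specification.
    spec = resource["spec"]
    # 2. Create or update the Terraform Cloud workspace.
    workspace = tfc.upsert_workspace(spec["organization"], resource["metadata"]["name"])
    # 3. Create or update variables in the workspace (inline values only here).
    for variable in spec.get("variables", []):
        if "value" in variable:
            workspace["variables"][variable["key"]] = variable["value"]
    # 4. Execute a run.
    run = tfc.start_run(workspace)
    # 5. Update the Workspace status in Kubernetes.
    resource["status"] = {
        "workspaceID": workspace["id"],
        "runID": run["id"],
        "runStatus": run["status"],
    }
    return resource["status"]


tfc = FakeTerraformCloud()
resource = {
    "metadata": {"name": "greetings"},
    "spec": {
        "organization": "hashicorp-team-demo",
        "variables": [{"key": "name", "value": "greetings.fifo"}],
    },
}
first = reconcile(resource, tfc)
second = reconcile(resource, tfc)
print(first["workspaceID"] == second["workspaceID"])  # True: the workspace is reused
```

Running `reconcile` twice reuses the same workspace and starts a second run, which is the idempotent create-or-update behavior the list describes.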

»Authenticate with Terraform Cloud

The Operator requires an account in Terraform Cloud with a free or paid Terraform Cloud organization. After signing in to your account, obtain a Team API Token under Settings -> Teams. If you are using a paid tier, you must grant the team access to “Manage Workspaces”. If you are using a free tier, you will only see one team called “Owners” that has full access to the API.

The Team API token should be stored as a Kubernetes secret or in a secrets manager to be injected into the Operator’s deployment. For example, we save the Terraform Cloud API token to a file called credentials to use for a Kubernetes secret.

> less credentials

    credentials app.terraform.io {
      token = "REDACTED"
    }

Note that this is a broad-spectrum token. As a result, ensure that the Kubernetes cluster using this token has proper role-based access control limiting access to the secret that is storing the token, or store the secret in a secret manager with access control policies.

»Deploy the Operator

To deploy the Operator, download its Helm chart. Before installing the chart, make sure you have created a namespace and added the Terraform Cloud API token and sensitive variables as Kubernetes secrets. This example uses a file called credentials, a CLI configuration file with the API token.

> kubectl create ns preprod

> kubectl create -n preprod secret generic terraformrc --from-file=credentials

> kubectl create -n preprod secret generic workspacesecrets --from-literal=AWS_SECRET_ACCESS_KEY=${AWS_SECRET_ACCESS_KEY} --from-literal=AWS_ACCESS_KEY_ID=${AWS_ACCESS_KEY_ID}

By default, the Helm chart mounts a Kubernetes secret named terraformrc, which holds the Terraform Cloud API token, at the directory /etc/terraform. The key of the Kubernetes secret defined in the example is credentials. Sensitive variables default to the Kubernetes secret named workspacesecrets, mounted at the directory /tmp/secrets. Each key of that secret becomes the name of a file in the /tmp/secrets directory.
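To make the key-to-file mapping concrete, the following Python sketch reads a mounted secret directory into a dictionary. It is an illustration under assumptions: `load_mounted_secrets` is a hypothetical helper, the temporary directory stands in for /tmp/secrets, and the values are placeholders rather than real credentials.

```python
import tempfile
from pathlib import Path


def load_mounted_secrets(mount_path):
    """Map each file name in the mounted directory to its contents."""
    secrets = {}
    for entry in sorted(Path(mount_path).iterdir()):
        # Only regular files (or links to them) count as secret keys;
        # bookkeeping directories inside the mount are skipped.
        if entry.is_file():
            # Trimming a trailing newline is a convenience assumed by this sketch.
            secrets[entry.name] = entry.read_text().rstrip("\n")
    return secrets


# Simulate the mount: one file per secret key, as described above.
with tempfile.TemporaryDirectory() as mount:
    Path(mount, "AWS_ACCESS_KEY_ID").write_text("example-access-key-id")
    Path(mount, "AWS_SECRET_ACCESS_KEY").write_text("example-secret-access-key")
    secrets = load_mounted_secrets(mount)

print(sorted(secrets))  # ['AWS_ACCESS_KEY_ID', 'AWS_SECRET_ACCESS_KEY']
```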

Once you stage the namespace and secrets, deploy the Operator to your cluster by installing the Helm chart and enabling the workspace syncing capability.

> helm install -n preprod operator ./terraform-helm --set="global.enabled=true"

The command manually enables the Operator with the --set option. To learn more about Helm values and how to configure them with values files, see Helm documentation.

The Operator runs as a pod in the namespace.

> kubectl get -n preprod pod

    NAME                                                 READY   STATUS    RESTARTS   AGE
    operator-terraform-sync-workspace-74748fc4b9-d8brb   1/1     Running   0          10s

In addition to deploying the Operator, the Helm chart adds a Workspace custom resource definition to the cluster.

> kubectl get crds

    NAME                          CREATED AT
    workspaces.app.terraform.io   2020-02-11T15:15:06Z

»Deploy a Workspace

When applying the Workspace custom resource to a Kubernetes cluster, you must define the Terraform Cloud organization, the file path to secrets on the Operator, a Terraform module, its variables, and any outputs you would like to see in the Kubernetes status.

Copy the following snippet into a file named workspace.yml.

    # workspace.yml
    apiVersion: app.terraform.io/v1alpha1
    kind: Workspace
    metadata:
      name: greetings
    spec:
      organization: hashicorp-team-demo
      secretsMountPath: "/tmp/secrets"
      module:
        source: "terraform-aws-modules/sqs/aws"
        version: "2.0.0"
      outputs:
        - key: url
          moduleOutputName: this_sqs_queue_id
      variables:
        - key: name
          value: greetings.fifo
          sensitive: false
          environmentVariable: false
        - key: fifo_queue
          value: "true"
          sensitive: false
          environmentVariable: false
        - key: AWS_DEFAULT_REGION
          valueFrom:
            configMapKeyRef:
              name: aws-configuration
              key: region
          sensitive: false
          environmentVariable: true
        - key: AWS_ACCESS_KEY_ID
          sensitive: true
          environmentVariable: true
        - key: AWS_SECRET_ACCESS_KEY
          sensitive: true
          environmentVariable: true
        - key: CONFIRM_DESTROY
          value: "1"
          sensitive: false
          environmentVariable: true

Currently, the Operator deploys the Terraform configuration encapsulated in a module. The module source can be any publicly available remote source, such as the Terraform Registry or a public version-control repository. The Operator does not support local paths or separate *.tf files outside of the module.

For variables that must be passed to the module, ensure that the variable key in the specification matches the name of the module variable. In the example, the terraform-aws-modules/sqs/aws module expects a variable called name. The workspace specification is equivalent to the following Terraform configuration:

    module "queue" {
      source = "terraform-aws-modules/sqs/aws"
      version = "2.0.0"
      name = var.name
      fifo_queue = var.fifo_queue
    }

In addition to matching the key of the variable to the expected module variable, you must specify whether the variable is sensitive and whether it must be created as an environment variable. The value of the variable is optional and can be defined inline or with a Kubernetes ConfigMap reference. If an inline value is defined for a sensitive variable, the Operator overwrites it with the value read from the secretsMountPath.
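The precedence among inline values, ConfigMap references, and mounted secrets can be sketched in a few lines of Python. This is an illustration of the rules described above, not the Operator's code: `resolve_value` is a hypothetical helper, and the ConfigMaps and mounted secrets are passed in as plain dictionaries with placeholder values.

```python
def resolve_value(variable, config_maps, mounted_secrets):
    """Pick the value for one variable entry from a Workspace specification."""
    key = variable["key"]
    if variable.get("sensitive", False):
        # Sensitive variables take their value from the mounted secret,
        # overriding any inline value.
        return mounted_secrets[key]
    ref = variable.get("valueFrom", {}).get("configMapKeyRef")
    if ref is not None:
        # ConfigMap reference: look up the named ConfigMap and key.
        return config_maps[ref["name"]][ref["key"]]
    # Otherwise fall back to the inline value, which may be absent.
    return variable.get("value")


config_maps = {"aws-configuration": {"region": "us-east-1"}}
mounted_secrets = {"AWS_ACCESS_KEY_ID": "example-access-key-id"}

inline = {"key": "name", "value": "greetings.fifo", "sensitive": False}
from_config_map = {
    "key": "AWS_DEFAULT_REGION",
    "valueFrom": {"configMapKeyRef": {"name": "aws-configuration", "key": "region"}},
    "sensitive": False,
}
sensitive = {"key": "AWS_ACCESS_KEY_ID", "value": "ignored-inline-value", "sensitive": True}

print(resolve_value(inline, config_maps, mounted_secrets))           # greetings.fifo
print(resolve_value(from_config_map, config_maps, mounted_secrets))  # us-east-1
print(resolve_value(sensitive, config_maps, mounted_secrets))        # example-access-key-id
```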

The outputs in the workspace specification map module outputs to your Terraform Cloud outputs. In the workspace.yml snippet, the terraform-aws-modules/sqs/aws module outputs a value for this_sqs_queue_id. The example exposes it as a Terraform output with the key url. The workspace specification is equivalent to the following Terraform configuration:

    output "url" {
      value = module.queue.this_sqs_queue_id
    }
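One way to picture the mapping from a workspace specification to Terraform configuration is a small rendering function. The Python sketch below is a simplification under assumptions: `render_terraform` is a hypothetical helper, it treats every variable that is not an environment variable as a module input, and it leaves out variable declarations and backend settings.

```python
def render_terraform(spec, module_name="queue"):
    """Render a module block and output blocks from a Workspace spec (sketch)."""
    lines = [
        f'module "{module_name}" {{',
        f'  source  = "{spec["module"]["source"]}"',
        f'  version = "{spec["module"]["version"]}"',
    ]
    for variable in spec.get("variables", []):
        # Environment variables configure the run itself, so only the
        # remaining variables are passed to the module as inputs.
        if not variable.get("environmentVariable", False):
            lines.append(f'  {variable["key"]} = var.{variable["key"]}')
    lines.append("}")
    for output in spec.get("outputs", []):
        lines += [
            "",
            f'output "{output["key"]}" {{',
            f'  value = module.{module_name}.{output["moduleOutputName"]}',
            "}",
        ]
    return "\n".join(lines)


spec = {
    "module": {"source": "terraform-aws-modules/sqs/aws", "version": "2.0.0"},
    "outputs": [{"key": "url", "moduleOutputName": "this_sqs_queue_id"}],
    "variables": [
        {"key": "name", "value": "greetings.fifo", "environmentVariable": False},
        {"key": "fifo_queue", "value": "true", "environmentVariable": False},
        {"key": "CONFIRM_DESTROY", "value": "1", "environmentVariable": True},
    ],
}
rendered = render_terraform(spec)
print(rendered)
```

The printed result contains the same module and output blocks shown above, with CONFIRM_DESTROY omitted because it is an environment variable.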

Create a ConfigMap for the default AWS region.

    # configmap.yml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: aws-configuration
    data:
      region: us-east-1

Apply the ConfigMap and the Workspace to the namespace.

> kubectl apply -n preprod -f configmap.yml

> kubectl apply -n preprod -f workspace.yml

Examine the Terraform configuration uploaded to Terraform Cloud by checking the ConfigMap named after the workspace, which stores the configuration under the terraform key. The Terraform configuration includes the module’s source, version, and inputs.

> kubectl describe -n preprod configmap greetings

    # omitted for clarity
    Data
    ====
    terraform:
    ----
    terraform {
      backend "remote" {}
    }
    module "operator" {
      source = "terraform-aws-modules/sqs/aws"
      version = "2.0.0"
      name = var.name
      fifo_queue = var.fifo_queue
    }

Debug the actions of the Operator and check if the workspace creation runs into errors by checking the Operator’s logs.

> kubectl logs -n preprod $(kubectl get pods -n preprod --selector "component=sync-workspace" -o jsonpath="{.items[0].metadata.name}")

# omitted for clarity

{"level":"info","ts":1581434593.6183743,"logger":"terraform-k8s","msg":"Run incomplete","Organization":"hashicorp-team-demo","RunID":"run-xxxxxxxxxxxxxxxx","RunStatus":"applying"}

{"level":"info","ts":1581434594.3859985,"logger":"terraform-k8s","msg":"Checking outputs","Organization":"hashicorp-team-demo","WorkspaceID":"ws-xxxxxxxxxxxxxxxx","RunID":"run-xxxxxxxxxxxxxxxx"}

{"level":"info","ts":1581434594.7209284,"logger":"terraform-k8s","msg":"Updated outputs","Organization":"hashicorp-team-demo","WorkspaceID":"ws-xxxxxxxxxxxxxxxx"}
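Because the Operator emits one JSON object per log line, the output is easy to filter. The Python sketch below parses the three sample lines above and extracts the message and run status. The helper name `summarize_log` is hypothetical, and the sketch assumes only the field names visible in the sample output.

```python
import json


def summarize_log(lines):
    """Return (msg, RunStatus) pairs for every JSON log line."""
    events = []
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue  # skip blank or non-JSON lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or malformed lines
        events.append((record.get("msg"), record.get("RunStatus")))
    return events


sample = [
    '{"level":"info","ts":1581434593.6183743,"logger":"terraform-k8s","msg":"Run incomplete","Organization":"hashicorp-team-demo","RunID":"run-xxxxxxxxxxxxxxxx","RunStatus":"applying"}',
    '{"level":"info","ts":1581434594.3859985,"logger":"terraform-k8s","msg":"Checking outputs","Organization":"hashicorp-team-demo","WorkspaceID":"ws-xxxxxxxxxxxxxxxx","RunID":"run-xxxxxxxxxxxxxxxx"}',
    '{"level":"info","ts":1581434594.7209284,"logger":"terraform-k8s","msg":"Updated outputs","Organization":"hashicorp-team-demo","WorkspaceID":"ws-xxxxxxxxxxxxxxxx"}',
]
events = summarize_log(sample)
for msg, status in events:
    print(msg, status)
```

In practice, the lines would come from the `kubectl logs` command shown above rather than from a hard-coded list.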

Check the status of the workspace to determine the run status, outputs, and run identifiers.

> kubectl describe -n preprod workspace greetings

    Name:         greetings
    Namespace:    preprod
    # omitted for clarity
    Status:
      Outputs:
        Key:         url
        Value:       https://sqs.us-east-1.amazonaws.com/REDACTED/greetings.fifo
      Run ID:        run-xxxxxxxxxxxxxxxx
      Run Status:    applied
      Workspace ID:  ws-xxxxxxxxxxxxxxxx

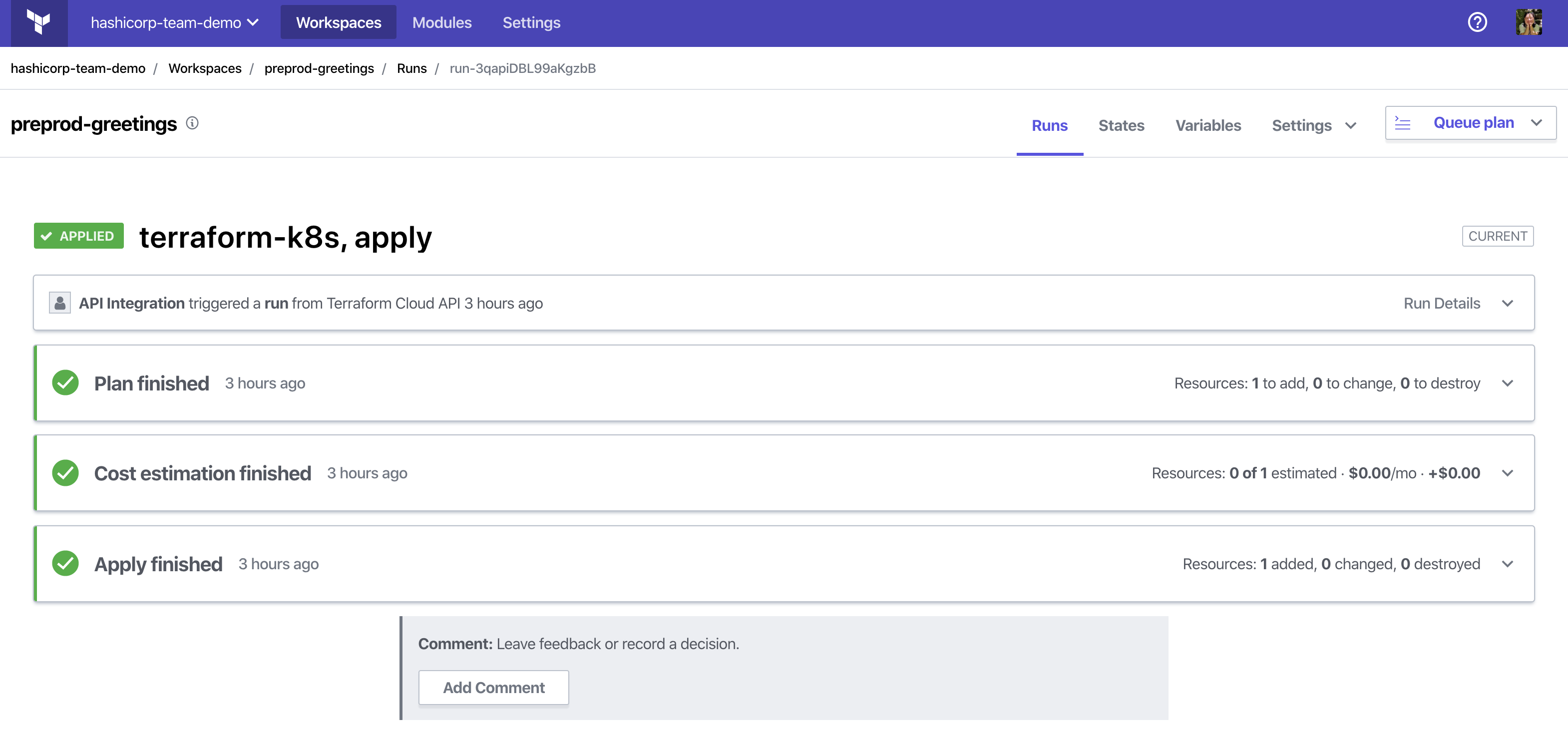

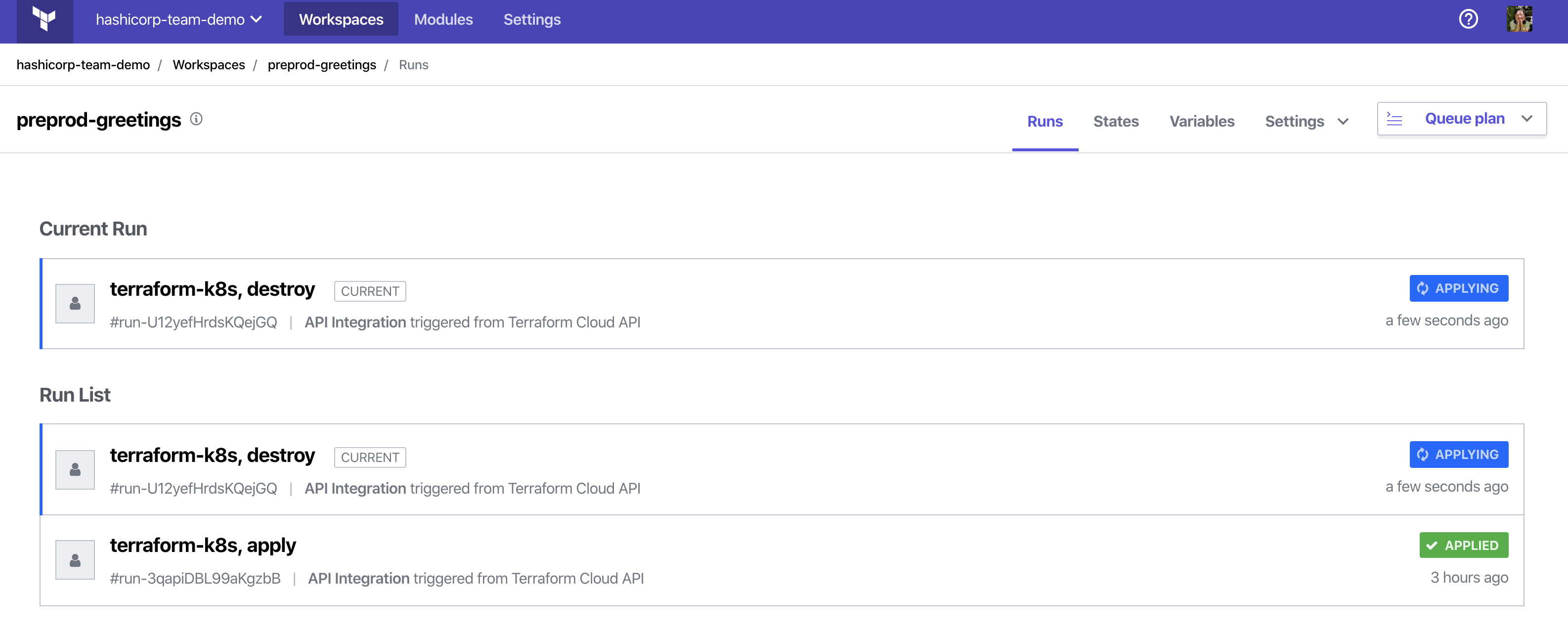

In addition to debugging with the Kubernetes interface, you can check the status of the run in the Terraform Cloud UI.

The Workspace custom resource reflects that the run was applied and updates its corresponding outputs in the status.

> kubectl describe -n preprod workspace greetings

    # omitted for clarity
    Status:
      Outputs:
        Key:         url
        Value:       https://sqs.us-east-1.amazonaws.com/REDACTED/greetings.fifo
      Run ID:        run-xxxxxxxxxxxxxxxx
      Run Status:    applied
      Workspace ID:  ws-xxxxxxxxxxxxxxxx

In addition to the workspace status, the output configuration can be consumed by the application from a ConfigMap with data for the Terraform outputs.

> kubectl describe -n preprod configmap greetings-outputs

    Name:         greetings-outputs
    Namespace:    preprod
    Labels:       <none>
    Annotations:  <none>

    Data
    ====
    url:
    ----
    https://sqs.us-east-1.amazonaws.com/REDACTED/greetings.fifo

Now that the queue has been deployed, the application can send and receive messages on the queue. The queue URL stored in the ConfigMap can be exposed to the application as an environment variable. Save the following job definition in a file called application.yaml to run a test that sends and receives messages on the queue.

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: greetings
      labels:
        app: greetings
    spec:
      template:
        metadata:
          labels:
            app: greetings
        spec:
          restartPolicy: Never
          containers:
            - name: greetings
              image: joatmon08/aws-sqs-test
              command: ["./message.sh"]
              env:
                - name: QUEUE_URL
                  valueFrom:
                    configMapKeyRef:
                      name: greetings-outputs
                      key: url
                - name: AWS_DEFAULT_REGION
                  valueFrom:
                    configMapKeyRef:
                      name: aws-configuration
                      key: region
              volumeMounts:
                - name: sensitivevars
                  mountPath: "/tmp/secrets"
                  readOnly: true
          volumes:
            - name: sensitivevars
              secret:
                secretName: workspacesecrets

This example reuses the AWS credentials that the Operator used to deploy the queue, so that the job can send messages to the queue and read them back. Deploy the job and examine the logs from the pod associated with the job.

> kubectl apply -n preprod -f application.yaml

> kubectl logs -n preprod $(kubectl get pods -n preprod --selector "app=greetings" -o jsonpath="{.items[0].metadata.name}")

    sending a message to queue https://sqs.us-east-1.amazonaws.com/REDACTED/greetings.fifo
    {
        "MD5OfMessageBody": "fc3ff98e8c6a0d3087d515c0473f8677"
    }
    reading a message from queue https://sqs.us-east-1.amazonaws.com/REDACTED/greetings.fifo
    {
        "Messages": [
            {
                "Body": "test"
            }
        ]
    }

»Update a Workspace

Applying changes to inline, non-sensitive variables and module source and version in the Kubernetes Workspace custom resource will trigger a new run in the Terraform Cloud workspace. However, as previously mentioned, edits to sensitive variables or variables with ConfigMap references will not trigger updates or runs in Terraform Cloud.

Update the workspace.yml file by changing the name of the queue and switching its type from FIFO to standard.

    # workspace.yml
    apiVersion: app.terraform.io/v1alpha1
    kind: Workspace
    metadata:
      name: greetings
    spec:
      # omitted for clarity
      variables:
        - key: name
          value: greetings
        - key: fifo_queue
          value: "false"
        # omitted for clarity

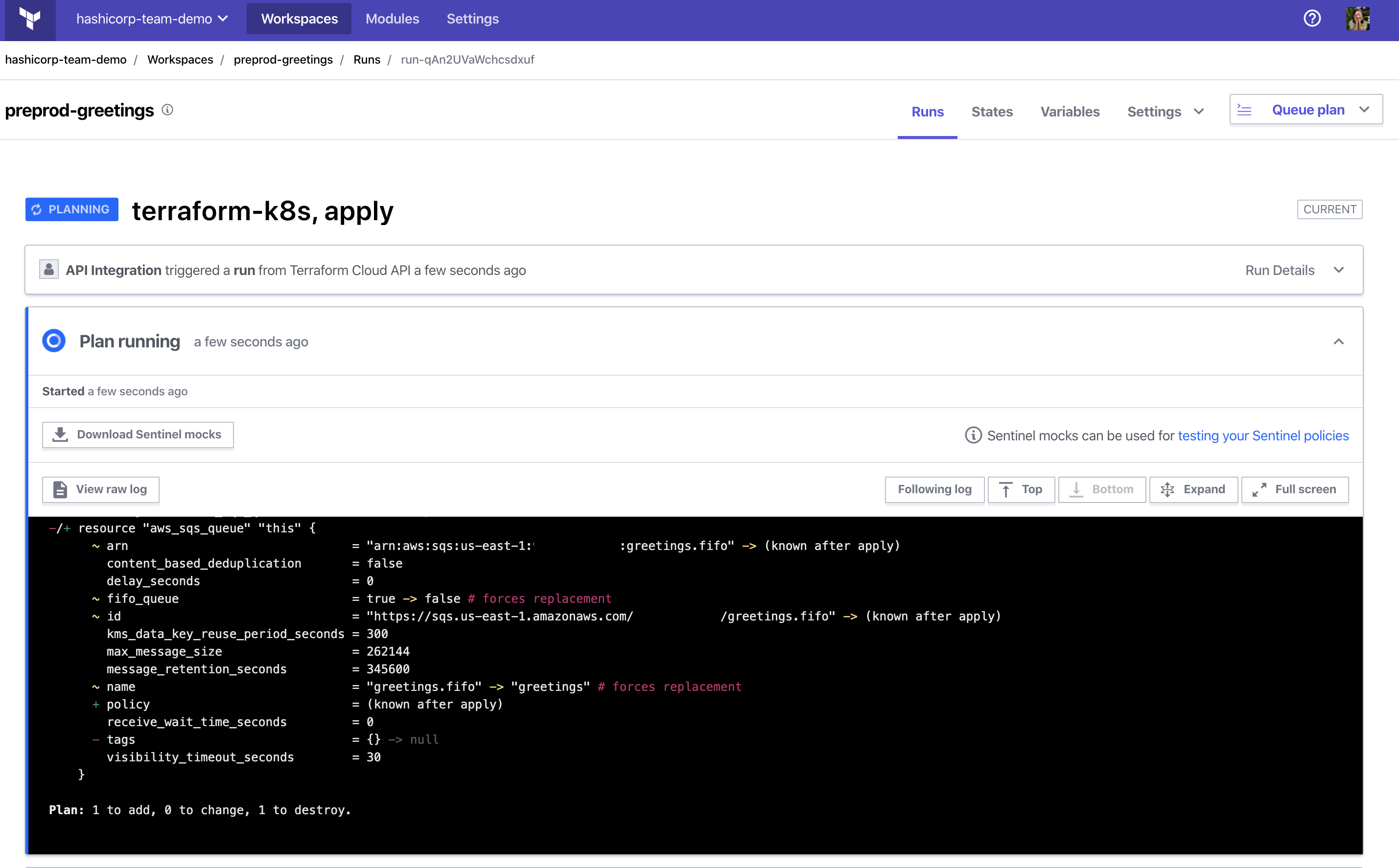

Next, apply the updated workspace configuration. The Terraform Operator retrieves the configuration update, pushes it to Terraform Cloud, and executes a run.

> kubectl apply -n preprod -f workspace.yml

Examine the run for the workspace in the Terraform Cloud UI. The plan indicates a replacement of the queue.

Updates to the workspace from the Operator can be audited through Terraform Cloud, as it maintains a history of runs and the current state.

»Delete a Workspace

When deleting the Workspace custom resource, you may notice that the command hangs for a few minutes.

> kubectl delete -n preprod workspace greetings

workspace.app.terraform.io "greetings" deleted

The command hangs because the Operator executes a finalizer, a pre-delete hook. It executes a terraform destroy on workspace resources and deletes the workspace in Terraform Cloud.

Once the finalizer completes, Kubernetes will delete the Workspace custom resource.
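The finalizer flow can be sketched as follows. This Python illustration makes several assumptions: the finalizer name is invented, `FakeTerraformCloud` is a hypothetical stand-in that only records calls, and the resource is a plain dictionary rather than a live Kubernetes object.

```python
FINALIZER = "example.com/terraform-workspace"  # hypothetical finalizer name


class FakeTerraformCloud:
    """Records the calls a real client would send to Terraform Cloud (hypothetical)."""

    def __init__(self):
        self.calls = []

    def destroy(self, name):
        self.calls.append(("destroy", name))

    def delete_workspace(self, name):
        self.calls.append(("delete_workspace", name))


def handle_deletion(resource, tfc):
    """Run the pre-delete steps; return True once the resource may be removed."""
    meta = resource["metadata"]
    if "deletionTimestamp" not in meta:
        return False  # the resource is not being deleted
    finalizers = meta.setdefault("finalizers", [])
    if FINALIZER in finalizers:
        tfc.destroy(meta["name"])           # destroy the workspace's resources
        tfc.delete_workspace(meta["name"])  # then delete the workspace itself
        finalizers.remove(FINALIZER)        # unblock deletion of the custom resource
    return not finalizers


tfc = FakeTerraformCloud()
resource = {
    "metadata": {
        "name": "greetings",
        "finalizers": [FINALIZER],
        "deletionTimestamp": "placeholder-timestamp",
    }
}
removable = handle_deletion(resource, tfc)
print(removable)  # True: cleanup ran and the finalizer list is empty
```

The delete command appears to hang while the destroy and workspace deletion run, because Kubernetes waits for the finalizer list to become empty before removing the object.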

»Conclusion

The HashiCorp Terraform Operator leverages the benefits of Terraform Cloud with a first-class Kubernetes experience. For additional details about the workspace synchronization, see our detailed documentation. A deeper dive on the Kubernetes Operator pattern can be found in Kubernetes documentation.

To deploy the Terraform Operator for Kubernetes, review the instructions on its Helm chart. To learn more about the Operator and its design, check it out at hashicorp/terraform-k8s. Additional resources for Terraform Cloud can be found at app.terraform.io.

We would love to hear your feedback and expand on the project!

Post bugs, questions, and feature requests regarding deployment of the Operator by opening an issue on hashicorp/terraform-helm!