HashiCorp Nomad 1.1 introduces several new resource control mechanisms to unlock higher efficiency and better performance, including memory oversubscription, reserved CPU cores, and improved UI visibility into resource utilization. This blog post takes a closer look at each of those new features.

Learn more: Announcing HashiCorp Nomad 1.1 Beta

»Memory Oversubscription

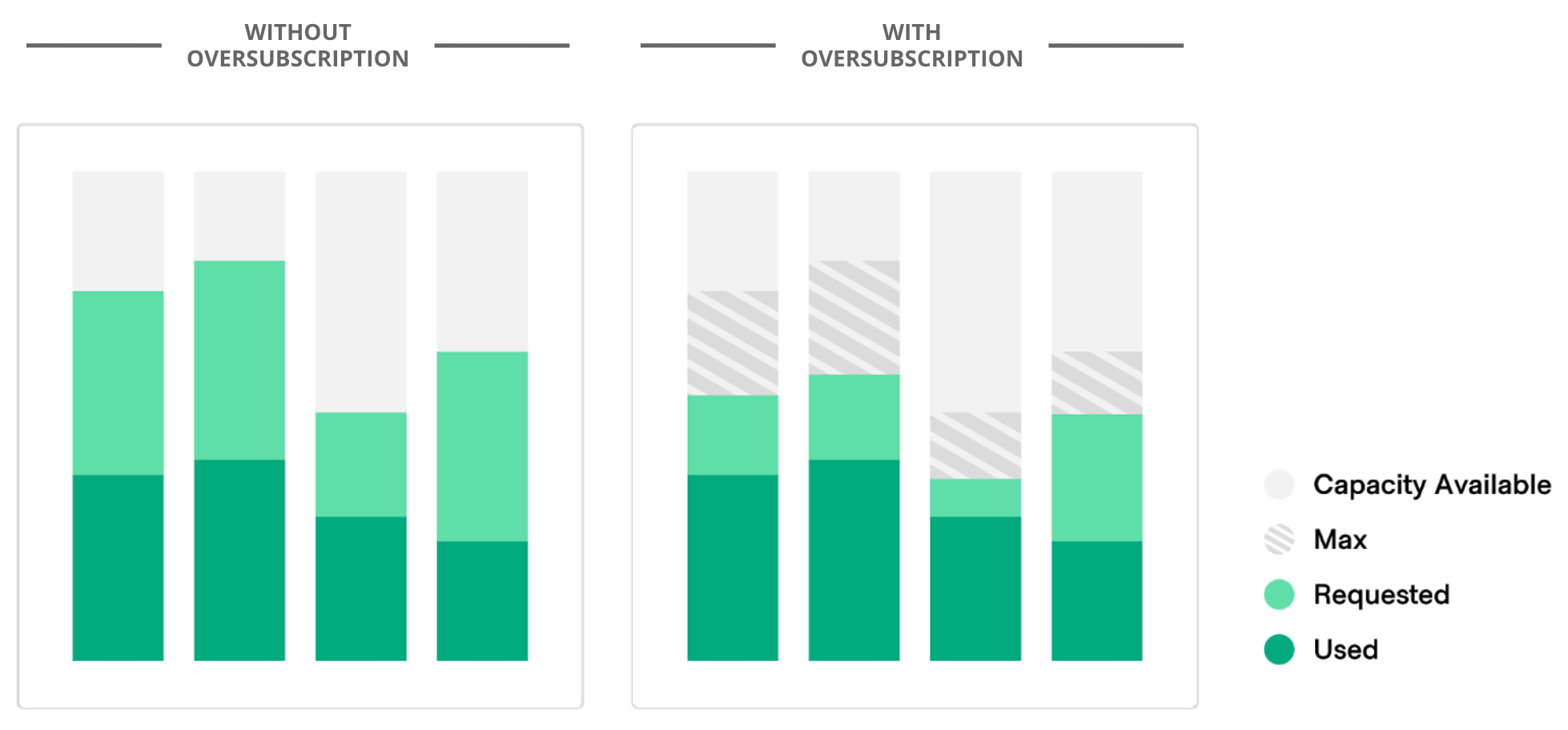

Setting task memory limits is a balancing act. Job authors must set a memory limit for each task: if it’s too low, the task may be interrupted when it unexpectedly exceeds the limit; if it’s too high, the cluster is left underutilized and resources are wasted. Job authors usually set limits based on their task's typical memory usage and then add a safety margin to handle unexpected load spikes or uncommon scenarios. As a result, in some clusters 20-40% of the cluster memory may be reserved but unused.

To help minimize reserved but unused cluster memory, Nomad 1.1 lets job authors set two separate memory limits:

- A reserve limit (memory) that represents the task’s typical memory usage. The Nomad scheduler uses this number to reserve capacity and place the task

- A max limit (memory_max), which is the maximum memory the task may use

If a client’s memory becomes contended or runs low, Nomad relies on operating system primitives to recover. On Linux, Nomad uses cgroups to reclaim memory by pushing tasks back to their reserved memory limits, and it may reschedule tasks to other clients.

The new max limit attribute is currently supported by Docker and all default Nomad drivers except raw_exec and QEMU. HashiCorp will help third-party drivers support memory oversubscription.
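As far as the Nomad agent documentation describes it, oversubscription is an opt-in scheduler setting rather than something enabled by default. The sketch below shows one way a server agent configuration might turn it on. The option name memory_oversubscription_enabled and its placement under default_scheduler_config come from the agent documentation, not from this post, so treat both as assumptions to verify against the version you run:

```hcl
# Server agent configuration (illustrative sketch, not from the original post).
server {
  enabled = true

  # Assumption: this block only seeds the scheduler configuration when the
  # cluster is first bootstrapped. An already-running cluster would be
  # changed through the scheduler configuration API instead.
  default_scheduler_config {
    memory_oversubscription_enabled = true
  }
}
```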

Enterprise operators can set resource quotas to limit the aggregate max memory limit of applications, similar to reserved memory limits.
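A quota specification that caps both limits might look roughly like the sketch below. The quota name, description, and numbers are hypothetical, and the memory_max field inside region_limit is assumed to mirror the task-level attribute, so check it against the Nomad Enterprise quota documentation before relying on it:

```hcl
# Quota specification for Nomad Enterprise (illustrative sketch).
# The name, description, and every value here are hypothetical.
name        = "web-team"
description = "Caps reserved and maximum memory for the web team's namespace"

limit {
  region = "global"

  region_limit {
    cpu        = 4000 # MHz, aggregate across all allocations
    memory     = 2048 # MB, aggregate reserved memory
    memory_max = 4096 # MB, aggregate maximum memory (assumed field name)
  }
}
```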

»Reserved Memory Example

To understand the benefit, consider a web service that typically uses 150MB of memory but has historically seen short load spikes of up to 300MB. Previously, a job author might have conservatively set the memory limit even higher than the spikes, e.g. 350MB, just to be safe. In Nomad 1.1, the task resource block can set 150MB as the reserved memory and 350MB as the maximum memory for the task. The task resource block would look like this:

resources {
  cpu        = 1000
  memory     = 150
  memory_max = 350
}

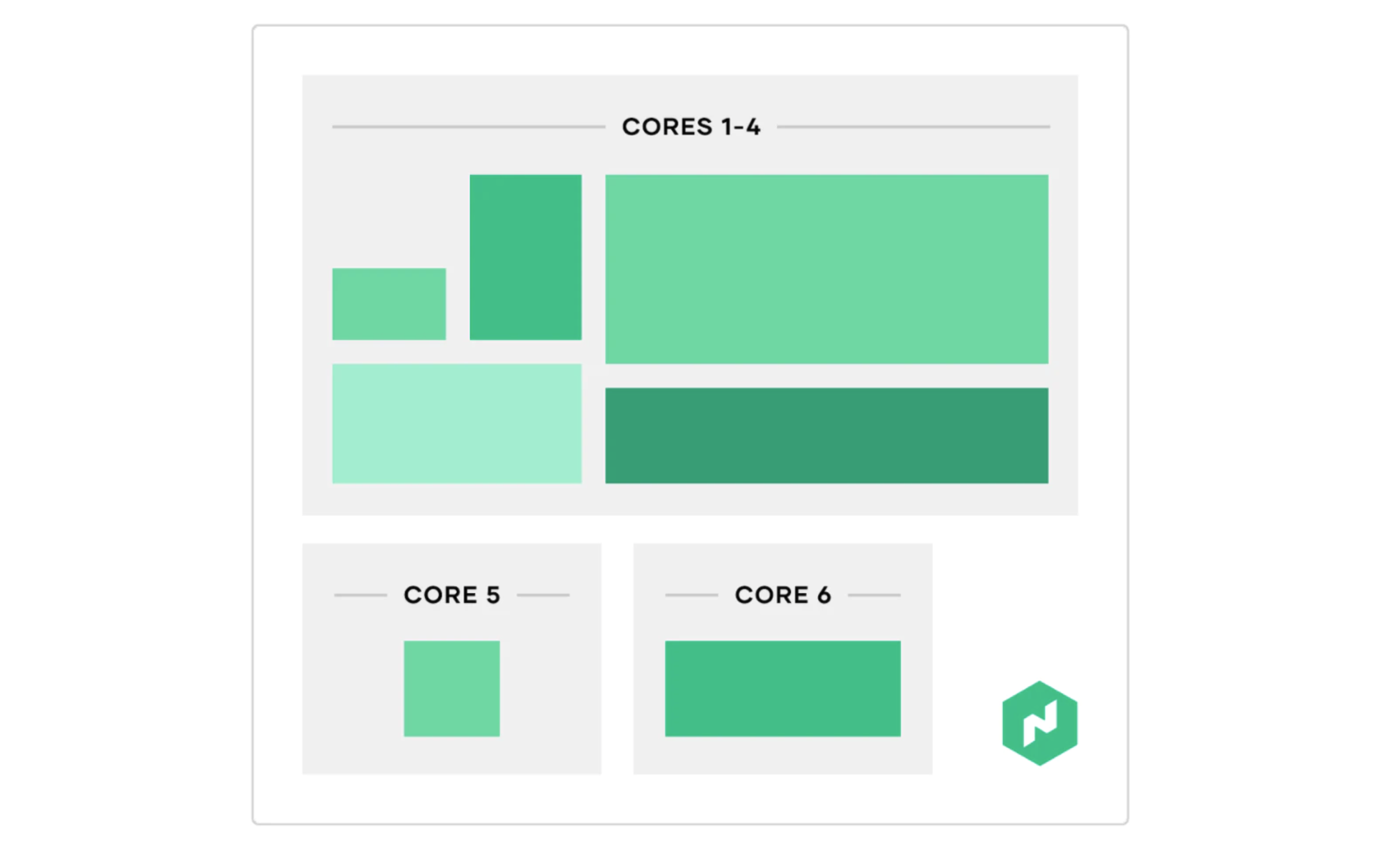
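For context, here is a minimal sketch of where that resource block sits in a complete job file. The job, group, and task names, the datacenter, and the container image are placeholders chosen for illustration. Only the three resource values come from the example above:

```hcl
# Illustrative job file; all names and the image are hypothetical.
job "web" {
  datacenters = ["dc1"]

  group "frontend" {
    task "server" {
      driver = "docker"

      config {
        image = "nginx:1.21" # placeholder image
      }

      resources {
        cpu        = 1000 # MHz
        memory     = 150  # MB reserved; the scheduler places the task using this value
        memory_max = 350  # MB ceiling the task may grow to when the client has spare memory
      }
    }
  }
}
```

With these values the scheduler accounts for 150MB when placing the task, so the 200MB of headroom is no longer set aside permanently on the client.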

»Reserved CPU Cores

Nomad is great at packing different kinds of jobs with different resource requirements onto each machine. While CPU and memory isolation mechanisms help ensure jobs don’t use more than what has been allocated for them, latency-sensitive workloads can still experience problems reserving the CPU cores they need.

On Linux machines, Nomad’s CPU isolation mechanism uses a feature called “cpu shares” to make sure jobs each get their fair share of time utilizing the CPU. For latency-sensitive tasks, users often want one or more entire CPU cores to be reserved rather than shared among multiple tasks.

Applications with both shared and reserved CPU cores

In Nomad 1.1, job authors can meet this need with a new cores resource, which is used in place of cpu to describe the number of reserved cores the task requires. It works in conjunction with the existing CPU shares system, so a Nomad client can run tasks with shared CPUs, reserved cores, or a mix of both.
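As a rough sketch of that mix, the job below places two tasks in one group, so both land on the same client: one uses shared CPU time via cpu, the other reserves whole cores via cores. All names, images, and numbers are hypothetical:

```hcl
# Illustrative job mixing shared and reserved CPU; names and images are hypothetical.
job "mixed" {
  datacenters = ["dc1"]

  group "services" {
    # Shares CPU time with other tasks through the existing cpu shares mechanism.
    task "batch-worker" {
      driver = "docker"

      config {
        image = "example/worker:1.0" # placeholder image
      }

      resources {
        cpu    = 500 # MHz
        memory = 256 # MB
      }
    }

    # Reserves two whole cores; cores is used in place of cpu, not alongside it.
    task "low-latency" {
      driver = "docker"

      config {
        image = "example/latency-sensitive:1.0" # placeholder image
      }

      resources {
        cores  = 2
        memory = 1024 # MB
      }
    }
  }
}
```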

»Reserved CPU Cores Example

Consider a game server that is latency-sensitive and requires four CPU cores. Previously, job authors had to know what machine type the task would be scheduled on (perhaps enforcing it through a constraint) in order to set the appropriate amount of CPU resource, measured in MHz. Now, with Nomad 1.1, they can specify the number of cores and Nomad will take care of finding clients that have enough room to reserve the required number of cores:

resources {
  cores  = 4
  memory = 4069
}
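For contrast, the earlier approach described above might have looked something like the following sketch. The node class, image, and the assumed 3000 MHz per-core clock speed are all hypothetical. The point is that the author had to pin the task to a known machine type and convert cores to MHz by hand:

```hcl
# Pre-1.1 style (illustrative sketch); names, node class, and clock speed are hypothetical.
job "game" {
  datacenters = ["dc1"]

  group "servers" {
    task "game-server" {
      driver = "docker"

      config {
        image = "example/game-server:1.0" # placeholder image
      }

      # Pin to a machine type whose per-core clock speed is known in advance.
      constraint {
        attribute = "${node.class}"
        value     = "hypothetical-4core-class"
      }

      resources {
        cpu    = 12000 # MHz: 4 cores x an assumed 3000 MHz per core
        memory = 4069  # MB
      }
    }
  }
}
```

Even with the constraint, the cpu value here is still enforced through shares, so it does not dedicate cores to the task the way cores = 4 does.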

»UI Improvements

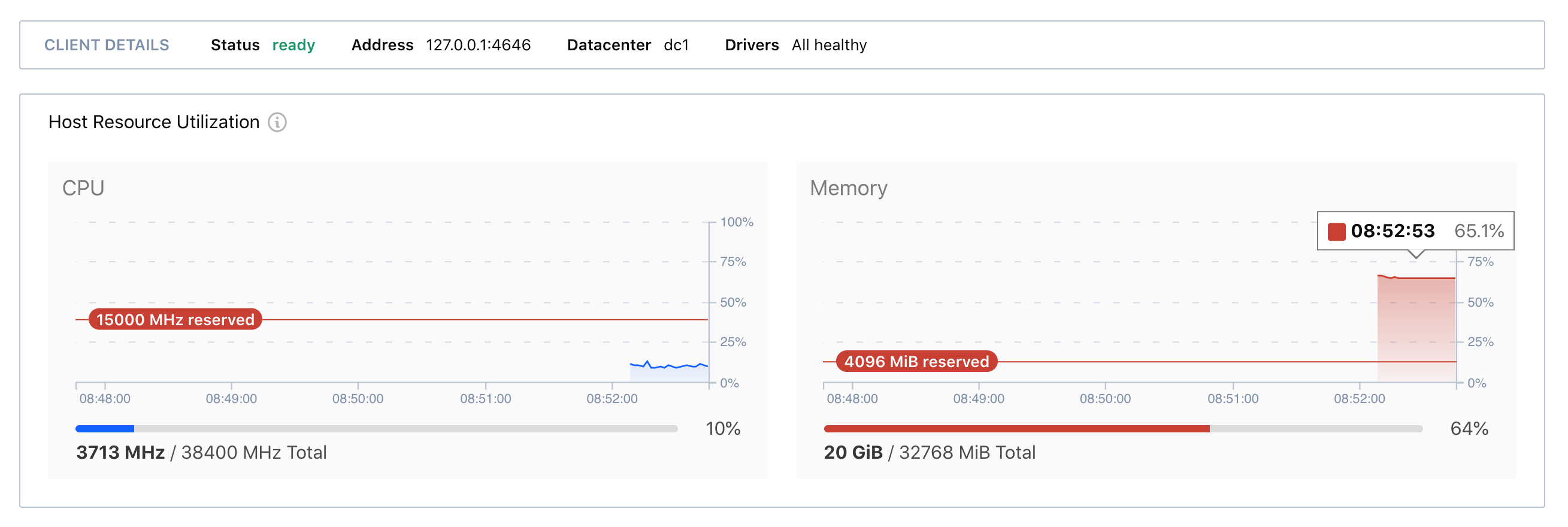

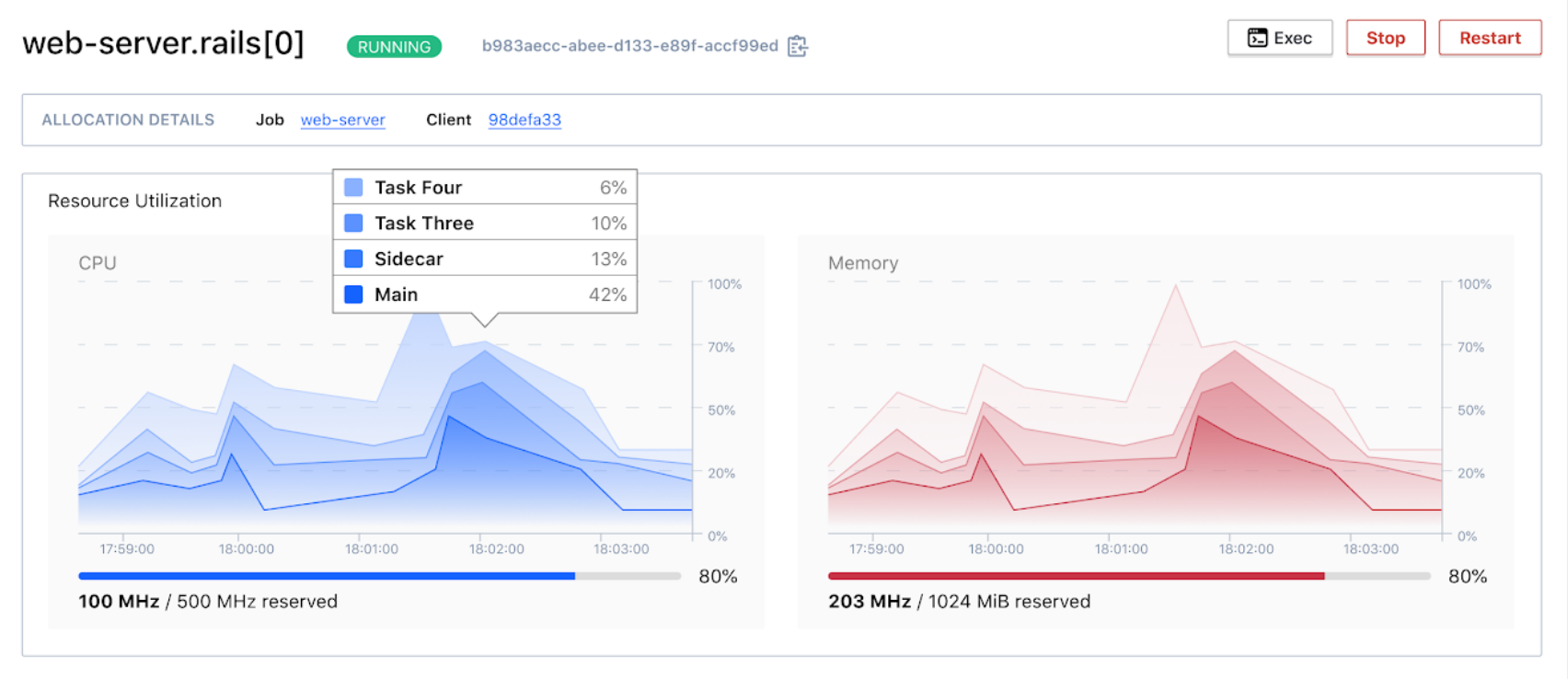

The Nomad 1.1 UI features several updates, including exposure of CPU and memory reservations and per-task breakdowns on allocation utilization charts.

»Reserved Resources

Client resource utilization charts now reflect when CPU and memory resources are reserved for non-Nomad processes. This helps highlight when resource reservations have been configured on a client node.
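Such reservations are set in the client agent configuration. A minimal sketch, with hypothetical values, might look like this:

```hcl
# Client agent configuration (illustrative sketch; values are hypothetical).
client {
  enabled = true

  # Resources held back for the operating system and other non-Nomad processes.
  reserved {
    cpu    = 500 # MHz
    memory = 512 # MB
  }
}
```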

»Allocation Per-Task Metrics

Allocation resource utilization charts now break down usage for each task. This helps operators understand how the tasks that make up an allocation are consuming resources without having to visit individual task detail pages. Reserved CPU cores are not yet exposed in the UI but work on that is forthcoming.

»Next Steps

We are excited to deliver these long-awaited features to the Nomad community. We encourage you to download the Nomad 1.1 beta release and let us know about your experience on GitHub or in our discussion forum.

To see these new features in action, register for the Nomad 1.1 introduction webinar on May 19, 2021, at 9 a.m. PT.